| Exam | Title |
|---|---|
| SPLK-1002 | Splunk Core Certified Power User |

Mastering Enterprise Data Analytics: Your Complete Journey to Becoming a Splunk Core Certified Power User

The realm of enterprise data analytics has undergone tremendous transformation in recent years, with organizations increasingly recognizing the paramount importance of leveraging sophisticated data intelligence platforms to drive strategic decision-making processes. Among the plethora of available solutions, Splunk stands as an indomitable force, revolutionizing how enterprises collect, index, search, and analyze machine-generated data from virtually any source. The Splunk Core Certified Power User credential represents a pivotal milestone in one's professional journey, demonstrating mastery over advanced searching techniques, sophisticated reporting methodologies, and comprehensive data modeling capabilities that distinguish exceptional practitioners from ordinary users.

This certification pathway transcends basic operational knowledge, delving deep into the intricate mechanisms that power modern data-driven organizations. Aspiring power users embark on a transformative educational journey that encompasses multifaceted skill development, ranging from elementary search command proficiency to sophisticated knowledge object creation and deployment. The certification process meticulously evaluates candidates' abilities to construct complex search queries, implement efficient data normalization strategies, develop reusable knowledge objects, and architect scalable data models that serve enterprise-wide analytical requirements.

Unleashing the Power of Advanced Data Intelligence and Analytics Capabilities

Contemporary organizations generate unprecedented volumes of machine data across diverse technological infrastructures, including web servers, application logs, network devices, security systems, and IoT sensors. This exponential data proliferation necessitates sophisticated analytical tools capable of processing, correlating, and extracting actionable insights from seemingly chaotic information streams. Splunk's powerful indexing engine and intuitive search language provide unparalleled capabilities for transforming raw machine data into meaningful business intelligence, enabling organizations to identify trends, detect anomalies, troubleshoot operational issues, and make informed strategic decisions.

The power user certification validates proficiency in leveraging Splunk's advanced features to address complex analytical challenges that extend far beyond simple log analysis. Certified professionals demonstrate expertise in creating sophisticated dashboards, implementing custom visualizations, developing automated alerting mechanisms, and establishing comprehensive data governance frameworks. These capabilities prove invaluable in modern enterprise environments where rapid decision-making, proactive monitoring, and predictive analytics drive competitive advantages and operational excellence.

Furthermore, the certification pathway establishes a solid foundation for pursuing advanced Splunk specializations, including administrator, architect, and consultant roles. Power users possess the fundamental knowledge necessary to understand enterprise deployment considerations, security implementation strategies, and performance optimization techniques that characterize senior-level positions within the Splunk ecosystem.

Comprehensive Skill Enhancement Through Advanced Search and Reporting Mastery

The cornerstone of power user expertise lies in mastering sophisticated searching and reporting capabilities that transform raw data into actionable intelligence. Advanced search techniques encompass a vast array of commands, operators, and functions that enable practitioners to extract precise information from massive datasets efficiently. These capabilities extend far beyond basic keyword searches, incorporating complex statistical calculations, temporal analysis, pattern recognition, and multi-dimensional data correlation techniques.

Proficient power users develop expertise in constructing elaborate search pipelines that leverage Splunk's extensive command repertoire to perform sophisticated data transformations. Each stage of a pipeline receives the results of the previous stage through the pipe character (|) and applies a specific command to filter, reshape, or summarize them, progressively refining the result set. Advanced practitioners understand the performance implications of different command combinations and optimize their searches to minimize resource consumption while maximizing analytical precision.
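As a minimal sketch of the pipeline idea, the Python below imitates two stages of a search such as `index=web sourcetype=access_combined status>=400 | stats count by status`: a filtering stage followed by a transforming stage. The index name, the sample events, and the function names are hypothetical, and this models only the data flow, not how Splunk executes searches.

```python
from collections import Counter

# Hypothetical sample events, shaped like parsed web access log entries.
EVENTS = [
    {"host": "web01", "status": 200},
    {"host": "web01", "status": 404},
    {"host": "web02", "status": 500},
    {"host": "web02", "status": 404},
    {"host": "web03", "status": 302},
]

def search_stage(events, min_status):
    """Filtering stage, analogous to the base-search term status>=400."""
    return [e for e in events if e["status"] >= min_status]

def stats_count_by(events, field):
    """Transforming stage, analogous to `| stats count by <field>`:
    one output row per distinct value, sorted here for stable output."""
    counts = Counter(e[field] for e in events)
    return [{field: value, "count": n} for value, n in sorted(counts.items())]

if __name__ == "__main__":
    for row in stats_count_by(search_stage(EVENTS, min_status=400), "status"):
        print(row)
```

Keeping each stage as a separate function mirrors how each command in a pipeline only sees the output of the command before it.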

Statistical analysis represents another critical dimension of power user capabilities, encompassing descriptive statistics, trend analysis, forecasting methodologies, and anomaly detection techniques. Practitioners learn to implement complex mathematical operations, calculate moving averages, identify seasonal patterns, and establish baseline metrics that enable proactive monitoring and alerting. These statistical capabilities prove invaluable for capacity planning, performance optimization, security threat detection, and business intelligence applications.
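The moving-average and baseline ideas can be sketched the same way. The functions below are hypothetical Python analogues of `| streamstats window=3 avg(response_ms)` (a trailing average over the current and preceding events) and of an eventstats-style outlier check against the mean plus k standard deviations; the field name, the sample values, and the choice of k=2 are assumptions for illustration.

```python
from collections import deque
from statistics import mean, stdev

def moving_average(values, window):
    """Trailing moving average: each point averages itself and up to
    window - 1 preceding points, like streamstats with a window."""
    buf = deque(maxlen=window)
    averages = []
    for v in values:
        buf.append(v)
        averages.append(sum(buf) / len(buf))
    return averages

def outliers(values, k=2.0):
    """Values more than k sample standard deviations above the mean,
    a simple statistical baseline for anomaly detection."""
    m, sd = mean(values), stdev(values)
    return [v for v in values if v > m + k * sd]

if __name__ == "__main__":
    response_ms = [10, 11, 9, 10, 12, 10, 50]  # hypothetical measurements
    print(moving_average(response_ms, window=3))
    print(outliers(response_ms))
```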

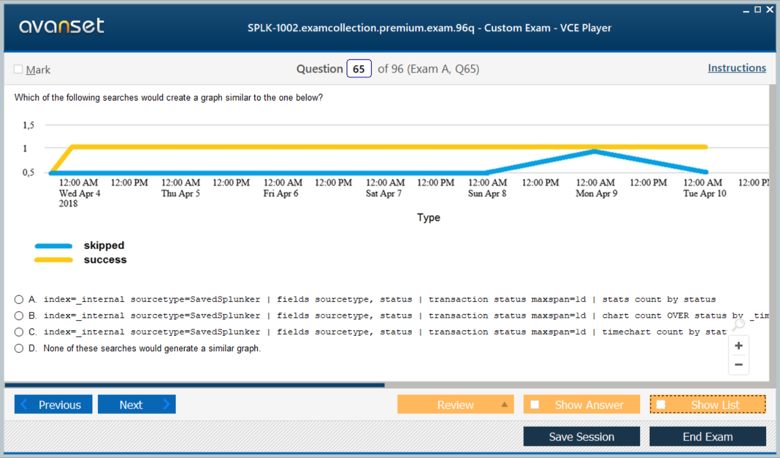

Reporting excellence requires mastery of Splunk's comprehensive visualization framework, including traditional charts, graphs, and tables, as well as advanced interactive dashboards and real-time monitoring interfaces. Power users understand how to select appropriate visualization types for different data characteristics, implement effective color schemes and layout designs, and create intuitive user experiences that facilitate rapid comprehension and decision-making.

Advanced practitioners also develop proficiency in implementing dynamic reporting solutions that automatically adapt to changing data conditions and user requirements. These solutions incorporate parameterized queries, conditional logic, and intelligent filtering mechanisms that enable users to explore data interactively without requiring technical expertise. Such capabilities democratize data access across organizations, empowering business stakeholders to derive insights independently while maintaining data integrity and security standards.

Time-based analysis constitutes another fundamental aspect of power user expertise, encompassing techniques for analyzing temporal patterns, calculating time-over-time comparisons, and implementing sophisticated scheduling mechanisms. Practitioners learn to leverage Splunk's powerful time manipulation functions to perform complex chronological analyses, identify cyclical behaviors, and establish predictive models based on historical trends.
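A time-over-time comparison starts by bucketing events into fixed spans, as `| timechart span=1d count` or `| bin _time span=1d` does, and then comparing each bucket with an earlier one. This sketch buckets epoch timestamps by UTC day and computes the day-over-day percent change. UTC is an assumption of the sketch (Splunk buckets according to the user's configured time zone), and the function names are hypothetical.

```python
from collections import Counter
from datetime import datetime, timezone

def daily_counts(timestamps):
    """Count epoch-second timestamps per UTC calendar day (the UTC choice is
    an assumption of this sketch), returned in chronological order."""
    days = Counter(datetime.fromtimestamp(ts, tz=timezone.utc).date() for ts in timestamps)
    return dict(sorted(days.items()))

def day_over_day(counts):
    """Percent change of each day relative to the previous day in `counts`."""
    days = list(counts)
    return {
        cur: 100.0 * (counts[cur] - counts[prev]) / counts[prev]
        for prev, cur in zip(days, days[1:])
    }
```

The same pattern extends to week-over-week comparisons by comparing each bucket with the one seven days earlier instead of the previous one.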

The examination itself consists of multiple-choice questions rather than live lab exercises, so hands-on practice matters as preparation: candidates who have built multi-stage searches, statistical calculations, visualizations, and reports themselves are far better placed to reason through questions about them.

Creating Powerful Workflow Actions and Event Types for Enhanced Operational Efficiency

Workflow actions connect Splunk search results to other web resources and searches. A workflow action appears in the event or field action menus of search results and comes in three types: GET actions open a URL (for example, an external lookup page), POST actions send field values to a URL, and search actions launch a secondary Splunk search. Advanced practitioners understand how to scope each action to the fields or event types it applies to, so that it appears only where it is useful.

Effective workflow actions depend on passing the right context. Field values from the selected event are inserted into the URI or search string through tokens such as `$clientip$`, which lets analysts pivot from an event into incident management platforms, configuration management databases, or internal tools without retyping values. Power users learn to choose the right action type and to construct URIs and searches that remain correct when field values contain spaces or special characters.
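Token substitution in a GET workflow action can be modeled in a few lines. The sketch assumes a hypothetical external lookup page at `https://ipinfo.example.com/lookup` and URL-encodes each field value before inserting it, which is what keeps the URI valid when values contain spaces or symbols. It illustrates the mechanism only and is not Splunk's implementation.

```python
import re
from urllib.parse import quote

# Matches tokens such as $clientip$ in a workflow action URI.
TOKEN = re.compile(r"\$(\w+)\$")

def build_workflow_uri(template, event):
    """Replace each $field$ token with the URL-encoded value of that field
    from the selected event. Returns None if a referenced field is missing,
    a simplification standing in for scoping the action to specific fields."""
    if any(name not in event for name in TOKEN.findall(template)):
        return None
    return TOKEN.sub(lambda m: quote(str(event[m.group(1)]), safe=""), template)

if __name__ == "__main__":
    template = "https://ipinfo.example.com/lookup?ip=$clientip$"  # hypothetical
    print(build_workflow_uri(template, {"clientip": "10.1.2.3"}))
```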

Event type creation represents another critical capability that enables power users to standardize data interpretation across organizational boundaries. An event type is a saved search string that categorizes the events it matches under a name, so searches can refer to eventtype=<name> instead of repeating the underlying criteria, and tags can be attached to event types to group related categories. Advanced practitioners understand how to identify appropriate candidates for event type creation, implement efficient categorization strategies, and keep definitions consistent as they are shared across apps and users.

Event type search strings cannot contain pipes or subsearches, so they express categorization through field-value pairs, Boolean operators, and wildcards rather than through conditional logic or parameters (which belong in macros). Power users develop expertise in writing flexible definitions, such as `sourcetype=access_* status=5*`, that cover several related data sources while remaining consistent and efficient. These capabilities prove particularly valuable in heterogeneous environments where multiple systems generate similar types of events with varying format specifications.
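To illustrate how event types and tags categorize events, the sketch below evaluates a few hypothetical event type definitions, each a set of field-wildcard conditions that must all match, and collects the tags attached to every matching event type. The definitions, tags, and helper name are invented for the example; case-insensitive value matching is a simplifying assumption.

```python
from fnmatch import fnmatchcase

# Hypothetical event types: field -> wildcard pattern (all must match, like
# ANDed terms in `sourcetype=access_* status=5*`), plus attached tags.
EVENTTYPES = {
    "web_server_error": {"search": {"sourcetype": "access_*", "status": "5*"},
                         "tags": ["web", "error"]},
    "failed_login": {"search": {"app": "sshd", "action": "failure"},
                     "tags": ["authentication"]},
}

def classify(event, eventtypes=EVENTTYPES):
    """Return (matching event type names, their combined tags) for one event."""
    names, tags = [], []
    for name, spec in eventtypes.items():
        if all(field in event
               and fnmatchcase(str(event[field]).lower(), pattern.lower())
               for field, pattern in spec["search"].items()):
            names.append(name)
            for tag in spec["tags"]:
                if tag not in tags:
                    tags.append(tag)
    return names, tags
```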

The certification evaluates candidates' understanding of how to create and configure these objects: choosing between GET, POST, and search workflow actions, scoping actions to the right fields, and defining and tagging event types so that related events can be found consistently.

Advanced practitioners also develop proficiency in correlating events that span multiple data sources and time periods. Commands such as transaction group related events (for example, all events sharing a session ID) into a single result, while stats-based approaches can often achieve similar grouping more efficiently. These capabilities facilitate security analysis, performance troubleshooting, and business intelligence applications that require an understanding of interdependent system behaviors and temporal relationships.
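The grouping that transaction performs can be approximated as follows. This sketch groups events by a key field, starts a new group whenever the gap between consecutive events exceeds a maximum pause, and adds `duration` and `eventcount` fields as transaction does. The real command supports many more options (such as maxspan, startswith, and endswith), and the `session_id` field is an assumption.

```python
from collections import defaultdict

def transactions(events, key, maxpause):
    """Group events sharing `key` into transactions, splitting a group when
    consecutive events are more than `maxpause` seconds apart. Events are
    dicts with an epoch `_time` field."""
    by_key = defaultdict(list)
    for e in events:
        by_key[e[key]].append(e)
    results = []
    for value, group in by_key.items():
        group.sort(key=lambda e: e["_time"])
        current = [group[0]]
        for e in group[1:]:
            if e["_time"] - current[-1]["_time"] > maxpause:
                results.append(_summarize(key, value, current))
                current = []
            current.append(e)
        results.append(_summarize(key, value, current))
    return results

def _summarize(key, value, group):
    """One result per transaction, timed from its earliest event."""
    start, end = group[0]["_time"], group[-1]["_time"]
    return {key: value, "_time": start, "duration": end - start, "eventcount": len(group)}
```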

Mastering Knowledge Objects and Data Models for Scalable Analytics Architecture

Knowledge objects represent the fundamental building blocks of sophisticated Splunk implementations, providing reusable analytical components that enable consistent data interpretation across enterprise environments. Power users develop comprehensive expertise in creating, managing, and optimizing various types of knowledge objects, including saved searches, reports, alerts, lookups, and macros. These components facilitate knowledge sharing, ensure analytical consistency, and enable scalable deployment of complex analytical solutions across distributed organizational structures.

Advanced practitioners understand the intricate relationships between different knowledge object types and leverage these dependencies to create sophisticated analytical frameworks. Saved searches serve as foundational components that encapsulate complex search logic within reusable entities, enabling consistent execution across different contexts and time periods. Power users learn to optimize saved search performance by specifying the index, source type, and other filters as early as possible, selecting the narrowest practical time range, and scheduling searches so that analytical completeness is balanced against system resource utilization.

Report development transcends simple data presentation, encompassing sophisticated analytical workflows that transform raw information into actionable intelligence. Advanced practitioners create dynamic reporting solutions that incorporate conditional formatting, interactive filtering capabilities, and drill-down mechanisms that enable users to explore data hierarchically. These reports often integrate multiple data sources, implement complex calculations, and provide contextual information that facilitates rapid comprehension and decision-making.

Alert configuration represents a critical aspect of proactive monitoring implementations, requiring a clear understanding of trigger conditions, alert actions, and throttling. An alert is a saved search that runs on a schedule or in real time, triggers when its results meet a condition (for example, more than a given number of results), and then performs actions such as sending an email or logging an event; throttling suppresses repeat triggers for a set period. Power users develop expertise in tuning these settings to minimize false positives while ensuring critical conditions receive appropriate attention, and thresholds can be derived from statistical baselines calculated over historical data.
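The interaction between a trigger condition and throttling is easy to misjudge, so here is a simplified model. Each scheduled run produces a result count; the alert fires when the count exceeds a threshold, unless it already fired within the throttle window. The threshold, the window, and the function are hypothetical, and Splunk's throttling can also be keyed on field values, which this sketch omits.

```python
def alert_fire_times(runs, threshold, throttle_secs):
    """Given (epoch_time, result_count) pairs from successive scheduled runs,
    return the times an alert would fire: the count must exceed `threshold`,
    and nothing fires within `throttle_secs` of the previous firing."""
    fired = []
    last_fired = None
    for t, count in sorted(runs):
        throttled = last_fired is not None and t - last_fired < throttle_secs
        if count > threshold and not throttled:
            fired.append(t)
            last_fired = t
    return fired

if __name__ == "__main__":
    # Hypothetical runs every 5 minutes, threshold 10, 15-minute throttle.
    runs = [(0, 2), (300, 12), (600, 15), (900, 3), (1200, 20), (1500, 30)]
    print(alert_fire_times(runs, threshold=10, throttle_secs=900))
```

In this model the run at 600 seconds meets the condition but is suppressed, which is exactly the trade-off throttling makes between noise and responsiveness.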

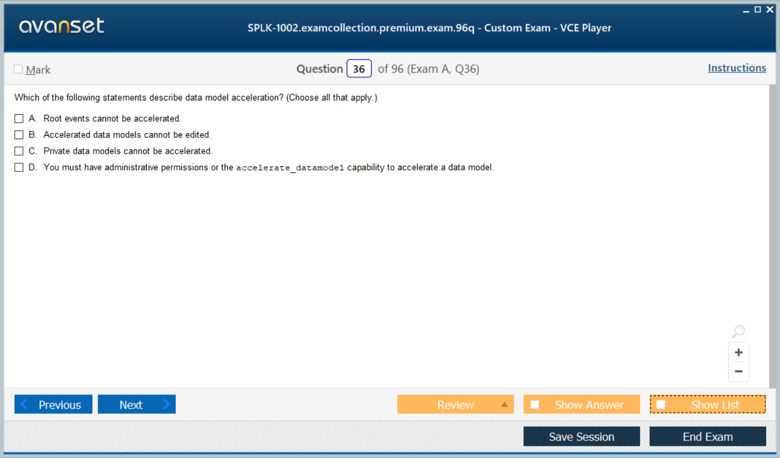

Data model creation constitutes one of the most sophisticated aspects of power user expertise, requiring an understanding of data relationships, hierarchical structures, and performance considerations. A data model is a hierarchy of datasets: root datasets define base constraints (typically an index and source type, or a base search), and child datasets inherit their parent's constraints and fields while adding constraints of their own. Advanced practitioners learn to design logical data models that abstract complex underlying data structures while giving business users an intuitive interface through Pivot, and to add calculated and lookup fields that derive meaningful metrics from raw data elements.
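Constraint inheritance is the core of the dataset hierarchy. The sketch below models a hypothetical three-level data model (Web, Web_Errors, Server_Errors) with each constraint written as a Python predicate; an event belongs to a child dataset only when it satisfies every constraint from the root down.

```python
# Hypothetical data model: each dataset has its own constraint (a predicate)
# and an optional parent whose constraints it inherits.
DATASETS = {
    "Web": {"parent": None,
            "constraint": lambda e: e.get("sourcetype") == "access_combined"},
    "Web_Errors": {"parent": "Web",
                   "constraint": lambda e: e.get("status", 0) >= 400},
    "Server_Errors": {"parent": "Web_Errors",
                      "constraint": lambda e: e.get("status", 0) >= 500},
}

def lineage(name):
    """Dataset names from the root down to `name`."""
    chain = []
    while name is not None:
        chain.append(name)
        name = DATASETS[name]["parent"]
    return list(reversed(chain))

def matches(name, event):
    """True if `event` satisfies every constraint along the lineage."""
    return all(DATASETS[n]["constraint"](event) for n in lineage(name))
```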

The certification also evaluates candidates' abilities to manage knowledge objects once they exist. This includes understanding how permissions and sharing levels (private, a single app, or all apps) determine who can see and use an object, and how objects are organized so that they stay consistent as they are shared between users and apps.

Lookup table implementation represents another critical dimension of power user capabilities, enabling enrichment of events with external reference information. Advanced practitioners understand how to create lookup table files and lookup definitions, apply lookups explicitly with the lookup command or automatically at search time, and choose between file-based (CSV) lookups, KV store lookups, and external lookups that run a script to fetch values dynamically.
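A file-based lookup behaves much like a keyed join against a small reference table. This sketch loads a hypothetical `http_status` CSV and adds a `status_description` field to matching events, loosely mirroring `| lookup http_status status OUTPUT status_description`; the lookup name and its contents are assumptions.

```python
import csv
import io

# Hypothetical CSV lookup file contents.
HTTP_STATUS_CSV = """status,status_description
200,OK
404,Not Found
500,Internal Server Error
"""

def load_lookup(text, key):
    """Index CSV rows by the key column so each match is a dict access."""
    return {row[key]: row for row in csv.DictReader(io.StringIO(text))}

def lookup(events, table, key, outputs):
    """Copy `outputs` fields from the matching row onto each event.
    Events with no matching row pass through unchanged."""
    enriched = []
    for e in events:
        row = table.get(str(e.get(key)))
        extra = {f: row[f] for f in outputs} if row else {}
        enriched.append({**e, **extra})
    return enriched
```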

Advanced Field Manipulation Techniques and Mathematical Calculations

Field aliases provide sophisticated mechanisms for standardizing field names across diverse data sources, enabling consistent analytical approaches despite underlying data structure variations. Power users develop comprehensive expertise in implementing field alias strategies that accommodate complex data integration scenarios while maintaining analytical precision and performance optimization. These capabilities prove particularly valuable in heterogeneous environments where multiple systems generate similar information using different field naming conventions.

Field aliases are deliberately simple: each alias maps one existing field name to another for a given source type, source, or host, and the original field remains available. Logic that depends on field values belongs in calculated fields instead. Practitioners learn to design aliasing conventions, often aligned with a shared standard such as the Common Information Model (CIM), that accommodate future data source additions while maintaining backward compatibility with existing searches, and to document and test those conventions so they behave reliably across deployments.
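Alias behavior fits in a few lines. The sketch assumes two hypothetical firewall source types that name the source address differently and maps both onto `src`, in the spirit of a props.conf entry such as `FIELDALIAS-src = src_ip AS src`; the original field is kept alongside the alias.

```python
# Hypothetical alias definitions keyed by source type: original -> alias.
ALIASES = {
    "vendor_a:firewall": {"src_ip": "src", "dest_ip": "dest"},
    "vendor_b:firewall": {"source_address": "src", "destination_address": "dest"},
}

def apply_aliases(event):
    """Copy each original field to its alias name for this source type;
    the original field is kept so existing searches keep working."""
    mapping = ALIASES.get(event.get("sourcetype"), {})
    out = dict(event)
    for original, alias in mapping.items():
        if original in event:
            out[alias] = event[original]
    return out
```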

Calculated fields represent powerful analytical tools that derive meaningful metrics from raw data elements at search time. A calculated field is defined by an eval expression, so it can perform mathematical operations, string manipulations, and conditional evaluations (for example with if() or case()) across multiple input fields. Because the expression is evaluated for every event a search returns, power users keep calculated field expressions simple and efficient.

The development of effective calculated fields requires deep understanding of Splunk's expression evaluation engine, including operator precedence, function availability, and performance characteristics. Advanced practitioners learn to leverage the full spectrum of available mathematical functions, statistical operators, and string manipulation capabilities to create powerful analytical tools that extend Splunk's native functionality.
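Here is a minimal sketch of calculated fields under stated assumptions: each field is a Python function standing in for an eval expression such as `eval status_class=case(status>=500, "server_error", status>=400, "client_error", true(), "ok")`, the `response_time_ms` field and both definitions are hypothetical, missing inputs produce no field, and every expression is evaluated against the original event rather than against other calculated fields, a design choice modeled on the independent evaluation of calculated fields.

```python
# Hypothetical calculated fields; each returns None when inputs are missing.
CALCULATED = {
    "response_time_s": lambda e: (
        e["response_time_ms"] / 1000 if "response_time_ms" in e else None
    ),
    "status_class": lambda e: (
        None if "status" not in e
        else "server_error" if e["status"] >= 500
        else "client_error" if e["status"] >= 400
        else "ok"
    ),
}

def apply_calculated(event):
    """Evaluate every calculated field against the original event only,
    adding a field only when its expression produces a value."""
    out = dict(event)
    for name, expression in CALCULATED.items():
        value = expression(event)
        if value is not None:
            out[name] = value
    return out
```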

Macro creation constitutes one of the most powerful techniques for encapsulating complex search logic within reusable components. Power users develop expertise in designing sophisticated macro implementations that accept parameters, implement conditional logic, and provide flexible interfaces for diverse analytical applications. These macros often serve as building blocks for larger analytical frameworks, enabling rapid development of complex searches while maintaining code consistency and reducing maintenance overhead.

Advanced macro implementations frequently incorporate argument validation and clear error reporting: a macro can include a validation expression and an error message that Splunk shows when arguments fail the check. Macros do not support default argument values; instead, practitioners define separate macros that share a base name but take different numbers of arguments (for example, a version with no arguments and a version with one). Practitioners learn to design macro interfaces that balance flexibility with usability, keeping parameter structures intuitive enough for less technical users to adopt.
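Argument substitution and overloading by argument count can be modeled like this. The sketch expands backtick calls such as `` `web_errors(web01)` `` by replacing `$host$` in the matching definition; the macro names, definitions, and the `non_empty` validation hook (standing in for a validation expression) are hypothetical.

```python
import re

# Hypothetical macros. A macro taking arguments is named with its argument
# count, e.g. web_errors(1); arguments appear as $name$ tokens.
MACROS = {
    "web_errors": {"args": [], "definition": "index=web status>=500"},
    "web_errors(1)": {"args": ["host"], "definition": "index=web status>=500 host=$host$"},
}

# A backtick-quoted call with an optional parenthesized argument list.
CALL = re.compile(r"`(\w+)(?:\(([^)]*)\))?`")

def non_empty(arg, value):
    """Hypothetical stand-in for a macro's validation expression."""
    return bool(value)

def expand(search, macros=MACROS, validate=non_empty):
    """Expand every macro call, choosing the definition by argument count
    and substituting each argument value for its $token$."""
    def replace(match):
        name, raw_args = match.group(1), match.group(2)
        values = [v.strip() for v in raw_args.split(",")] if raw_args else []
        key = f"{name}({len(values)})" if values else name
        if key not in macros:
            raise KeyError(f"no macro named {key}")
        body = macros[key]["definition"]
        for arg, value in zip(macros[key]["args"], values):
            if not validate(arg, value):
                raise ValueError(f"invalid value for {arg!r}: {value!r}")
            body = body.replace(f"${arg}$", value)
        return body
    return CALL.sub(replace, search)
```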

The certification also evaluates candidates' abilities to apply these field manipulation techniques appropriately: choosing between field aliases, calculated fields, and macros for a given requirement, and understanding how each behaves when the underlying data varies.

Data Normalization Strategies for Enterprise-Scale Implementations

Data normalization represents a critical capability that enables consistent analytical approaches across diverse data sources and formats. Power users develop comprehensive expertise in implementing sophisticated normalization strategies that standardize field names, values, and structures while preserving original data integrity and maintaining analytical precision. These capabilities prove essential in enterprise environments where multiple systems generate similar information using different formats, conventions, and structures.

Advanced normalization implementations often incorporate complex transformation rules, conditional processing logic, and dynamic adaptation mechanisms that accommodate evolving data formats and organizational requirements. Practitioners learn to design flexible normalization frameworks that can process diverse data types while maintaining optimal performance characteristics and minimizing resource consumption.
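A normalization layer often reduces to two moves: pick the first available field among several source-specific names, as eval's coalesce() does, and map vendor-specific values onto a shared vocabulary. The field names and value mappings below are hypothetical, with CIM-style values such as "allowed" and "blocked" assumed as the target vocabulary.

```python
# Hypothetical source-specific names for the same concept.
USER_FIELDS = ("user", "username", "account_name")

# Hypothetical vendor values mapped onto a CIM-style vocabulary.
ACTION_VALUES = {
    "allow": "allowed", "permit": "allowed", "accept": "allowed",
    "deny": "blocked", "drop": "blocked", "block": "blocked",
}

def coalesce(event, fields):
    """First non-null value among `fields`, like eval's coalesce()."""
    for f in fields:
        if event.get(f) is not None:
            return event[f]
    return None

def normalize(event):
    """Add a standard `user` field and rewrite `action` into the standard
    vocabulary when the value is recognized; other fields are untouched."""
    out = dict(event)
    user = coalesce(event, USER_FIELDS)
    if user is not None:
        out["user"] = user
    action = str(event.get("action", "")).lower()
    if action in ACTION_VALUES:
        out["action"] = ACTION_VALUES[action]
    return out
```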

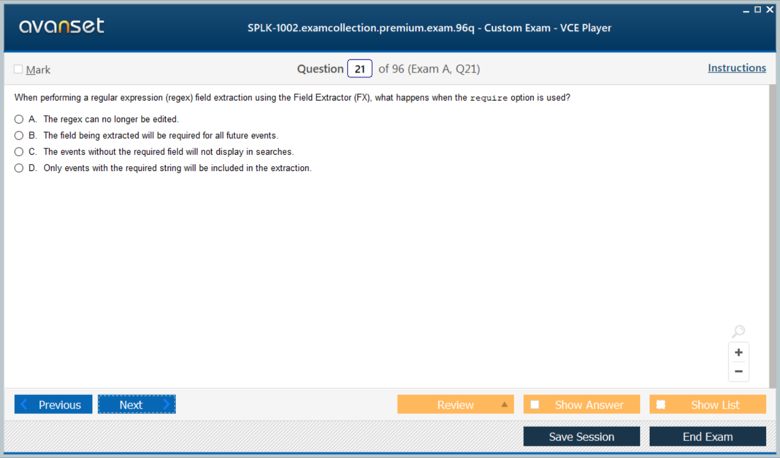

Regular expression mastery constitutes a fundamental aspect of data normalization expertise, enabling practitioners to extract structured information from unstructured text. Power users develop proficiency in writing regular expressions, whether through the field extractor or the rex command, that identify relevant data elements, validate format compliance, and handle malformed inputs gracefully. These capabilities extend beyond simple field extraction to complex data transformation scenarios that require a nuanced understanding of text processing techniques.
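Named capture groups are how a regular-expression extraction assigns field names. The sketch below shows a pattern as it might be written for rex and its Python equivalent; Python's re module spells named groups `(?P<name>...)`, whereas the PCRE syntax Splunk uses accepts `(?<name>...)`. The log format and field names are hypothetical, and the IP pattern is deliberately loose.

```python
import re

# As a rex command this might read:
#   | rex field=_raw "user=(?<user>\w+)\s+src=(?<src>\d{1,3}(?:\.\d{1,3}){3})"
PATTERN = re.compile(r"user=(?P<user>\w+)\s+src=(?P<src>\d{1,3}(?:\.\d{1,3}){3})")

def rex(raw):
    """Return the extracted fields, or an empty dict when the event does not
    match, so malformed lines are skipped instead of raising."""
    m = PATTERN.search(raw)
    return m.groupdict() if m else {}
```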

Field extraction represents another critical dimension of normalization capabilities, encompassing automatic field discovery, custom extraction rules, and performance optimization strategies. Advanced practitioners understand how to balance extraction comprehensiveness with system performance, implementing intelligent extraction strategies that maximize analytical value while minimizing computational overhead.
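The field extractor's delimiter method and Splunk's automatic discovery of key=value pairs can each be sketched as a small function. These are simplified, hypothetical analogues: real automatic extraction handles far more edge cases than the single regular expression used here.

```python
import re

# key=value pairs; quoted values may contain spaces.
KV = re.compile(r'(\w+)=("[^"]*"|\S+)')

def extract_delimited(raw, delimiter, names):
    """Split the event on `delimiter` and assign field names by position;
    extra values are ignored and missing positions are left out."""
    return {name: value.strip() for name, value in zip(names, raw.split(delimiter))}

def extract_kv(raw):
    """Discover key=value pairs, stripping surrounding double quotes."""
    return {key: value.strip('"') for key, value in KV.findall(raw)}
```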

The implementation of effective normalization strategies requires deep understanding of data quality assessment techniques, including completeness evaluation, consistency verification, and accuracy validation procedures. Power users learn to establish comprehensive data quality frameworks that monitor normalization effectiveness, identify potential issues, and implement corrective measures that maintain analytical reliability over time.

Advanced practitioners also develop expertise in creating sophisticated data enrichment mechanisms that augment normalized datasets with additional contextual information. These enrichment processes often incorporate external reference data, implement complex correlation algorithms, and establish dynamic updating procedures that ensure analytical datasets remain current and comprehensive.

Professional Development and Career Advancement Opportunities

The Splunk Core Certified Power User credential opens numerous pathways for professional advancement within the rapidly expanding data analytics industry. Certified professionals possess valuable skills that translate directly into enhanced job performance, increased earning potential, and expanded career opportunities across diverse industry sectors. Organizations increasingly recognize the strategic importance of data analytics capabilities and actively seek professionals who can leverage sophisticated analytical tools to drive business outcomes.

Information technology professionals find that power user certification provides significant competitive advantages in today's technology-driven marketplace. The credential demonstrates practical proficiency with industry-leading analytical tools while validating abilities to solve complex data challenges that characterize modern enterprise environments. Certified professionals often experience accelerated career progression, increased responsibility, and enhanced compensation packages that reflect their specialized expertise.

Career changers discover that Splunk certification provides an excellent entry point into the lucrative data analytics field. The comprehensive skill set developed through the certification process transfers readily to various analytical roles, including business intelligence analyst, data scientist, security analyst, and operations engineer positions. The growing demand for data analytics professionals creates abundant opportunities for individuals with verified Splunk expertise to transition into rewarding technology careers.

The certification also serves as a gateway to advanced Splunk specializations, including administrator, architect, and consultant credentials. Power users possess the foundational knowledge necessary to pursue these advanced certifications, which typically command premium compensation levels and provide access to specialized consulting opportunities. Many certified professionals eventually establish independent consulting practices that leverage their Splunk expertise to serve multiple clients across diverse industry sectors.

Continuing education represents a critical aspect of maintaining certification relevance and expanding professional capabilities. The rapidly evolving nature of data analytics technologies requires ongoing skill development and knowledge enhancement to remain current with emerging trends and best practices. Certified professionals often pursue additional training in related technologies, including machine learning platforms, cloud computing services, and cybersecurity tools that complement their Splunk expertise.

Professional networking opportunities abound within the active Splunk community, providing certified practitioners with access to knowledge sharing forums, industry events, and collaborative learning initiatives. These connections often lead to career opportunities, partnership possibilities, and knowledge exchange relationships that enhance professional growth and development.

Comprehensive Examination Preparation and Assessment Strategies

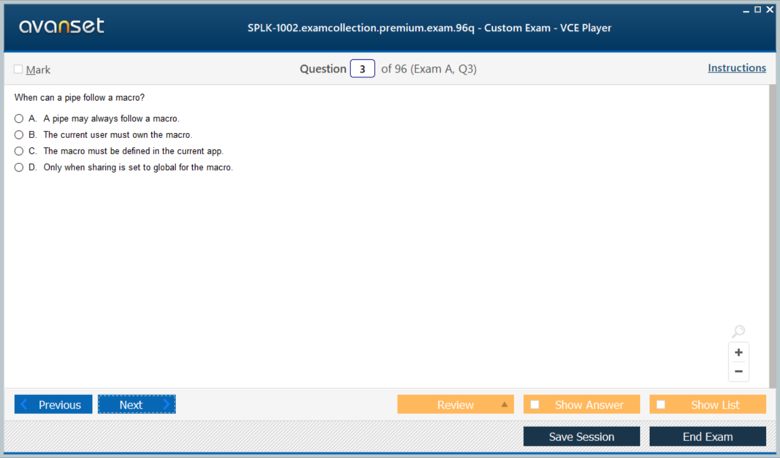

Success in the Splunk Core Certified Power User examination requires systematic preparation that encompasses theoretical knowledge acquisition, practical skill development, and strategic test-taking approaches. The examination format consists of 65 multiple-choice questions delivered within a 60-minute timeframe, demanding efficient time management and comprehensive understanding of core concepts. Candidates must demonstrate proficiency across diverse topic areas while maintaining accuracy under time pressure.

Effective preparation strategies begin with a thorough review of the official exam blueprint, which outlines the competency areas and their approximate weightings. The blueprint lets candidates allocate study time proportionally and focus intensive preparation on high-impact areas. Its topics have included using transforming commands for visualizations, filtering and formatting results, correlating events, creating and managing fields, creating field aliases and calculated fields, creating tags and event types, creating and using macros, creating and using workflow actions, creating data models, and using the Common Information Model (CIM) add-on.

Hands-on practice represents the most critical component of effective examination preparation, requiring access to functional Splunk environments where candidates can experiment with different techniques and validate their understanding through practical application. Many successful candidates establish personal laboratory environments using freely available Splunk software or leverage cloud-based training platforms that provide realistic analytical scenarios and datasets.

Sample question analysis provides valuable insights into examination format, question complexity, and expected response approaches. Official study materials often include representative questions that illustrate the depth and breadth of knowledge required for successful certification. Candidates should analyze these questions systematically, identifying knowledge gaps and focusing additional study efforts on areas requiring improvement.

Time management strategies prove crucial for examination success, particularly given the comprehensive scope of material covered within the allocated timeframe. Experienced candidates recommend systematic approaches that allocate specific time periods for different question types while maintaining flexibility to address challenging scenarios that require extended analysis.
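A quick budget makes the time pressure concrete. With 65 questions in 60 minutes:

```latex
\[
\frac{60\ \text{min} \times 60\ \text{s/min}}{65\ \text{questions}}
  = \frac{3600\ \text{s}}{65} \approx 55.4\ \text{s per question},
\qquad
\frac{65}{2} = 32.5\ \text{questions by the 30-minute mark.}
\]
```

Falling well behind that halfway figure is a signal to move on from any single difficult question.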

The examination delivery mechanism utilizes advanced proctoring technologies that ensure assessment integrity while providing flexible scheduling options. Candidates can schedule examinations at convenient times and locations through the testing partner's global network of examination centers or approved remote proctoring services.

Candidates who need more than one attempt can use their score reports to guide further study; where a report breaks results down by topic area, it points to the competencies that need additional development and supports a more targeted preparation strategy.

Implementation Excellence Through Advanced Configuration and Optimization Techniques

Advanced configuration techniques enable power users to optimize Splunk implementations for specific organizational requirements and performance characteristics. These capabilities encompass index configuration, search optimization, knowledge object organization, and system tuning strategies that maximize analytical capabilities while minimizing resource consumption. Practitioners develop expertise in balancing performance, functionality, and maintainability considerations across complex enterprise deployments.

Index configuration is primarily an administrator responsibility, but power users benefit from understanding data retention policies, storage considerations, and how index choices affect search performance. Knowing which indexes hold which data, and how long that data is retained, lets power users write searches that target the right index and time range, keeping query response times low. Administrators, in turn, design the indexing architecture, including retention and archival strategies that balance accessibility with cost-effectiveness.

Search optimization techniques encompass a wide range of strategies for improving query performance, including efficient command sequencing, intelligent time range selection, and appropriate index utilization. Advanced practitioners understand how different search patterns impact system resources and develop optimization strategies that maintain analytical completeness while minimizing computational overhead. These techniques prove particularly valuable in high-volume environments where query efficiency directly impacts user experience and system scalability.
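The benefit of filtering early can be demonstrated with a toy model. Both variants below return the same per-host counts for a given status, but the first passes only matching rows to the aggregation stage, as `index=web status=500 | stats count by host` would, while the second aggregates everything first, like `index=web | stats count by host status | search status=500`. The data and function are hypothetical; in Splunk, filtering in the base search also lets non-matching events be discarded before later commands ever see them.

```python
from collections import Counter

def count_by_host(events, status, filter_early=True):
    """Return (per-host counts for `status`, rows reaching the aggregation).

    filter_early=True  ~ index=web status=500 | stats count by host
    filter_early=False ~ index=web | stats count by host status | search status=500
    """
    if filter_early:
        rows = [e for e in events if e["status"] == status]
        return dict(Counter(e["host"] for e in rows)), len(rows)
    by_pair = Counter((e["host"], e["status"]) for e in events)
    result = {host: n for (host, s), n in by_pair.items() if s == status}
    return result, len(events)
```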

Advanced Knowledge Management and System Optimization Strategies for Enterprise Excellence

Modern enterprises face unprecedented challenges in managing vast repositories of intellectual assets while maintaining operational excellence across distributed technological ecosystems. The convergence of sophisticated analytical frameworks with robust organizational methodologies creates opportunities for transformative business outcomes. Organizations that master these intricate knowledge management paradigms position themselves advantageously within competitive landscapes, leveraging systematic approaches to harness collective intelligence while optimizing technological infrastructure performance.

Contemporary knowledge workers navigate increasingly complex informational architectures that demand sophisticated organizational capabilities. These professionals must orchestrate multifaceted analytical components while ensuring seamless collaboration across geographically dispersed teams. The proliferation of data-driven decision-making processes necessitates robust frameworks that can accommodate exponential growth in informational complexity without compromising operational efficiency or analytical accuracy.

The symbiotic relationship between knowledge organization and system performance creates cascading effects throughout organizational hierarchies. When implemented effectively, these integrated approaches yield substantial improvements in productivity, innovation capacity, and competitive positioning. Organizations that invest in comprehensive knowledge management strategies often discover enhanced problem-solving capabilities, accelerated development cycles, and improved stakeholder satisfaction metrics.

Sophisticated Knowledge Architecture Development and Implementation

Establishing comprehensive knowledge architectures requires meticulous planning and strategic forethought that extends beyond conventional organizational approaches. Modern enterprises must develop sophisticated taxonomical structures that accommodate diverse informational categories while maintaining logical relationships between disparate knowledge domains. These architectural foundations serve as the bedrock for all subsequent analytical activities and collaborative endeavors.

The development process begins with thorough auditing of existing informational assets, identifying redundancies, gaps, and optimization opportunities within current knowledge repositories. This comprehensive assessment reveals patterns in information utilization, highlighting areas where strategic reorganization can yield significant efficiency improvements. Organizations often discover that substantial portions of their intellectual capital remain underutilized due to inadequate organizational structures or insufficient accessibility mechanisms.

Strategic categorization methodologies emerge as fundamental components of effective knowledge architecture development. These approaches transcend simple hierarchical arrangements, incorporating multidimensional classification schemes that reflect the complex interrelationships between different knowledge domains. Advanced categorization frameworks utilize semantic tagging, contextual associations, and dynamic relationship mapping to create fluid organizational structures that adapt to evolving informational landscapes.

Metadata management represents another crucial element in sophisticated knowledge architecture development. Comprehensive metadata frameworks enable precise identification, retrieval, and utilization of specific informational components within vast repositories. These systems incorporate descriptive, structural, and administrative metadata elements that facilitate automated processing while maintaining human-readable organizational principles.

The integration of artificial intelligence and machine learning capabilities enhances knowledge architecture effectiveness through automated classification, content analysis, and predictive organization. These technological augmentations identify patterns in information usage, suggest optimal organizational structures, and continuously refine taxonomical arrangements based on user behavior analytics and system performance metrics.

Cross-functional collaboration emerges as a critical success factor in knowledge architecture development. Different organizational departments possess unique perspectives on informational utility and accessibility requirements. Incorporating diverse viewpoints during the architectural design process ensures that resulting frameworks accommodate varied use cases while maintaining coherent organizational principles across functional boundaries.

Scalability considerations must permeate every aspect of knowledge architecture development. Organizations anticipating rapid growth or expanding analytical requirements need frameworks that can accommodate increasing complexity without requiring fundamental restructuring. Modular architectural approaches enable incremental expansion while maintaining system integrity and organizational coherence.

Hierarchical Organization Paradigms and Structural Excellence

Contemporary hierarchical organization paradigms extend far beyond traditional tree-structured approaches, incorporating sophisticated methodologies that reflect the multifaceted nature of modern knowledge ecosystems. These advanced organizational schemes accommodate complex interdependencies while maintaining clear navigational pathways for users with varying expertise levels and analytical requirements.

Matrix-based organizational structures provide flexibility in knowledge categorization by enabling multiple classification dimensions simultaneously. These approaches recognize that individual knowledge components often possess relevance across multiple organizational categories, domains, or functional areas. Matrix structures facilitate cross-referencing while maintaining clear primary categorizations that support efficient navigation and retrieval processes.

Faceted classification systems represent an evolution beyond traditional hierarchical approaches, incorporating multiple independent classification dimensions that can be combined dynamically to create customized organizational views. These systems let users filter and organize knowledge according to their specific requirements while the underlying structure of the broader organizational framework stays intact.
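As a rough illustration of how independent facets combine, the Python sketch below filters a small catalog by any subset of facet values and counts the values of one facet. The `Item` class, the facet names (`domain`, `type`, `owner`), and the sample entries are all hypothetical; a real system would keep facets in an index rather than scan a list.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Item:
    name: str
    # Facet name -> value; each facet is an independent classification dimension.
    facets: dict[str, str] = field(default_factory=dict)


def facet_filter(items, **selected):
    """Return items matching every selected facet; unselected facets impose no constraint."""
    return [
        item for item in items
        if all(item.facets.get(facet) == value for facet, value in selected.items())
    ]


def facet_counts(items, facet):
    """Count how many items carry each value of one facet (the numbers a facet sidebar shows)."""
    return Counter(item.facets[facet] for item in items if facet in item.facets)


# Hypothetical catalog of knowledge components.
catalog = [
    Item("failed_logins", {"domain": "security", "type": "report", "owner": "soc"}),
    Item("web_latency", {"domain": "performance", "type": "dashboard", "owner": "ops"}),
    Item("firewall_denies", {"domain": "security", "type": "dashboard", "owner": "soc"}),
]

security_dashboards = facet_filter(catalog, domain="security", type="dashboard")
```

Calling `facet_filter(catalog)` with no facets returns every item, which is what makes each facet optional and freely combinable.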

Network-based organizational models leverage graph theory principles to represent complex relationships between knowledge components. These approaches excel in capturing non-linear associations, emergent patterns, and contextual dependencies that traditional hierarchical structures might obscure. Network models particularly benefit organizations dealing with highly interconnected knowledge domains where linear organizational approaches prove insufficient.
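To make the graph-theory point concrete, here is a minimal breadth-first search over an adjacency map that returns every component within a given number of hops of a starting node, together with its distance. The component names are hypothetical, and treating links as undirected is an assumption; a production knowledge graph would more likely live in a dedicated graph store.

```python
from collections import deque


def related_within(graph, start, max_hops):
    """Breadth-first search: map each node reachable from `start` in at most
    `max_hops` edges to its hop distance (the start node itself is excluded)."""
    distances = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distances[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in graph.get(node, ()):
            if neighbor not in distances:
                distances[neighbor] = distances[node] + 1
                queue.append(neighbor)
    distances.pop(start)
    return distances


# Hypothetical, undirected links between knowledge components.
knowledge_graph = {
    "auth_failures_report": {"auth_datamodel", "soc_dashboard"},
    "auth_datamodel": {"auth_failures_report", "user_lookup"},
    "soc_dashboard": {"auth_failures_report"},
    "user_lookup": {"auth_datamodel", "hr_export"},
    "hr_export": {"user_lookup"},
}
```

With `max_hops=2`, `hr_export` is excluded because it sits three links away from `auth_failures_report`, a non-linear association that a single parent/child hierarchy would not express.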

Temporal organization strategies recognize that knowledge relevance and utility evolve over time, requiring dynamic organizational frameworks that can accommodate changing priorities and shifting analytical requirements. These approaches incorporate versioning, lifecycle management, and temporal metadata that enable sophisticated temporal navigation while maintaining historical context and evolutionary trajectories.

Domain-specific organization methodologies acknowledge that different knowledge areas require specialized organizational approaches that reflect their unique characteristics and usage patterns. Scientific knowledge repositories might emphasize methodological categorization, while business intelligence frameworks focus on strategic alignment and operational relevance. Customized organizational schemes optimize user experience within specific domains while maintaining interoperability across broader organizational boundaries.

Adaptive organizational frameworks incorporate feedback mechanisms that enable continuous refinement based on usage patterns, user feedback, and analytical outcomes. These systems monitor organizational effectiveness through various metrics, automatically suggesting improvements or implementing minor organizational adjustments that enhance overall system utility without disrupting established workflows.

Comprehensive Naming Convention Standards and Methodological Consistency

Developing robust naming convention standards requires careful consideration of linguistic principles, technical constraints, and organizational culture factors that influence long-term adoption and effectiveness. These conventions serve as fundamental communication protocols that enable efficient knowledge discovery, accurate component identification, and seamless collaboration across diverse organizational contexts.

Semantic clarity emerges as the primary consideration in naming convention development. Effective naming schemes prioritize descriptive accuracy over brevity, ensuring that component names communicate essential characteristics without requiring extensive contextual knowledge. These approaches balance comprehensiveness with usability, creating names that remain meaningful across different organizational contexts and temporal periods.

Consistency frameworks establish systematic approaches to naming that eliminate ambiguity and reduce cognitive overhead associated with knowledge navigation. These frameworks define standardized patterns for different component types, incorporating prefixes, suffixes, and structural elements that convey categorical information while maintaining readable formats. Consistent application of these patterns enables intuitive navigation even within unfamiliar knowledge domains.
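One way to make a naming pattern enforceable rather than advisory is to express it as a regular expression and reject names that do not parse. The convention below (`GROUP_Type_category_description`) and its vocabulary of component types are hypothetical examples chosen for illustration, not an established standard.

```python
import re

# Hypothetical convention: GROUP_Type_category_description
#   GROUP        2-5 uppercase letters naming the owning team (prefix)
#   Type         one word from a fixed vocabulary of component types
#   category     a single lowercase word
#   description  lowercase words or digits joined by hyphens
COMPONENT_TYPES = ("Report", "Alert", "Dashboard", "Lookup", "Macro")
NAME_PATTERN = re.compile(
    r"(?P<group>[A-Z]{2,5})_"
    r"(?P<type>" + "|".join(COMPONENT_TYPES) + r")_"
    r"(?P<category>[a-z]+)_"
    r"(?P<description>[a-z0-9]+(?:-[a-z0-9]+)*)"
)


def parse_name(name):
    """Return the name's parts as a dict, or None if it breaks the convention."""
    match = NAME_PATTERN.fullmatch(name)
    return match.groupdict() if match else None
```

Because the parts come back as named groups, the same pattern that validates a name can also drive sorting or filtering by team, type, or category.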

Scalable naming architectures anticipate organizational growth and evolving analytical requirements by incorporating extensible frameworks that can accommodate new component types and expanded categorizations without disrupting existing naming schemes. These architectures utilize hierarchical naming patterns, namespace management, and reserved terminology spaces that enable seamless expansion while preserving backward compatibility.

Cross-platform compatibility considerations ensure that naming conventions function effectively across diverse technological environments and software platforms. These considerations encompass character set limitations, length restrictions, and special character handling that might vary between different systems. Universal compatibility enables knowledge portability and reduces integration complexities when implementing multi-platform analytical environments.

Version-aware naming strategies incorporate systematic approaches to component versioning that enable parallel development, regression tracking, and release management. These strategies utilize standardized versioning patterns that communicate temporal relationships, compatibility information, and development status through nomenclature alone, reducing dependencies on external documentation or metadata systems.
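The sketch below assumes a hypothetical `<base>_vMAJOR.MINOR.PATCH` suffix, loosely modeled on Semantic Versioning, and shows two things the name alone can support: picking the newest version of each component and treating versions with the same major number as compatible. Versions are compared as integer tuples, because plain string comparison would rank `v1.9.3` above `v1.10.0`.

```python
import re

# Hypothetical naming scheme: <base>_vMAJOR.MINOR.PATCH
VERSIONED = re.compile(r"(?P<base>.+)_v(?P<major>\d+)\.(?P<minor>\d+)\.(?P<patch>\d+)")


def parse_versioned(name):
    """Split a versioned name into (base, (major, minor, patch)) with integer parts."""
    match = VERSIONED.fullmatch(name)
    if match is None:
        raise ValueError(f"not a versioned name: {name!r}")
    return match["base"], (int(match["major"]), int(match["minor"]), int(match["patch"]))


def latest_versions(names):
    """Map each base name to its highest-versioned full name, comparing numerically."""
    best = {}
    for name in names:
        base, version = parse_versioned(name)
        if base not in best or version > best[base][1]:
            best[base] = (name, version)
    return {base: name for base, (name, _) in best.items()}


def is_compatible(old, new):
    """Assume compatibility when the base name and major version number match."""
    old_base, old_version = parse_versioned(old)
    new_base, new_version = parse_versioned(new)
    return old_base == new_base and old_version[0] == new_version[0]
```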

Collaborative naming protocols establish governance mechanisms that ensure consistent application of naming conventions across distributed development teams. These protocols define approval processes, validation mechanisms, and conflict resolution procedures that maintain naming integrity while accommodating diverse team preferences and specialized requirements within specific analytical domains.

Advanced Documentation Standards and Knowledge Preservation

Contemporary documentation standards transcend traditional static documentation approaches, incorporating dynamic, interactive methodologies that enhance knowledge accessibility while reducing maintenance overhead. These advanced standards recognize documentation as living knowledge assets that require continuous cultivation and strategic management rather than periodic updates or reactive maintenance activities.

Structured documentation frameworks establish systematic approaches to information organization that enhance readability, searchability, and maintainability across diverse knowledge domains. These frameworks utilize standardized templates, consistent formatting conventions, and semantic markup that enable automated processing while maintaining human-readable presentation formats. Structured approaches facilitate knowledge extraction, cross-referencing, and automated validation processes.

Interactive documentation paradigms leverage multimedia capabilities, embedded demonstrations, and dynamic content generation to create engaging knowledge experiences that accommodate diverse learning styles and expertise levels. These approaches incorporate executable examples, interactive tutorials, and contextual help systems that provide immediate practical value while reducing the cognitive burden associated with knowledge acquisition and application.

Contextual documentation strategies recognize that knowledge utility depends heavily on situational relevance and user context. These approaches incorporate adaptive content presentation, role-based information filtering, and situational guidance that customize documentation experiences according to user expertise, current objectives, and environmental constraints. Contextual adaptation enhances knowledge utility while reducing information overload.

Collaborative documentation platforms enable distributed knowledge creation and maintenance through sophisticated version control, collaborative editing, and peer review mechanisms. These platforms incorporate conflict resolution, change tracking, and attribution systems that maintain documentation integrity while enabling concurrent contributions from multiple stakeholders across different organizational functions and geographical locations.

Automated documentation generation leverages code analysis, behavior monitoring, and usage analytics to create self-updating documentation that remains synchronized with evolving analytical frameworks. These systems generate technical specifications, usage examples, and troubleshooting guides automatically, reducing manual maintenance requirements while ensuring documentation accuracy and completeness.

Knowledge validation frameworks incorporate systematic review processes, accuracy verification mechanisms, and currency maintenance procedures that ensure documentation reliability over extended periods. These frameworks establish quality assurance protocols, expert review cycles, and automated validation checks that identify outdated information, inconsistencies, or gaps requiring attention from subject matter experts.

Sophisticated Access Control Architecture and Permissions Management

Advanced access control architectures encompass multidimensional security paradigms that balance information accessibility with stringent protection requirements across complex organizational hierarchies. These sophisticated systems recognize that effective knowledge management requires granular control mechanisms that can accommodate diverse access patterns while maintaining security integrity and operational efficiency.

Role-based access control frameworks establish systematic approaches to permissions management that align security policies with organizational structures and functional requirements. These frameworks define standardized roles that encapsulate specific sets of permissions, enabling efficient management of user access rights while maintaining clear accountability relationships. Advanced role definitions incorporate temporal restrictions, contextual constraints, and conditional access rules that adapt to changing organizational requirements.
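A minimal role-based model can be expressed as roles that bundle permissions and inherit from other roles. The role names and `action:object` permission strings below are hypothetical; the point is that a user's access is derived from role membership rather than granted to the user directly.

```python
# Hypothetical roles: each bundles permissions and may inherit from other roles.
ROLES = {
    "viewer": {"inherits": [], "permissions": {"read:reports"}},
    "analyst": {"inherits": ["viewer"], "permissions": {"write:reports", "read:lookups"}},
    "admin": {"inherits": ["analyst"], "permissions": {"write:lookups", "manage:users"}},
}


def effective_permissions(role, roles=ROLES, _seen=None):
    """Collect a role's own permissions plus those of every role it inherits from."""
    seen = set() if _seen is None else _seen
    if role in seen:  # guard against inheritance cycles and repeated visits
        return set()
    seen.add(role)
    spec = roles[role]
    permissions = set(spec["permissions"])
    for parent in spec["inherits"]:
        permissions |= effective_permissions(parent, roles, seen)
    return permissions


def is_allowed(user_roles, permission, roles=ROLES):
    """A user holds a permission if any of their roles grants it, directly or by inheritance."""
    return any(permission in effective_permissions(role, roles) for role in user_roles)
```

Changing what every analyst may do then means editing one role definition instead of many individual user grants.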

Attribute-based access control systems provide enhanced flexibility through dynamic permission evaluation that considers multiple user characteristics, environmental factors, and resource attributes simultaneously. These systems enable sophisticated access policies that respond to contextual variables such as location, time, device characteristics, and current organizational status. Attribute-based approaches excel in complex environments where static role assignments prove insufficient for nuanced access requirements.
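By contrast with fixed roles, an attribute-based check evaluates rules against attributes of the subject, the resource, and the environment at request time. In this sketch the attribute names (`department`, `clearance`, `sensitivity`, `time`) and the three rules are hypothetical, and the policy denies access unless every rule passes.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class Request:
    subject: dict      # attributes of the requesting user
    resource: dict     # attributes of the object being accessed
    environment: dict  # contextual attributes such as time of day


def same_department(req):
    return req.subject["department"] == req.resource["department"]


def clearance_sufficient(req):
    return req.subject["clearance"] >= req.resource["sensitivity"]


def business_hours(req):
    return time(8, 0) <= req.environment["time"] < time(18, 0)


# Hypothetical policy: every rule must hold for access to be granted.
POLICY = [same_department, clearance_sufficient, business_hours]


def evaluate(request, policy=POLICY):
    """Permit only when the policy is non-empty and every rule returns True."""
    return bool(policy) and all(rule(request) for rule in policy)
```

The same user and resource can therefore be permitted at 09:30 and denied at 20:00, a distinction a static role assignment cannot make.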

Data classification strategies establish systematic approaches to information sensitivity assessment and protection level assignment. These strategies incorporate automated classification algorithms, manual review processes, and policy-driven categorization rules that ensure appropriate protection measures without unnecessarily restricting legitimate access requirements. Effective classification schemes balance security imperatives with operational efficiency considerations.
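Policy-driven categorization rules can be as simple as an ordered list of patterns, where the first match assigns a sensitivity label. The labels and regular expressions below are hypothetical and deliberately crude (the SSN-like pattern, for instance, misses reformatted numbers and can match unrelated digit groups); they illustrate the ordering principle, not a production detector.

```python
import re

# Hypothetical policy, ordered from most to least sensitive; the first match wins.
CLASSIFICATION_RULES = [
    ("restricted", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),                 # SSN-like pattern
    ("confidential", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),   # email addresses
    ("internal", re.compile(r"\b10(?:\.\d{1,3}){3}\b")),                 # 10.x.x.x addresses
]


def classify(text, rules=CLASSIFICATION_RULES, default="public"):
    """Return the label of the first rule whose pattern occurs in `text`."""
    for label, pattern in rules:
        if pattern.search(text):
            return label
    return default
```

Ordering the rules from most to least sensitive means a record that matches several rules always receives the strictest applicable label.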

Audit trail mechanisms provide comprehensive monitoring capabilities that track access activities, permission changes, and other security-relevant events within knowledge management systems. These mechanisms incorporate detailed logging, correlation analysis, and anomaly detection that enable proactive security monitoring while supporting compliance requirements and forensic analysis when security incidents occur.

Dynamic permission adjustment capabilities enable automatic access control modifications based on changing organizational circumstances, project requirements, or security conditions. These systems monitor contextual variables and automatically adjust permissions according to predefined policies, reducing administrative overhead while maintaining appropriate security postures across evolving operational environments.

Privacy protection frameworks ensure that access control systems comply with regulatory requirements while protecting individual privacy rights and organizational confidentiality. These frameworks incorporate data minimization principles, consent management mechanisms, and privacy impact assessment procedures that balance knowledge accessibility with privacy obligations across diverse jurisdictional requirements.

Performance Optimization Strategies and System Enhancement

Comprehensive performance optimization encompasses systematic approaches to identifying bottlenecks, implementing targeted improvements, and establishing sustainable enhancement processes that maintain optimal system operation across diverse workload conditions. These strategies recognize that performance requirements evolve continuously, demanding adaptive optimization frameworks that can respond to changing demands while preserving system stability and reliability.

Bottleneck identification methodologies utilize sophisticated monitoring tools, performance profiling techniques, and analytical modeling approaches to pinpoint specific system components that constrain overall performance. These methodologies incorporate statistical analysis, correlation studies, and predictive modeling that reveal performance patterns and identify optimization opportunities that might not be apparent through superficial system observation.

Resource allocation optimization strategies examine how computational resources, storage systems, and network bandwidth are distributed across various system components and analytical processes. These strategies identify inefficiencies in resource utilization, recommend reallocation approaches, and implement dynamic resource management policies that adapt to changing workload characteristics while maintaining performance guarantees for critical operations.

Caching architectures represent fundamental performance enhancement strategies that reduce computational overhead by storing frequently requested results so they do not have to be recomputed or re-fetched. Advanced caching implementations incorporate multilevel hierarchies, prefetching algorithms, and cache coherency protocols that maximize performance benefits while bounding storage requirements and maintaining data consistency across distributed system components.
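A least-recently-used (LRU) cache is one of the simplest eviction policies and shows the trade-off described above: a fixed capacity bounds storage, and each hit avoids a recomputation. This single-process sketch uses `collections.OrderedDict`; for pure functions, Python's built-in `functools.lru_cache` decorator applies the same policy.

```python
from collections import OrderedDict


class LRUCache:
    """Bounded cache that evicts the least recently used entry when full."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data = OrderedDict()  # insertion order doubles as recency order
        self.hits = 0
        self.misses = 0

    def get_or_compute(self, key, compute):
        """Return the cached value for `key`, calling `compute(key)` only on a miss."""
        if key in self._data:
            self._data.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._data[key]
        self.misses += 1
        value = compute(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry
        return value
```

With capacity 2 and the request sequence 1, 2, 1, 3, 2, only the second request for key 1 is a hit: key 3 evicts key 2, so the final request for key 2 must be recomputed.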

Parallel processing frameworks enable performance improvements through concurrent execution of analytical operations across multiple computational resources. These frameworks incorporate task decomposition algorithms, load balancing mechanisms, and result aggregation procedures that optimize computational throughput while maintaining result accuracy and system stability under varying load conditions.
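The decompose, execute, and aggregate pattern can be sketched with the standard library's `concurrent.futures`. The log format (severity level as the first token of each line) is an assumption made for illustration. Threads keep the example simple; for CPU-bound pure-Python work, `ProcessPoolExecutor` is usually the better choice because of CPython's global interpreter lock.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(lines, chunk_size):
    """Task decomposition: cut the input into independent slices."""
    return [lines[i:i + chunk_size] for i in range(0, len(lines), chunk_size)]


def count_levels(chunk):
    """Per-chunk work: count log levels, assumed to be the first token of each line."""
    return Counter(line.split(maxsplit=1)[0] for line in chunk if line.strip())


def parallel_level_counts(lines, chunk_size=1000, workers=4):
    """Run count_levels on every chunk concurrently and merge the partial results."""
    chunks = split_into_chunks(lines, chunk_size)
    total = Counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in submission order, so aggregation is deterministic.
        for partial in pool.map(count_levels, chunks):
            total.update(partial)
    return total
```

Because counting is associative, merging per-chunk counters gives exactly the same answer as a single sequential pass, which is the accuracy guarantee the paragraph above asks for.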

Database optimization techniques focus on query performance enhancement, index management, and storage optimization strategies that improve data retrieval efficiency while maintaining transactional integrity. These techniques encompass query optimization, schema design improvements, and storage engine selection that maximize database performance for specific analytical workload characteristics and access patterns.

Network optimization strategies address communication overhead, bandwidth utilization, and latency reduction across distributed system architectures. These strategies incorporate protocol optimization, compression techniques, and communication pattern analysis that minimize network-related performance constraints while maintaining reliable data transmission and system coordination across geographically distributed components.

Proactive Monitoring Framework Implementation and Alerting Systems

Advanced monitoring frameworks establish comprehensive observability across complex analytical environments through sophisticated instrumentation, metric collection, and analytical processing capabilities. These frameworks provide real-time visibility into system behavior while enabling predictive analysis that identifies potential issues before they impact operational performance or user experience.

Metric collection strategies encompass systematic approaches to gathering performance indicators, usage statistics, and behavioral data from all system components. These strategies balance comprehensive monitoring coverage with system overhead considerations, implementing efficient data collection mechanisms that provide valuable insights without significantly impacting system performance or consuming excessive computational resources.

Alerting mechanisms incorporate intelligent thresholds, contextual analysis, and escalation procedures that ensure appropriate notification of significant events while minimizing alert fatigue and false positive occurrences. Advanced alerting systems utilize machine learning algorithms to establish dynamic thresholds, correlate multiple metrics, and provide contextual information that enables rapid issue diagnosis and resolution.
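Machine-learning models are beyond the scope of a short example, so the sketch below substitutes a simpler statistical technique for a dynamic threshold: a value is flagged when it exceeds the trailing window's mean plus `k` sample standard deviations. The window size, `k`, and the warm-up length are hypothetical defaults that would need tuning against real data.

```python
from collections import deque
from statistics import mean, stdev


class DynamicThreshold:
    """Flag values above mean + k * stdev of a trailing window of recent values."""

    def __init__(self, window=20, k=3.0, min_points=5):
        self.values = deque(maxlen=window)  # oldest values drop out automatically
        self.k = k
        self.min_points = min_points  # no alerts until enough history exists

    def observe(self, value):
        """Return True if `value` breaches the current threshold, then record it."""
        breach = False
        if len(self.values) >= self.min_points:
            threshold = mean(self.values) + self.k * stdev(self.values)
            breach = value > threshold
        self.values.append(value)
        return breach
```

One design choice is worth noting: breaching values are still added to the history, so a sustained shift gradually raises the baseline and the alert eventually stops firing. Excluding breaches from the window would keep the alert active instead.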

Trend analysis capabilities enable identification of gradual performance degradation, capacity utilization patterns, and emerging issues that might not trigger immediate alerts but require proactive attention. These capabilities incorporate statistical modeling, forecasting algorithms, and pattern recognition techniques that reveal long-term trends and predict future system behavior based on historical data analysis.
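A basic form of trend forecasting fits a straight line to evenly spaced observations by ordinary least squares and extrapolates it to a capacity limit. The sketch below does this by hand; on Python 3.10 and later, `statistics.linear_regression` computes the same slope and intercept. Linear extrapolation is an assumption that breaks down for seasonal or accelerating growth.

```python
def linear_fit(ys):
    """Ordinary least squares fit of y = slope * x + intercept, with x = 0, 1, 2, ..."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two points")
    xs = range(n)
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def periods_until(ys, limit):
    """Forecast how many periods after the last observation the fitted trend reaches
    `limit`. Returns None when the trend is flat or decreasing."""
    slope, intercept = linear_fit(ys)
    if slope <= 0:
        return None
    x_at_limit = (limit - intercept) / slope
    return max(0.0, x_at_limit - (len(ys) - 1))
```

For daily disk usage of 50, 52, 54, 56, and 58 percent, the fit is slope 2 and intercept 50, so the trend reaches 90 percent at x = 20, sixteen days after the last observation.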

Dashboard design principles focus on creating intuitive visualization interfaces that communicate complex system status information effectively to diverse stakeholder groups. Effective dashboards incorporate hierarchical information presentation, customizable views, and drill-down capabilities that enable both high-level status monitoring and detailed diagnostic analysis according to user requirements and expertise levels.

Automated response capabilities enable immediate system adjustments in response to detected anomalies or performance degradation. These capabilities incorporate predefined response procedures, safety mechanisms, and escalation protocols that enable automated issue mitigation while maintaining human oversight for critical decisions that might impact system stability or data integrity.

Integration architectures ensure that monitoring frameworks operate seamlessly with existing organizational tools, notification systems, and operational procedures. These architectures incorporate standardized interfaces, data exchange protocols, and workflow integration mechanisms that embed monitoring capabilities within established operational processes without disrupting existing organizational practices.

Security Framework Architecture and Comprehensive Protection Strategies

Enterprise security frameworks require sophisticated architectural approaches that address evolving threat landscapes while maintaining operational flexibility and user productivity. These frameworks incorporate defense-in-depth strategies, zero-trust principles, and adaptive security measures that provide robust protection without unnecessarily constraining legitimate organizational activities or analytical processes.

Identity and access management systems establish centralized authentication and authorization mechanisms that provide seamless user experiences while maintaining stringent security controls. These systems incorporate single sign-on capabilities, multi-factor authentication requirements, and adaptive authentication policies that adjust security requirements based on risk assessments and contextual factors such as user behavior patterns and access locations.

Data protection strategies encompass comprehensive approaches to information security that address data at rest, data in transit, and data in processing across all system components. These strategies incorporate encryption protocols, key management systems, and data loss prevention mechanisms that ensure information confidentiality while enabling legitimate analytical processing and collaboration activities.

Threat detection capabilities utilize advanced analytics, behavioral analysis, and machine learning algorithms to identify potential security incidents before they compromise system integrity or organizational assets. These capabilities incorporate anomaly detection, pattern recognition, and correlation analysis that reveal sophisticated attack patterns while minimizing false positive alerts that could overwhelm security operations teams.

Incident response frameworks establish systematic procedures for addressing security events through coordinated response activities that minimize impact while preserving forensic evidence and maintaining operational continuity. These frameworks incorporate escalation procedures, communication protocols, and recovery strategies that enable rapid incident containment while ensuring appropriate stakeholder notification and regulatory compliance.

Compliance management systems ensure that security frameworks align with regulatory requirements, industry standards, and organizational policies across diverse jurisdictional environments. These systems incorporate automated compliance monitoring, audit trail generation, and regulatory reporting capabilities that demonstrate adherence to applicable requirements while minimizing administrative overhead associated with compliance activities.

Vulnerability management processes establish systematic approaches to identifying, assessing, and remediating security weaknesses across technological infrastructure and analytical frameworks. These processes incorporate automated vulnerability scanning, risk assessment methodologies, and remediation prioritization algorithms that ensure critical vulnerabilities receive appropriate attention while managing resource allocation across diverse security improvement initiatives.

Configuration Management Excellence and Deployment Automation

Modern configuration management transcends traditional system administration approaches, incorporating sophisticated automation frameworks that ensure consistency, reliability, and reproducibility across complex technological environments. These advanced methodologies recognize configuration management as a strategic capability that enables organizational agility while maintaining operational stability and security compliance.

Infrastructure as code paradigms transform configuration management through programmatic approaches that treat infrastructure definitions as software artifacts subject to version control, automated testing, and systematic deployment procedures. These paradigms enable precise environment replication, change tracking, and rollback capabilities that improve deployment reliability while reducing manual configuration errors and inconsistencies.

Automated provisioning systems streamline environment creation through orchestrated deployment procedures that eliminate manual intervention while ensuring consistent configuration application across diverse infrastructure components. These systems incorporate dependency management, sequencing logic, and validation procedures that coordinate complex deployment activities while maintaining system integrity throughout provisioning processes.

Configuration drift detection mechanisms identify unauthorized or accidental configuration changes that might compromise system security, performance, or functionality. These mechanisms incorporate baseline comparison algorithms, continuous monitoring capabilities, and automated remediation procedures that restore approved configurations while generating alerts for investigation of configuration deviations.
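At its core, baseline comparison is a diff between the approved configuration and what is currently deployed. This sketch assumes flat key/value configurations; the setting names are hypothetical, and nested structures would need a recursive comparison.

```python
def detect_drift(baseline, current):
    """Report settings added, removed, or changed relative to the approved baseline."""
    added = {key: current[key] for key in current.keys() - baseline.keys()}
    removed = {key: baseline[key] for key in baseline.keys() - current.keys()}
    changed = {
        key: (baseline[key], current[key])  # (approved value, observed value)
        for key in baseline.keys() & current.keys()
        if baseline[key] != current[key]
    }
    return {"added": added, "removed": removed, "changed": changed}


def has_drift(report):
    """True if any category of the drift report is non-empty."""
    return any(report.values())


# Hypothetical approved baseline and observed deployment state.
baseline = {"retention_days": 90, "tls_enabled": True, "max_concurrent_searches": 10}
observed = {"retention_days": 30, "tls_enabled": True, "debug_logging": True}
report = detect_drift(baseline, observed)
```

An automated remediation step would then reapply the baseline values for the reported keys and raise an alert so that someone can investigate why the change happened.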

Environment synchronization strategies ensure that development, testing, and production environments maintain appropriate configuration relationships while accommodating necessary differences for specific operational requirements. These strategies incorporate selective synchronization, environment-specific customization, and validation procedures that maintain configuration consistency where appropriate while preserving operational flexibility.

Change management integration connects configuration management processes with organizational change control procedures, ensuring that configuration modifications receive appropriate approval and documentation before implementation. These integrations incorporate workflow automation, approval tracking, and rollback procedures that maintain configuration governance while streamlining legitimate change processes.

Validation and testing frameworks verify configuration accuracy and functionality before deployment through automated testing procedures that identify potential issues before they impact operational environments. These frameworks incorporate functional testing, performance validation, and security verification procedures that ensure configuration changes meet quality standards while maintaining system reliability and security postures.

Advanced Analytical Framework Integration and Optimization

Sophisticated analytical framework integration requires comprehensive approaches that address technological compatibility, data flow optimization, and performance scalability across diverse analytical tools and methodologies. These integration strategies enable organizations to leverage specialized analytical capabilities while maintaining coherent data processing pipelines and consistent analytical outcomes.

Data pipeline orchestration systems coordinate complex analytical workflows that span multiple tools, platforms, and computational resources. These systems incorporate dependency management, error handling, and resource optimization algorithms that ensure reliable analytical processing while maximizing computational efficiency and minimizing processing delays across distributed analytical environments.
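Dependency management in an orchestrator comes down to running tasks in topological order. The sketch below uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) and adds one simple error-handling policy, chosen here as an assumption: when a task fails, everything downstream of it is skipped rather than run on incomplete data. The task names in the usage are hypothetical.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run tasks in dependency order and return name -> "ok" | "failed" | "skipped".

    tasks:        task name -> zero-argument callable
    dependencies: task name -> set of upstream task names (each must also be in tasks)
    Raises graphlib.CycleError if the dependencies contain a cycle.
    """
    graph = {name: dependencies.get(name, set()) for name in tasks}
    status = {}
    for name in TopologicalSorter(graph).static_order():
        if any(status.get(upstream) != "ok" for upstream in graph.get(name, ())):
            status[name] = "skipped"  # an upstream task failed or was skipped
            continue
        try:
            tasks[name]()
            status[name] = "ok"
        except Exception:
            status[name] = "failed"  # record the failure; dependents will be skipped
    return status
```

In a pipeline where `report` depends on both `normalize` and `enrich`, a failure in `enrich` leaves `normalize` unaffected but marks `report` as skipped.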

Interoperability frameworks establish standardized interfaces and data exchange protocols that enable seamless communication between diverse analytical tools and platforms. These frameworks incorporate data format translation, API standardization, and semantic mapping capabilities that eliminate integration barriers while preserving analytical accuracy and maintaining data integrity across tool boundaries.

Computational resource optimization strategies address the efficient utilization of processing power, memory allocation, and storage resources across analytical workflows. These strategies incorporate workload analysis, resource scheduling, and dynamic allocation algorithms that maximize analytical throughput while minimizing infrastructure costs and reducing environmental impact through efficient resource utilization.

Quality assurance mechanisms ensure analytical accuracy and reliability through systematic validation procedures, result verification algorithms, and consistency checks that identify potential issues in analytical processing. These mechanisms incorporate statistical validation, cross-verification procedures, and automated testing frameworks that maintain analytical integrity while enabling continuous improvement of analytical methodologies and processing efficiency.

Metadata integration ensures that analytical processes preserve contextual information, processing lineage, and quality indicators throughout complex analytical workflows. These integration approaches incorporate metadata propagation, contextual preservation, and audit trail maintenance that enable analytical transparency while supporting regulatory compliance and quality assurance requirements.

Performance scaling strategies enable analytical frameworks to accommodate increasing data volumes, computational complexity, and user concurrency without degrading analytical accuracy or response times. These strategies incorporate horizontal scaling, load distribution, and resource elasticity that maintain analytical performance while optimizing infrastructure utilization and operational costs.

Collaborative Excellence and Distributed Team Coordination

Effective collaboration within sophisticated knowledge management environments requires advanced coordination mechanisms that transcend traditional project management approaches. These mechanisms incorporate real-time collaboration tools, asynchronous communication frameworks, and distributed decision-making processes that enable productive teamwork across geographical, temporal, and organizational boundaries.

Workflow orchestration systems establish systematic approaches to coordinating complex collaborative activities that involve multiple stakeholders, sequential dependencies, and parallel processing requirements. These systems incorporate task assignment algorithms, progress tracking mechanisms, and conflict resolution procedures that ensure collaborative efficiency while maintaining quality standards and meeting project deadlines across distributed team environments.

Knowledge sharing platforms facilitate efficient exchange of insights, expertise, and analytical results through sophisticated communication tools that accommodate diverse collaboration styles and organizational cultures. These platforms incorporate discussion forums, expert networks, and mentoring frameworks that enable knowledge transfer while building organizational learning capabilities and professional development opportunities.

Conflict resolution mechanisms address disagreements, competing priorities, and resource allocation disputes through systematic approaches that preserve collaborative relationships while ensuring productive outcomes. These mechanisms incorporate mediation procedures, decision-making frameworks, and escalation protocols that resolve conflicts efficiently while maintaining team cohesion and organizational harmony.

Remote collaboration tools such as video conferencing, virtual workspaces, and distributed project management software let teams work effectively across distances. Support for scheduling across time zones and communication practices that account for cultural differences help keep collaboration productive when team members never share a room.

Integrating version control into collaborative work records who changed what, when, and why throughout a project's lifecycle. Branching strategies allow parallel development, merge procedures bring that work back together, and commit attribution preserves the history of each contribution and keeps ownership of intellectual property clear.

Collaborative quality assurance relies on systematic peer review, validation procedures, and shared improvement practices that draw on the team's distributed expertise while holding all work to consistent standards. Assigning reviewers by matching their expertise to the work under review, and settling review outcomes through agreed consensus rules, makes the best use of that expertise.
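Expertise-based reviewer assignment can be as simple as ranking candidates by topic overlap. The sketch below assumes each person's expertise is tagged with topic labels and that authors may not review their own work; the people, topics, and scoring rule are all hypothetical, and real teams may weigh workload or seniority as well.

```python
from typing import Dict, List, Set


def assign_reviewers(item_topics: Set[str], author: str,
                     expertise: Dict[str, Set[str]], k: int = 2) -> List[str]:
    """Return up to k reviewers with the largest topic overlap.

    The author is never chosen, people with no overlapping topics are skipped,
    and ties are broken alphabetically so the result is deterministic.
    """
    candidates = [
        (len(topics & item_topics), name)
        for name, topics in expertise.items()
        if name != author and topics & item_topics
    ]
    # Highest overlap first, then alphabetical by name.
    candidates.sort(key=lambda c: (-c[0], c[1]))
    return [name for _, name in candidates[:k]]
```

For a hypothetical data-model change tagged `{"spl", "data_models"}`, this picks the colleagues whose tags cover both topics ahead of those who cover one, and excludes anyone with no relevant expertise.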

Conclusion

Knowledge management has to evolve ahead of new technologies, changing business requirements, and shifting competition. Forward-looking organizations track technology trends, develop their teams' capabilities, and plan strategically so that they are well positioned for future opportunities and challenges.

Strategies for integrating emerging technology assess whether new capabilities can be added to an existing knowledge management framework without disrupting operations or forcing a large reorganization. Structured technology assessment, small pilot deployments, and explicit risk evaluation let organizations adopt new tools deliberately while keeping implementation risk and disruption low.

Organizational learning drives continuous improvement of knowledge management practices through systematic review of operational outcomes, user feedback, and performance metrics. Feedback loops reveal what needs to change, and controlled change procedures put those improvements in place, so the organization adapts while preserving institutional knowledge and operational continuity.

Scalability planning anticipates organizational growth, expanding analytical requirements, and rising complexity through capacity planning and deliberate architectural evolution. Growth modeling, capacity forecasting, and planned infrastructure scaling ensure that knowledge management capabilities keep pace with the organization while meeting performance and efficiency targets.
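Capacity forecasting often starts from a compound-growth model: if daily data volume is $V_0$ today and grows by a fraction $g$ per month, then after $n$ months it is $V_n = V_0(1+g)^n$. The sketch below assumes that model and a fixed capacity ceiling, and reports the first month in which projected volume exceeds it. The function name and figures are hypothetical; real forecasts should be checked against observed trends.

```python
from typing import Optional


def months_until_capacity(current_per_day: float, monthly_growth: float,
                          capacity_per_day: float, max_months: int = 120) -> Optional[int]:
    """First month n where current * (1 + growth) ** n exceeds capacity.

    Returns 0 if capacity is already exceeded, or None if it is not exceeded
    within max_months.
    """
    if current_per_day > capacity_per_day:
        return 0
    volume = current_per_day
    for month in range(1, max_months + 1):
        volume *= 1 + monthly_growth  # apply one month of compound growth
        if volume > capacity_per_day:
            return month
    return None
```

For example, 200 GB/day growing 5% per month against a 300 GB/day ceiling crosses the limit in month 9, since $1.05^8 \approx 1.48$ and $1.05^9 \approx 1.55$. That lead time is what a scaling plan has to fit within.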

