Pass Your Google Professional Data Engineer Certification Easily!

Google Professional Data Engineer Certification Exams Questions & Answers, Accurate & Verified By IT Experts

Instant Download, Free Fast Updates, 99.6% Pass Rate.

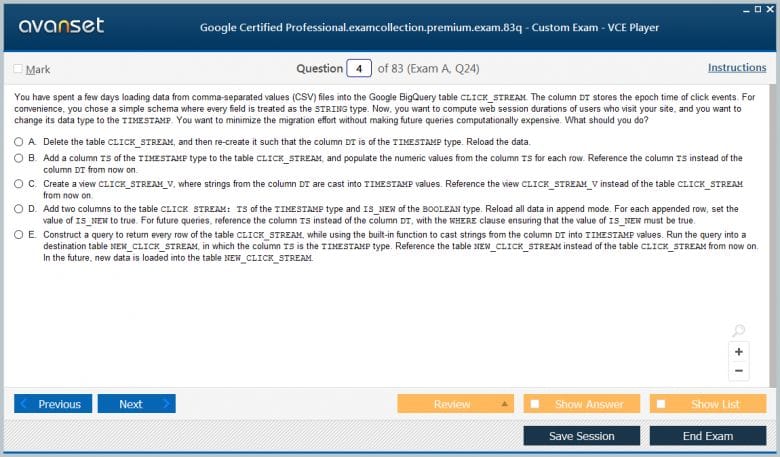

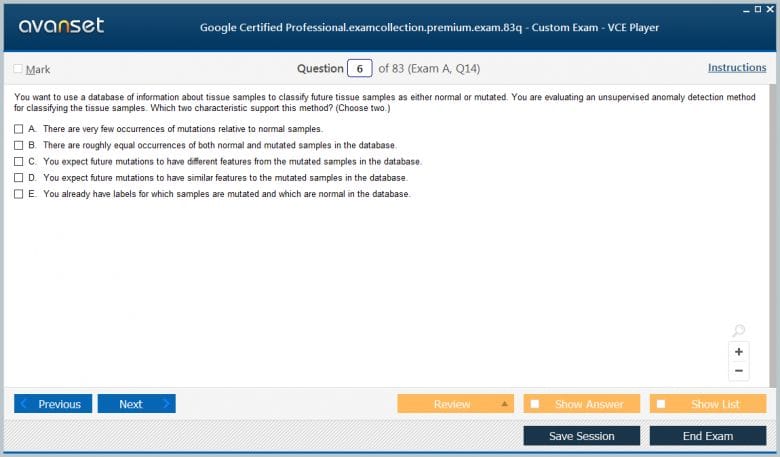

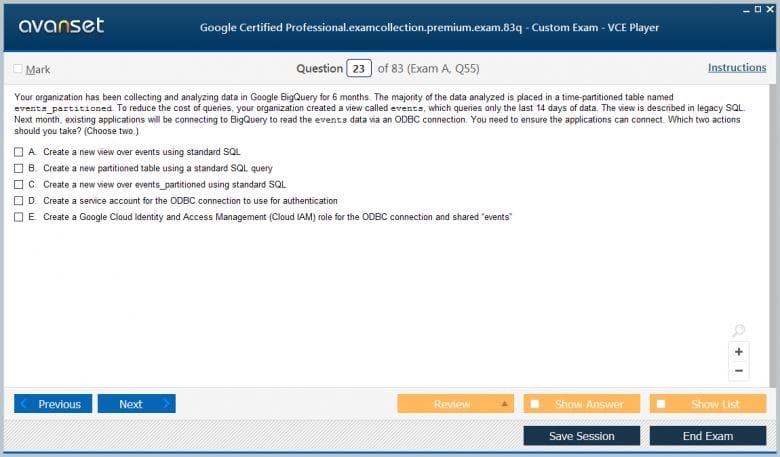

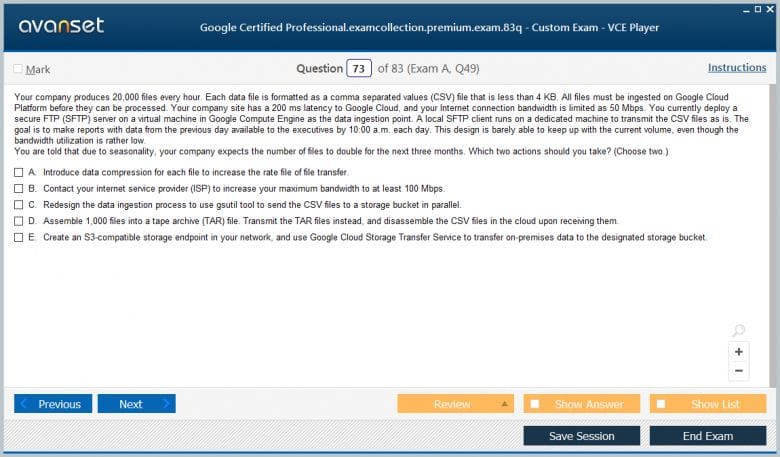

Google Professional Data Engineer Certification Exams Screenshots

Download Free Professional Data Engineer Practice Test Questions VCE Files

| Exam | Title | Files |
|---|---|---|
| Professional Data Engineer | Professional Data Engineer on Google Cloud Platform | 10 |

Google Professional Data Engineer Certification Exam Dumps & Practice Test Questions

Prepare with top-notch Google Professional Data Engineer certification practice test questions and answers, VCE exam dumps, study guides, and video training courses from ExamCollection. All Google Professional Data Engineer certification exam dumps and practice test questions and answers are uploaded by users who have passed the exam themselves and formatted them as VCE files.

Google Professional Data Engineer Certification: Your Ultimate Guide to Cloud Data Mastery

In the evolving world of technology, data has become the backbone of decision-making, business strategy, and innovation. Organizations rely heavily on data to understand customer behavior, optimize operations, and drive growth. As a result, the demand for skilled data engineers has skyrocketed, and certifications that validate expertise in this field have become highly sought after. Among these, the Google Professional Data Engineer Certification stands out as a globally recognized credential that demonstrates an individual's ability to design, build, and operationalize data processing systems on the Google Cloud Platform.

This certification not only validates technical skills but also emphasizes the ability to translate business requirements into actionable data-driven solutions. It is designed for data engineers, analysts, and IT professionals who are looking to advance their careers by showcasing proficiency in cloud-based data management, analytics, and machine learning integration. Achieving this certification signals to employers and peers that the professional possesses a deep understanding of modern data engineering practices and can effectively leverage cloud technologies to drive organizational success.

Core Responsibilities of a Data Engineer

Data engineering is a multifaceted role that combines elements of software engineering, database management, and analytics. A certified data engineer is expected to perform a range of responsibilities, including:

Designing Data Architectures

A key responsibility of a data engineer is to design scalable, reliable, and secure data architectures. This involves understanding the organization’s data requirements, choosing the right storage solutions, and creating structures that support efficient data retrieval and processing. Cloud platforms such as Google Cloud offer a variety of services to facilitate this, including BigQuery for analytics, Cloud Storage for raw data, and Cloud SQL for relational databases.

Building and Maintaining Data Pipelines

Data engineers are tasked with constructing pipelines that extract, transform, and load data (ETL processes) from multiple sources into centralized repositories. These pipelines must be automated, resilient, and capable of handling large volumes of data without interruption. Tools like Dataflow and Dataproc are commonly used in Google Cloud to manage these workflows efficiently.
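
As a rough illustration of the extract, transform, and load stages described above, the following plain-Python sketch runs a tiny in-memory pipeline. It does not use Dataflow or Dataproc; the function names (`extract`, `transform`, `load`), the sample CSV, and the list standing in for a warehouse table are all hypothetical.

```python
import csv
import io

# Hypothetical raw input; in practice this might be a file in Cloud Storage.
RAW_CSV = """order_id,amount,country
1,19.99,us
2,,de
3,5.00,US
"""


def extract(text):
    """Yield one dict per CSV row."""
    yield from csv.DictReader(io.StringIO(text))


def transform(rows):
    """Drop rows without an amount and normalise types and casing."""
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        yield {
            "order_id": int(row["order_id"]),
            "amount": float(row["amount"]),
            "country": row["country"].upper(),
        }


def load(rows, table):
    """Append rows to a list that stands in for a warehouse table."""
    table.extend(rows)
    return len(table)


warehouse_table = []
loaded = load(transform(extract(RAW_CSV)), warehouse_table)
print(loaded, warehouse_table)
```

Because each stage is a generator, rows stream through one at a time instead of being held in memory all at once, which is the same idea managed pipeline services apply at much larger scale.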

Ensuring Data Quality and Governance

Maintaining high-quality data is critical for accurate analysis and decision-making. Data engineers implement validation checks, enforce data standards, and monitor pipelines to ensure data integrity. Additionally, they are responsible for managing metadata, maintaining audit trails, and ensuring compliance with relevant data protection regulations.
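
One way to picture the validation checks mentioned above is a small rule table applied to every record. This is only a sketch: the field names, the rules in `RULES`, and the sample records are invented for illustration and do not correspond to any Google Cloud API.

```python
from datetime import date

# Hypothetical rules: each maps a field name to a predicate it must satisfy.
RULES = {
    "user_id": lambda v: isinstance(v, int) and v > 0,
    "email": lambda v: isinstance(v, str) and "@" in v,
    "signup_date": lambda v: isinstance(v, date),
}


def validate(record):
    """Return the list of field names that are missing or fail their rule."""
    return [
        field
        for field, rule in RULES.items()
        if field not in record or not rule(record[field])
    ]


def split_by_quality(records):
    """Separate valid records from rejected ones, keeping the reasons."""
    valid, rejected = [], []
    for record in records:
        errors = validate(record)
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected


records = [
    {"user_id": 1, "email": "a@example.com", "signup_date": date(2024, 1, 5)},
    {"user_id": -4, "email": "no-at-sign", "signup_date": date(2024, 2, 1)},
    {"user_id": 7, "email": "b@example.com"},
]
valid, rejected = split_by_quality(records)
print(len(valid), [errors for _, errors in rejected])
```

Keeping the rejected records together with the reasons they failed gives a simple audit trail that can be reviewed or replayed later.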

Enabling Data Analytics

Data engineers provide the foundation for data analysis by preparing clean, structured datasets. They collaborate closely with data analysts and scientists to ensure that the data is accessible and usable for generating insights. This often involves creating views, tables, and datasets in platforms like BigQuery and optimizing queries for performance.

Integrating Machine Learning

Modern data engineering often overlaps with machine learning (ML). Data engineers prepare datasets suitable for training models, manage feature stores, and help deploy ML models in production. Knowledge of AI and ML services within Google Cloud, such as Vertex AI (the successor to the legacy AI Platform), is essential for professionals aiming to leverage predictive analytics effectively.

Skills Validated by the Certification

The Google Professional Data Engineer Certification evaluates a broad set of skills, ensuring that certified professionals are equipped to handle complex data environments. The key competencies include:

Cloud Data Architecture Design

Candidates must demonstrate proficiency in designing data architectures that are scalable, reliable, and secure. This includes selecting the appropriate storage solutions, structuring data for analysis, and optimizing the overall architecture to meet performance and cost requirements.

Data Pipeline Development

The certification tests the ability to build robust data pipelines. Candidates must show that they can automate ETL processes, integrate data from multiple sources, and ensure pipeline efficiency and reliability. This skill ensures that data flows smoothly from collection to storage to analysis.

Data Security and Governance

Ensuring data security and governance is a critical aspect of the certification. Professionals must understand access controls, encryption, compliance standards, and data auditing practices. This ensures that organizations can trust the integrity and confidentiality of their data.

Data Analysis and Visualization

Certified data engineers must be capable of transforming raw data into actionable insights. They should know how to write queries, create dashboards, and generate reports that support business decision-making. This also involves understanding the needs of stakeholders and tailoring data solutions accordingly.

Machine Learning Integration

While the certification is not solely focused on machine learning, candidates should understand how to prepare data for ML models and deploy these models using Google Cloud services. Knowledge of feature engineering, model evaluation, and deployment pipelines is beneficial.

Benefits of Obtaining the Certification

Earning the Google Professional Data Engineer Certification provides numerous advantages for professionals seeking to advance in their careers:

Enhanced Career Opportunities

Certification opens doors to advanced roles in data engineering, analytics, and cloud architecture. Organizations value certified professionals for their verified expertise and ability to handle complex data challenges in cloud environments.

Industry Recognition

The credential is globally recognized, signaling to employers and peers that the professional possesses industry-standard skills and knowledge. This recognition can lead to more significant career opportunities and professional credibility.

Higher Earning Potential

Certified data engineers often command higher salaries due to their specialized skills. The certification demonstrates a proven ability to manage cloud-based data systems and extract meaningful insights, making professionals more valuable to organizations.

Practical Knowledge

Preparing for the certification equips professionals with hands-on experience using Google Cloud services. This practical knowledge is directly applicable in real-world scenarios, allowing certified individuals to implement solutions immediately.

Alignment with Business Goals

The certification emphasizes the ability to translate business needs into technical solutions. Professionals are trained to focus on outcomes that drive organizational success, making them integral to strategic initiatives.

Overview of Google Cloud Platform Tools

A critical part of the certification is familiarity with Google Cloud Platform (GCP) tools and services. Candidates should understand the purpose, functionality, and best practices for using these tools:

BigQuery

BigQuery is a serverless, highly scalable data warehouse designed for large-scale data analytics. It allows professionals to run SQL queries on massive datasets quickly and efficiently, making it a core tool for data analysis in Google Cloud.

Cloud Storage

Cloud Storage provides secure, durable, and highly available object storage. It is used for storing raw data, backups, and other large datasets. Understanding storage classes and access controls is essential for effective data management.

Dataflow

Dataflow is a fully managed service for stream and batch data processing. It is used to create and manage data pipelines that are reliable, scalable, and cost-efficient. Dataflow supports both ETL and real-time processing.

Dataproc

Dataproc is a managed Hadoop and Spark service that simplifies big data processing. It allows data engineers to run large-scale data processing jobs with minimal infrastructure management, making it ideal for batch processing and analytics.

Pub/Sub

Pub/Sub is a messaging service for building event-driven systems. It facilitates real-time data ingestion and streaming, enabling organizations to process events as they occur.

AI and Machine Learning Tools

Vertex AI, which supersedes the legacy AI Platform, provides machine learning services within Google Cloud. Data engineers use it to train, deploy, and manage ML models, integrating predictive capabilities into data pipelines.

Exam Structure and Preparation Tips

The Google Professional Data Engineer Certification exam consists of multiple-choice and scenario-based questions. Candidates are evaluated on their ability to apply knowledge in practical situations. Understanding the exam structure and preparing effectively are crucial for success.

Exam Focus Areas

Designing data processing systems

Building and operationalizing data pipelines

Ensuring data quality, security, and governance

Performing data analysis and visualization

Integrating machine learning into workflows

Preparation Strategies

Gain hands-on experience with GCP tools by creating pipelines and working with datasets.

Review Google Cloud documentation and best practices for storage, processing, and analytics.

Practice scenario-based questions to develop problem-solving skills.

Study case studies that demonstrate translating business needs into data solutions.

Join forums or study groups to discuss challenges and solutions with peers.

Time Management

The exam requires careful time management, as scenario-based questions may take longer to analyze. Practice under timed conditions to improve speed and accuracy.

The Google Professional Data Engineer Certification is a comprehensive credential that equips professionals with the skills and knowledge needed to succeed in cloud-based data engineering. It validates expertise in designing scalable architectures, building efficient pipelines, ensuring data quality, and enabling actionable insights. Certified professionals gain recognition, career advancement, and practical experience that is highly applicable in today’s data-driven organizations.

For those looking to advance in the field of data engineering, preparing for this certification is not just about passing an exam; it is about developing a deep understanding of cloud data systems and their impact on business decisions. With dedication, hands-on practice, and strategic preparation, earning this certification is a significant step toward becoming a leading professional in the world of cloud data engineering.

Advanced Preparation Strategies for the Google Professional Data Engineer Certification

Earning the Google Professional Data Engineer Certification requires more than theoretical knowledge. Candidates must develop practical skills, understand the Google Cloud ecosystem, and be able to solve real-world problems using cloud-based tools. A well-structured preparation plan is essential for success. This includes understanding the exam objectives, engaging in hands-on projects, reviewing case studies, and utilizing various learning resources effectively.

Understanding Exam Objectives

Before diving into preparation, it is crucial to comprehend the scope of the exam. The certification evaluates a candidate’s ability to design, build, and operationalize data processing systems, maintain data quality, analyze and visualize data, and integrate machine learning. Focusing on these domains helps candidates prioritize study topics and allocate time efficiently.

Key areas to focus on include:

Designing scalable and secure data architectures

Building and operationalizing ETL pipelines

Ensuring data governance and quality

Performing data analysis using BigQuery and other tools

Integrating machine learning models into production pipelines

Understanding these objectives allows candidates to approach preparation systematically and ensures that they are equipped to answer scenario-based questions effectively.

Hands-On Experience with Google Cloud Platform

Practical experience is a critical component of exam readiness. Working directly with Google Cloud services enables candidates to understand how data flows through different systems, how to configure services, and how to optimize pipelines for performance and cost. Hands-on practice builds confidence and reinforces theoretical knowledge.

Creating Data Pipelines

Building ETL pipelines is central to data engineering. Candidates should practice creating pipelines using Dataflow and Dataproc, integrating data from multiple sources such as Cloud Storage, Pub/Sub, and BigQuery. Tasks should include data ingestion, transformation, cleaning, and loading into analytics-ready formats.

Managing Data Storage

Understanding storage options within Google Cloud is essential. Candidates should work with Cloud Storage, BigQuery, Cloud SQL, and Firestore to store structured and unstructured data. Practice setting access controls, configuring data retention policies, and optimizing storage costs.

Implementing Data Governance

Hands-on experience with data governance reinforces best practices. Candidates should implement validation rules, monitor data quality, track metadata, and ensure compliance with regulations. This helps prepare for questions related to security and governance on the exam.

Integrating Machine Learning

Candidates should experiment with AI and ML services such as Vertex AI, which has replaced the older AI Platform. This involves preparing datasets for training, creating models, and deploying them in production pipelines. Understanding model evaluation, feature engineering, and monitoring ensures candidates can handle ML-related scenarios effectively.

Leveraging Case Studies

Studying real-world scenarios helps candidates understand how theoretical concepts are applied in practical environments. Google Cloud provides case studies demonstrating data engineering solutions for industries such as finance, healthcare, retail, and logistics. Reviewing these examples helps candidates grasp problem-solving approaches, pipeline design, and optimization strategies.

Key takeaways from case studies include:

Translating business requirements into technical designs

Choosing appropriate storage and processing solutions

Implementing monitoring, logging, and error-handling mechanisms

Ensuring data security and compliance

Analyzing case studies also prepares candidates for scenario-based questions on the exam, which often involve interpreting business needs and proposing technical solutions.

Practice Using Sample Questions

Regular practice with sample questions improves familiarity with the exam format and helps identify knowledge gaps. Candidates should attempt questions covering all exam domains, including data architecture, pipelines, governance, analytics, and machine learning. Reviewing explanations for correct and incorrect answers reinforces understanding and builds problem-solving skills.

Tips for practicing sample questions include:

Timing practice sessions to simulate exam conditions

Revisiting topics in which several questions were answered incorrectly

Focusing on scenario-based questions to improve analytical thinking

Consistent practice helps candidates approach the exam with confidence and enhances their ability to answer questions accurately under time constraints.

Developing a Study Schedule

A structured study plan ensures comprehensive coverage of exam objectives and allows candidates to balance hands-on practice with theory. A typical schedule may include:

Week 1–2: Review of Google Cloud fundamentals and core services

Week 3–4: Hands-on practice with data storage, ETL pipelines, and BigQuery

Week 5–6: Data governance, security, and monitoring exercises

Week 7–8: Machine learning integration and pipeline optimization

Week 9–10: Case study analysis, sample question practice, and final review

Allocating time for review and hands-on exercises ensures that candidates retain knowledge and develop the practical skills needed for exam success.

Utilizing Learning Resources

Various resources can support preparation for the certification. These include official Google Cloud training, online courses, video tutorials, practice labs, and community forums. Selecting the right combination of resources allows candidates to learn effectively and gain both theoretical knowledge and practical experience.

Recommended resource categories:

Official Google Cloud courses covering data engineering and analytics

Interactive labs and sandbox environments for hands-on practice

Video tutorials for step-by-step guidance on GCP services

Community forums for discussion, tips, and problem-solving

Using diverse resources provides a well-rounded preparation experience and exposes candidates to different problem-solving approaches.

Optimizing Data Pipelines for Performance

Understanding how to optimize pipelines is critical for data engineers. Candidates should learn techniques to improve efficiency, reduce costs, and ensure scalability. Optimization strategies include:

Parallel processing and distributed computing for large datasets

Minimizing data transfer and storage costs

Partitioning, clustering, and cached query results in BigQuery

Efficient use of Pub/Sub for real-time streaming data

Practical exercises in optimization help candidates demonstrate their ability to design effective data systems under real-world constraints.

Monitoring and Troubleshooting Pipelines

Monitoring and troubleshooting are essential skills for maintaining reliable data systems. Candidates should practice setting up logging, error notifications, and monitoring dashboards to detect and resolve issues proactively. Tools such as Cloud Monitoring and Cloud Logging (formerly part of Stackdriver) provide insights into pipeline performance and health.

Key activities for monitoring include:

Tracking data ingestion rates and pipeline throughput

Identifying bottlenecks and errors in ETL processes

Implementing alerts for pipeline failures or delays

Conducting root cause analysis to prevent future issues

These skills ensure that candidates can manage production data systems effectively, a core competency tested on the exam.
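
The monitoring activities listed above can be sketched as a threshold check over per-interval metrics. The `Sample` type, the threshold values, and the sample numbers are assumptions chosen for illustration; a real setup would read metrics from a monitoring service rather than from hard-coded values.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One hypothetical monitoring interval for a pipeline."""
    seconds: int
    records_in: int
    errors: int


def check(sample, min_throughput=100.0, max_error_rate=0.01):
    """Return alert messages for a single interval (thresholds are made up)."""
    alerts = []
    throughput = sample.records_in / sample.seconds
    error_rate = sample.errors / sample.records_in if sample.records_in else 0.0
    if throughput < min_throughput:
        alerts.append(f"low throughput: {throughput:.1f} records/s")
    if error_rate > max_error_rate:
        alerts.append(f"high error rate: {error_rate:.1%}")
    return alerts


samples = [
    Sample(seconds=60, records_in=12000, errors=30),  # healthy interval
    Sample(seconds=60, records_in=3000, errors=90),   # slow and error-prone
]
alerts = [check(s) for s in samples]
print(alerts)
```

An empty list means the interval was within both thresholds; any message in the list would be a candidate for a notification and a starting point for root cause analysis.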

Preparing for Scenario-Based Questions

Scenario-based questions are a significant component of the exam, requiring candidates to apply knowledge to solve practical problems. To prepare, candidates should practice:

Analyzing business requirements and identifying relevant data solutions

Evaluating trade-offs between cost, performance, and scalability

Designing end-to-end data pipelines for diverse use cases

Integrating analytics and machine learning to meet organizational objectives

Developing a structured approach to scenario questions improves accuracy and efficiency during the exam.

Building Confidence Through Mock Exams

Taking full-length mock exams simulates real exam conditions and helps build confidence. Candidates should focus on time management, accuracy, and applying knowledge to scenario-based questions. Mock exams also highlight areas that require further review, allowing for targeted preparation in the final stages.

Strategies for effective mock exams include:

Treating each exam seriously, with minimal distractions

Timing each section to improve pacing

Reviewing all incorrect answers and understanding the rationale

Adjusting the study plan based on performance and knowledge gaps

Consistent practice with mock exams ensures readiness and reduces anxiety on test day.

Applying Knowledge to Real-World Projects

One of the most effective preparation methods is applying skills to real-world projects. Candidates can create sample pipelines, analyze datasets, and deploy machine learning models in sandbox environments. This approach reinforces learning, enhances practical skills, and demonstrates the ability to implement solutions in professional settings.

Example projects include:

Building a real-time analytics pipeline for streaming data

Designing a scalable data warehouse using BigQuery and Cloud Storage

Implementing automated data quality checks and alerts

Deploying a machine learning model to predict trends based on historical data

Completing these projects helps candidates gain hands-on experience that mirrors real-world challenges and aligns with exam objectives.

Maintaining Continuous Learning

Data engineering and cloud technologies are constantly evolving. Candidates should adopt a mindset of continuous learning to stay updated with new tools, best practices, and industry trends. Subscribing to newsletters, attending webinars, and following thought leaders in cloud data engineering provides ongoing knowledge that complements certification preparation.

Advanced preparation for the Google Professional Data Engineer Certification combines theoretical knowledge, hands-on practice, case study analysis, and real-world project experience. Understanding exam objectives, practicing with GCP tools, optimizing pipelines, and preparing for scenario-based questions are essential for success.

Candidates who follow a structured study plan, leverage diverse learning resources, and engage in practical exercises build confidence and competence. Achieving this certification not only validates expertise in cloud data engineering but also enhances career opportunities, earning potential, and professional credibility. By dedicating time and effort to preparation, aspiring data engineers can successfully navigate the exam and emerge as skilled, certified professionals ready to tackle complex data challenges in the cloud.

Real-World Applications of Google Cloud Data Engineering

The Google Professional Data Engineer Certification emphasizes not only technical skills but also the ability to apply them to real-world business challenges. Data engineers must design and implement solutions that solve actual organizational problems, enabling businesses to make data-driven decisions. Understanding how data engineering principles are applied in different industries helps candidates grasp the relevance of the certification and prepare for scenario-based questions on the exam.

Industry Use Cases for Data Engineering

Data engineering plays a critical role across various industries. Some common use cases include:

Finance

In the financial sector, data engineers help process transactions, monitor risk, detect fraud, and generate real-time analytics. Pipelines ingest large volumes of data from multiple sources, such as trading platforms, customer databases, and market feeds, enabling analytics teams to make informed decisions quickly.

Healthcare

Healthcare organizations rely on data engineering for patient data management, medical research, and operational efficiency. Engineers design pipelines to aggregate electronic health records, medical imaging data, and IoT device readings, ensuring privacy, compliance, and accuracy for analysis.

Retail and E-commerce

Retailers use data engineering to track customer behavior, optimize inventory, and personalize marketing strategies. Data engineers build pipelines to process transaction logs, website activity, and social media interactions, providing analytics teams with actionable insights to improve sales and customer satisfaction.

Logistics and Supply Chain

In logistics, data engineering supports route optimization, demand forecasting, and supply chain monitoring. Engineers create real-time pipelines to process GPS data, inventory levels, and delivery schedules, allowing companies to reduce costs and improve operational efficiency.

Marketing and Advertising

Marketing organizations use data pipelines to track campaigns, measure performance, and optimize targeting. Engineers integrate data from CRM systems, social media platforms, and advertising networks, enabling marketers to adjust strategies based on timely insights.

Advanced Google Cloud Platform Services

The certification requires familiarity with both core and advanced GCP services. Mastery of these tools allows candidates to implement robust, scalable, and efficient data solutions.

BigQuery for Analytics

BigQuery is central to most GCP data solutions. It is a fully managed, serverless data warehouse designed for analytics at scale. Data engineers should practice writing complex queries, creating partitions and clusters, and optimizing performance for large datasets. Use cases include generating business intelligence dashboards, analyzing trends, and supporting machine learning pipelines.

Dataflow for ETL and Stream Processing

Dataflow enables both batch and real-time data processing. Engineers can design pipelines that clean, transform, and aggregate data from various sources. Use cases include processing streaming data from IoT devices, aggregating logs, and feeding analytical dashboards with live data.

Dataproc for Big Data Processing

Dataproc provides managed Hadoop and Spark clusters for big data processing. Engineers can leverage it for batch analytics, data transformation, and machine learning workloads. Use cases include log analysis, recommendation engines, and large-scale data cleansing.

Pub/Sub for Messaging

Pub/Sub is a messaging system that supports real-time data ingestion. Engineers use it to create event-driven architectures where data is streamed to pipelines as it arrives. Use cases include monitoring transactions in real time, tracking user behavior on websites, and integrating IoT device data.
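
To make the publish/subscribe pattern concrete, here is a toy in-memory broker. It is not the Pub/Sub client library; the `InMemoryBroker` class and its method names are hypothetical, and it only illustrates the idea that each subscription on a topic receives its own copy of every published message.

```python
from collections import defaultdict, deque


class InMemoryBroker:
    """Toy broker: every subscription gets its own copy of each message."""

    def __init__(self):
        # topic name -> {subscription name: queue of pending messages}
        self._subscriptions = defaultdict(dict)

    def subscribe(self, topic, name):
        self._subscriptions[topic][name] = deque()

    def publish(self, topic, message):
        for queue in self._subscriptions[topic].values():
            queue.append(message)

    def pull(self, topic, name):
        """Return the next pending message, or None if the queue is empty."""
        queue = self._subscriptions[topic][name]
        return queue.popleft() if queue else None


broker = InMemoryBroker()
broker.subscribe("clicks", "dashboard")
broker.subscribe("clicks", "archiver")
broker.publish("clicks", {"page": "home"})

first = broker.pull("clicks", "dashboard")
second = broker.pull("clicks", "archiver")
third = broker.pull("clicks", "dashboard")
print(first, second, third)
```

Decoupling publishers from subscribers this way lets a new consumer, such as an archiving job, be added without changing the code that produces events.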

Cloud Storage for Data Lake Architecture

Cloud Storage provides scalable and secure object storage. Data engineers use it to store raw data, backups, and intermediate datasets. Use cases include implementing data lakes, archiving historical data, and enabling analytics pipelines to access raw inputs efficiently.

Vertex AI for Machine Learning

Vertex AI allows engineers to train, deploy, and manage machine learning models. It supports both automated model building and custom model deployment. Use cases include predicting customer churn, detecting anomalies in transactions, and recommending products.

Designing End-to-End Data Solutions

Certified data engineers are expected to design complete data solutions that meet business requirements. This involves integrating multiple GCP services into coherent architectures.

Data Ingestion

Engineers must identify data sources and use appropriate tools to ingest data. For real-time data, Pub/Sub is typically used. For batch data, landing files in Cloud Storage, optionally followed by processing with Dataproc, may be more suitable.

Data Processing and Transformation

Once data is ingested, pipelines transform raw data into structured formats. Dataflow and Dataproc are commonly used for this step, applying cleaning, aggregation, and enrichment processes.

Data Storage and Organization

Processed data is stored in BigQuery, Cloud SQL, or Cloud Storage depending on its structure and usage. Engineers optimize storage by partitioning tables, clustering data, and setting access controls.

Analytics and Reporting

Data engineers prepare datasets for analytics teams. They create views, dashboards, and reports using BigQuery or third-party tools. Efficient query design and cost optimization are important considerations.

Machine Learning Integration

Where applicable, engineers integrate machine learning models into pipelines. They prepare training datasets, deploy models, and monitor predictions to ensure accuracy and performance.

Handling Real-Time Data Streams

Real-time data processing is increasingly important for businesses that require immediate insights. Data engineers must design pipelines capable of ingesting, transforming, and analyzing streaming data with minimal delay.

Key considerations for real-time processing:

Ensuring low latency for analytics and decision-making

Implementing data validation to maintain quality

Scaling pipelines to handle fluctuating data volumes

Monitoring and alerting to detect anomalies quickly

Using Pub/Sub with Dataflow allows engineers to design robust real-time pipelines that can be applied to applications such as fraud detection, monitoring IoT sensors, and tracking website activity.
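
A common building block in streaming pipelines is grouping events into fixed, non-overlapping time windows. The sketch below shows that idea in plain Python over a finite list; it does not use Dataflow or Apache Beam, it ignores concerns such as late-arriving data, and the event data and the function name `tumbling_window_counts` are hypothetical.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds=60):
    """Count events per (window start, key) using fixed-size windows."""
    counts = defaultdict(int)
    for timestamp, key in events:
        # Round the timestamp down to the start of its window.
        window_start = timestamp - (timestamp % window_seconds)
        counts[(window_start, key)] += 1
    return dict(counts)


# Hypothetical (epoch seconds, page) click events, not necessarily in order.
events = [
    (0, "home"),
    (15, "home"),
    (59, "cart"),
    (61, "home"),
    (30, "cart"),
    (125, "home"),
]
counts = tumbling_window_counts(events)
print(counts)
```

Because each event is assigned to a window by its own timestamp, the out-of-order event at second 30 still lands in the first window.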

Optimizing Pipelines for Cost and Performance

Efficiency is a key skill for data engineers. Candidates should understand how to optimize pipelines to balance cost, performance, and scalability. Techniques include:

Partitioning and clustering data in BigQuery to reduce query costs

Using batch processing where real-time updates are not required

Minimizing data movement between services

Leveraging caching strategies to speed up repetitive queries

Monitoring resource utilization to avoid over-provisioning

Optimization ensures that pipelines not only deliver accurate results but also remain cost-effective, which is often a focus in scenario-based exam questions.
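
The effect of partitioning on query cost can be illustrated with simple arithmetic: a query that filters on the partitioning column only needs to read the matching partitions. The partition sizes below are invented, and the model assumes cost is proportional to bytes read, which is a simplification.

```python
from datetime import date

# Hypothetical table: bytes stored per daily partition.
PARTITION_BYTES = {
    date(2024, 3, 1): 40 * 10**9,
    date(2024, 3, 2): 55 * 10**9,
    date(2024, 3, 3): 45 * 10**9,
    date(2024, 3, 4): 60 * 10**9,
}


def bytes_scanned(start, end, partitioned=True):
    """Bytes a date-range query reads, with or without partition pruning."""
    if not partitioned:
        return sum(PARTITION_BYTES.values())  # full table scan
    return sum(
        size for day, size in PARTITION_BYTES.items() if start <= day <= end
    )


query_day = date(2024, 3, 4)
full = bytes_scanned(query_day, query_day, partitioned=False)
pruned = bytes_scanned(query_day, query_day)
print(full, pruned, f"{1 - pruned / full:.0%} fewer bytes")
```

In this made-up example a single-day query reads 60 GB instead of 200 GB, and the saving grows as the table accumulates more days of history.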

Security and Compliance Considerations

Data engineers must implement robust security measures to protect sensitive information. This includes:

Setting Identity and Access Management (IAM) policies

Encrypting data at rest and in transit

Monitoring access logs and audit trails

Ensuring compliance with GDPR, HIPAA, or industry-specific regulations

Implementing role-based access to restrict data usage

Understanding security and compliance is critical, as real-world data solutions must meet organizational and regulatory requirements.
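
Role-based access, as mentioned in the list above, boils down to mapping principals to roles and roles to permissions. The following sketch models that lookup; the role names, permission strings, and email addresses are hypothetical and are not real IAM identifiers.

```python
# Hypothetical roles and the permissions each grants; not real IAM role names.
ROLE_PERMISSIONS = {
    "viewer": {"table.read"},
    "editor": {"table.read", "table.write"},
    "admin": {"table.read", "table.write", "table.delete"},
}

# Hypothetical bindings of principals to roles on a single dataset.
BINDINGS = {
    "analyst@example.com": {"viewer"},
    "pipeline@example.com": {"editor"},
}


def is_allowed(principal, permission):
    """True if any role bound to the principal grants the permission."""
    roles = BINDINGS.get(principal, set())
    return any(permission in ROLE_PERMISSIONS[role] for role in roles)


analyst_can_read = is_allowed("analyst@example.com", "table.read")
analyst_can_write = is_allowed("analyst@example.com", "table.write")
pipeline_can_write = is_allowed("pipeline@example.com", "table.write")
stranger_can_read = is_allowed("unknown@example.com", "table.read")
print(analyst_can_read, analyst_can_write, pipeline_can_write, stranger_can_read)
```

Principals without any binding are denied by default, which mirrors the least-privilege approach of granting only the access a role actually needs.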

Monitoring and Maintaining Data Pipelines

Once pipelines are operational, continuous monitoring and maintenance are essential. Engineers should use tools such as Cloud Monitoring and Cloud Logging (formerly Stackdriver) to track performance, detect errors, and optimize throughput. This includes setting up alerts, visualizing pipeline metrics, and performing root cause analysis for any issues.

Maintenance practices include:

Regularly reviewing pipeline logs for anomalies

Updating ETL processes as source data formats change

Scaling resources based on workload patterns

Conducting performance reviews to reduce costs

Effective monitoring ensures that pipelines remain reliable, accurate, and efficient over time.
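
One maintenance task from the list above, noticing when a source changes its data format, can be sketched as a comparison between an expected schema and an incoming record. The schema, the field names, and the function `schema_drift` are assumptions made for this example.

```python
# Hypothetical expected schema: field name -> Python type.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "country": str}


def schema_drift(record, expected=EXPECTED_SCHEMA):
    """Describe how a record differs from the expected field names and types."""
    missing = sorted(set(expected) - set(record))
    unexpected = sorted(set(record) - set(expected))
    wrong_type = sorted(
        name
        for name, kind in expected.items()
        if name in record and not isinstance(record[name], kind)
    )
    return {"missing": missing, "unexpected": unexpected, "wrong_type": wrong_type}


# The source now sends order_id as a string and has swapped country for currency.
drift = schema_drift({"order_id": "17", "amount": 9.5, "currency": "EUR"})
print(drift)
```

Running a check like this on a sample of incoming records can surface format changes before they cause failures further down the pipeline.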

Preparing for Complex Scenario-Based Questions

The exam often includes complex scenarios requiring candidates to propose solutions to real-world problems. To prepare:

Analyze business requirements carefully and identify key objectives

Consider trade-offs between performance, cost, and scalability

Use GCP best practices to design robust architectures

Document reasoning for proposed solutions, as understanding the rationale is often tested

Practicing with real-world scenarios, case studies, and sample questions helps candidates approach these challenges confidently.

Leveraging Community and Learning Resources

Community resources and collaborative learning can enhance preparation. Forums, discussion groups, and professional networks provide insights, study tips, and problem-solving strategies. Candidates can also benefit from sharing projects, receiving feedback, and staying updated on GCP developments.

Recommended activities include:

Participating in online discussion forums for data engineers

Attending webinars and workshops hosted by industry experts

Engaging with study groups for collaborative problem-solving

Following blogs, newsletters, and updates from Google Cloud

Using community resources complements formal training and provides practical insights into real-world challenges.

Continuous Learning and Skill Development

The field of data engineering is dynamic, with new tools, frameworks, and best practices emerging regularly. Certified professionals should adopt a mindset of continuous learning to stay relevant. This includes exploring new GCP services, learning about evolving data architectures, and experimenting with emerging technologies such as AI-driven analytics and serverless computing.

Real-world applications, industry-specific use cases, and advanced GCP services are central to the Google Professional Data Engineer Certification. Understanding how to design, implement, and optimize data pipelines for various industries prepares candidates for both the exam and professional practice.

By mastering BigQuery, Dataflow, Dataproc, Pub/Sub, Cloud Storage, and Vertex AI, candidates can develop scalable, secure, and efficient data solutions. Practical experience with real-time streaming, security, compliance, and monitoring ensures readiness for complex scenarios.

Engaging in hands-on projects, studying case studies, and leveraging community resources further enhances competence and confidence. Achieving this certification signifies not only technical expertise but also the ability to apply data engineering principles to solve real-world problems, making certified professionals highly valuable in today’s data-driven landscape.

Exam Strategies for the Google Professional Data Engineer Certification

Successfully passing the Google Professional Data Engineer Certification requires not only technical knowledge but also effective exam strategies. Understanding the structure of the exam, time management, and how to approach different types of questions is critical for success. Candidates should develop a systematic approach to ensure they can answer all questions accurately and efficiently.

Understanding the Exam Format

The certification exam consists of multiple-choice and multiple-select questions, many of them scenario-based, designed to test both theoretical knowledge and practical application. Scenario-based questions simulate real-world problems where candidates must design solutions, choose the appropriate tools, and optimize data pipelines based on given requirements.

Key points to consider:

The exam lasts two hours

Questions are scenario-driven, often involving multiple steps or decisions

Candidates are evaluated on their problem-solving ability, understanding of GCP services, and knowledge of best practices

Familiarity with the exam format helps candidates approach each question with confidence and reduces the likelihood of errors due to misinterpretation.

Time Management Techniques

Effective time management is essential for completing the exam within the allocated period. Candidates should allocate time based on question complexity and ensure they leave enough time to review their answers.

Strategies include:

Skimming through all questions first to identify easier ones

Allocating more time to complex scenario-based questions

Avoiding spending too long on a single question

Using remaining time to review answers and ensure no questions are left unanswered

Practicing under timed conditions with sample exams helps develop pacing skills and builds familiarity with the exam environment.
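
A pacing strategy comes down to simple arithmetic, sketched below. The two-hour length comes from the exam format described above. The question count of 50 and the 15-minute review reserve are assumptions for illustration, so check the current exam guide and adjust the numbers.

```python
def pacing_plan(total_minutes, question_count, review_minutes):
    """Return the minutes available per question after reserving review time."""
    if review_minutes >= total_minutes:
        raise ValueError("review reserve must be shorter than the exam")
    answering_minutes = total_minutes - review_minutes
    return answering_minutes / question_count


# Assumed values: 120-minute exam, 50 questions, 15 minutes held back for review.
per_question = pacing_plan(total_minutes=120, question_count=50, review_minutes=15)
print(f"{per_question:.1f} minutes per question")  # prints 2.1 minutes per question
```

With a budget of roughly two minutes per question, a scenario that has taken four or five minutes is a signal to mark it and move on.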

Approaching Scenario-Based Questions

Scenario-based questions are designed to test practical application of knowledge. Candidates should read each scenario carefully, identify the key requirements, and consider constraints such as cost, performance, and scalability.

Tips for handling scenario-based questions:

Break down the scenario into smaller components

Identify the most critical objectives and constraints

Evaluate multiple approaches before selecting the best solution

Use knowledge of GCP services to align solutions with best practices

Practicing these types of questions enhances analytical thinking and prepares candidates for real-world problem-solving.

Common Challenges and How to Overcome Them

Candidates often face specific challenges while preparing for or taking the exam. Being aware of these challenges and developing strategies to overcome them improves the likelihood of success.

Challenge: Understanding Complex Scenarios

Some scenarios involve multiple data sources, workflows, and constraints. Candidates may struggle to determine the optimal solution.

Solution: Break down the scenario, map out data flows, and evaluate each step against GCP best practices. Using diagrams or notes during practice can help visualize solutions.

Challenge: Remembering GCP Service Features

With numerous services and frequent updates, remembering features, limitations, and best practices can be overwhelming.

Solution: Focus on the most commonly used services in data engineering, such as BigQuery, Dataflow, Dataproc, Pub/Sub, Cloud Storage, and Vertex AI. Use mnemonics, flashcards, or summary sheets for quick recall.

Challenge: Time Pressure

Scenario-based questions can be time-consuming, and candidates may struggle to complete the exam on time.

Solution: Practice timed mock exams and develop a pacing strategy. Prioritize questions and avoid getting stuck on particularly complex scenarios.

Challenge: Applying Theory to Practical Problems

Some candidates excel at theoretical knowledge but find it challenging to apply concepts to real-world problems.

Solution: Engage in hands-on projects, case studies, and simulations. Building pipelines, processing data, and deploying ML models provides practical experience that translates directly to scenario-based questions.

Tips for Exam Preparation

A structured and strategic preparation approach enhances performance and confidence. Key tips include:

Develop a Study Plan

Allocate dedicated study time, balancing theory, hands-on practice, and review. A well-structured plan ensures comprehensive coverage of all exam objectives.

Focus on Hands-On Practice

Practical experience with GCP services is crucial. Build pipelines, analyze datasets, and deploy ML models. Practice troubleshooting and optimizing pipelines to simulate real-world tasks.

Review Documentation and Best Practices

Study Google Cloud documentation to understand service features, limitations, and recommended practices. Knowing best practices helps in choosing the most efficient and effective solutions.

Use Practice Exams and Sample Questions

Regularly attempt sample questions and mock exams. Review incorrect answers to identify knowledge gaps and reinforce learning. Scenario-based questions are particularly important for exam readiness.

Join Study Groups and Communities

Engaging with peers provides opportunities to discuss challenges, share insights, and learn alternative problem-solving approaches. Online forums, social media groups, and study communities can offer valuable support.

Stay Updated with Google Cloud Developments

GCP evolves rapidly, and being aware of new features, updates, and services can provide an edge. Follow official updates, blogs, and webinars to stay informed.

Leveraging the Certification for Career Growth

The Google Professional Data Engineer Certification offers significant career benefits. It validates expertise in cloud-based data engineering, making certified professionals highly attractive to employers.

Enhanced Career Opportunities

Certification opens doors to advanced roles, including:

Data Engineer

Cloud Data Architect

Data Analytics Specialist

Machine Learning Engineer

Employers value certified professionals for their verified skills, ability to design scalable solutions, and proficiency with cloud services.

Higher Earning Potential

Certified professionals often command higher salaries due to their specialized expertise. The certification demonstrates competence in building efficient, scalable data solutions that drive business value.

Industry Recognition

The credential is recognized globally and signifies that the professional meets industry standards for cloud data engineering. This recognition enhances professional credibility and can lead to new opportunities.

Opportunities for Advancement

Certification positions professionals for leadership roles in data engineering projects. They gain opportunities to design complex architectures, lead data strategy initiatives, and mentor junior engineers.

Networking and Professional Growth

Certification provides access to a community of certified professionals, events, and forums. Engaging with peers and industry experts fosters networking and continuous professional growth.

Strategies for Continuous Skill Enhancement

Data engineering is an evolving field, and continuous learning is essential. Certified professionals should focus on enhancing skills through:

Exploring advanced GCP services and integrations

Learning new programming languages and data tools

Engaging in real-world projects and consulting opportunities

Attending industry conferences and workshops

Collaborating with peers to share knowledge and best practices

Ongoing skill development ensures that certified professionals remain competitive and adaptable in a rapidly changing data landscape.

Overcoming Exam Anxiety

Many candidates experience anxiety before or during the exam. Effective strategies can reduce stress and improve performance.

Preparation and Familiarity

Confidence comes from preparation. Hands-on experience, practice exams, and scenario-based exercises reduce uncertainty and build self-assurance.

Time Management During the Exam

Plan how much time to spend on each question and avoid lingering too long on complex scenarios. Managing time effectively reduces stress and ensures completion.

Mindset and Focus

Approach the exam calmly and systematically. Read each question carefully, identify key requirements, and apply logical reasoning. Taking deep breaths and maintaining focus improves decision-making.

Simulated Exam Practice

Take full-length practice exams under timed conditions to simulate the real experience. This helps manage nerves and builds endurance for the actual test.

Preparing for Post-Certification Opportunities

Achieving the Google Professional Data Engineer Certification is a milestone, but leveraging it effectively is equally important. Certified professionals can pursue new roles, negotiate higher salaries, and contribute strategically to organizational initiatives.

Career Planning

Identify roles and industries where data engineering skills are in high demand. Tailor your resume and portfolio to highlight certification and hands-on projects.

Demonstrating Expertise

Showcase practical experience through case studies, projects, and contributions to open-source initiatives. Demonstrating real-world problem-solving enhances credibility.

Continuing Education

Consider complementary certifications, such as machine learning, cloud architecture, or advanced analytics. These credentials broaden expertise and open additional career paths.

Mentorship and Leadership

Certified professionals can mentor junior engineers, lead projects, and influence data strategy. This positions them as thought leaders and enhances career advancement opportunities.

The Google Professional Data Engineer Certification requires a combination of technical knowledge, hands-on experience, and strategic exam preparation. Candidates must understand GCP services, build and optimize pipelines, handle real-world scenarios, and approach the exam with effective strategies.

By focusing on scenario-based questions, managing time efficiently, leveraging learning resources, and applying knowledge to practical projects, candidates can overcome challenges and succeed in the certification exam. Post-certification, professionals gain enhanced career opportunities, industry recognition, and higher earning potential, while positioning themselves for continuous growth in the data engineering field.

The certification not only validates technical expertise but also equips professionals with the skills and confidence to contribute strategically to organizations, design scalable and efficient data solutions, and lead initiatives that drive business value in a data-driven world.

Future Trends in Data Engineering

Data engineering is evolving rapidly, driven by advancements in cloud technologies, artificial intelligence, and the growing volume of data generated daily. Professionals pursuing the Google Professional Data Engineer Certification must not only master current tools and practices but also stay informed about emerging trends that shape the future of the industry.

Serverless Data Architectures

Serverless computing is transforming how data pipelines are built and deployed. Serverless architectures allow data engineers to focus on processing and analyzing data without managing infrastructure. Services like BigQuery and Dataflow are examples of serverless solutions that automatically scale to handle varying workloads. Future data engineers will increasingly adopt serverless approaches to reduce operational overhead and improve cost efficiency.

Real-Time Analytics and Streaming Data

Real-time data processing is becoming a standard requirement in many industries, from finance to e-commerce. Engineers are leveraging streaming services, such as Pub/Sub and Dataflow, to process data as it is generated. This trend enables organizations to react to events immediately, enhancing decision-making and customer experiences. Mastery of streaming data technologies will be essential for certified professionals moving forward.
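
A core idea behind streaming analytics is windowing: grouping an unbounded stream of events into fixed time intervals so that aggregates can be emitted continuously. The sketch below counts events per key in fixed, non-overlapping (tumbling) windows. It is plain Python over an in-memory list, not the Dataflow or Apache Beam API, and it ignores late-arriving data and watermarks, which a real streaming engine has to handle. The event names are made up.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per key in fixed, non-overlapping time windows.

    `events` is an iterable of (timestamp_seconds, key) pairs.
    Returns a dict mapping (window_start, key) to the event count.
    """
    counts = defaultdict(int)
    for timestamp, key in events:
        # Round the timestamp down to the start of its window.
        window_start = (timestamp // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


events = [(0, "checkout"), (12, "checkout"), (59, "view"), (61, "checkout")]
print(tumbling_window_counts(events, window_seconds=60))
# prints {(0, 'checkout'): 2, (0, 'view'): 1, (60, 'checkout'): 1}
```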

Integration of Artificial Intelligence and Machine Learning

Data engineering and machine learning are increasingly intertwined. Preparing datasets, managing feature stores, and deploying ML models are becoming integral to the data engineer role. Google Cloud’s Vertex AI, which supersedes the older AI Platform, enables seamless integration of machine learning workflows. Future data engineers will be expected to have skills that bridge the gap between data pipelines and predictive analytics.

DataOps and Automation

Automation in data engineering, often referred to as DataOps, is gaining traction. Automated testing, monitoring, and deployment of data pipelines improve reliability and reduce errors. Data engineers must adopt tools and practices that enable continuous integration and continuous delivery (CI/CD) of data workflows, ensuring scalable and robust systems.
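
Automated testing in a DataOps workflow often starts with ordinary unit tests on individual transforms, run by the CI system on every change. The sketch below shows the pattern with a hypothetical `normalize_order` transform and a test for it. The record fields are invented for illustration, and a real project would typically run such tests with a framework like pytest.

```python
def normalize_order(record):
    """Hypothetical transform: trim the order id and convert the amount to cents."""
    return {
        "order_id": record["order_id"].strip(),
        # round() absorbs floating-point error such as 19.99 * 100 = 1998.9999...
        "amount_cents": round(float(record["amount"]) * 100),
    }


def test_normalize_order():
    """Fails with AssertionError if the transform's behavior changes."""
    result = normalize_order({"order_id": " A-17 ", "amount": "19.99"})
    assert result == {"order_id": "A-17", "amount_cents": 1999}


test_normalize_order()
```

Keeping transforms as small, pure functions like this one is what makes them testable without a running pipeline.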

Enhanced Data Governance and Security

As data privacy regulations evolve, data engineers must prioritize governance and security. Techniques for encryption, access control, data masking, and auditing will become increasingly sophisticated. Certified data engineers will need to design systems that are compliant, secure, and resilient to cyber threats.
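
Two common masking techniques can be sketched with the Python standard library: partial redaction for display, and keyed hashing (pseudonymization) so that a sensitive value can still be used as a join key without being readable. The function names and the key below are hypothetical. In practice the key would come from a secret manager, and a managed de-identification service may be a better fit than hand-written code.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Replace a value with a keyed SHA-256 digest; equal inputs give equal outputs."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


key = b"demo-key-not-for-production"  # hypothetical key, for illustration only
print(mask_email("jane.doe@example.com"))  # prints j***@example.com
print(len(pseudonymize("jane.doe@example.com", key)))  # prints 64
```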

Hybrid and Multi-Cloud Strategies

Organizations are adopting hybrid and multi-cloud strategies to increase flexibility and reduce dependency on a single provider. Data engineers must design pipelines that can operate across multiple cloud platforms, ensuring seamless integration, data portability, and resilience. Understanding interoperability and cloud-agnostic practices will be a valuable skill for future professionals.

Emerging Tools and Technologies

Keeping pace with emerging tools is crucial for staying competitive in data engineering. Candidates and certified professionals should explore innovations that enhance efficiency, scalability, and analytics capabilities.

Data Virtualization

Data virtualization enables access to multiple data sources without physically moving or copying data. Engineers can query and analyze disparate datasets in real time, reducing storage costs and complexity. Tools supporting virtualization allow faster insights and more flexible architecture designs.

Advanced Analytics Platforms

Platforms that integrate analytics, machine learning, and AI capabilities into unified ecosystems are becoming more popular. Engineers can leverage these platforms to streamline pipelines, automate model training, and deploy predictive insights efficiently.

Streamlined ETL/ELT Tools

Modern ETL and ELT tools, including managed services and automation frameworks, simplify pipeline creation and maintenance. These tools reduce manual coding, improve reliability, and enhance data quality, allowing engineers to focus on strategic problem-solving.

Cloud-Native Machine Learning Integration

Machine learning integration within cloud platforms continues to evolve. Tools for feature engineering, model deployment, and real-time predictions are becoming more accessible. Certified professionals must stay updated on these services to deliver impactful solutions that combine analytics and predictive insights.

Long-Term Career Impact of Certification

The Google Professional Data Engineer Certification has significant long-term benefits, positioning professionals for advanced roles and continuous growth in the data engineering field.

Career Advancement Opportunities

Certification opens doors to senior positions such as senior data engineer, cloud architect, data analytics manager, or machine learning engineer. These roles involve designing complex architectures, leading projects, and shaping data strategy at an organizational level.

Increased Job Market Competitiveness

Certified professionals are highly sought after in a competitive job market. The credential demonstrates verified skills and practical experience with cloud technologies, setting candidates apart from non-certified peers.

Expanded Professional Network

Achieving certification provides access to a global community of certified data engineers. Networking opportunities include professional forums, industry events, and collaborative projects, fostering knowledge sharing and career growth.

Higher Earning Potential

The certification often correlates with higher salaries due to the specialized expertise it represents. Organizations recognize certified professionals as capable of designing efficient, scalable, and secure data solutions that drive business value.

Opportunities for Specialization

With foundational skills validated, certified professionals can pursue specialized areas such as machine learning engineering, data architecture, real-time analytics, or cloud security. Specialization enhances career prospects and positions professionals as experts in niche domains.

Continuous Professional Development

Data engineering is dynamic, and the certification encourages a mindset of lifelong learning. Professionals must continue exploring new services, frameworks, and best practices to maintain expertise and relevance in the industry.

Leveraging the Certification in Career Planning

To maximize the value of the Google Professional Data Engineer Certification, professionals should strategically leverage it in their career development.

Resume and Portfolio Enhancement

Highlighting certification on resumes, LinkedIn profiles, and portfolios signals technical proficiency and commitment to professional growth. Including hands-on projects and real-world implementations further strengthens credibility.

Targeting High-Demand Roles

Certified professionals can target roles in industries with high data engineering demand, such as technology, finance, healthcare, e-commerce, and logistics. Understanding industry-specific requirements allows for tailored applications and higher chances of success.

Positioning for Leadership Roles

Certification equips professionals with the knowledge and confidence to lead data engineering projects. Demonstrating the ability to design end-to-end solutions, manage teams, and optimize processes positions individuals for managerial and strategic roles.

Exploring Consulting and Freelance Opportunities

Certified data engineers can leverage their expertise for consulting or freelance opportunities. Organizations often seek certified professionals to design solutions, migrate data to the cloud, or optimize existing pipelines, providing diverse career paths.

Future-Proofing Your Data Engineering Skills

The field of data engineering is constantly evolving. Certified professionals must adopt strategies to remain adaptable and future-ready.

Continuous Learning

Stay updated with new Google Cloud services, features, and best practices. Engage in online courses, workshops, and webinars to expand knowledge.

Hands-On Experimentation

Regularly experiment with emerging tools and technologies. Building sample projects and exploring innovative solutions reinforces learning and prepares professionals for future challenges.

Industry Awareness

Monitor trends in data engineering, cloud computing, and analytics. Understanding market demands and emerging practices allows professionals to align skills with evolving industry needs.

Collaboration and Mentorship

Engage with peers, mentors, and professional communities to share knowledge, solve challenges, and gain insights from industry leaders. Collaboration fosters growth and keeps skills relevant.

Adapting to Hybrid and Multi-Cloud Environments

As organizations adopt hybrid and multi-cloud strategies, proficiency in integrating pipelines across platforms ensures adaptability and positions professionals as versatile data engineers.

Conclusion

The Google Professional Data Engineer Certification is not only a credential validating technical expertise but also a gateway to long-term career growth and professional development. Understanding emerging trends, leveraging advanced GCP services, and applying knowledge to real-world scenarios equips certified professionals to design scalable, efficient, and secure data solutions.

The certification prepares data engineers for future challenges, including serverless architectures, real-time analytics, machine learning integration, and automation through DataOps. Certified professionals gain enhanced career opportunities, higher earning potential, industry recognition, and access to a global community of peers.

Continuous learning, hands-on practice, and strategic career planning ensure that the value of the certification extends beyond passing the exam. It positions professionals to thrive in a dynamic, data-driven world, enabling them to contribute meaningfully to organizational success and establish themselves as leaders in the field of cloud data engineering.

By embracing emerging technologies, staying adaptable, and leveraging the certification effectively, data engineers can secure long-term success, remain competitive in the job market, and drive innovation across industries. The Google Professional Data Engineer Certification is not just a milestone; it is a foundation for a thriving and future-ready career in the rapidly evolving world of data engineering.

