Mastering Cloud Security: A Complete Guide to ISC CCSP Certification and Career Advancement

The modern business environment is rapidly embracing cloud technologies, and with this shift comes an urgent demand for skilled professionals who can secure cloud environments. Organizations across industries are increasingly reliant on cloud infrastructure for storing data, running applications, and enabling collaboration. However, the convenience and flexibility of cloud computing also bring unique security challenges that traditional IT environments may not face.

The Certified Cloud Security Professional (CCSP) certification, offered by ISC², is a globally recognized credential designed for IT and cybersecurity professionals seeking to demonstrate expertise in cloud security. It validates the knowledge and practical skills required to secure cloud systems effectively, manage data protection, and ensure compliance with industry standards. CCSP-certified professionals are equipped to address cloud-specific threats and risks, making them highly valuable to organizations worldwide.

Why Cloud Security is Critical

Cloud computing offers numerous advantages, including scalability, reduced operational costs, and remote accessibility. However, these benefits come with inherent risks that can significantly impact businesses if not properly managed. Cloud security has emerged as one of the top concerns for organizations, especially as cyber threats continue to evolve in complexity.

One of the primary risks is data breaches. Sensitive information stored in the cloud is a prime target for cybercriminals. Unauthorized access, weak access controls, and misconfigured cloud services are common sources of data exposure. Organizations must also comply with regulations such as GDPR and HIPAA, and many align with standards such as the ISO/IEC 27000 family, which set requirements for data privacy and security. Failure to comply can lead to severe penalties and reputational damage.

Other challenges include insider threats, inadequate monitoring, and vulnerabilities in cloud applications or infrastructure. The CCSP certification equips professionals with the knowledge to design secure cloud architectures, implement robust security measures, and proactively mitigate risks. This makes CCSP holders essential assets in organizations that rely on cloud technology.

The Core Domains of CCSP

The CCSP exam is structured around six key domains, each covering critical aspects of cloud security. A deep understanding of these domains ensures that professionals are prepared to handle the complex security requirements of cloud environments.

Cloud Concepts, Architecture, and Design

This domain provides foundational knowledge of cloud computing, including service models and deployment types. Professionals learn to:

Differentiate between Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS).

Understand deployment options such as public, private, hybrid, and multi-cloud environments.

Apply secure design principles for cloud systems, ensuring scalability, resilience, and high availability.

By mastering this domain, professionals can design cloud architectures that meet business needs while maintaining robust security standards.
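One way to make the IaaS/PaaS/SaaS distinction concrete is a shared responsibility matrix. The sketch below is a simplified, hypothetical model: the layer names and the exact point where customer responsibility begins are assumptions, and real boundaries depend on the provider and the contract. The constant pattern is that the higher up the stack the service model reaches, the fewer layers the customer secures, while data and identity stay with the customer.

```python
# Simplified, illustrative shared responsibility matrix (layer names are assumptions).
# Layers are ordered from the bottom of the stack to the top.
LAYERS = [
    "facilities",
    "physical_hosts",
    "hypervisor",
    "virtual_network",
    "os",
    "runtime",
    "application",
    "data",
    "identity_access",
]

# First layer the customer secures under each model; every layer above it is also
# the customer's responsibility. Real splits vary by provider and contract.
CUSTOMER_STARTS_AT = {
    "IaaS": "virtual_network",
    "PaaS": "application",
    "SaaS": "data",
}


def responsibility(model: str, layer: str) -> str:
    """Return 'customer' or 'provider' for a layer under a given service model."""
    if model not in CUSTOMER_STARTS_AT:
        raise ValueError(f"unknown service model: {model}")
    boundary = LAYERS.index(CUSTOMER_STARTS_AT[model])
    # LAYERS.index raises ValueError for an unknown layer name.
    return "customer" if LAYERS.index(layer) >= boundary else "provider"


if __name__ == "__main__":
    for model in CUSTOMER_STARTS_AT:
        owned = [l for l in LAYERS if responsibility(model, l) == "customer"]
        print(f"{model}: customer secures {', '.join(owned)}")
```

Modeling the stack as an ordered list keeps the rule easy to audit: moving from IaaS to SaaS only moves the boundary upward.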

Cloud Data Security

Data security is the cornerstone of cloud protection. This domain focuses on safeguarding sensitive information throughout its lifecycle, including:

Data classification and protection strategies.

Implementing encryption for data at rest, in transit, and during processing.

Managing access controls and identity verification.

Ensuring data privacy and compliance with legal and regulatory frameworks.

A solid understanding of cloud data security allows professionals to minimize risks associated with data breaches and unauthorized access.
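Data classification is useful only if each level maps to concrete controls. The following is a minimal sketch, assuming a hypothetical four-level scheme in which each level inherits the controls of the levels below it; the level names and control names are illustrative, not an ISC² or regulatory baseline.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    # Hypothetical four-level classification scheme.
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Illustrative controls added at each level (assumed names, not a standard).
CONTROLS_BY_LEVEL = {
    Sensitivity.PUBLIC: {"integrity_checks"},
    Sensitivity.INTERNAL: {"authenticated_access"},
    Sensitivity.CONFIDENTIAL: {"encryption_at_rest", "encryption_in_transit"},
    Sensitivity.RESTRICTED: {"customer_managed_keys", "access_logging", "mfa"},
}


def required_controls(level: Sensitivity) -> set:
    """Union of the controls for this level and every lower level."""
    controls = set()
    for lvl in Sensitivity:
        if lvl <= level:
            controls |= CONTROLS_BY_LEVEL[lvl]
    return controls


def missing_controls(level: Sensitivity, applied) -> set:
    """Controls the data's classification requires that are not yet applied."""
    return required_controls(level) - set(applied)


if __name__ == "__main__":
    applied = {"integrity_checks", "authenticated_access", "encryption_at_rest"}
    print(missing_controls(Sensitivity.CONFIDENTIAL, applied))
```

Making the levels cumulative means a dataset reclassified upward automatically picks up every stricter control.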

Cloud Platform and Infrastructure Security

Securing cloud infrastructure is essential to protect applications and services. This domain covers topics such as:

Network security and segmentation.

Virtualization and container security.

Identity and access management (IAM).

Monitoring cloud environments for suspicious activity and vulnerabilities.

Cloud professionals learn how to implement security measures at the infrastructure level, ensuring that the underlying systems supporting applications are resilient against attacks.
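Network segmentation is often undermined by a single overly broad firewall rule. The sketch below uses a simplified, provider-neutral rule model (the `IngressRule` class and the list of sensitive ports are assumptions) to flag administrative or database ports that are reachable from any address.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class IngressRule:
    # Simplified, provider-neutral model of a security-group / firewall rule.
    port: int
    source_cidr: str


# Assumed set of ports that should never be open to the whole internet
# (SSH, RDP, MySQL, PostgreSQL).
SENSITIVE_PORTS = {22, 3389, 3306, 5432}


def is_internet_exposed(rule: IngressRule) -> bool:
    """True if the rule's source is 'any address' (0.0.0.0/0 or ::/0)."""
    network = ipaddress.ip_network(rule.source_cidr, strict=False)
    return network.prefixlen == 0


def risky_rules(rules):
    """Rules that expose a sensitive port to every address."""
    return [r for r in rules if r.port in SENSITIVE_PORTS and is_internet_exposed(r)]


if __name__ == "__main__":
    rules = [
        IngressRule(22, "0.0.0.0/0"),
        IngressRule(443, "0.0.0.0/0"),
        IngressRule(5432, "10.0.0.0/16"),
    ]
    for rule in risky_rules(rules):
        print("exposed:", rule)
```

Public HTTPS on port 443 is not flagged here because it is usually intended; the check targets ports that should only be reachable from internal segments.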

Cloud Application Security

Applications hosted in the cloud require specific security considerations. This domain addresses:

Secure software development practices, including DevSecOps integration.

Application vulnerability management and threat modeling.

API security and secure interactions between cloud services.

By understanding application-level security, professionals can protect cloud-based software from vulnerabilities and ensure continuous security throughout the development lifecycle.
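For secure interactions between cloud services, one common pattern is HMAC request signing with a timestamp. The sketch below assumes a hypothetical canonical string (method, path, timestamp, body) and a 300-second replay window; it is not any specific provider's signing scheme.

```python
import hashlib
import hmac
import time


def sign_request(secret: bytes, method: str, path: str, body: bytes, timestamp: int) -> str:
    """HMAC-SHA256 over an assumed canonical layout: method, path, timestamp, body."""
    message = f"{method}\n{path}\n{timestamp}\n".encode() + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_request(secret, method, path, body, timestamp, signature,
                   now=None, max_skew=300):
    """Reject stale requests (limits replay), then compare signatures in constant time."""
    now = int(time.time()) if now is None else now
    if abs(now - timestamp) > max_skew:
        return False
    expected = sign_request(secret, method, path, body, timestamp)
    return hmac.compare_digest(expected, signature)


if __name__ == "__main__":
    key = b"shared-secret-from-a-key-vault"  # hypothetical; never hard-code real keys
    ts = int(time.time())
    sig = sign_request(key, "POST", "/v1/orders", b'{"qty": 1}', ts)
    print(verify_request(key, "POST", "/v1/orders", b'{"qty": 1}', ts, sig))
```

`hmac.compare_digest` avoids leaking how many characters matched through timing differences, which a plain `==` comparison can do.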

Cloud Security Operations

Effective cloud security relies on robust operational practices. This domain emphasizes:

Incident response planning and execution.

Logging, monitoring, and auditing cloud environments.

Vulnerability assessment and remediation.

Configuration management and operational controls.

Cloud security operations ensure that threats are detected and mitigated promptly, minimizing potential damage and maintaining service reliability.
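A typical monitoring rule flags a source that produces many failed logins in a short time. The sketch below is a minimal sliding-window detector; the event tuple format, the threshold of five failures, and the five-minute window are assumptions chosen for illustration.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def detect_bruteforce(events, threshold=5, window=timedelta(minutes=5)):
    """events: iterable of (timestamp, source_ip, outcome), sorted by timestamp.

    Returns the set of source IPs with at least `threshold` failed logins
    inside any sliding window of length `window`.
    """
    recent = defaultdict(deque)  # source_ip -> timestamps of recent failures
    flagged = set()
    for ts, ip, outcome in events:
        if outcome != "failure":
            continue
        failures = recent[ip]
        failures.append(ts)
        # Drop failures that have fallen out of the window.
        while failures and ts - failures[0] > window:
            failures.popleft()
        if len(failures) >= threshold:
            flagged.add(ip)
    return flagged


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 12, 0)
    events = [(start + timedelta(seconds=30 * i), "203.0.113.7", "failure") for i in range(6)]
    print(detect_bruteforce(events))
```

In practice a rule like this would run inside a SIEM over normalized logs; the point here is the windowing logic.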

Legal, Risk, and Compliance

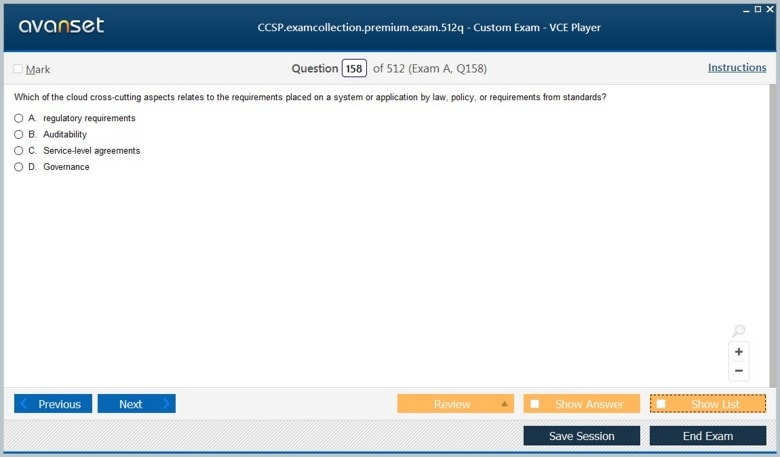

Cloud security is not just about technology—it also involves understanding legal and regulatory requirements. This domain covers:

Risk assessment and management strategies.

Compliance with laws and regulations across different regions.

Governance frameworks and contractual obligations with cloud service providers.

Professionals who master this domain can help their organizations meet legal and regulatory requirements while maintaining strong security postures.
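Risk assessment often includes a quantitative estimate of expected loss. A common formulation is SLE = AV × EF (single loss expectancy from asset value and exposure factor) and ALE = SLE × ARO (annualized loss expectancy from the annual rate of occurrence). The sketch below applies those formulas; all figures in the example are hypothetical.

```python
def single_loss_expectancy(asset_value: float, exposure_factor: float) -> float:
    """SLE = AV x EF, where EF is the fraction of the asset lost in one incident."""
    if not 0.0 <= exposure_factor <= 1.0:
        raise ValueError("exposure factor must be between 0 and 1")
    return asset_value * exposure_factor


def annualized_loss_expectancy(asset_value: float, exposure_factor: float,
                               annual_rate_of_occurrence: float) -> float:
    """ALE = SLE x ARO."""
    return single_loss_expectancy(asset_value, exposure_factor) * annual_rate_of_occurrence


def control_is_cost_effective(ale_before: float, ale_after: float,
                              annual_control_cost: float) -> bool:
    """A control pays off if the reduction in ALE exceeds its yearly cost."""
    return (ale_before - ale_after) > annual_control_cost


if __name__ == "__main__":
    # Hypothetical: a 500,000 data store, 25% exposed per incident,
    # one incident every two years, reduced to one every eight with a new control.
    before = annualized_loss_expectancy(500_000, 0.25, 0.5)
    after = annualized_loss_expectancy(500_000, 0.25, 0.125)
    print(before, after, control_is_cost_effective(before, after, 20_000))
```

The same comparison gives a simple, defensible way to justify (or reject) a proposed control to business stakeholders.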

Eligibility and Prerequisites

The CCSP certification requires a combination of experience and knowledge in both IT and cybersecurity. Candidates must have a minimum of five years of cumulative work experience in information technology, with at least three years focused on information security. Additionally, at least one year must involve hands-on experience in one or more of the six CCSP domains.

Candidates who pass the exam but do not yet meet the full experience requirement can become an Associate of ISC². Associates then have up to six years to earn the required work experience.
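The experience rules above can be expressed as a small check. This sketch encodes only the thresholds stated in this section (five years in IT, three in information security, one in a CCSP domain) and deliberately ignores any substitutions or waivers ISC² may allow, so treat it as an illustration rather than an eligibility tool.

```python
from dataclasses import dataclass


@dataclass
class Experience:
    it_years: float           # cumulative work experience in IT
    infosec_years: float      # portion of it_years spent in information security
    ccsp_domain_years: float  # portion spent in one or more of the six CCSP domains


def meets_experience_requirement(exp: Experience) -> bool:
    """Thresholds as described in this section; substitutions/waivers are not modeled."""
    if exp.infosec_years > exp.it_years:
        raise ValueError("information security years are a subset of IT years")
    return exp.it_years >= 5 and exp.infosec_years >= 3 and exp.ccsp_domain_years >= 1


def certification_outcome(passed_exam: bool, exp: Experience) -> str:
    """Simplified outcome after the exam, per the rules described above."""
    if not passed_exam:
        return "not certified"
    if meets_experience_requirement(exp):
        return "eligible for CCSP"
    return "Associate of ISC²"


if __name__ == "__main__":
    print(certification_outcome(True, Experience(4, 3, 1)))
```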

Exam Details

The CCSP exam is designed to evaluate both theoretical knowledge and practical skills in cloud security. Key information includes:

Format: Multiple-choice questions

Duration: Three hours

Number of Questions: Approximately 125

Passing Score: 700 out of 1000

The exam tests the candidate's ability to apply cloud security principles effectively, ensuring that certified professionals are capable of addressing real-world challenges in cloud environments.
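Time management is easier with checkpoints. Using the figures above (roughly 125 questions in three hours), the sketch below computes the average time per question and how many questions should be done at each quarter of the exam. The question count is approximate, so adjust the parameters if the official figure differs.

```python
def pacing_plan(total_minutes: int = 180, questions: int = 125, checkpoints: int = 4):
    """Return (minutes per question, [(minute mark, questions answered by then), ...])."""
    per_question = total_minutes / questions
    marks = [
        (total_minutes * i // checkpoints, questions * i // checkpoints)
        for i in range(1, checkpoints + 1)
    ]
    return per_question, marks


if __name__ == "__main__":
    per_q, marks = pacing_plan()
    print(f"{per_q:.2f} minutes per question")
    for minute, answered in marks:
        print(f"by minute {minute}: about {answered} questions")
```

Falling well behind a checkpoint is a signal to flag hard questions and move on rather than spend extra minutes on one item.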

Career Benefits of CCSP Certification

Earning the CCSP certification offers numerous advantages for career growth and professional development:

Enhanced Career Opportunities: CCSP holders are well positioned for senior roles such as cloud security architect, consultant, or cloud security manager.

Global Recognition: ISC² credentials are internationally recognized, providing credibility and validation of expertise.

Skill Validation: Certification demonstrates proficiency in securing cloud systems, applications, and data across multiple platforms.

Higher Earning Potential: Professionals with CCSP certification often command higher salaries due to their specialized expertise.

Professional Networking: Membership in ISC² connects individuals with a global community of cybersecurity professionals, offering collaboration and learning opportunities.

Preparing for the CCSP Exam

Proper preparation is essential to succeed in the CCSP exam. Professionals should focus on both theoretical understanding and practical application. Recommended steps include:

Reviewing the official CCSP exam guide and domain objectives.

Participating in training programs and bootcamps tailored to CCSP preparation.

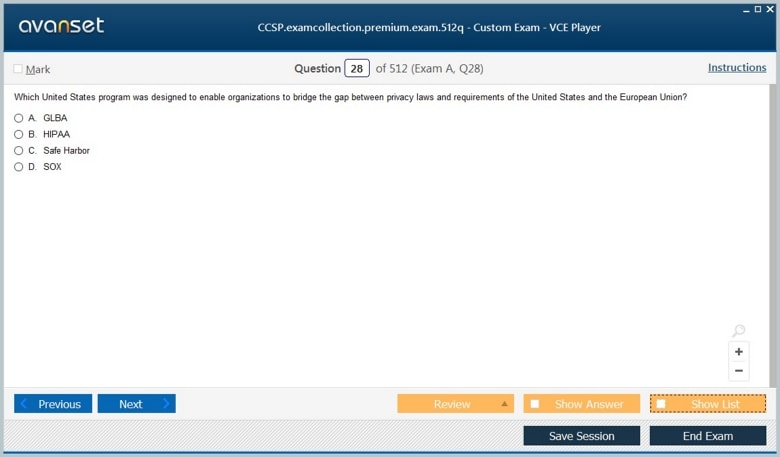

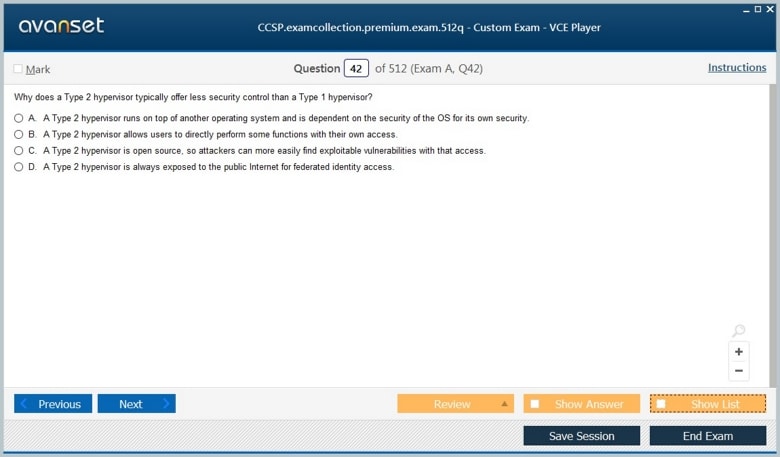

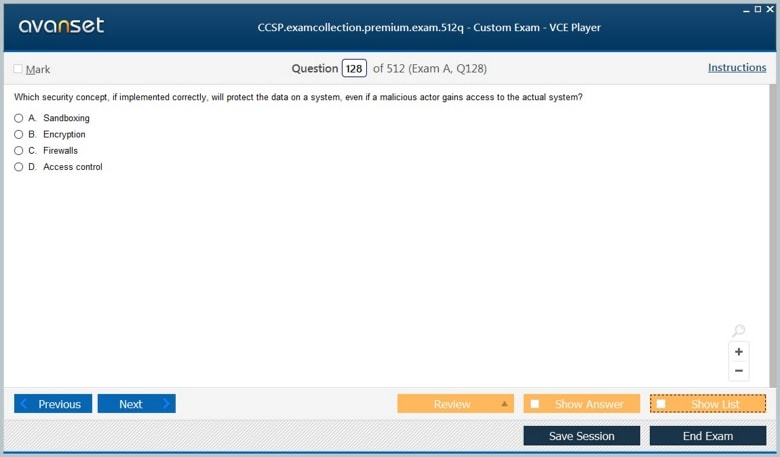

Using practice exams to become familiar with question formats and to practice time management.

Engaging in hands-on experience within cloud environments to reinforce practical skills.

Additionally, joining study groups or online communities can provide support and insights from other professionals pursuing the certification.

Maintaining CCSP Certification

CCSP holders must maintain their certification through Continuing Professional Education (CPE) credits and annual maintenance fees. This ensures that certified professionals remain up-to-date with evolving cloud technologies, emerging threats, and industry best practices. ISC² encourages ongoing learning to foster continuous development and enhance the overall effectiveness of cloud security practices.

As cloud adoption continues to accelerate, the demand for skilled cloud security professionals grows in parallel. The CCSP certification provides a clear path for IT and security professionals to validate their expertise in cloud security, offering both recognition and career advancement opportunities. By mastering the six core domains—cloud concepts, data security, infrastructure security, application security, security operations, and legal compliance—professionals can play a crucial role in safeguarding cloud environments, protecting sensitive data, and ensuring compliance with regulatory requirements.

For those seeking to establish themselves as experts in cloud security, the CCSP certification represents a strategic investment in knowledge, skills, and career potential. It empowers professionals to address the complex security challenges of modern cloud environments and positions them for success in a rapidly evolving digital landscape.

Advanced Overview of CCSP Domains

The Certified Cloud Security Professional (CCSP) certification emphasizes not only theoretical knowledge but also the practical ability to implement and manage cloud security measures. Building on the foundational understanding of cloud security, this stage focuses on advanced concepts within the six domains to prepare professionals for real-world responsibilities.

Cloud Concepts, Architecture, and Design

At a more advanced level, cloud concepts involve deep comprehension of architectural patterns and frameworks that support secure cloud deployment. Professionals must understand:

Multi-cloud and hybrid architectures and the security implications of interconnecting multiple platforms.

Principles of cloud-native design, including microservices, containerization, and serverless computing.

Best practices for secure network design in cloud environments, including segmentation, zero-trust architecture, and perimeter defense in virtualized networks.

Scaling distributed systems while preserving data protection and minimizing downtime.

This domain emphasizes aligning business objectives with secure cloud designs while addressing resilience and availability challenges.
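Zero-trust architecture means every request is evaluated on identity, device, and authorization, and network location alone grants nothing. The sketch below is a deliberately simplified policy decision function; the `AccessRequest` fields and the set of checks are assumptions for illustration, not a specific product's policy model.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    user_authenticated: bool
    mfa_passed: bool
    device_compliant: bool        # e.g. patched, disk-encrypted, managed device
    user_roles: frozenset
    resource_required_role: str
    network_zone: str             # recorded for logging only; location grants no trust


def zero_trust_decision(req: AccessRequest):
    """Return (allowed, failed_checks). Every check must pass on every request."""
    checks = {
        "authenticated": req.user_authenticated,
        "mfa": req.mfa_passed,
        "device_posture": req.device_compliant,
        "authorized": req.resource_required_role in req.user_roles,
    }
    failed = [name for name, ok in checks.items() if not ok]
    return (not failed, failed)


if __name__ == "__main__":
    # A request from the corporate LAN is still denied if the device fails posture checks.
    req = AccessRequest(True, True, False, frozenset({"analyst"}), "analyst", "corporate-lan")
    print(zero_trust_decision(req))
```

Returning the failed checks, not just a yes/no, makes denials explainable in audit logs.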

Cloud Data Security

Data security remains a cornerstone of cloud protection, and advanced strategies focus on comprehensive lifecycle management. Key elements include:

Implementing end-to-end encryption, tokenization, and key management strategies.

Understanding and applying data sovereignty rules, particularly when cloud data crosses international boundaries.

Designing secure access controls using identity federation, role-based access, and policy enforcement across multiple cloud environments.

Managing data retention, archival, and secure deletion to comply with regulations.

By mastering these techniques, professionals ensure that sensitive data remains protected under all circumstances, minimizing the risk of breaches or regulatory penalties.
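Tokenization replaces a sensitive value with a random surrogate, so downstream systems never hold the original. The sketch below is an in-memory illustration only: a real token vault would be an encrypted, access-controlled, audited store, and the `tok_` prefix is an arbitrary assumption.

```python
import secrets


class TokenVault:
    """Illustrative in-memory tokenization vault (not for production use)."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        """Return a random token for value; the same value always maps to the same token."""
        if value in self._value_to_token:
            return self._value_to_token[value]
        # Tokens are random, so they cannot be reversed without the vault.
        token = "tok_" + secrets.token_urlsafe(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        """Look up the original value; raises KeyError for unknown tokens."""
        return self._token_to_value[token]


if __name__ == "__main__":
    vault = TokenVault()
    token = vault.tokenize("123-45-6789")
    print(token, vault.detokenize(token))
```

Unlike encryption, a random token has no mathematical relationship to the original value, which is why access to the vault itself becomes the control that matters.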

Cloud Platform and Infrastructure Security

Advanced infrastructure security requires expertise in the inner workings of cloud services and platforms. Professionals focus on:

Security for virtualization layers, hypervisors, and container orchestration platforms such as Kubernetes.

Implementing automated monitoring and intrusion detection systems tailored for cloud environments.

Understanding cloud provider shared responsibility models and integrating internal security controls accordingly.

Threat modeling for infrastructure components, including edge devices, APIs, and storage systems.

This domain ensures that professionals can manage both the virtual and physical aspects of cloud infrastructure securely.
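For container orchestration, a first line of defense is rejecting workloads with risky security settings. The sketch below inspects a parsed Kubernetes Pod manifest (a Python dict) for a few real `securityContext` fields (`privileged`, `allowPrivilegeEscalation`, `runAsNonRoot`) and `hostNetwork`. It covers only a handful of checks; in a cluster, the Kubernetes Pod Security Standards or a policy engine would enforce rules like these at admission time.

```python
def audit_pod_spec(pod: dict) -> list:
    """Return human-readable findings for a parsed Pod manifest (subset of checks)."""
    findings = []
    spec = pod.get("spec", {})
    pod_sc = spec.get("securityContext", {})

    if spec.get("hostNetwork"):
        findings.append("pod: hostNetwork is enabled")

    for container in spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        sc = container.get("securityContext", {})
        if sc.get("privileged"):
            findings.append(f"{name}: runs privileged")
        # Not setting allowPrivilegeEscalation leaves escalation permitted.
        if sc.get("allowPrivilegeEscalation", True):
            findings.append(f"{name}: allowPrivilegeEscalation not disabled")
        # Container-level runAsNonRoot overrides the pod-level setting.
        if not sc.get("runAsNonRoot", pod_sc.get("runAsNonRoot")):
            findings.append(f"{name}: runAsNonRoot not set")
    return findings


if __name__ == "__main__":
    pod = {"spec": {"containers": [{"name": "debug", "securityContext": {"privileged": True}}]}}
    for finding in audit_pod_spec(pod):
        print(finding)
```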

Cloud Application Security

Securing cloud applications involves integrating security into every stage of development. Advanced knowledge includes:

Embedding security into the software development lifecycle (SDLC) and implementing DevSecOps practices.

Performing static and dynamic application security testing to identify vulnerabilities.

Protecting APIs, microservices, and serverless functions against attacks such as injection, cross-site scripting, and privilege escalation.

Applying continuous monitoring and automated remediation for application security issues.

By focusing on proactive application security measures, professionals reduce the likelihood of exploitable vulnerabilities in cloud-hosted software.
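Injection attacks are the classic example of a flaw that secure coding prevents. The sketch below uses Python's built-in `sqlite3` module and placeholder binding (`?`) so user input is always treated as data, never as SQL. The `users` table is a hypothetical example schema.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, username: str):
    """Look up a user with a parameterized query.

    The driver binds `username` as a value, so input such as "' OR '1'='1"
    cannot change the structure of the SQL statement.
    """
    cursor = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cursor.fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])
    print(find_user(conn, "alice"))
    print(find_user(conn, "' OR '1'='1"))  # injection attempt matches nothing
```

Building the query with string formatting instead (for example, an f-string around `username`) is exactly what lets an attacker rewrite the `WHERE` clause.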

Cloud Security Operations

Operational security in the cloud extends beyond traditional monitoring to encompass continuous assurance and automated responses. Critical components include:

Establishing incident response plans that are cloud-specific and tested across different environments.

Utilizing Security Information and Event Management (SIEM) systems to collect and analyze cloud logs.

Automating vulnerability management and patching to maintain secure configurations.

Conducting regular security audits and compliance checks to detect gaps in policies or procedures.

Advanced security operations require professionals to combine automation, monitoring, and governance to protect dynamic cloud environments effectively.
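Automated vulnerability management needs a consistent way to decide what to patch first. The sketch below maps scores to the CVSS v3.x qualitative severity scale (None 0.0, Low 0.1 to 3.9, Medium 4.0 to 6.9, High 7.0 to 8.9, Critical 9.0 to 10.0) and orders findings by score, breaking ties in favor of internet-facing assets. The finding format and the tie-break rule are assumptions.

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def prioritize(findings):
    """Sort findings (dicts with 'id', 'cvss', 'internet_facing') for remediation.

    Highest score first; among equal scores, internet-facing assets first
    (an assumed tie-break policy).
    """
    return sorted(findings, key=lambda f: (-f["cvss"], not f["internet_facing"]))


if __name__ == "__main__":
    findings = [
        {"id": "A", "cvss": 5.3, "internet_facing": True},
        {"id": "B", "cvss": 9.8, "internet_facing": False},
        {"id": "C", "cvss": 9.8, "internet_facing": True},
    ]
    for f in prioritize(findings):
        print(f["id"], cvss_severity(f["cvss"]))
```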

Legal, Risk, and Compliance

The regulatory and legal landscape is increasingly complex for cloud adoption. Advanced expertise includes:

Performing comprehensive risk assessments tailored to cloud architectures and workloads.

Interpreting international regulations and industry standards to guide cloud deployment strategies.

Drafting cloud security policies that address data protection, service provider accountability, and incident reporting.

Conducting compliance audits and maintaining documentation to demonstrate adherence to legal requirements.

A deep understanding of risk and compliance allows professionals to balance security needs with business objectives and regulatory obligations.

Exam Preparation Strategies

Passing the CCSP exam requires a combination of disciplined study, hands-on experience, and familiarity with the exam format. Key strategies include:

Structured Study Plans: Break down the exam domains into manageable sections, allocating focused study time for each.

Official ISC² Resources: Utilize exam guides, practice questions, and domain outlines provided by ISC² to ensure coverage of essential topics.

Hands-On Labs: Engage with cloud platforms such as AWS, Azure, and Google Cloud to apply security concepts in practice.

Simulated Exams: Take timed practice exams to build confidence, improve time management, and identify knowledge gaps.

Study Groups and Forums: Participate in professional communities to exchange insights, discuss challenging topics, and stay updated on best practices.

Effective preparation combines theoretical understanding with practical exposure to cloud environments, which reinforces learning and builds confidence for exam day.

Real-World Applications of CCSP Knowledge

CCSP certification equips professionals to address cloud security challenges across various industries. Real-world applications include:

Designing secure multi-cloud environments for enterprise workloads.

Implementing robust encryption and access management for financial and healthcare data.

Conducting cloud risk assessments and recommending remediation strategies for vulnerabilities.

Integrating automated security operations to detect and respond to threats proactively.

Advising organizations on compliance with data protection laws and industry standards.

Professionals with CCSP certification can directly influence the security posture of their organizations by applying knowledge in architecture, operations, and governance.

Career Paths and Opportunities

The CCSP certification opens doors to advanced cloud security roles, including:

Cloud Security Architect: Designing secure cloud infrastructures and ensuring resilience against threats.

Cloud Security Consultant: Advising organizations on best practices, compliance, and risk mitigation strategies.

Cloud Security Engineer: Implementing and managing cloud security controls, monitoring systems, and incident response processes.

Information Security Manager: Overseeing cloud security operations, compliance, and risk management across organizational units.

Compliance Analyst: Ensuring that cloud deployments adhere to legal, regulatory, and industry standards.

These roles are in high demand as organizations increasingly prioritize cloud security, and CCSP-certified professionals are recognized for their specialized expertise.

Benefits Beyond Certification

Beyond immediate career opportunities, CCSP certification provides ongoing professional benefits:

Professional Credibility: Globally recognized credential validates cloud security knowledge and skills.

Networking Opportunities: Access to ISC² communities and events for sharing knowledge and professional growth.

Continuous Learning: Maintaining certification requires ongoing education, keeping professionals updated on emerging threats, technologies, and practices.

Organizational Value: Certified professionals help organizations reduce risks, improve compliance, and enhance overall cloud security posture.

Competitive Advantage: The credential can strengthen a candidate's position in interviews, salary negotiations, and bids for project leadership.

These advantages highlight why CCSP is more than a credential—it is an investment in long-term career development and influence in the cybersecurity field.

Maintaining and Renewing CCSP Certification

Maintaining CCSP certification involves:

Earning a minimum number of Continuing Professional Education (CPE) credits annually.

Paying an annual maintenance fee to ISC².

Staying informed about changes in cloud security technologies, industry standards, and regulations.

This continuous learning requirement ensures that CCSP professionals remain capable of managing evolving threats and implementing current best practices. It reinforces the certification’s value and credibility in the fast-paced cybersecurity landscape.

The CCSP certification represents a comprehensive standard for cloud security expertise. Beyond technical knowledge, it emphasizes practical skills, operational excellence, and governance awareness. Professionals who earn this credential gain recognition, career advancement, and the ability to influence the security strategies of their organizations.

As cloud technologies continue to evolve, demand for skilled cloud security professionals is likely to keep growing. Mastery of CCSP domains equips professionals to meet these challenges head-on, ensuring secure, resilient, and compliant cloud environments. By combining advanced domain knowledge, hands-on experience, and a proactive approach to risk management, CCSP-certified professionals play a critical role in the modern digital landscape.

Strategic Approaches to CCSP Exam Preparation

Successfully achieving the CCSP certification requires more than basic study—it demands a strategic approach that blends conceptual understanding, hands-on experience, and exam-specific practice. Professionals must not only understand cloud security principles but also know how to apply them in real-world scenarios and under exam conditions.

Understanding the Exam Structure

The CCSP exam consists of approximately 125 multiple-choice questions to be completed within three hours. Candidates must achieve a passing score of 700 out of 1000. The exam is designed to test both theoretical knowledge and practical application across the six core domains:

Cloud Concepts, Architecture, and Design

Cloud Data Security

Cloud Platform and Infrastructure Security

Cloud Application Security

Cloud Security Operations

Legal, Risk, and Compliance

Understanding the weight and focus of each domain is critical for effective preparation. Candidates should allocate more study time to domains they find challenging, while maintaining proficiency across all areas.

Building a Study Plan

A structured study plan is essential for covering the extensive CCSP syllabus effectively. Key steps include:

Domain-Based Scheduling: Break down study sessions by domain, ensuring thorough coverage of each topic.

Goal Setting: Set daily or weekly learning objectives to track progress and maintain focus.

Review Cycles: Incorporate periodic reviews to reinforce memory retention and understanding.

Practice Questions: Include multiple practice exams and quizzes to familiarize yourself with the question format.

A disciplined study plan ensures comprehensive coverage of the exam topics and reduces the likelihood of last-minute cramming.
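The advice to spend more time on weaker domains while still covering every domain can be turned into a simple allocation rule. The sketch below splits study hours in proportion to each domain's exam weight multiplied by a self-assessed weakness factor. The equal weights in the example are placeholders (use the current official exam outline's weights), and the 1 to 5 confidence scale is an assumption.

```python
def allocate_hours(total_hours: float, weights: dict, confidence: dict) -> dict:
    """Split study hours across domains.

    weights:    domain -> exam weight (any positive numbers; only ratios matter)
    confidence: domain -> self-rating from 1 (weak) to 5 (strong)
    Each domain gets hours proportional to weight * (6 - confidence), so even a
    strong domain keeps a nonzero share of review time.
    """
    scores = {d: w * (6 - confidence[d]) for d, w in weights.items()}
    total = sum(scores.values())
    return {d: round(total_hours * s / total, 1) for d, s in scores.items()}


if __name__ == "__main__":
    domains = [
        "Cloud Concepts, Architecture, and Design",
        "Cloud Data Security",
        "Cloud Platform and Infrastructure Security",
        "Cloud Application Security",
        "Cloud Security Operations",
        "Legal, Risk, and Compliance",
    ]
    weights = {d: 1 for d in domains}  # placeholder: replace with official weights
    confidence = dict(zip(domains, [4, 3, 3, 2, 4, 1]))  # hypothetical self-ratings
    for domain, hours in allocate_hours(80, weights, confidence).items():
        print(f"{hours:5.1f} h  {domain}")
```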

Hands-On Experience

CCSP emphasizes practical application of cloud security principles. Hands-on experience is invaluable for both the exam and real-world scenarios. Professionals should:

Work with cloud platforms such as AWS, Microsoft Azure, or Google Cloud to gain practical insights.

Practice implementing encryption, identity and access management (IAM), and network security controls.

Simulate incident response scenarios, monitoring, and auditing tasks within cloud environments.

This experiential learning reinforces theoretical concepts, making it easier to apply knowledge during the exam and in professional settings.

Leveraging Official Study Resources

ISC² provides official study materials designed to align with the CCSP exam objectives. These include:

Exam guides detailing the six domains and key concepts.

Practice questions to assess understanding and readiness.

Online learning modules and webinars hosted by certified instructors.

Using official resources ensures that preparation is aligned with exam requirements and focuses on the most relevant topics.

Real-World Applications of CCSP Knowledge

Beyond exam preparation, CCSP-certified professionals apply their expertise to address complex cloud security challenges in organizations. Understanding real-world applications helps professionals translate theoretical knowledge into practical impact.

Designing Secure Cloud Architectures

Professionals use their knowledge to design cloud architectures that are secure, resilient, and scalable. Key considerations include:

Selecting appropriate cloud service models and deployment strategies.

Implementing secure network topologies with segmentation and firewall rules.

Incorporating redundancy and failover mechanisms to maintain high availability.

A well-designed architecture ensures that cloud systems can withstand potential threats while supporting business operations efficiently.
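Redundancy and failover decisions can be checked with basic availability arithmetic. Assuming component failures are independent, n redundant replicas each with availability a give 1 - (1 - a)^n, while components that must all work in sequence multiply their availabilities. The figures in the example are hypothetical.

```python
import math


def parallel_availability(a: float, n: int) -> float:
    """Availability of n independent redundant replicas (any one suffices)."""
    return 1 - (1 - a) ** n


def serial_availability(components) -> float:
    """Availability of a chain where every component must be up."""
    return math.prod(components)


def annual_downtime_hours(availability: float) -> float:
    """Expected downtime per (non-leap) year."""
    return (1 - availability) * 365 * 24


if __name__ == "__main__":
    web_tier = parallel_availability(0.99, 2)        # two load-balanced instances
    system = serial_availability([web_tier, 0.999])  # plus a single database
    print(f"web tier {web_tier:.4%}, system {system:.4%}")
    print(f"expected downtime: {annual_downtime_hours(system):.1f} h/year")
```

The example shows why redundancy must cover every tier: a highly available web tier in front of a single database is still limited by the database.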

Data Protection and Compliance

Data security is a central focus in cloud environments. CCSP-certified professionals implement measures to protect sensitive information, such as:

Encrypting data in transit and at rest.

Applying data classification and retention policies.

Ensuring compliance with GDPR, HIPAA, and industry-specific standards.

By prioritizing data protection, professionals mitigate the risk of breaches and maintain regulatory compliance.

Securing Cloud Infrastructure

Cloud infrastructure security involves protecting virtual machines, storage, networking, and services from unauthorized access and attacks. Professionals focus on:

Configuring access controls and identity management policies.

Monitoring systems for anomalies, vulnerabilities, or misconfigurations.

Implementing automated security controls and patch management processes.

Effective infrastructure security forms the foundation for safe and reliable cloud operations.
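Access control policies in many cloud IAM systems follow two rules: anything not explicitly allowed is denied, and an explicit deny overrides any allow. The sketch below implements that evaluation logic in simplified form; the `Statement` structure and the action and resource strings are hypothetical and do not reproduce any provider's exact policy semantics.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Statement:
    effect: str       # "Allow" or "Deny"
    actions: tuple    # glob patterns, e.g. "storage:Get*"
    resources: tuple  # glob patterns, e.g. "bucket/reports/*"


def is_allowed(statements, action: str, resource: str) -> bool:
    """Evaluate a request against policy statements.

    1. Collect statements whose action and resource patterns match.
    2. Any matching Deny wins (explicit deny).
    3. Otherwise allow only if some matching Allow exists (default deny).
    """
    matched = [
        s for s in statements
        if any(fnmatchcase(action, a) for a in s.actions)
        and any(fnmatchcase(resource, r) for r in s.resources)
    ]
    if any(s.effect == "Deny" for s in matched):
        return False
    return any(s.effect == "Allow" for s in matched)


if __name__ == "__main__":
    policy = [
        Statement("Allow", ("storage:Get*",), ("bucket/reports/*",)),
        Statement("Deny", ("storage:*",), ("bucket/reports/payroll/*",)),
    ]
    print(is_allowed(policy, "storage:GetObject", "bucket/reports/q1.pdf"))
    print(is_allowed(policy, "storage:GetObject", "bucket/reports/payroll/jan.csv"))
```

Default deny keeps least privilege the starting point: a permission missing from the policy fails closed rather than open.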

Application Security in the Cloud

Cloud applications require specific security strategies due to their distributed nature. Professionals apply CCSP knowledge to:

Conduct vulnerability assessments and penetration testing.

Implement secure development practices and integrate security into DevOps processes.

Secure APIs, microservices, and serverless functions against potential threats.

Securing cloud applications ensures that user-facing systems and internal services remain protected from attacks.

Incident Response and Security Operations

CCSP-certified professionals develop operational strategies to detect and respond to threats efficiently. This includes:

Establishing incident response plans tailored for cloud environments.

Using monitoring and logging tools to identify suspicious activities.

Conducting forensic analysis to investigate incidents and prevent recurrence.

Robust operational security minimizes damage during incidents and strengthens organizational resilience.
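Forensic analysis depends on showing that evidence has not changed since collection. A common practice is to record a cryptographic hash when evidence is acquired and re-verify it before analysis. The sketch below does this with SHA-256; the log entry fields are assumptions, and a real chain-of-custody record would be stored in tamper-evident storage.

```python
import hashlib
import os
import tempfile
from datetime import datetime, timezone


def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large disk images do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def record_evidence(path: str, collected_by: str, custody_log: list) -> dict:
    """Append a custody entry with the file's hash at collection time."""
    entry = {
        "file": path,
        "sha256": sha256_file(path),
        "collected_by": collected_by,
        "collected_at": datetime.now(timezone.utc).isoformat(),
    }
    custody_log.append(entry)
    return entry


def verify_evidence(entry: dict) -> bool:
    """True if the file still matches the hash recorded at collection."""
    return sha256_file(entry["file"]) == entry["sha256"]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        path = os.path.join(workdir, "vm-snapshot.img")  # hypothetical evidence file
        with open(path, "wb") as f:
            f.write(b"snapshot bytes")
        log = []
        entry = record_evidence(path, "analyst-1", log)
        print(entry["sha256"], verify_evidence(entry))
```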

Case Studies and Practical Scenarios

Learning from real-world examples helps CCSP candidates understand how theoretical knowledge translates into practical action.

Financial Sector Cloud Security

In the financial sector, organizations face strict regulatory requirements and high-value data targets. CCSP-certified professionals contribute by:

Designing multi-layered encryption and access controls for sensitive financial records.

Ensuring compliance with standards such as PCI DSS and SOX.

Monitoring cloud infrastructure for anomalous transactions and potential insider threats.

These strategies reduce the likelihood of breaches and maintain customer trust.
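A routine PCI DSS control is masking card numbers (PANs) wherever they are displayed or logged. The sketch below shows only the last four digits, which stays within the PCI DSS display-masking limits, and includes a Luhn checksum that can help spot PAN-like strings when scanning logs. The example uses the widely published test number 4111 1111 1111 1111.

```python
def luhn_valid(number: str) -> bool:
    """Luhn checksum used by payment card numbers (non-digits are ignored)."""
    digits = [int(c) for c in number if c.isdigit()]
    if len(digits) < 12:
        return False
    total = 0
    # Double every second digit counting from the right.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def mask_pan(pan: str, visible: int = 4) -> str:
    """Replace all but the last `visible` digits with '*'."""
    digits = "".join(c for c in pan if c.isdigit())
    return "*" * (len(digits) - visible) + digits[-visible:]


if __name__ == "__main__":
    pan = "4111 1111 1111 1111"
    if luhn_valid(pan):
        print(mask_pan(pan))
```

A Luhn match alone does not prove a string is a real card number, so scanners usually combine it with length and prefix checks.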

Healthcare Cloud Deployments

Healthcare organizations handle vast amounts of patient data, requiring strict privacy measures. Professionals apply CCSP principles to:

Implement HIPAA-compliant security controls.

Ensure secure storage, transmission, and sharing of patient data.

Conduct regular risk assessments and audits to maintain compliance.

Effective cloud security protects both patients and organizational reputation.

Multi-Cloud Strategies

Organizations often use multiple cloud providers to optimize resources and redundancy. CCSP-certified professionals manage security by:

Ensuring consistent policies across cloud platforms.

Implementing centralized monitoring and logging solutions.

Managing identity federation and cross-platform access control.

Multi-cloud strategies require advanced knowledge of architecture, data protection, and compliance to mitigate risks effectively.

Common Challenges and Mitigation Strategies

Cloud security presents unique challenges that professionals must address proactively.

Misconfigurations

Misconfigured cloud resources are a common source of vulnerabilities. Professionals mitigate risks by:

Using automated configuration tools to detect and correct errors.

Implementing standardized templates and policies.

Conducting regular audits and security reviews.

Insider Threats

Employees or contractors can compromise security through malicious intent or accidental errors. Mitigation strategies include:

Role-based access controls and least privilege principles.

Continuous monitoring and anomaly detection.

Employee training and awareness programs.
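Continuous monitoring for insider threats often starts with a per-user baseline. The sketch below flags users whose download volume today is far above their own history, using a z-score. The data format, the three-standard-deviation threshold, and the minimum history length are assumptions; production systems typically use richer behavioral models.

```python
import statistics


def flag_anomalies(history: dict, today: dict, threshold: float = 3.0) -> dict:
    """Flag users whose volume today is unusually high relative to their baseline.

    history: user -> list of past daily volumes (e.g. MB downloaded)
    today:   user -> today's volume
    Returns user -> z-score for users at or above `threshold`.
    """
    flagged = {}
    for user, volume in today.items():
        past = history.get(user, [])
        if len(past) < 2:
            continue  # not enough history to form a baseline
        mean = statistics.mean(past)
        stdev = statistics.stdev(past)
        if stdev == 0:
            if volume > mean:
                flagged[user] = float("inf")  # any increase over a flat baseline
            continue
        z = (volume - mean) / stdev
        if z >= threshold:
            flagged[user] = round(z, 2)
    return flagged


if __name__ == "__main__":
    history = {"alice": [100, 110, 90, 105, 95], "bob": [50, 55, 45, 52, 48]}
    today = {"alice": 108, "bob": 400}
    print(flag_anomalies(history, today))
```

Comparing each user against their own baseline avoids flagging people whose roles legitimately involve large transfers.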

Compliance Complexity

Different regulations across regions can create compliance challenges. Professionals address this by:

Mapping applicable laws to organizational policies.

Implementing automated compliance checks.

Maintaining detailed documentation for audits.

Rapidly Evolving Threats

The cloud landscape evolves quickly, requiring constant vigilance. Mitigation involves:

Continuous education and certification maintenance.

Implementing adaptive security measures and threat intelligence solutions.

Regularly updating policies and procedures to reflect emerging risks.

Leveraging CCSP for Career Growth

The CCSP certification opens a range of opportunities for advancement in cloud security roles.

Cloud Security Architect: Leads the design and implementation of secure cloud infrastructures.

Security Consultant: Advises organizations on best practices, risk mitigation, and compliance strategies.

Cloud Security Engineer: Builds and maintains security controls, monitors systems, and responds to incidents.

Compliance and Risk Analyst: Ensures adherence to regulations and manages cloud-related risks.

IT Manager with Cloud Focus: Oversees cloud security operations, policy enforcement, and team development.

Professionals with CCSP certification often enjoy higher salaries, greater career mobility, and recognition for their specialized expertise in cloud security.

Maintaining Expertise and Continuous Learning

Achieving CCSP certification is just the beginning. Professionals must continue to build expertise through:

Earning Continuing Professional Education (CPE) credits annually.

Staying updated on emerging cloud technologies and threats.

Participating in professional communities and ISC² events.

Engaging in advanced cloud security projects to refine skills.

Ongoing learning ensures that professionals remain effective in protecting dynamic and complex cloud environments.

CCSP certification represents a significant achievement for cloud security professionals, demonstrating mastery of both conceptual knowledge and practical skills. The credential equips professionals to design secure architectures, protect data, manage infrastructure, and ensure compliance across cloud environments.

By strategically preparing for the exam, applying knowledge to real-world scenarios, and maintaining expertise through continuous learning, CCSP-certified professionals are well-positioned to advance their careers and contribute meaningfully to organizational cloud security strategies.

The combination of advanced knowledge, practical application, and professional recognition makes CCSP a valuable investment for anyone seeking to excel in the rapidly evolving field of cloud security.

Deep Dive into CCSP Domains: Practical Insights

The Certified Cloud Security Professional (CCSP) certification equips professionals with comprehensive knowledge across six critical domains. Understanding these domains in depth, along with their practical applications, is essential for securing cloud environments and advancing in a cloud security career.

Cloud Concepts, Architecture, and Design

This domain focuses on designing and implementing secure cloud infrastructures. Key practical insights include:

Architectural Principles: Professionals must understand multi-tiered architecture, microservices, and serverless computing, ensuring that security is integrated from the design phase.

Secure Deployment Models: Selecting the appropriate cloud deployment model—public, private, hybrid, or multi-cloud—is crucial. Security strategies must align with the chosen model, addressing potential vulnerabilities unique to each.

Resiliency and High Availability: Design considerations include redundancy, load balancing, and failover mechanisms. These measures ensure continuous operations even during system failures or attacks.

Security Patterns: Implement patterns such as zero-trust architecture and network segmentation to minimize the attack surface and enhance system integrity.

A strong grasp of these principles ensures cloud systems are both functional and secure from the outset.
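As a minimal sketch of the failover idea above, the Python below picks the highest-priority healthy endpoint from a redundant pool. The `Endpoint` class and the region names are hypothetical. The sketch also assumes health status is already known, for example from a load balancer's health checks. In practice, failover is usually handled by managed load balancers or DNS rather than by application code.

```python
from dataclasses import dataclass

@dataclass
class Endpoint:
    name: str        # hypothetical endpoint/region name
    healthy: bool    # assumed to come from an external health check
    priority: int    # lower value = preferred

def select_endpoint(endpoints: list[Endpoint]) -> Endpoint:
    """Active/passive failover: return the preferred endpoint that is still healthy."""
    candidates = [e for e in endpoints if e.healthy]
    if not candidates:
        raise RuntimeError("no healthy endpoint available")
    return min(candidates, key=lambda e: e.priority)

pool = [
    Endpoint("primary-region", healthy=False, priority=0),
    Endpoint("secondary-region", healthy=True, priority=1),
    Endpoint("tertiary-region", healthy=True, priority=2),
]
print(select_endpoint(pool).name)  # secondary-region
```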

Cloud Data Security

Protecting data is central to cloud security. Practical strategies for this domain include:

Data Classification and Handling: Categorize data based on sensitivity and apply appropriate controls for each category.

Encryption and Key Management: Implement encryption for data at rest and in transit. Use robust key management practices to prevent unauthorized access.

Access Control: Apply role-based access controls (RBAC) and identity federation to ensure only authorized personnel access sensitive data.

Data Privacy Compliance: Ensure that data handling practices comply with regulations such as GDPR and HIPAA and align with standards such as ISO/IEC 27001, mitigating legal and financial risks.

Effective data security protects organizational assets and maintains customer trust in cloud environments.
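The following sketch ties classification to access control. Each data label carries a minimum set of controls, and a role may read data only up to its clearance. The labels, roles, and control names are illustrative assumptions, not a standard scheme. Real deployments would express the same rules in the cloud provider's IAM policies.

```python
from enum import IntEnum

class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3

# Hypothetical role-to-clearance mapping; a real one comes from policy.
ROLE_CLEARANCE = {
    "contractor": Sensitivity.INTERNAL,
    "analyst": Sensitivity.CONFIDENTIAL,
    "dpo": Sensitivity.RESTRICTED,
}

# Hypothetical minimum controls required for each classification level.
REQUIRED_CONTROLS = {
    Sensitivity.PUBLIC: set(),
    Sensitivity.INTERNAL: {"encrypt_in_transit"},
    Sensitivity.CONFIDENTIAL: {"encrypt_in_transit", "encrypt_at_rest"},
    Sensitivity.RESTRICTED: {"encrypt_in_transit", "encrypt_at_rest", "access_logging"},
}

def can_read(role: str, label: Sensitivity) -> bool:
    """RBAC check with deny-by-default: unknown roles get no access."""
    clearance = ROLE_CLEARANCE.get(role)
    return clearance is not None and clearance >= label

def missing_controls(label: Sensitivity, applied: set[str]) -> set[str]:
    """Controls the label requires that are not yet applied to the data store."""
    return REQUIRED_CONTROLS[label] - applied

print(can_read("analyst", Sensitivity.CONFIDENTIAL))    # True
print(can_read("contractor", Sensitivity.RESTRICTED))   # False
```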

Cloud Platform and Infrastructure Security

Securing the underlying infrastructure of cloud services is critical. Practical applications include:

Virtualization Security: Protect hypervisors and virtual machines against attacks. Ensure isolation between tenants in multi-tenant environments.

Container and Orchestration Security: Implement security measures for containers, container registries, and orchestration platforms like Kubernetes.

Network Security Controls: Use firewalls, segmentation, and intrusion detection systems to monitor and protect traffic.

Automation and Monitoring: Employ automated tools for vulnerability scanning, configuration management, and continuous monitoring.

By securing the infrastructure, professionals create a strong foundation for the overall cloud environment.
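One common automated check in this area flags firewall or security-group rules that open administrative or database ports to every address. The sketch below assumes a hypothetical, provider-neutral rule format of `(direction, protocol, port, source_cidr)` and an example list of sensitive ports. Real scanners read the rules from each provider's API.

```python
import ipaddress

# Hypothetical rule format: (direction, protocol, port, source CIDR).
RULES = [
    ("ingress", "tcp", 443, "0.0.0.0/0"),
    ("ingress", "tcp", 22, "0.0.0.0/0"),
    ("ingress", "tcp", 5432, "10.0.0.0/16"),
]

# Example admin/database ports that should not be reachable from anywhere.
SENSITIVE_PORTS = {22, 3389, 3306, 5432}

def is_any_source(cidr: str) -> bool:
    """True for 0.0.0.0/0 or ::/0, i.e. the rule admits every address."""
    return ipaddress.ip_network(cidr).prefixlen == 0

def find_exposed_sensitive_ports(rules):
    """Return (protocol, port, source) for ingress rules exposing sensitive ports to all."""
    return [
        (proto, port, src)
        for direction, proto, port, src in rules
        if direction == "ingress" and port in SENSITIVE_PORTS and is_any_source(src)
    ]

print(find_exposed_sensitive_ports(RULES))  # [('tcp', 22, '0.0.0.0/0')]
```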

Cloud Application Security

Applications deployed in the cloud require specific security practices. Key practical considerations include:

Secure Development Practices: Integrate security into the software development lifecycle (SDLC) and adopt DevSecOps practices to reduce vulnerabilities.

API Security: Protect APIs against injection attacks, excessive data exposure, and unauthorized access.

Threat Modeling and Testing: Perform vulnerability assessments, penetration testing, and threat modeling to identify and mitigate risks.

Continuous Monitoring: Implement tools that continuously monitor applications for vulnerabilities and respond to incidents promptly.

Securing applications ensures that both internal services and user-facing software are resilient against evolving threats.
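The following sketch illustrates two of the API risks named above: injection and excessive data exposure. It uses Python's built-in `sqlite3` module as a stand-in for a cloud database. The table and the `find_user` helper are hypothetical. The technique shown is the parameterized query, which passes user input as data rather than as SQL text.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO users (name, email) VALUES (?, ?)",
    [("alice", "alice@example.com"), ("bob", "bob@example.com")],
)

def find_user(name: str):
    # The ? placeholder binds `name` as a value, so input such as
    # "x' OR '1'='1" cannot change the structure of the query.
    row = conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchone()
    # Return only the fields the caller needs (limits excessive data exposure).
    return None if row is None else {"id": row[0], "name": row[1]}

print(find_user("alice"))          # {'id': 1, 'name': 'alice'}
print(find_user("x' OR '1'='1"))   # None
```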

Cloud Security Operations

Operational security is vital to maintain a secure cloud environment. Practical strategies include:

Incident Response: Develop cloud-specific incident response plans and conduct regular drills to ensure readiness.

Monitoring and Logging: Collect and analyze logs from all cloud components to detect unusual activities or potential breaches.

Vulnerability Management: Implement patching schedules, automated vulnerability scanning, and remediation processes.

Security Metrics and Reporting: Use key performance indicators (KPIs) to measure the effectiveness of security operations and identify areas for improvement.

Operational security ensures threats are detected and addressed promptly, reducing potential damage and downtime.
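A typical log-analysis rule detects repeated failed logins from one source. The sketch below keeps a sliding window per source. The event format, the 60-second window, and the threshold of five failures are assumptions chosen for illustration. In practice, rules like this usually run inside a SIEM. The IP addresses are from documentation-reserved ranges.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 60  # assumed detection window
THRESHOLD = 5        # assumed number of failures that triggers an alert

def detect_bruteforce(events):
    """events: (timestamp_seconds, source_ip, outcome) tuples in time order.
    Returns source IPs with at least THRESHOLD failures inside WINDOW_SECONDS."""
    recent = defaultdict(deque)
    flagged = set()
    for ts, ip, outcome in events:
        if outcome != "FAILURE":
            continue
        window = recent[ip]
        window.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while window and ts - window[0] > WINDOW_SECONDS:
            window.popleft()
        if len(window) >= THRESHOLD:
            flagged.add(ip)
    return flagged

events = [(i * 5, "203.0.113.7", "FAILURE") for i in range(6)]
events += [(10, "198.51.100.2", "FAILURE"), (20, "198.51.100.2", "SUCCESS")]
print(detect_bruteforce(sorted(events)))  # {'203.0.113.7'}
```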

Legal, Risk, and Compliance

Cloud security also involves understanding legal, regulatory, and governance requirements. Practical applications include:

Risk Assessment: Conduct regular assessments to identify vulnerabilities, potential impacts, and likelihood of cloud-specific threats.

Policy Development: Develop security policies, standards, and procedures that align with organizational objectives and regulatory requirements.

Compliance Monitoring: Ensure ongoing compliance with local and international regulations. This may include maintaining audit logs, documentation, and reporting mechanisms.

Third-Party Risk Management: Assess the security practices of cloud service providers and partners to mitigate supply chain risks.

Mastering this domain allows professionals to balance security, operational efficiency, and compliance obligations effectively.
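Compliance monitoring often depends on audit logs that can be shown to be unaltered. One technique is a hash chain: each entry's SHA-256 digest covers the previous entry's digest, so editing any earlier record breaks verification. This is a minimal sketch with hypothetical `append_entry` and `verify_chain` helpers. A chain is tamper-evident rather than tamper-proof, so the latest hash would normally also be stored somewhere the log writer cannot modify.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(prev_hash: str, event: dict) -> str:
    # sort_keys gives a stable serialization, so the same event always hashes the same way.
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode()).hexdigest()

def append_entry(log: list, event: dict) -> None:
    """Append an event whose hash also covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)})

def verify_chain(log: list) -> bool:
    """Recompute every link; any edited, removed, or reordered entry fails."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True

log = []
append_entry(log, {"user": "alice", "action": "read", "object": "report.pdf"})
append_entry(log, {"user": "bob", "action": "export", "object": "customers.csv"})
print(verify_chain(log))           # True
log[0]["event"]["action"] = "delete"
print(verify_chain(log))           # False
```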

Implementation Strategies for Cloud Security

Effective cloud security requires more than theoretical knowledge; it demands practical implementation strategies. Professionals can use the following approaches to enhance cloud security:

Layered Security Approach

Also known as defense-in-depth, a layered approach ensures that multiple controls protect cloud resources:

Perimeter Security: Firewalls, intrusion prevention systems, and network segmentation.

Data Protection: Encryption, tokenization, and access control policies.

Application Security: Secure coding, regular vulnerability assessments, and monitoring.

Operational Security: Logging, incident response, and continuous auditing.

With a layered strategy, the failure or bypass of any single control does not leave resources exposed, which enhances overall resilience.
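The layered idea can be sketched as a series of independent checks, all of which a request must pass. The three layers, the `10.0.0.0/8` internal range, and the request fields below are hypothetical. The point is that passing the perimeter check alone does not grant access.

```python
import ipaddress
from typing import Callable

INTERNAL_NETWORK = ipaddress.ip_network("10.0.0.0/8")  # assumed internal range

def perimeter(req: dict) -> bool:
    # Layer 1: only traffic from the assumed internal range gets through.
    return ipaddress.ip_address(req["src_ip"]) in INTERNAL_NETWORK

def identity(req: dict) -> bool:
    # Layer 2: a valid token alone is not enough; MFA is also required.
    return bool(req.get("token_valid")) and bool(req.get("mfa"))

def data_access(req: dict) -> bool:
    # Layer 3: only specific roles may touch the protected data.
    return req.get("role") in {"analyst", "admin"}

LAYERS: list[tuple[str, Callable[[dict], bool]]] = [
    ("perimeter", perimeter),
    ("identity", identity),
    ("data_access", data_access),
]

def authorize(req: dict) -> tuple[bool, str]:
    """Every layer must pass; report the first layer that blocks the request."""
    for name, check in LAYERS:
        if not check(req):
            return False, name
    return True, "allowed"

print(authorize({"src_ip": "10.1.2.3", "token_valid": True, "mfa": False, "role": "admin"}))
# (False, 'identity')
print(authorize({"src_ip": "10.1.2.3", "token_valid": True, "mfa": True, "role": "analyst"}))
# (True, 'allowed')
```

In the first call, a request from an internal address with a valid token but no MFA is still stopped at the identity layer.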

Automation and Orchestration

Automating cloud security tasks increases efficiency and reduces human error. Key areas include:

Automated vulnerability scanning and patching.

Continuous configuration monitoring for compliance.

Security orchestration, automation, and response (SOAR) tools to streamline incident handling.

Automation ensures consistent application of security controls and rapid response to threats.
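Continuous configuration monitoring can be reduced to comparing each resource's actual settings against an approved baseline. The setting names and baseline values here are hypothetical placeholders. In practice, the baseline would come from an internal standard or a published benchmark, and any detected drift would feed an alert or an automated remediation step.

```python
# Hypothetical approved baseline for one class of storage resource.
BASELINE = {
    "storage_public_access": False,
    "encryption_at_rest": True,
    "logging_enabled": True,
    "tls_min_version": "1.2",
}

def detect_drift(actual: dict, baseline: dict = BASELINE) -> dict:
    """Return {setting: (expected, actual)} for every setting that deviates.
    A setting missing from `actual` is reported with an actual value of None."""
    return {
        key: (expected, actual.get(key))
        for key, expected in baseline.items()
        if actual.get(key) != expected
    }

current = {
    "storage_public_access": True,
    "encryption_at_rest": True,
    "logging_enabled": True,
    "tls_min_version": "1.0",
}
print(detect_drift(current))
# {'storage_public_access': (False, True), 'tls_min_version': ('1.2', '1.0')}
```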

Continuous Monitoring and Threat Intelligence

Monitoring cloud environments in real time is essential to detect and mitigate risks promptly. Best practices include:

Implementing Security Information and Event Management (SIEM) systems.

Using cloud-native monitoring tools to track performance and anomalies.

Integrating threat intelligence feeds to anticipate emerging risks.

Proactive monitoring allows organizations to respond quickly and minimize potential impact.
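Integrating a threat intelligence feed often amounts to matching observed addresses against published indicators of compromise. The sketch assumes the feed is a simple list of CIDR ranges. Real feeds are often exchanged in formats such as STIX over TAXII. The sketch uses Python's standard `ipaddress` module, and all addresses are from documentation-reserved ranges.

```python
import ipaddress

# Hypothetical indicator feed expressed as CIDR ranges.
IOC_FEED = ["203.0.113.0/24", "198.51.100.23/32"]
IOC_NETWORKS = [ipaddress.ip_network(c) for c in IOC_FEED]

def match_iocs(log_ips):
    """Return the observed addresses that fall inside any indicator range."""
    hits = []
    for ip in log_ips:
        addr = ipaddress.ip_address(ip)
        if any(addr in net for net in IOC_NETWORKS):
            hits.append(ip)
    return hits

print(match_iocs(["10.0.0.5", "203.0.113.77", "198.51.100.24"]))  # ['203.0.113.77']
```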

Risk-Based Approach

Prioritizing security measures based on risk assessments ensures resources are allocated effectively. Key steps include:

Identifying critical assets and data.

Assessing potential threats and vulnerabilities.

Implementing controls that address high-risk areas first.

A risk-based approach maximizes security effectiveness while optimizing operational efficiency.
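A common qualitative way to apply these steps is to score each risk as likelihood multiplied by impact, then address the highest scores first. The 1 to 5 scales and the sample register entries below are assumptions for illustration only.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    asset: str
    threat: str
    likelihood: int  # assumed ordinal scale: 1 (rare) .. 5 (almost certain)
    impact: int      # assumed ordinal scale: 1 (negligible) .. 5 (severe)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

register = [
    Risk("customer database", "credential stuffing", likelihood=4, impact=5),
    Risk("marketing site", "defacement", likelihood=3, impact=2),
    Risk("storage bucket", "public misconfiguration", likelihood=3, impact=5),
]

# Highest score first: these are the risks to treat before the others.
ranked = sorted(register, key=lambda r: r.score, reverse=True)
for r in ranked:
    print(f"{r.score:>2}  {r.asset}: {r.threat}")
```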

Challenges in Cloud Security and Mitigation

Cloud security comes with unique challenges that require careful planning and execution. Common challenges include:

Shared Responsibility Model

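Cloud providers and customers share responsibility for security, and the split shifts with the service model. Under IaaS, the customer manages more of the stack (operating systems, applications, and data). Under SaaS, the provider manages most layers. In all models, customers generally remain responsible for their data and for managing user access. Professionals must understand their obligations for data, applications, and infrastructure, because a clear delineation of responsibilities helps prevent security gaps.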

Rapid Cloud Adoption

Fast migration to the cloud may lead to misconfigurations and overlooked vulnerabilities. Address this by implementing automated compliance checks and security audits throughout the migration process.

Insider Threats

Insider risks are significant in cloud environments due to privileged access. Mitigation strategies include strict access controls, monitoring, and employee awareness programs.

Regulatory Complexity

Multiple regulations across regions complicate compliance. Organizations must align internal policies with international standards and maintain thorough documentation for audits.

Leveraging CCSP Certification for Career Advancement

CCSP certification provides professionals with specialized expertise, positioning them for advanced roles in cloud security. Career opportunities include:

Cloud Security Architect: Designs and implements secure cloud systems.

Security Consultant: Advises organizations on cloud security best practices.

Cloud Security Engineer: Deploys and manages security controls and monitors cloud environments.

Compliance Analyst: Ensures adherence to laws, regulations, and industry standards.

IT Security Manager: Oversees teams managing cloud security operations and policies.

Professionals with CCSP credentials are often recognized for specialized expertise, which can support higher compensation and access to leadership roles.

Maintaining Certification and Continuous Learning

Continuous learning is essential for sustaining CCSP expertise. Professionals maintain their certification by:

Earning required Continuing Professional Education (CPE) credits.

Staying updated with emerging cloud technologies, threats, and best practices.

Participating in ISC² events, webinars, and professional communities.

Applying skills in real-world projects to maintain practical proficiency.

This ensures that certified professionals remain effective in protecting evolving cloud environments.

CCSP certification is more than a credential—it is a comprehensive framework for mastering cloud security. By understanding each domain deeply, applying practical strategies, and addressing real-world challenges, professionals can secure cloud environments, manage risks, and support organizational objectives.

The certification equips professionals with advanced skills in architecture, data protection, application security, infrastructure security, operations, and compliance. It empowers them to implement robust, resilient, and compliant cloud systems while advancing their careers in a highly competitive field.

CCSP-certified professionals play a pivotal role in shaping secure cloud strategies, ensuring that organizations remain protected against evolving threats while leveraging the full potential of cloud computing.

Emerging Trends in Cloud Security

Cloud security is an evolving field, and staying ahead of trends is critical for CCSP-certified professionals. Emerging technologies and shifting threat landscapes require adaptive strategies.

Zero-Trust Architecture

Zero-trust models are increasingly adopted for cloud security. Traditional perimeter-based security trusts whatever is inside the network. Zero trust instead assumes that no entity, whether inside or outside the network, is inherently trusted. Implementation involves:

Verifying every access request through multi-factor authentication and contextual analysis.

Continuously monitoring user behavior and access patterns.

Segmenting networks and restricting lateral movement to minimize potential breaches.

Zero-trust architecture reduces exposure to insider threats and external attacks in increasingly complex cloud environments.
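A zero-trust decision can be sketched as a function evaluated on every request, using identity, device, and context signals rather than network location. The fields, the entitlement table, and the `step_up` outcome below are hypothetical. Production systems obtain these signals from an identity provider and device-management tooling.

```python
from dataclasses import dataclass

@dataclass
class AccessRequest:
    user: str
    mfa_verified: bool
    device_compliant: bool
    country: str
    resource: str

# Hypothetical policy inputs; real systems pull these from an IdP and device management.
ENTITLEMENTS = {"alice": {"payroll-api"}, "bob": {"wiki"}}
USUAL_COUNTRIES = {"alice": {"DE"}, "bob": {"US"}}

def evaluate(req: AccessRequest) -> str:
    """Evaluate each request on its own merits; network location grants nothing."""
    if not req.mfa_verified or not req.device_compliant:
        return "deny"
    if req.resource not in ENTITLEMENTS.get(req.user, set()):
        return "deny"      # least privilege: only explicitly entitled resources
    if req.country not in USUAL_COUNTRIES.get(req.user, set()):
        return "step_up"   # unusual context: ask for re-authentication
    return "allow"

print(evaluate(AccessRequest("alice", True, True, "DE", "payroll-api")))  # allow
print(evaluate(AccessRequest("alice", True, True, "BR", "payroll-api")))  # step_up
print(evaluate(AccessRequest("bob", True, True, "US", "payroll-api")))    # deny
```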

Cloud-Native Security Tools

Cloud-native security tools provide organizations with integrated monitoring, protection, and automation capabilities. These tools enable:

Real-time detection of vulnerabilities and threats.

Automated compliance reporting and policy enforcement.

Integration with DevSecOps pipelines to ensure security is embedded in software development.

Adopting cloud-native tools helps organizations maintain visibility and control over dynamic cloud resources.

Artificial Intelligence and Machine Learning

AI and machine learning are transforming cloud security by enabling predictive threat detection and automated responses. Applications include:

Identifying anomalous activity patterns that may indicate security breaches.

Automating responses to common threats, such as malware or misconfigurations.

Enhancing vulnerability assessments by analyzing large datasets to prioritize remediation.

AI-driven solutions allow professionals to respond faster and more accurately to emerging risks.
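Production systems use trained models, but the underlying idea of flagging deviations from a learned baseline can be shown with a simple statistical stand-in: a z-score test on a user's hourly download volume. This is explicitly not machine learning. The data, the threshold of three standard deviations, and the `zscore_anomalies` helper are assumptions for illustration.

```python
from statistics import mean, stdev

def zscore_anomalies(history: list[float], observations: dict[str, float],
                     threshold: float = 3.0) -> set[str]:
    """Flag observations more than `threshold` standard deviations from the historical mean."""
    mu, sigma = mean(history), stdev(history)  # stdev needs at least two data points
    if sigma == 0:
        # Perfectly flat history: anything different from it is unusual.
        return {k for k, v in observations.items() if v != mu}
    return {k for k, v in observations.items() if abs(v - mu) / sigma > threshold}

# Hypothetical MB downloaded per hour by one user account.
history = [120, 135, 128, 140, 118, 132, 125, 130]
new_hours = {"09:00": 129, "10:00": 131, "02:00": 2400}
print(zscore_anomalies(history, new_hours))  # {'02:00'}
```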

Multi-Cloud and Hybrid Environments

Organizations increasingly adopt multi-cloud and hybrid strategies to optimize costs, redundancy, and performance. This trend introduces challenges for CCSP professionals:

Ensuring consistent security policies across different cloud providers.

Implementing unified monitoring and logging to detect cross-platform threats.

Managing identity and access controls for distributed resources.

Mastering multi-cloud security is essential for organizations seeking flexibility without compromising protection.

Advanced Applications of CCSP Knowledge

CCSP-certified professionals apply their expertise in complex scenarios to deliver tangible organizational value.

Secure Cloud Migration

Migrating workloads to the cloud requires detailed planning and security-focused execution. Professionals must:

Assess risks and define security requirements before migration.

Implement data encryption, identity management, and access controls during transition.

Conduct post-migration audits to verify security controls and compliance.

Successful cloud migration minimizes downtime, reduces vulnerabilities, and ensures continuity of operations.
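Post-migration audits typically include confirming that data arrived intact. The sketch below compares SHA-256 digests recorded before migration against the migrated copies. It uses local temporary directories as a stand-in for source and target storage, and `verify_migration` is a hypothetical helper. Against real object storage, the digests would be computed on downloaded objects or compared with provider-reported checksums where available.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path

def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def verify_migration(source_manifest: dict[str, str], target_dir: Path) -> list[str]:
    """Return relative paths that are missing or whose digest changed after migration."""
    problems = []
    for rel_path, expected in source_manifest.items():
        target = target_dir / rel_path
        if not target.is_file() or sha256_file(target) != expected:
            problems.append(rel_path)
    return problems

with tempfile.TemporaryDirectory() as src_dir, tempfile.TemporaryDirectory() as dst_dir:
    src, dst = Path(src_dir), Path(dst_dir)
    (src / "a.csv").write_text("id,name\n1,alice\n")
    (src / "b.csv").write_text("id,amount\n1,100\n")
    manifest = {p.name: sha256_file(p) for p in src.iterdir()}  # recorded before migration
    shutil.copy(src / "a.csv", dst / "a.csv")
    (dst / "b.csv").write_text("id,amount\n1,999\n")  # simulated corruption in transit
    result = verify_migration(manifest, dst)

print(result)  # ['b.csv']
```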

Cloud Risk Management

Effective risk management involves identifying, evaluating, and mitigating threats in the cloud. Key steps include:

Performing continuous risk assessments to identify potential threats.

Prioritizing mitigation efforts based on asset criticality and potential impact.

Maintaining detailed risk registers and reporting to executive leadership.

A proactive risk management approach helps organizations reduce exposure and maintain trust.

Security Automation

Automation reduces human error and improves response times in cloud security operations. CCSP professionals can:

Implement automated vulnerability scanning and patch management.

Use orchestration tools to enforce security policies consistently.

Integrate security automation with incident response workflows for faster mitigation.

Automation increases efficiency and ensures security controls are consistently applied across cloud environments.

Regulatory Compliance

Regulatory pressures continue to grow, and organizations must maintain strict compliance. Professionals apply CCSP knowledge to:

Interpret and implement regulations such as GDPR, HIPAA, and industry-specific standards.

Conduct audits and maintain documentation to demonstrate adherence.

Develop policies and training programs to ensure organizational compliance.

Compliance expertise minimizes legal and financial risks while maintaining customer confidence.

Career Planning for CCSP Professionals

CCSP certification opens doors to advanced roles in cloud security. Career planning involves strategic skill development and positioning.

Technical Career Paths

Professionals can pursue specialized technical roles such as:

Cloud Security Architect: Designs secure cloud infrastructures and ensures scalability.

Cloud Security Engineer: Implements security controls, monitors environments, and responds to incidents.

Application Security Specialist: Focuses on securing cloud-based applications, APIs, and microservices.

These roles require continuous skill enhancement and hands-on experience with cloud platforms and security tools.

Management and Leadership Roles

CCSP-certified professionals are also suited for leadership positions, including:

Security Operations Manager: Oversees monitoring, incident response, and security teams.

IT Security Manager: Manages overall security strategy and cloud security programs.

Compliance and Risk Manager: Ensures regulatory adherence and manages organizational risk portfolios.

Leadership roles benefit from a combination of technical knowledge, strategic thinking, and communication skills.

Ongoing Professional Development

Maintaining CCSP certification requires continuous learning. Professionals should:

Earn Continuing Professional Education (CPE) credits through webinars, courses, and conferences.

Stay updated on emerging threats, technologies, and industry standards.

Participate in professional communities to exchange knowledge and experiences.

Ongoing professional development ensures CCSP-certified individuals remain competitive and effective in dynamic cloud environments.

Best Practices for Long-Term Success

CCSP-certified professionals can achieve lasting impact by implementing these best practices:

Adopt a proactive security mindset, anticipating threats rather than reacting to incidents.

Emphasize secure design and architecture from the beginning of projects.

Maintain clear documentation and audit trails to support compliance and governance.

Collaborate across teams to integrate security into all stages of cloud operations.

Continuously evaluate and update policies, tools, and strategies to adapt to new threats.

By embedding these practices into daily work, professionals enhance their organizations' security posture and reinforce their own credibility.

Real-World Impact of CCSP Expertise

CCSP certification enables professionals to influence organizational outcomes significantly:

Enhancing the security and resilience of cloud infrastructures.

Reducing the likelihood and impact of data breaches or cyberattacks.

Streamlining compliance efforts and minimizing regulatory risk.

Supporting secure innovation and enabling cloud adoption with confidence.

Mentoring teams and guiding strategic decisions related to cloud security.

This expertise positions CCSP-certified professionals as trusted advisors and strategic contributors in technology-driven organizations.

Preparing for the Future of Cloud Security

The cloud security landscape will continue to evolve rapidly. To remain effective, CCSP-certified professionals should:

Explore emerging areas such as post-quantum (quantum-resistant) cryptography and the security of AI workloads running in the cloud.

Develop skills in multi-cloud and hybrid management strategies.

Engage in research and thought leadership to anticipate trends and best practices.

Foster a culture of security awareness within their organizations.

Future-proofing skills ensures that CCSP-certified professionals maintain relevance and influence in a rapidly changing field.

Conclusion

The CCSP certification represents a commitment to mastering cloud security at a professional and strategic level. It equips individuals with the knowledge and practical skills required to design secure architectures, protect sensitive data, manage complex infrastructure, and ensure compliance across diverse cloud environments.

Beyond immediate technical skills, CCSP-certified professionals gain strategic insight, positioning them for advanced roles in architecture, engineering, operations, risk management, and leadership. By staying ahead of emerging trends, adopting best practices, and pursuing continuous professional development, these professionals can maximize their career potential and contribute meaningfully to organizational success.

In an era where cloud adoption is accelerating, the value of CCSP-certified professionals is clear. They are the architects, guardians, and strategists who ensure that organizations leverage the benefits of cloud computing securely and responsibly, paving the way for innovation and growth in the digital age.
