Pass Your Amazon AWS Certified Cloud Practitioner Exam Easily!

Amazon AWS Certified Cloud Practitioner Exam Questions & Answers, Accurate & Verified By IT Experts

Instant Download, Free Fast Updates, 99.6% Pass Rate

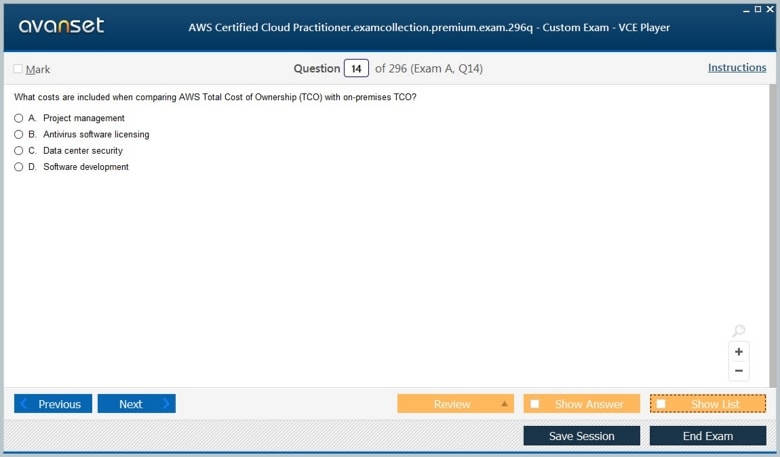

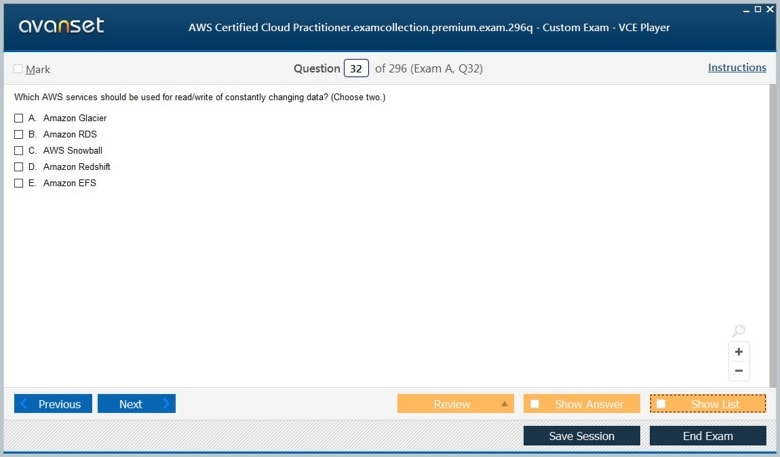

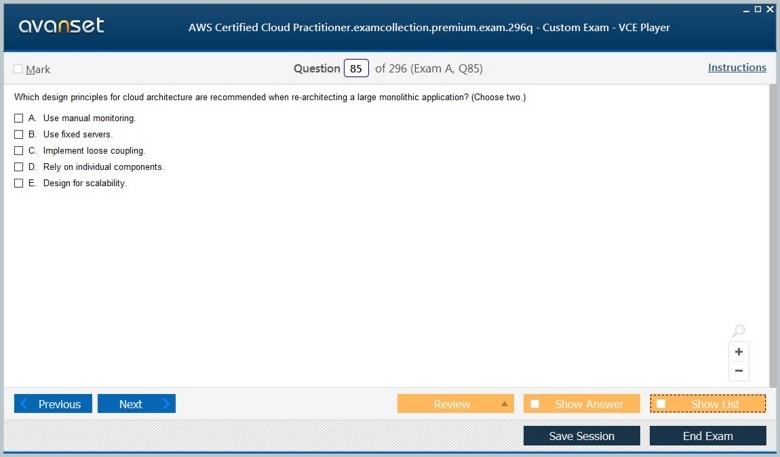

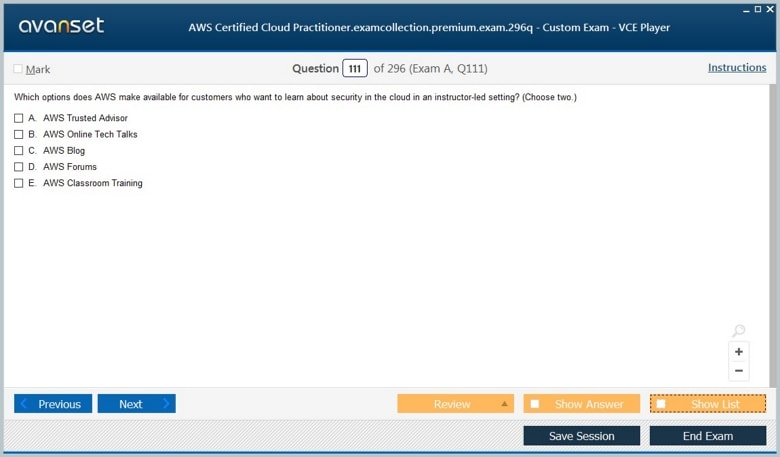

Amazon AWS Certified Cloud Practitioner Practice Test Questions in VCE Format

Amazon AWS Certified Cloud Practitioner Practice Test Questions, Exam Dumps

Amazon AWS Certified Cloud Practitioner (CLF-C01) exam dumps in VCE format, practice test questions, a study guide, and a video training course help you study and pass quickly and easily. You need the Avanset VCE Exam Simulator to study the Amazon AWS Certified Cloud Practitioner exam dumps and practice test questions in VCE format.


Passing the AWS Certified Cloud Practitioner exam requires a firm understanding of fundamental cloud concepts, such as deployment models, storage solutions, and the types of services offered by AWS. Cloud computing is transforming the way businesses operate, enabling flexibility, scalability, and cost efficiency. Beginners often start by exploring core services like Amazon EC2 for compute resources, S3 for storage, and RDS for managed databases. Developing hands-on experience reinforces learning and helps with exam retention. Efficiently managing large amounts of information in cloud environments is essential, much like converting MSG files in bulk when handling enterprise email systems. By understanding how data flows and is stored, candidates gain insight into cloud storage best practices and learn how to apply similar concepts in AWS services like S3 and S3 Glacier.

In addition, foundational cloud knowledge includes understanding key benefits such as elasticity, high availability, and disaster recovery. These are often tested in scenario-based questions. A candidate should be able to identify which AWS service is best for a specific use case—for example, when to use S3 for long-term storage versus EBS for high-performance block storage. Combining theoretical knowledge with practical exercises ensures you’re well-prepared for the exam.
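The service-selection reasoning above can be practiced with a small study aid. The sketch below is only an illustration: the `Workload` categories and the `recommend_storage` helper are hypothetical names invented for this example, not part of any AWS API, and the mapping covers only the services named in this guide.

```python
from enum import Enum


class Workload(Enum):
    """Hypothetical workload categories used only for this study aid."""
    OBJECT_GENERAL = "durable object storage reachable over HTTP"
    OBJECT_ARCHIVE = "rarely accessed long-term archive"
    BLOCK_VOLUME = "low-latency disk attached to an EC2 instance"


# Assumed mapping for exam practice; real designs weigh cost, latency, and access patterns.
RECOMMENDED = {
    Workload.OBJECT_GENERAL: "Amazon S3",
    Workload.OBJECT_ARCHIVE: "S3 Glacier",
    Workload.BLOCK_VOLUME: "Amazon EBS",
}


def recommend_storage(workload: Workload) -> str:
    """Return the storage service this study aid associates with a workload."""
    return RECOMMENDED[workload]


if __name__ == "__main__":
    for workload in Workload:
        print(f"{workload.value}: {recommend_storage(workload)}")
```

Quizzing yourself against a table like this is a quick way to rehearse scenario questions before attempting them in a lab.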

Security Basics in AWS

Security is one of the core pillars in the AWS exam, covering aspects such as identity management, access controls, and encryption. Candidates are expected to understand AWS’s shared responsibility model, which clearly defines the responsibilities of AWS versus the customer. A practical way to solidify security concepts is through experimentation with security-focused scripts. Learning how to create a Windows reverse shell in an isolated lab can demonstrate the potential risks of insecure configurations and the importance of implementing robust security controls.

Even though the exam does not require deep programming knowledge, understanding the basics of threats and mitigations can help candidates answer scenario questions more accurately. AWS services such as IAM, KMS, and CloudTrail enable you to manage user permissions, encrypt sensitive data, and monitor account activity. Practicing these concepts in a hands-on environment helps learners see how security policies translate into real-world cloud scenarios.

Moreover, candidates should also understand compliance frameworks like GDPR, HIPAA, and SOC 2. These frameworks ensure that cloud workloads are secure and compliant with industry standards. Combining theoretical knowledge with practical experimentation prepares candidates to approach security questions confidently on the exam.
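To make the idea of managing permissions with IAM more concrete, here is a minimal sketch that builds a read-only policy document for a single S3 bucket. The helper name `read_only_bucket_policy` and the bucket name `example-study-bucket` are assumptions made up for this illustration. The code only constructs the JSON document and does not call AWS.

```python
import json


def read_only_bucket_policy(bucket_name: str) -> dict:
    """Build an IAM policy document granting read-only access to one bucket.

    The bucket name is supplied by the caller; nothing here contacts AWS.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself.
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
            {
                # Reading applies to the objects inside the bucket.
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
        ],
    }


if __name__ == "__main__":
    # "example-study-bucket" is a hypothetical name used for illustration.
    print(json.dumps(read_only_bucket_policy("example-study-bucket"), indent=2))
```

Granting only the two actions a reader needs, rather than `s3:*`, is one way to practice the least-privilege principle that scenario questions tend to reward.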

Networking and Access Management

Networking is an essential part of the AWS Certified Cloud Practitioner exam, as it underpins how resources communicate securely in the cloud. Understanding concepts like VPCs, subnets, route tables, security groups, and NACLs is critical for exam success. A useful analogy for understanding network access is experimenting with authentication techniques in controlled environments. Attempting a brute-force attack against a DVWA login in a safe lab environment shows the significance of secure login systems, which mirrors the importance of configuring IAM roles and permissions in AWS.

Candidates should also understand how cloud networking differs from traditional networking. Cloud networks are highly scalable, flexible, and can be adapted dynamically using services like AWS Direct Connect or Transit Gateway. Knowing these principles allows candidates to answer questions about network design, access controls, and fault tolerance scenarios effectively.

Hands-on labs that simulate network failures or test secure access permissions can provide deeper insight into how AWS ensures reliable connectivity and security for applications deployed in the cloud.
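The relationship between a VPC and its subnets can be explored offline with Python's standard `ipaddress` module. The sketch below is a planning aid only: `plan_subnets` is a hypothetical helper, and the `10.0.0.0/16` range is an example value. It relies on the fact that AWS reserves five addresses in every subnet (the first four and the last).

```python
import ipaddress
from itertools import islice

# AWS reserves the first four addresses and the last address of each subnet.
AWS_RESERVED_PER_SUBNET = 5


def plan_subnets(vpc_cidr: str, new_prefix: int, count: int):
    """Carve `count` subnets of size /new_prefix out of a VPC CIDR block.

    Returns a list of (subnet CIDR, usable address count) tuples.
    """
    vpc = ipaddress.ip_network(vpc_cidr)
    plan = []
    for subnet in islice(vpc.subnets(new_prefix=new_prefix), count):
        usable = subnet.num_addresses - AWS_RESERVED_PER_SUBNET
        plan.append((str(subnet), usable))
    return plan


if __name__ == "__main__":
    # Example values only: a /16 VPC split into /24 subnets.
    for cidr, usable in plan_subnets("10.0.0.0/16", 24, 4):
        print(f"{cidr}: {usable} usable addresses")
```

Working through a plan like this before opening the console makes it easier to reason about which subnets should be public or private and how route tables attach to each one.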

Privacy and Anonymity Principles

Even at a foundational level, AWS emphasizes security awareness, privacy, and the protection of sensitive information. Understanding identity and access management, audit logs, and monitoring tools is vital for maintaining secure cloud environments. Candidates can strengthen these skills by exploring practical exercises such as learning to stay anonymous by changing a MAC address in controlled network settings. This exercise teaches how to manage device identities and maintain privacy within a network, which parallels IAM best practices in AWS.

AWS provides various tools for monitoring and auditing access, including CloudTrail, Config, and GuardDuty. Being familiar with these services helps candidates understand how cloud providers detect unusual activity, enforce compliance, and maintain accountability. Privacy and anonymity exercises also encourage a mindset of proactive security, which is critical for both exam success and real-world cloud operations.
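As a rough illustration of how audit logs can surface unusual activity, the sketch below counts failed console sign-ins per source IP. The records in `SAMPLE_EVENTS` are invented, and their field names (`eventName`, `sourceIPAddress`, `responseElements`) are assumed to follow the CloudTrail record layout. The helper names and the threshold are hypothetical, and this is far simpler than what services like GuardDuty actually do.

```python
from collections import Counter

# Invented sample records; field names are assumed to mirror CloudTrail's layout.
SAMPLE_EVENTS = [
    {"eventName": "ConsoleLogin", "sourceIPAddress": "203.0.113.10",
     "responseElements": {"ConsoleLogin": "Failure"}},
    {"eventName": "ConsoleLogin", "sourceIPAddress": "203.0.113.10",
     "responseElements": {"ConsoleLogin": "Failure"}},
    {"eventName": "ConsoleLogin", "sourceIPAddress": "198.51.100.7",
     "responseElements": {"ConsoleLogin": "Success"}},
    {"eventName": "ListBuckets", "sourceIPAddress": "198.51.100.7",
     "responseElements": None},
]


def failed_logins_by_ip(events):
    """Count failed console sign-in events per source IP address."""
    counts = Counter()
    for event in events:
        if event.get("eventName") != "ConsoleLogin":
            continue
        outcome = (event.get("responseElements") or {}).get("ConsoleLogin")
        if outcome == "Failure":
            counts[event.get("sourceIPAddress", "unknown")] += 1
    return counts


def suspicious_ips(events, threshold=2):
    """Return the sorted IPs whose failed sign-ins reach the threshold."""
    counts = failed_logins_by_ip(events)
    return sorted(ip for ip, n in counts.items() if n >= threshold)


if __name__ == "__main__":
    print(suspicious_ips(SAMPLE_EVENTS))
```

The point of the exercise is the mindset: audit records are structured data, so questions such as "who failed to sign in repeatedly?" become simple filters once logging is enabled.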

Adaptive Networking in Cloud

Networking is dynamic, and cloud environments require adaptive and flexible solutions to ensure performance and reliability. Services such as VPC peering, Direct Connect, and Elastic Load Balancers allow resources to communicate efficiently while maintaining security. Learning practical techniques, such as how to harness SOCAT for networking, can provide insight into how traffic can be routed, proxied, or monitored adaptively. These concepts help candidates understand AWS networking services from a hands-on perspective.

Adaptive networking also ensures high availability and fault tolerance in the cloud. AWS exams often include questions on scalability and load balancing. Understanding how to simulate or monitor network traffic reinforces the core concepts of reliability, availability, and performance optimization, which are essential for passing the exam.
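Load balancing and fault tolerance can be simulated in a few lines. The sketch below is a toy round-robin distributor that skips unhealthy targets. The `distribute` function, target names, and health map are all hypothetical, and it is not a description of how Elastic Load Balancing is implemented internally.

```python
from itertools import cycle


def distribute(requests, targets, healthy):
    """Assign each request to the next healthy target in round-robin order.

    `healthy` maps target name -> bool; targets missing from it count as unhealthy.
    """
    live = [t for t in targets if healthy.get(t, False)]
    if not live:
        raise RuntimeError("no healthy targets available")
    rotation = cycle(live)
    return {request: next(rotation) for request in requests}


if __name__ == "__main__":
    # Hypothetical targets; "b" is marked unhealthy and receives no traffic.
    assignment = distribute(
        ["r1", "r2", "r3"],
        ["a", "b", "c"],
        {"a": True, "b": False, "c": True},
    )
    print(assignment)
```

Marking a target unhealthy and rerunning the simulation shows why health checks matter: traffic keeps flowing to the remaining targets instead of failing.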

Exam Preparation with VMware Examples

Understanding the exam blueprint and practicing consistently are crucial steps for certification success. Even if a candidate is not pursuing a VMware certification, VMware preparation methods provide a blueprint for methodical study and skill retention. Exam readiness comes from repetition, familiarization with scenarios, and applying theoretical knowledge to practical exercises.

Studying other IT certifications can provide strategies for approaching AWS exams. Techniques used to pass the new VCA6 exam emphasize planning, hands-on labs, and scenario-based learning. These same methods can be applied to AWS exam preparation. For example, candidates can create lab environments to simulate AWS services, practice configuring security settings, and run mock scenario questions to assess their readiness.

Emerging Cloud Certification Trends

Candidates should focus on combining cloud fundamentals with practical skills and additional certifications that complement AWS knowledge. This approach ensures that certification is not only a milestone but also a foundation for continuous career growth. Staying informed about cloud certification trends helps plan long-term IT careers. New certifications, such as the new VCAP and VCA exams, illustrate how IT skills are constantly evolving. AWS itself frequently updates exams to reflect changes in services and best practices, so adopting a mindset of continuous learning is essential.

Emerging trends also highlight the importance of keeping technical skills current and understanding how multiple cloud and IT certifications integrate to provide a competitive advantage in the job market.

VMware Exam Retirements Update

The IT certification landscape is constantly changing, and VMware frequently updates its exams to match current technology and industry standards. Professionals planning certifications must track these changes carefully, as staying informed prevents wasted effort and ensures that credentials remain valid and recognized. Understanding exam updates is an important part of career planning, particularly for individuals looking to establish credibility in virtualization and cloud computing.

For instance, at the end of October, three VMware exams retire, which directly affects several VCA and VCP certifications. Candidates preparing for these exams must adjust their plans, ensuring they focus on active credentials to maximize their professional value. Being aware of retirements allows learners to invest their time efficiently and align their study strategy with current industry requirements.

Staying updated on exam retirements also enables professionals to remain competitive. Employers highly value candidates who demonstrate awareness of credential relevance and have the foresight to pursue active certifications. This knowledge contributes to better career planning and supports long-term growth in cloud and virtualization domains.

Navigating Multiple VMware Exam Retirements

VMware periodically retires multiple exams simultaneously, which can disrupt candidates who are midway through preparation. Understanding these simultaneous retirements is essential to adjust study schedules effectively and avoid wasted effort. Planning around these updates helps learners maintain focus on certifications that provide tangible career benefits.

This fall, multiple VMware exams retired, affecting learners across several certification tracks. Candidates should evaluate which credentials are still active and prioritize them accordingly, while keeping track of replacement exams or updated certification paths. By focusing on valid certifications, learners can ensure their preparation aligns with the current market demand and retains professional relevance.

Being aware of multiple exam retirements also allows professionals to plan long-term learning strategies. By shifting focus to updated or replacement exams, candidates can maximize their skills, remain aligned with technological advancements, and maintain a competitive edge in the IT and cloud sectors.

New VMware Cloud Certification Roadmap

A clear roadmap is essential for planning a successful certification journey, especially in cloud and virtualization. VMware has introduced a structured progression for cloud associate certifications, allowing learners to start with foundational knowledge before advancing to higher levels. Understanding this roadmap helps candidates determine which certifications to pursue first and ensures that each stage builds on the previous one effectively.

The cloud associate certifications roadmap provides detailed guidance on credential progression, highlighting prerequisites, recommended experience, and logical sequences. By following this roadmap, learners gain structured, step-by-step preparation, combining theoretical understanding with practical experience. This approach also mirrors effective strategies for AWS exam readiness, where sequential learning and hands-on practice reinforce comprehension and retention.

Following a structured roadmap ensures candidates develop both confidence and competence. By completing certifications in a logical sequence, learners acquire a strong foundation for advanced roles in cloud computing, virtualization, and IT management, positioning themselves for career advancement and strategic opportunities.

Future Employment Trends in IT

Keeping up with emerging job trends is critical for IT professionals to ensure their skills remain relevant and in demand. Cloud computing, data analytics, and cybersecurity continue to be highly sought-after skills, making them ideal areas for professional development. Understanding these trends allows candidates to target certifications and practical skills that align with market needs.

Predictions of future high-demand jobs provide valuable insights into which careers will continue to grow. By aligning certification plans with these trends, candidates can focus on skills that have long-term relevance, ensuring their preparation is future-proof and strategically beneficial.

Anticipating these trends allows learners to combine certifications with practical experience and career planning. Professionals who align their learning with high-demand roles position themselves to take advantage of emerging opportunities, maintain competitiveness, and secure sustainable careers in the technology sector.

Non-Medical Career Options for PCB Students

Students completing the PCB stream often look for career paths outside the traditional medical domain. IT and cloud computing offer alternative opportunities for growth, providing technical skills that are increasingly valued in the job market. Early exposure to relevant courses can provide a strong foundation for entry-level certifications and hands-on experience.

For instance, non-medical career courses highlight programs that balance theoretical knowledge with practical application, making students ready for technical certifications such as AWS Cloud Practitioner. These courses ensure that learners gain both the foundational understanding and the applied skills necessary for IT and cloud career paths.

By exploring non-medical options early, students can make informed decisions that align with their interests and market demand. Building a portfolio of technical skills along with certifications enhances employability and prepares learners for future opportunities in IT, cloud computing, and data analytics.

Postgraduate Course Options

Postgraduate education provides specialized knowledge that complements certifications and prepares learners for advanced roles in IT and cloud computing. Programs are designed to integrate theoretical study with hands-on labs, case studies, and real-world projects that help learners apply cloud concepts effectively.

A guide to PG courses for career planning offers a comprehensive view of programs that align with career objectives. By combining formal education with certification preparation, professionals strengthen both technical expertise and academic credentials, making them more competitive in the job market.

Structured postgraduate programs allow learners to deepen their skills, gain practical exposure, and prepare for leadership or specialized technical roles. This integration of certifications and education ensures readiness for complex IT projects, cloud implementations, and data-driven business solutions.

Short-Term Skill Development Courses

Short-term courses provide learners with an efficient way to acquire practical skills without committing to long-term degrees. These programs are ideal for students and professionals who want to enter the workforce quickly or supplement their existing knowledge.

For example, 1-year skill development courses provide applied training in IT, data analysis, and cloud computing. When combined with certification preparation, such courses create a robust foundation that balances theoretical understanding with practical skill acquisition.

By participating in short-term courses, learners can quickly develop marketable skills, enhance employability, and build confidence to pursue certifications like AWS Cloud Practitioner. This approach accelerates entry into technical careers while preparing candidates for real-world challenges.

Recession-Proof IT Career Paths

In today’s volatile economic environment, choosing a resilient career path is essential. Certain IT domains, such as cloud computing, cybersecurity, and data analytics, continue to experience high demand regardless of market fluctuations, making them ideal areas for professional development.

Guides to recession-proof IT careers provide direction on roles that offer stability and long-term prospects. Candidates who focus on these areas can combine certifications with hands-on skills to remain competitive even during challenging economic periods.

Planning around recession-proof careers allows learners to invest strategically in skills and certifications that yield sustainable returns. Professionals who align learning with resilient career paths are better prepared for future opportunities and can adapt to evolving technological and business landscapes.

Future-Proof Career with Microsoft Power Automate

Automation has become a critical component of modern IT and cloud environments. Learning to streamline workflows and implement RPA solutions enhances operational efficiency and complements cloud skills, increasing employability across industries.

For instance, a Power Automate RPA career path equips learners with skills to automate repetitive tasks and optimize business processes. Combining these automation capabilities with cloud expertise enables professionals to deliver end-to-end solutions in enterprise environments.

Mastering automation alongside cloud skills ensures professionals remain versatile and competitive. Employers highly value individuals who can integrate cloud deployments with automated workflows, demonstrating both technical depth and practical problem-solving abilities.

Enhancing Data Skills with Power BI

Data analysis is a key skill for professionals in IT and cloud domains, enabling informed business decision-making. By learning to visualize and interpret complex datasets, professionals can transform raw data into actionable insights that drive strategic initiatives.

Courses such as a Power BI data career track teach learners to extract insights from large datasets, which complements AWS services like QuickSight and Redshift. Integrating these skills with cloud knowledge allows candidates to design data-driven solutions that provide measurable business value.

By combining analytics with cloud expertise, professionals enhance their overall skillset and career prospects. This integrated approach enables learners to deliver comprehensive solutions that are both technically sound and strategically impactful, positioning them for advanced roles in cloud computing and data analytics.

Step-by-Step Power Platform Career

The Microsoft Power Platform has become essential for organizations looking to optimize business processes, automate repetitive tasks, and analyze data efficiently. Professionals seeking to advance their careers in IT or business analysis must develop both technical and analytical skills to succeed in this competitive environment. Structured learning paths provide a clear roadmap for acquiring these capabilities.

For those wanting a guided approach, a step-by-step Power Platform career guide outlines how to become a PL-200 functional consultant. This resource explains each stage of learning, including foundational concepts, platform navigation, and application deployment in real-world scenarios. Following such structured guidance ensures that learners cover all essential areas without missing critical knowledge.

Developing expertise systematically allows candidates to gain confidence and practical experience. Professionals who master Power Platform step-by-step are prepared to implement solutions, improve operational efficiency, and contribute meaningfully to organizational digital transformation initiatives.

Propel Your Career With PL-100

Career advancement often depends on acquiring relevant certifications that demonstrate practical expertise. Power Platform certifications, such as the PL-100, allow learners to showcase their ability to create applications, automate processes, and solve business challenges using Microsoft tools.

The PL-100 app maker certification provides structured guidance on leveraging platform capabilities to design and deploy applications efficiently. Candidates learn to connect data sources, implement workflow automation, and customize apps to meet specific business requirements. Completing this certification ensures that learners gain both theoretical knowledge and applied skills.

Earning the PL-100 credential helps professionals stand out in the job market. It validates practical skills in application development and workflow optimization, making certified individuals attractive for roles in business analysis, IT consulting, and enterprise solution deployment.

Unlocking Power Platform Fundamentals

Before attempting advanced certifications, understanding the fundamentals of Power Platform is essential. This includes learning the core tools such as Power Apps, Power Automate, Power BI, and Power Virtual Agents, which collectively enable data analysis, application development, and workflow automation.

For beginners, unlocking Power Platform fundamentals provides step-by-step guidance to understand these tools. The course focuses on creating simple apps, automating workflows, and building dashboards that provide actionable insights. By starting with foundational knowledge, learners establish a solid base for more complex certifications and real-world projects.

A strong foundation ensures that learners can confidently tackle advanced topics, troubleshoot issues, and design practical solutions. Professionals who master the basics are better prepared for subsequent certifications and gain a more comprehensive understanding of business and technical applications.

Expanding Skills Across Technology Certifications

IT professionals benefit from diversifying their knowledge across multiple technology platforms. This includes gaining skills in e-commerce, marketing automation, and cybersecurity to complement Power Platform expertise. Diversification ensures adaptability and positions professionals for various technical roles.

For instance, Magento technology exams validate practical knowledge in e-commerce management, product cataloging, and platform customization. Preparing for such certifications allows learners to extend their skill set beyond business apps and automation, integrating cloud and commerce technologies for broader impact.

Combining certifications across platforms strengthens a professional’s profile. By leveraging knowledge in multiple domains, learners are equipped to address diverse challenges, implement end-to-end solutions, and remain competitive in a rapidly evolving job market.

Mastering Marketo Certification Exams

Marketing automation skills are increasingly essential for professionals working in digital marketing and customer engagement. Certifications like Marketo validate practical knowledge in campaign management, lead nurturing, and marketing analytics, which helps professionals advance their careers in competitive industries.

The Marketo certification exams guide provides step-by-step preparation for learning campaign setup, automation workflows, and reporting. Candidates gain practical experience and understand best practices for implementing marketing automation strategies that deliver measurable results.

Completing Marketo certifications enhances employability and ensures professionals are ready to manage complex marketing campaigns. Certified individuals are better positioned to contribute to business growth and optimize digital marketing initiatives.

Advancing Skills With McAfee Certifications

Cybersecurity continues to be a top priority for organizations globally. Professionals skilled in security tools and practices are highly sought after, and certifications from McAfee demonstrate practical expertise in threat detection, endpoint protection, and risk mitigation.

The McAfee security certification exams guide learners through core security principles, platform configuration, and incident response processes. This resource ensures candidates are prepared to handle real-world security challenges effectively while enhancing their technical knowledge.

Achieving McAfee certifications strengthens career prospects in cybersecurity. Certified professionals can manage complex security environments, implement preventive measures, and provide strategic guidance for enterprise-level security solutions.

Expanding Expertise With Microsoft Exams

Microsoft certifications are critical for IT professionals aiming to specialize in cloud computing, data analytics, or enterprise solutions. These exams validate skills in platform usage, administration, and solution deployment, providing tangible proof of competence.

The Microsoft certification exams list covers topics ranging from cloud administration to application development and security. Preparing for these exams ensures that candidates gain both theoretical knowledge and practical experience with Microsoft technologies.

Earning Microsoft certifications improves professional credibility and opens doors to roles in system administration, cloud services, and IT consulting. Certified individuals are equipped to handle enterprise-level projects and strategic initiatives effectively.

Achieving Mile2 Security Certifications

In a technology-driven world, cybersecurity certifications are vital for IT professionals seeking to safeguard organizations from threats. Mile2 certifications provide training in ethical hacking, penetration testing, and information security management.

The Mile2 security certification exams prepare learners for roles in risk assessment, security auditing, and incident response. These exams emphasize hands-on skills and real-world applications, ensuring that candidates can manage security environments confidently.

Professionals with Mile2 certifications are highly valued in organizations that prioritize security. These credentials demonstrate a commitment to cybersecurity excellence and readiness to handle evolving threats.

Cloud Expertise With Mirantis Certifications

Containerization and cloud-native technologies are vital for modern IT operations. Mirantis certifications validate skills in Docker, Kubernetes, and cloud orchestration, enabling professionals to design, deploy, and maintain scalable cloud infrastructures.

The Mirantis technology exams guide provides step-by-step learning on container management, cloud deployment, and infrastructure automation. Candidates gain practical exposure to real-world scenarios that enhance their problem-solving and implementation skills.

Holding Mirantis certifications positions IT professionals for roles in cloud architecture, DevOps, and enterprise infrastructure management. These skills are in high demand as organizations adopt cloud-native solutions for scalability and efficiency.

Database Mastery With MongoDB Certifications

Data-driven organizations require skilled professionals to manage, analyze, and secure data efficiently. MongoDB certifications validate expertise in NoSQL database management, aggregation frameworks, and performance optimization.

The MongoDB certification exams guide ensures learners understand document modeling, query design, and operational practices. Candidates gain practical skills needed for database administration and effective data-driven decision-making.

Certified MongoDB professionals can design scalable database solutions and contribute to business intelligence projects. These skills enhance career prospects in data engineering, analytics, and cloud database management.

Motorola Solutions Certification Path

Telecommunications and enterprise mobility require specialized skills to manage complex hardware and software systems. Motorola Solutions certifications validate technical proficiency in network devices, communication tools, and enterprise solutions.

The Motorola Solutions exams guide covers product configuration, troubleshooting, and operational procedures. Candidates acquire hands-on knowledge that prepares them for real-world deployments and system maintenance.

Earning these certifications positions professionals for roles in enterprise communications, network administration, and technical support. Certified candidates can implement, monitor, and optimize solutions effectively.

Expanding MSP Professional Skills

Managed Service Providers (MSPs) deliver IT services across organizations, requiring professionals to manage diverse platforms, networks, and client requirements. MSP certifications validate operational, technical, and management expertise for service delivery.

The MSP certification exams guide covers network administration, cloud solutions, and service management best practices. Candidates gain insights into client engagement, service-level agreements, and workflow optimization.

MSP-certified professionals are well-prepared to manage multi-client IT services efficiently. Their skills enhance reliability, client satisfaction, and operational excellence in managed IT environments.

Hands-On Power Automate RPA Developer

Automation is transforming business operations, and Microsoft Power Automate enables professionals to streamline workflows and repetitive tasks. Training in RPA development ensures candidates can implement automation effectively.

The Power Automate RPA developer training teaches workflow design, automation integration, and practical RPA implementation. Learners gain hands-on experience, enabling them to build scalable automated solutions for business efficiency.

Professionals trained in Power Automate RPA development can deliver process optimization and time-saving solutions. This expertise is highly sought after in organizations focused on digital transformation initiatives.

Power BI Data Analyst Training

Data visualization and analytics are essential skills for modern IT and business professionals. Power BI certifications validate a candidate’s ability to analyze, visualize, and interpret data for actionable insights.

Power BI data analyst training provides practical experience in building dashboards, reports, and data models. Candidates learn how to transform complex datasets into strategic insights that support decision-making.

Power BI-certified professionals are equipped to enhance organizational performance through data-driven strategies. Their skills allow them to communicate insights effectively and support evidence-based business planning.

Power Platform Developer Certification

Developers play a key role in designing and implementing solutions on the Microsoft Power Platform. Certification validates technical skills, application development capabilities, and integration expertise.

The Power Platform developer training teaches app creation, workflow automation, and integration with other Microsoft tools. Candidates acquire practical knowledge that prepares them for enterprise-level deployment projects.

Certified developers enhance organizational efficiency by delivering scalable, automated, and optimized solutions. Their expertise ensures robust, secure, and maintainable business applications.

Functional Consultant Certification

Functional consultants bridge the gap between business requirements and technical solutions. Power Platform functional consultant certifications validate skills in designing, implementing, and optimizing business solutions.

Power Platform functional consultant training teaches requirements analysis, solution configuration, and integration best practices. Candidates gain the ability to translate business needs into technical solutions effectively.

Certified functional consultants improve project success rates by ensuring solutions meet business objectives. Their skills help organizations implement efficient, automated processes aligned with strategic goals.

Power Platform Fundamentals Training

Microsoft Power Platform provides essential tools for automation, data analysis, and app development. Professionals looking to enhance their IT and business skills benefit from structured learning paths that cover core platform concepts and practical applications. The Power Platform fundamentals training offers step-by-step guidance on understanding Power Apps, Power Automate, Power BI, and Power Virtual Agents. This program ensures learners gain hands-on experience while grasping the underlying principles of platform functionality. Mastering fundamentals allows professionals to approach advanced certifications confidently. Foundational knowledge ensures successful implementation of solutions in real-world business scenarios and strengthens problem-solving capabilities.

Solution Architect Expert Certification

Solution architects design, deploy, and optimize enterprise solutions. Microsoft Power Platform Solution Architect certifications validate the ability to integrate technical expertise with strategic planning to meet organizational goals. Power Platform solution architect training equips learners with skills in system design, integration, and governance. Candidates learn to plan complex solutions, align technology with business requirements, and ensure scalability and security across platforms. Certified solution architects enhance organizational value by implementing efficient, robust solutions. Their expertise bridges business strategy and technical execution, making them highly sought after in enterprise IT roles.

ACP-420 Certification Guide

Cloud computing and AWS services require skilled professionals who can design, deploy, and manage cloud infrastructure. ACP-420 certification validates knowledge of AWS solutions and best practices. The ACP-420 exam preparation guide provides detailed coverage of core cloud concepts, security considerations, and deployment strategies. Candidates gain both theoretical knowledge and practical experience in cloud implementation. Certified professionals can confidently manage cloud environments, optimize resources, and enhance organizational efficiency. ACP-420 holders are well-positioned for roles in cloud administration and architecture.

ACP-600 Certification Overview

Advanced AWS certifications, such as ACP-600, test professional expertise in cloud solutions architecture and deployment. Candidates are required to demonstrate proficiency in complex AWS environments. The ACP-600 exam guide explains exam objectives, key concepts, and best practices for infrastructure design and deployment. Learners gain practical knowledge applicable to enterprise cloud scenarios. Earning ACP-600 certification validates advanced cloud skills, making professionals highly competitive for senior cloud roles. It demonstrates mastery of AWS technologies and strategic deployment capabilities.

ACP-620 Advanced Certification

For IT professionals seeking to specialize in AWS cloud architecture, ACP-620 certification provides advanced training and validation. It covers complex cloud design, security, and automation practices. ACP-620 certification training includes detailed guidance on AWS service integration, deployment strategies, and architectural best practices. Learners gain hands-on experience in configuring scalable, secure cloud solutions. This certification enhances career prospects in enterprise cloud management and architecture. ACP-620 holders demonstrate advanced technical competence and strategic insight into cloud deployments.

ACP-01101 Exam Insights

Specialized certifications like ACP-01101 validate expertise in specific cloud services and deployment models. Professionals gain practical skills and knowledge to implement solutions efficiently. ACP-01101 exam preparation covers essential concepts in cloud management, service optimization, and real-world deployment scenarios. Candidates learn to design, implement, and troubleshoot complex cloud solutions. Certified candidates enhance organizational capabilities and are recognized for their ability to manage cloud environments effectively. ACP-01101 strengthens professional credibility in cloud computing.

MAYA11-A Certification

Professional certifications like MAYA11-A focus on network management and security principles. Candidates gain expertise in configuring, monitoring, and troubleshooting enterprise systems. The MAYA11-A exam guide provides detailed learning resources, covering best practices in system administration, security protocols, and network optimization. Learners gain hands-on experience for practical application. Achieving this certification ensures professionals are prepared for technical challenges in enterprise environments. It enhances career opportunities in IT administration and network management.

MAYA12-A Certification

MAYA12-A builds on foundational networking knowledge and focuses on advanced configuration and troubleshooting. Professionals acquire skills for managing complex enterprise networks. The MAYA12-A certification guide includes step-by-step preparation, highlighting advanced network protocols, system security, and performance optimization techniques. Candidates gain practical exposure for real-world application. Certified professionals enhance operational efficiency and can manage large-scale networks effectively. This credential supports career growth in IT infrastructure and systems administration.

3312 Certification Overview

Certifications such as 3312 focus on specialized technical skills in IT systems and cloud deployment. They validate expertise required for managing enterprise-level solutions. The 3312 exam preparation guide covers deployment best practices, system monitoring, and configuration management. Candidates acquire both theoretical knowledge and practical skills for professional application. Certification ensures professionals are recognized for their technical competence. 3312 holders are prepared for complex IT challenges and advanced enterprise roles.

37820X Certification Training

Advanced IT certifications like 37820X validate skills in enterprise system management, security, and cloud integration. They prepare professionals for high-demand technical roles. The 37820X certification training provides detailed exam objectives, step-by-step learning, and practical labs. Learners gain hands-on experience in implementing and managing enterprise systems. Certified professionals demonstrate the ability to handle complex technical environments and lead advanced IT projects, enhancing career potential.

CSM-001 Scrum Master Certification

Agile methodologies are essential for modern project management. The CSM-001 certification validates the ability to lead Agile teams and implement Scrum principles effectively. The CSM-001 Scrum Master guide teaches candidates how to manage sprints, facilitate team collaboration, and optimize project delivery. Practical scenarios prepare learners for real-world Agile projects. Certified Scrum Masters improve team productivity and project outcomes. This credential enhances leadership skills and career prospects in project management and IT delivery roles.

Google Ads Fundamentals

Digital marketing proficiency requires understanding advertising platforms. Google Ads certifications validate skills in campaign creation, optimization, and performance tracking. The Google Ads fundamentals guide provides detailed training on campaign setup, keyword management, and analytics tracking. Learners gain practical knowledge to manage effective marketing campaigns. Certified professionals can optimize ad performance, improve ROI, and enhance digital marketing strategies. This credential supports careers in marketing, analytics, and business development.

Google Ads Shopping Advertising

E-commerce businesses benefit from specialized advertising strategies. Google Ads Shopping certification demonstrates skills in product listing campaigns, bidding strategies, and analytics interpretation. The Google Shopping advertising guide teaches learners how to create and manage shopping campaigns, optimize product visibility, and measure conversion performance. Practical exercises reinforce skill application. Professionals with this certification improve campaign efficiency, enhance e-commerce visibility, and increase sales. It is valuable for digital marketers managing online retail strategies.

Google Associate Android Developer

Mobile application development is a high-demand skill. The Google Associate Android Developer certification validates practical app development skills for the Android platform. The Google Android developer certification teaches application design, coding best practices, and testing techniques. Candidates gain hands-on experience in building functional, user-friendly apps. Certified Android developers enhance employability, contribute to mobile projects, and demonstrate competence in modern app development, making them valuable assets for tech companies.

Google Associate Cloud Engineer

Cloud computing skills are critical for IT professionals. Google Associate Cloud Engineer certification demonstrates expertise in deploying, monitoring, and maintaining cloud-based solutions. The Google cloud engineer certification provides training in cloud architecture, resource management, and security practices. Candidates gain practical skills for real-world cloud deployments. Certified cloud engineers optimize cloud resources, improve operational efficiency, and ensure secure and scalable deployments, positioning them for advanced cloud roles.

Google Cloud Digital Leader

Understanding cloud strategy and business integration is essential for leaders. Google Cloud Digital Leader certification validates knowledge of cloud concepts, business value, and technology strategy. The Google Cloud Digital Leader guide teaches candidates how to assess business needs, evaluate cloud solutions, and guide organizational adoption of cloud technologies. Certified digital leaders align technology initiatives with strategic goals. They improve organizational efficiency, enable innovation, and guide enterprise cloud adoption effectively.

Google Analytics Certification

Data-driven decision-making is essential in business. Google Analytics certification validates the ability to collect, analyze, and interpret website data to improve marketing and business strategies. The Google Analytics certification guide teaches tracking implementation, report generation, and insights interpretation. Candidates gain practical skills for improving digital performance and optimizing user experience. Certified professionals can enhance website performance, make data-driven decisions, and support marketing strategies effectively. This credential is valuable for roles in analytics, digital marketing, and business intelligence.

Conclusion

In today’s rapidly evolving technology landscape, earning certifications such as the AWS Certified Cloud Practitioner and other related IT and cloud credentials has become a pivotal step for professionals aiming to advance their careers. These certifications not only validate technical knowledge but also demonstrate a commitment to continuous learning, practical application, and problem-solving capabilities. We explored structured approaches to mastering Power Platform, cloud computing, cybersecurity, and digital marketing tools, emphasizing the importance of combining theoretical knowledge with hands-on experience.

One of the key takeaways is that preparation is a multi-faceted process. It involves understanding exam objectives, gaining practical exposure through labs and projects, and leveraging high-quality resources to reinforce learning. For example, Power Platform certifications, ranging from fundamentals to developer and functional consultant tracks, empower professionals to automate business processes, analyze data, and develop enterprise-grade applications. Similarly, AWS certifications such as ACP-420, ACP-600, and ACP-620 validate cloud expertise and deployment skills, positioning certified candidates for both technical and strategic roles. Integrating these learning pathways with complementary skills in cybersecurity, database management, and project management ensures that professionals remain competitive in a diverse and demanding job market.

Furthermore, specialized certifications such as Google Cloud, Google Analytics, and digital marketing credentials provide opportunities to expand one’s expertise beyond traditional IT roles. This enables professionals to contribute effectively to digital transformation initiatives and improve operational efficiency across organizations. The practical approach, combining foundational learning, advanced training, and hands-on experience, ensures that candidates can apply their skills in real-world scenarios while excelling in certification exams.

Ultimately, achieving these certifications is not merely about passing an exam—it is about building a strong, future-proof career. Certified professionals demonstrate not only technical proficiency but also strategic thinking, adaptability, and the ability to implement solutions that deliver tangible business value. By following structured learning pathways, utilizing high-quality training resources, and applying skills in practice environments, learners can confidently navigate the challenges of the modern IT and cloud ecosystem. These certifications open doors to advanced roles, higher earning potential, and long-term career growth, making them a worthwhile investment for anyone committed to excelling in the digital era.

Go to the testing centre with peace of mind when you use Amazon AWS Certified Cloud Practitioner VCE exam dumps, practice test questions and answers. The Amazon AWS Certified Cloud Practitioner (CLF-C01) certification practice test questions and answers, study guide, exam dumps and video training course in VCE format help you study with ease. Prepare with confidence and study using Amazon AWS Certified Cloud Practitioner exam dumps and practice test questions and answers in VCE format from ExamCollection.

Top Amazon Certification Exams

- AWS Certified Solutions Architect - Associate SAA-C03

- AWS Certified Solutions Architect - Professional SAP-C02

- AWS Certified AI Practitioner AIF-C01

- AWS Certified Cloud Practitioner CLF-C02

- AWS Certified Machine Learning Engineer - Associate MLA-C01

- AWS Certified DevOps Engineer - Professional DOP-C02

- AWS Certified Data Engineer - Associate DEA-C01

- AWS Certified CloudOps Engineer - Associate SOA-C03

- AWS Certified Developer - Associate DVA-C02

- AWS Certified Advanced Networking - Specialty ANS-C01

- AWS Certified Machine Learning - Specialty

- AWS Certified Security - Specialty SCS-C02

- AWS Certified Security - Specialty SCS-C03

- AWS Certified SysOps Administrator - Associate

- AWS-SysOps

@Mad Belo1, congratulations! Is the premium file word for word with its Q&A's with the ones you saw in the exams?

Passed today!! Test results won't come in for the next 5 days. Premium DUMP is FULLY valid!! Good Luck!!!

The practice questions prepared me very well. I passed.

Passed yesterday 903/1000. You also need to study by yourself, because the exam includes new services that AWS has launched.

Passed Today, but you must reinforce with your own-training

Premium dumps is valid. only a few new questions, Good luck!

Hello guys, is the premium exam valid? thank you

Please is the AWS Certified Cloud Practitioner Premium still valid

Thanks!

looking for vce simulator. where i can get it.

I am writing the exam next weekend in the US, is the prep4sure by Abrielle still valid?

Anybody has any update if the premium is valid?

is the premium dump valid??

Just took the exam yesterday, Friday 13th September. Have to say the exam has about 65 questions. This free dump has only 32, and fewer than half of those came up. So it helps, but get the official technical essentials PDF and do some quick labs. It asked a lot about billing, Trusted Advisor and some other tools. The PDF alone is not enough, for sure.

Just took the test this morning 24 Aug 2019. I passed. Yes the dump is valid. You still need to study. Know the cost estimations. Know what services bring what benefits.

Its valid?

Hello, Please confirm this dump for AWS Cloud Practitioner (32q free and 65q Premium) is valid?

Is this dump still valid in the uk?

any practitioner dumps available?

Please share....

Preparing for AWS Cloud Practitioner exam

Please share the AWS Cloud Practitioner exam dumps

is this already available?

AWS Certified Cloud Practitioner

Where can I purchase the AWS Practitioner exam?