Mastering the Amazon AWS Certified Solutions Architect - Associate Exam - Core Security and Compute

Embarking on the path to earn the AWS Certified Solutions Architect - Associate certification is a significant step in validating your cloud architecture skills. This credential demonstrates your ability to design and implement robust, scalable, and cost-effective solutions on the AWS platform. This five-part series will serve as your comprehensive guide, breaking down the essential knowledge areas required to confidently approach and pass the SAA-C03 exam. In this first installment, we will lay the groundwork by exploring the exam's structure and then diving deep into the foundational pillars of security and core compute services, which are critical for any successful cloud architecture.

Understanding the SAA-C03 Exam Structure

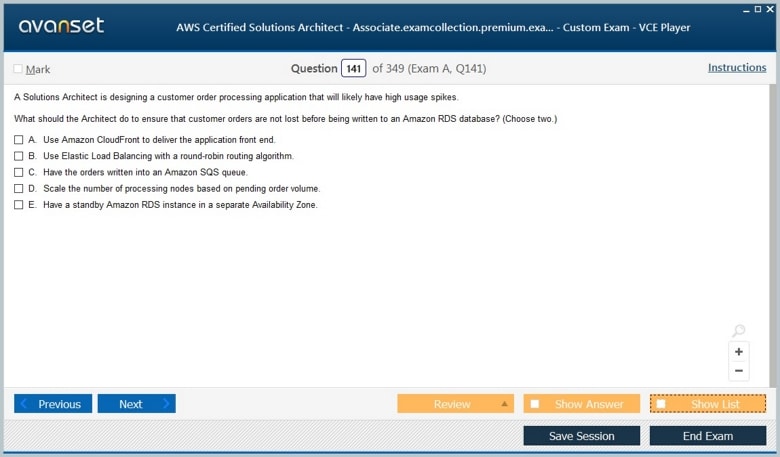

The AWS Certified Solutions Architect - Associate exam is designed to test your practical knowledge and problem-solving ability. You have 130 minutes to answer 65 questions. Each question is either multiple choice, where you select the single best answer, or multiple response, where you must select all of the correct options. The exam is not adaptive, but not every question counts toward your result: 50 questions are scored, while 15 are unscored questions that AWS uses to evaluate future content, and they are not identified as such. The exam content is divided into four domains, each weighted to reflect its importance in the role of a solutions architect.

These domains are: Design Secure Architectures (30%), Design Resilient Architectures (26%), Design High-Performing Architectures (24%), and Design Cost-Optimized Architectures (20%). This structure emphasizes that a successful architect must balance these four crucial aspects in every solution they create. A deep understanding of how AWS services fit within these domains is not just beneficial for the exam but is the essence of the architect role itself. Therefore, your preparation should focus on applying your knowledge to real-world scenarios presented in the questions, rather than simply memorizing service features.
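
To turn the weightings into something you can plan study time around, the short sketch below converts them into approximate question counts. It assumes the weights apply to the 50 scored questions (AWS states that 15 of the 65 questions are unscored); any individual exam form may differ slightly.

```python
# Approximate scored-question counts per domain, assuming the SAA-C03
# weightings apply to the 50 scored questions (15 of the 65 are unscored).
SCORED_QUESTIONS = 50

DOMAIN_WEIGHTS = {
    "Design Secure Architectures": 0.30,
    "Design Resilient Architectures": 0.26,
    "Design High-Performing Architectures": 0.24,
    "Design Cost-Optimized Architectures": 0.20,
}

def expected_counts(total: int, weights: dict[str, float]) -> dict[str, int]:
    """Round each domain's share of `total` to the nearest whole question."""
    return {domain: round(total * w) for domain, w in weights.items()}

counts = expected_counts(SCORED_QUESTIONS, DOMAIN_WEIGHTS)
for domain, n in counts.items():
    print(f"{domain}: ~{n} questions")
```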

Designing Secure Architectures: The First Pillar

Security is the most heavily weighted domain in the exam, and for good reason. On AWS, security is job zero. A fundamental concept you must grasp is the AWS Shared Responsibility Model. This model delineates the security obligations of AWS from your responsibilities as a customer. AWS is responsible for the security of the cloud, which includes the physical security of data centers, hardware, and the underlying network infrastructure. You, the customer, are responsible for security in the cloud. This includes managing your data, configuring access controls, encrypting sensitive information, and securing your network configurations.

Many exam questions test your understanding of this model implicitly by asking you to choose the appropriate service or configuration to secure an application or data set. For instance, if a question involves protecting against network-level threats such as DDoS attacks, think first of managed protections like AWS Shield, whose Standard tier is applied automatically at no additional cost. If the question is about restricting access to an S3 bucket or an EC2 instance, that falls squarely within your area of responsibility. Mastering this distinction is a crucial first step for any aspiring AWS Certified Solutions Architect - Associate.

A Deep Dive into Identity and Access Management (IAM)

Identity and Access Management, or IAM, is the heart of security on AWS. It allows you to manage access to AWS services and resources securely. The core components of IAM are users, groups, roles, and policies. Users represent the individual people or applications that interact with your AWS account. Groups are collections of users, which simplifies permission management; instead of applying permissions to each user, you can apply them to a group, and all users in that group inherit those permissions. This aligns with best practices for manageability and scalability of access control.

Roles are a distinct IAM identity designed to be assumed by trusted entities, such as an AWS service, a user, or another AWS account. Unlike users, roles do not have long-term credentials such as a password or access keys; assuming a role returns temporary security credentials that expire at the end of the session. This makes roles the most secure way to grant permissions, especially to applications running on EC2 instances or for cross-account access. The permissions themselves are defined in policies, which are JSON documents that explicitly state which actions are allowed or denied on which AWS resources. Understanding how to read and construct these policies is essential.

The principle of least privilege is a recurring theme in IAM-related exam questions. It dictates that you grant only the minimum permissions an entity needs to perform its required tasks. When presented with a scenario, choose the most restrictive option that still meets the requirements. For example, if an application only needs to read objects from an S3 bucket, its IAM role should grant only s3:GetObject on the objects in that specific bucket, not a wildcard action such as s3:* that grants full access. This limits the potential impact of compromised credentials.
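
As a rough illustration, here is a least-privilege identity-based policy for the read-only example, together with a deliberately simplified evaluator: an explicit Deny wins, otherwise any matching Allow grants access, and anything unmatched is implicitly denied. The bucket name is hypothetical, and the evaluator ignores conditions, case-insensitive action matching, and the other policy types that real IAM evaluation considers. Note that `s3:GetObject` applies to object ARNs (`bucket/*`), not to the bucket ARN itself.

```python
import fnmatch

# Least-privilege policy: read-only access to objects in one bucket.
# "example-app-bucket" is a hypothetical bucket name.
READ_ONLY_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::example-app-bucket/*",
        }
    ],
}

def _as_list(value):
    return value if isinstance(value, list) else [value]

def _matches(statement, action, resource):
    """Wildcard match on Action and Resource (a simplification of IAM matching)."""
    actions = _as_list(statement["Action"])
    resources = _as_list(statement["Resource"])
    return (any(fnmatch.fnmatchcase(action, a) for a in actions)
            and any(fnmatch.fnmatchcase(resource, r) for r in resources))

def is_allowed(policy, action, resource):
    """Explicit Deny wins, then any Allow; otherwise implicit deny."""
    matching = [s for s in policy["Statement"] if _matches(s, action, resource)]
    if any(s["Effect"] == "Deny" for s in matching):
        return False
    return any(s["Effect"] == "Allow" for s in matching)
```

For example, `is_allowed(READ_ONLY_POLICY, "s3:PutObject", "arn:aws:s3:::example-app-bucket/a.csv")` returns `False`, because nothing in the policy allows writes.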

IAM policies come in two main types: identity-based and resource-based. Identity-based policies are attached directly to an IAM user, group, or role. They define what that identity can do. Resource-based policies are attached to a resource itself, such as an S3 bucket or an SQS queue. They specify which principals (accounts, users, roles) are allowed to access that resource. Understanding when to use one over the other, or how they work together, is a key skill tested in the AWS Certified Solutions Architect - Associate exam.
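
The structural difference is easy to see side by side: a resource-based policy, such as this S3 bucket policy, names a `Principal`, while an identity-based policy does not because it is attached to the principal it governs. The account ID, role name, and bucket name below are hypothetical, and `policy_kind` is only a rough heuristic for illustration. As a general rule, within a single account an Allow in either policy type is enough, whereas cross-account access needs an Allow in both the bucket policy and the caller's identity-based policy.

```python
import json

# Hypothetical values: account 111122223333, role "ReportReader",
# bucket "example-app-bucket".
BUCKET_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "AllowReportReaderRole",
            "Effect": "Allow",
            # Resource-based policies say WHO may act on this resource.
            "Principal": {"AWS": "arn:aws:iam::111122223333:role/ReportReader"},
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::example-app-bucket/*",
        }
    ],
}

def policy_kind(policy: dict) -> str:
    """Rough heuristic: only resource-based policy statements carry a Principal."""
    if any("Principal" in s for s in policy["Statement"]):
        return "resource-based"
    return "identity-based"

print(json.dumps(BUCKET_POLICY, indent=2))
```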

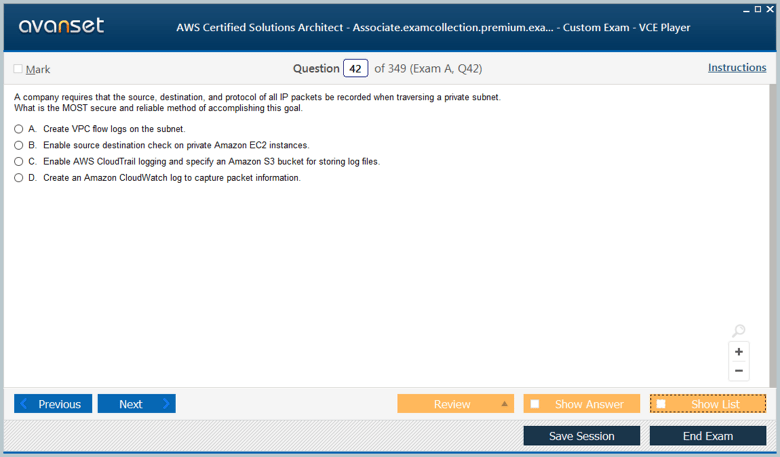

Securing Your Network with VPC Security Controls

Within your Virtual Private Cloud (VPC), you have two primary mechanisms for controlling network traffic: Security Groups and Network Access Control Lists (NACLs). Security Groups act as a virtual firewall for your EC2 instances, controlling inbound and outbound traffic at the instance level. They are stateful, which is a critical feature to remember: if you allow inbound traffic on a certain port, the return traffic for that connection is automatically allowed, regardless of any outbound rules. You can only create "allow" rules in a Security Group. A new Security Group denies all inbound traffic until you add rules, but allows all outbound traffic by default.

NACLs, on the other hand, act as a firewall for your subnets, controlling traffic at the subnet boundary. They are stateless, meaning you must explicitly define rules for both inbound and outbound traffic: if you allow inbound traffic on a port, you must also create an outbound rule that allows the return traffic, which typically goes to the client's ephemeral port range. NACLs support both "allow" and "deny" rules, which are evaluated in numerical order, starting with the lowest number, and the first matching rule is applied. This makes them useful for blocking specific IP addresses at the subnet level.
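
A minimal sketch of NACL rule evaluation, assuming simplified rules with only a number, an action, a CIDR, and a port range (real NACL rules also specify a protocol, which is omitted here). Rules are checked from the lowest number upward, the first match decides, and traffic that matches nothing is denied, mirroring the catch-all rule at the end of every NACL. The CIDR ranges are documentation-only example addresses.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class NaclRule:
    number: int     # evaluated from the lowest number upward
    action: str     # "allow" or "deny"
    cidr: str       # source (inbound) or destination (outbound) range
    port_from: int
    port_to: int

def evaluate_nacl(rules, ip, port):
    """Return the action of the first matching rule; no match means deny."""
    addr = ipaddress.ip_address(ip)
    for rule in sorted(rules, key=lambda r: r.number):
        if addr in ipaddress.ip_network(rule.cidr) and rule.port_from <= port <= rule.port_to:
            return rule.action
    return "deny"

# Hypothetical inbound rules: block a known-bad range, then allow HTTPS.
inbound = [
    NaclRule(100, "deny", "203.0.113.0/24", 0, 65535),
    NaclRule(200, "allow", "0.0.0.0/0", 443, 443),
]
# Stateless: responses to clients need their own outbound rule covering
# the ephemeral ports the clients connect from.
outbound = [
    NaclRule(100, "allow", "0.0.0.0/0", 1024, 65535),
]
```

Because rule 100 is evaluated before rule 200, a request from `203.0.113.50` on port 443 is denied even though rule 200 would have allowed it.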

A common scenario question will involve a multi-tier application, such as a web server, application server, and database server, each in a different subnet, and ask how to configure the security controls. The correct approach is to use Security Groups that reference one another to allow traffic between the tiers (for example, the application server Security Group allows inbound traffic only from the web server Security Group, and the database Security Group allows inbound traffic only from the application server Security Group), and to use NACLs for broader, stateless filtering, such as blocking known malicious IP addresses from entering your subnets. Understanding their stateful versus stateless nature is key to answering correctly.
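
The tier-to-tier pattern can be modeled as Security Groups whose inbound rules name another Security Group as the source. In this sketch the group IDs are hypothetical placeholders, the ports (8080 for the app tier, 3306 for MySQL) are assumptions, and the web tier's internet-facing rule is omitted. Because Security Groups are stateful, only the inbound side needs a rule.

```python
# Hypothetical security group IDs; each maps to its allowed inbound
# (port, source security group) pairs.
SECURITY_GROUPS = {
    "sg-app": {(8080, "sg-web")},   # app tier accepts traffic only from the web tier
    "sg-db": {(3306, "sg-app")},    # database accepts traffic only from the app tier
}

def inbound_allowed(target_sg: str, port: int, source_sg: str) -> bool:
    """True if target_sg has a rule admitting source_sg on this port.
    Stateful: the reply is allowed automatically, so no outbound check."""
    return (port, source_sg) in SECURITY_GROUPS.get(target_sg, set())
```

With this chaining, the web tier cannot reach the database directly, so a compromised web server has no network path to port 3306.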

Encryption Fundamentals with AWS Key Management Service (KMS)

Protecting data at rest and in transit is a critical security requirement. AWS Key Management Service (KMS) is a managed service that makes it easy to create and control the encryption keys used to encrypt your data. It is integrated with most AWS services that store data, such as S3, EBS, and RDS, simplifying the process of applying encryption. One of the core concepts of KMS is envelope encryption. A KMS key can directly encrypt only small payloads (up to 4 KB), so rather than sending large amounts of data to KMS, you encrypt the data locally with a data key and use KMS only to protect that key, which is both more secure and more performant.

With envelope encryption, your data is encrypted using a unique data key. That data key is then itself encrypted by a master key that is stored and managed within KMS. This encrypted data key is stored alongside your encrypted data. When you need to decrypt your data, you first ask KMS to decrypt the data key using the master key, and then you use the plaintext data key to decrypt your data. This process provides an additional layer of security and allows for more granular control over your data.
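
The envelope flow above can be sketched locally. In real AWS, the KMS `GenerateDataKey` API returns both a plaintext and an encrypted copy of a data key, and the `Decrypt` API later recovers the plaintext key. Here a local `MASTER_KEY` variable stands in for the KMS key, and a deliberately toy SHA-256 keystream cipher stands in for a real cipher such as AES-256-GCM. The cipher is for illustration only and must not be used for real data.

```python
import hashlib
import secrets

def toy_stream_cipher(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """XOR data with a SHA-256 counter-mode keystream. ILLUSTRATION ONLY:
    unauthenticated stand-in for AES-256-GCM. Encrypt and decrypt are identical."""
    out = bytearray()
    for start in range(0, len(data), 32):
        counter = (start // 32).to_bytes(8, "big")
        keystream = hashlib.sha256(key + nonce + counter).digest()
        out.extend(b ^ k for b, k in zip(data[start:start + 32], keystream))
    return bytes(out)

# Stand-in for a KMS key; in real KMS this key never leaves the service.
MASTER_KEY = secrets.token_bytes(32)

def envelope_encrypt(plaintext: bytes) -> dict:
    data_key = secrets.token_bytes(32)  # fresh data key for this payload
    data_nonce, key_nonce = secrets.token_bytes(12), secrets.token_bytes(12)
    return {
        "ciphertext": toy_stream_cipher(data_key, data_nonce, plaintext),
        "data_nonce": data_nonce,
        # Only the ENCRYPTED data key is stored alongside the data.
        "encrypted_data_key": toy_stream_cipher(MASTER_KEY, key_nonce, data_key),
        "key_nonce": key_nonce,
    }

def envelope_decrypt(envelope: dict) -> bytes:
    # Step 1: "ask KMS" to decrypt the data key with the master key.
    data_key = toy_stream_cipher(MASTER_KEY, envelope["key_nonce"],
                                 envelope["encrypted_data_key"])
    # Step 2: decrypt the payload locally with the plaintext data key.
    return toy_stream_cipher(data_key, envelope["data_nonce"], envelope["ciphertext"])
```

In practice, the plaintext data key should be discarded from memory as soon as the encryption or decryption step is finished.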

KMS keys (formerly called customer master keys, or CMKs, a term you may still see in exam questions) come in different types. AWS managed keys are created and managed by AWS on your behalf, while customer managed keys give you full control over their lifecycle, including their key policy and rotation schedule. The key policy is the primary access control mechanism for a KMS key and is crucial for defining who can use or manage it. Because the key policies of AWS managed keys cannot be edited, cross-account scenarios require customer managed keys. Many exam questions revolve around configuring these policies, especially in scenarios involving cross-account access to encrypted resources.
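
For orientation, this is roughly what a customer managed key policy granting cross-account decryption looks like. Both account IDs are hypothetical. The first statement mirrors the default statement that lets the owning account's IAM policies control access to the key; the second lets a partner account use the key to decrypt. The partner account must additionally grant its own users or roles permission through an IAM policy before they can use the key.

```python
import json

OWNER_ACCOUNT = "111122223333"    # hypothetical account that owns the key
PARTNER_ACCOUNT = "444455556666"  # hypothetical account that needs to decrypt

key_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            # Without a statement like this, IAM policies in the owning
            # account cannot grant access to the key.
            "Sid": "EnableOwnerAccountIAMPolicies",
            "Effect": "Allow",
            "Principal": {"AWS": f"arn:aws:iam::{OWNER_ACCOUNT}:root"},
            "Action": "kms:*",
            "Resource": "*",  # in a key policy, "*" means "this key"
        },
        {
            "Sid": "AllowPartnerDecrypt",
            "Effect": "Allow",
            "Principal": {"AWS": f"arn:aws:iam::{PARTNER_ACCOUNT}:root"},
            "Action": ["kms:Decrypt", "kms:DescribeKey"],
            "Resource": "*",
        },
    ],
}

print(json.dumps(key_policy, indent=2))
```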

Core Compute with Amazon EC2

Amazon Elastic Compute Cloud (EC2) is one of the most fundamental services in AWS. It provides scalable computing capacity in the cloud, allowing you to launch virtual servers, known as instances. For the AWS Certified Solutions Architect - Associate exam, you need a solid understanding of EC2's various components and how to select the right configuration for a given workload. This starts with understanding the different instance families. Each family is optimized for specific types of tasks.

For example, General Purpose instances (like the T and M families) provide a balance of compute, memory, and networking resources, making them suitable for a wide variety of applications such as web servers or small databases. Compute Optimized instances (C family) are ideal for compute-intensive applications like scientific modeling or high-performance web servers. Memory Optimized instances (R and X families) are designed for workloads that process large data sets in memory, such as in-memory databases or big data analytics. Choosing the right family is the first step in designing a high-performing and cost-effective architecture.

Understanding EC2 Instance Purchasing Options

Beyond instance types, a crucial topic for any architect is choosing the correct purchasing option, as this has a massive impact on cost. The most flexible option is On-Demand, where you pay for compute capacity by the hour or second with no long-term commitments. This is perfect for applications with unpredictable workloads or for development and testing environments. For steady-state, predictable workloads, you can achieve significant savings by using Reserved Instances (RIs) or Savings Plans. These involve committing to a certain amount of usage for a one- or three-year term.

Spot Instances are another powerful cost-saving option, offering discounts of up to 90% compared to On-Demand prices. They let you use spare EC2 capacity at the current Spot price, which AWS adjusts gradually based on long-term supply and demand; you no longer need to bid. The major caveat is that AWS can reclaim this capacity with only a two-minute warning when it needs the capacity back. This makes Spot Instances ideal for fault-tolerant and flexible workloads, such as big data analysis, batch processing, or containerized applications that can handle interruptions. The exam will often present scenarios and ask you to choose the most cost-effective purchasing model that meets the application's availability requirements.
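
A quick back-of-the-envelope comparison shows why the purchasing model matters. The $0.10 hourly rate is a hypothetical figure, and the Spot number uses the best-case 90% discount; actual On-Demand and Spot prices vary by instance type, Region, and time.

```python
# Hypothetical On-Demand price for illustration; real prices vary by
# instance type, Region, and operating system.
ON_DEMAND_HOURLY_USD = 0.10
HOURS_PER_MONTH = 730  # a common approximation (8,760 hours / 12)

def monthly_cost(hourly_rate: float, discount: float = 0.0,
                 hours: float = HOURS_PER_MONTH) -> float:
    """Monthly cost of one instance at a fractional discount off On-Demand."""
    return round(hourly_rate * (1 - discount) * hours, 2)

on_demand = monthly_cost(ON_DEMAND_HOURLY_USD)
# 90% is the best case; actual Spot discounts vary by instance type and over time.
spot_best_case = monthly_cost(ON_DEMAND_HOURLY_USD, discount=0.90)
print(f"On-Demand: ${on_demand:.2f}, Spot (best case): ${spot_best_case:.2f}")
```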

Essential EC2 Components: AMIs and Instance Metadata

When you launch an EC2 instance, you must specify an Amazon Machine Image (AMI). An AMI provides the information required to launch an instance, including the operating system, an application server, and any required applications. AWS provides many pre-configured AMIs, but you can also create your own custom AMIs. Creating a custom AMI is useful when you have a standard configuration with software and settings that you need to replicate across many instances. This can significantly speed up the launch time for new instances, which is beneficial for auto-scaling scenarios.

Another key feature to understand is instance metadata and user data. The instance metadata service (IMDS) lets software running on an EC2 instance learn about that instance without calling external APIs. An instance can retrieve information such as its instance ID, public IP address, and the temporary credentials of its IAM role from the link-local address 169.254.169.254. User data is a script that you can provide when launching an instance. By default, it runs automatically on the instance's first boot, making it a powerful tool for bootstrapping tasks such as installing software, applying patches, or downloading application code.
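
With IMDSv2, the session-oriented version of the metadata service, a client first requests a session token with a PUT and then presents that token on each GET. The sketch below builds those two requests with the standard library; `fetch_metadata` only works from inside an EC2 instance and will time out elsewhere. The 6-hour (21,600-second) TTL is the maximum allowed and is an arbitrary choice here.

```python
import urllib.request

IMDS_BASE = "http://169.254.169.254/latest"  # link-local metadata endpoint

def token_request(ttl_seconds: int = 21600) -> urllib.request.Request:
    """IMDSv2 step 1: PUT a session-token request with a TTL header."""
    return urllib.request.Request(
        f"{IMDS_BASE}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )

def metadata_request(path: str, token: str) -> urllib.request.Request:
    """IMDSv2 step 2: GET a metadata path, presenting the session token."""
    return urllib.request.Request(
        f"{IMDS_BASE}/meta-data/{path}",
        headers={"X-aws-ec2-metadata-token": token},
    )

def fetch_metadata(path: str, timeout: float = 2.0) -> str:
    """Only works from inside an EC2 instance; elsewhere the call times out."""
    with urllib.request.urlopen(token_request(), timeout=timeout) as resp:
        token = resp.read().decode()
    with urllib.request.urlopen(metadata_request(path, token), timeout=timeout) as resp:
        return resp.read().decode()
```

On an instance, `fetch_metadata("instance-id")` returns the instance ID.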

Introduction to Resilient Architecture Design

Welcome to the second part of our series dedicated to preparing you for the AWS Certified Solutions Architect - Associate exam. In the previous installment, we established a strong foundation in core security and compute services. Now, we will build upon that knowledge to tackle another critical domain: designing resilient architectures. Resilience is the ability of a system to recover from failures and continue to function. In the cloud, this means designing systems that can withstand component failures, from a single EC2 instance to an entire data center, without impacting the end-user experience.

This section will delve into the key AWS services and design patterns that enable you to build highly available and fault-tolerant applications. We will explore how to distribute traffic effectively using Elastic Load Balancing, how to scale your resources dynamically with Auto Scaling, and how to manage your global traffic with Amazon Route 53. We will also cover the foundational networking concepts within Amazon VPC and how to accelerate content delivery with Amazon CloudFront. A deep understanding of these topics is crucial for answering the scenario-based questions you will encounter on the exam.

Achieving High Availability with Elastic Load Balancing (ELB)

Elastic Load Balancing automatically distributes incoming application traffic across multiple targets, such as EC2 instances, containers, and IP addresses. By spreading the load, ELB increases the fault tolerance of your applications: if a target fails its health checks, the load balancer stops sending traffic to it and routes requests to the remaining healthy targets. The AWS Certified Solutions Architect - Associate exam expects you to know the different types of load balancers and when to use each one. The main types are the Application Load Balancer (ALB), the Network Load Balancer (NLB), and the previous-generation Classic Load Balancer (CLB); a fourth type, the Gateway Load Balancer (GWLB), is used to deploy and scale third-party virtual appliances such as firewalls.

The Application Load Balancer operates at the application layer (Layer 7) and is best suited for load balancing HTTP and HTTPS traffic. It can make routing decisions based on the content of the request, such as the URL path or hostname, which lets you route traffic to different groups of servers. For example, requests for /api could go to one set of instances, while requests for /images go to another. The Network Load Balancer operates at the transport layer (Layer 4) and is capable of handling millions of requests per second with ultra-low latency. It's ideal for TCP, UDP, and TLS traffic where extreme performance is required.
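
The path-based example can be sketched as an ordered list of listener rules. ALB evaluates rules in priority order, lowest number first, and falls through to a default action when nothing matches. The target group names are hypothetical, and only path conditions are modeled; real rules can also match on host, headers, query strings, and more.

```python
import fnmatch

# Hypothetical listener rules: (priority, path pattern, target group).
RULES = [
    (10, "/api/*", "api-servers"),
    (20, "/images/*", "image-servers"),
]
DEFAULT_TARGET_GROUP = "web-servers"  # the listener's default action

def route(path: str) -> str:
    """Return the target group of the first matching rule by priority."""
    for _priority, pattern, target_group in sorted(RULES):
        if fnmatch.fnmatchcase(path, pattern):  # ALB path patterns are case-sensitive
            return target_group
    return DEFAULT_TARGET_GROUP
```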

The Classic Load Balancer is the previous generation load balancer and is no longer recommended for new applications, but you should still be aware of its existence for the exam. A key feature of ALBs and NLBs is their integration with other AWS services. They can balance traffic across multiple Availability Zones (AZs), which is fundamental to building a highly available architecture. You will frequently see questions that require you to combine an ELB with an Auto Scaling group to create a self-healing and scalable application tier.

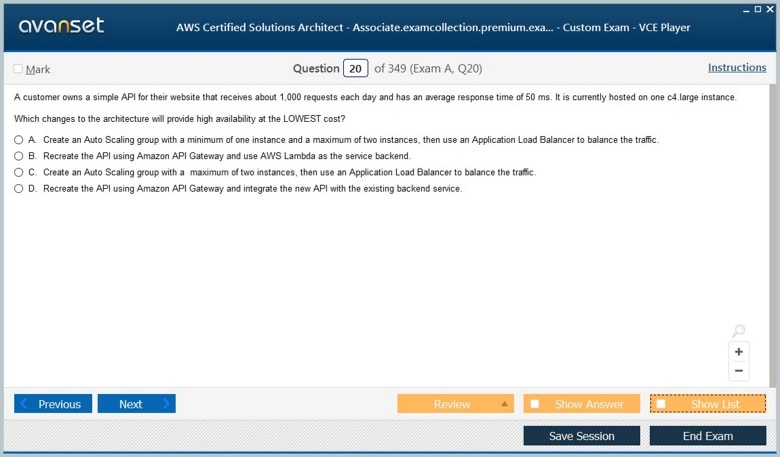

Dynamic Scaling with Auto Scaling Groups (ASG)

An Auto Scaling group (ASG) helps you ensure that you have the correct number of EC2 instances available to handle the load for your application. An ASG is a collection of EC2 instances managed as a unit, for which you define a minimum, maximum, and desired capacity. The ASG maintains the desired number of instances by performing periodic health checks: if an instance is found to be unhealthy, the ASG terminates it and launches a replacement. This self-healing capability is a cornerstone of resilient design on AWS.

Beyond maintaining a fixed number of instances, the true power of Auto Scaling lies in its ability to scale dynamically. You can configure scaling policies that automatically increase or decrease the number of instances in your group in response to changing demand. For example, you can set up a policy to add instances when the average CPU utilization across the group exceeds 70% for a sustained period. Conversely, you can set a policy to remove instances when CPU utilization drops, which helps you optimize costs by not paying for idle resources.

There are different types of scaling policies you should be familiar with for the exam. Target tracking scaling is the simplest, where you set a target value for a specific metric, like average CPU utilization, and the ASG adjusts the number of instances to keep the metric at that target value. Step scaling and simple scaling allow for more customized responses to alarms. A common scenario involves an application that experiences predictable traffic spikes at certain times. In this case, you could use scheduled scaling to proactively increase capacity before the anticipated spike occurs.
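
To build intuition for target tracking, a simple proportional model helps: if the per-instance average metric is above the target, capacity grows roughly in proportion to the overshoot. This is an approximation only. AWS does not publish the exact algorithm, and the sketch ignores instance warm-up, cooldowns, and scale-in protection.

```python
import math

def target_tracking_capacity(current: int, metric: float, target: float,
                             min_size: int, max_size: int) -> int:
    """Proportional estimate of the capacity needed to bring a per-instance
    average metric back to `target`, clamped to the group's min/max size."""
    desired = math.ceil(current * metric / target)
    return max(min_size, min(desired, max_size))
```

For example, 4 instances averaging 90% CPU against a 60% target suggests about 6 instances, since the total load (4 × 90 = 360) spread at 60% per instance needs 6.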

Global Traffic Management with Amazon Route 53

Amazon Route 53 is a highly available and scalable cloud Domain Name System (DNS) web service. It is designed to give developers and businesses an extremely reliable and cost-effective way to route end users to internet applications. Beyond standard DNS resolution, Route 53 offers powerful traffic routing policies that are essential for building resilient and high-performing global applications. You must know these policies for the AWS Certified Solutions Architect - Associate exam, as they are frequently tested in scenarios involving disaster recovery and latency reduction.

The different routing policies include Simple, Failover, Geolocation, Geoproximity, Latency, and Weighted. Simple routing is the most basic, allowing you to route traffic to a single resource. Failover routing lets you configure an active-passive setup. Route 53 will monitor the health of your primary endpoint and, if it becomes unhealthy, will automatically route all traffic to your secondary, standby endpoint. This is a simple yet effective pattern for disaster recovery. Latency-based routing directs users to the AWS region that provides the lowest possible latency, improving application performance for a global user base.

Geolocation routing allows you to route traffic based on the geographic location of your users, which is useful for serving localized content or enforcing data sovereignty requirements. Weighted routing lets you distribute traffic across multiple resources in proportions that you specify. This can be used for A/B testing or for blue-green deployments where you gradually shift traffic from an old version of your application to a new one. Understanding the specific use case for each routing policy is key to selecting the correct answer in the exam.
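
Weighted routing returns each record in proportion to its weight divided by the sum of all weights. The sketch below models a hypothetical blue/green split of 90/10 and simulates DNS answers. Keep in mind that weights govern DNS responses, not exact request counts, because resolvers and clients cache answers.

```python
import random
from collections import Counter

# Hypothetical blue/green records and weights.
RECORDS = {"blue": 90, "green": 10}

def share(records: dict[str, int], name: str) -> float:
    """Expected fraction of DNS answers for one record: weight / total weight."""
    return records[name] / sum(records.values())

def simulate(records: dict[str, int], queries: int, seed: int = 0) -> Counter:
    """Draw one weighted answer per query using a seeded RNG."""
    rng = random.Random(seed)
    names, weights = zip(*records.items())
    return Counter(rng.choices(names, weights=weights, k=queries))
```

Shifting traffic gradually is then just a matter of updating the weights, for example from 90/10 to 50/50 and finally 0/100.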

Networking Foundations with Amazon VPC

A Virtual Private Cloud (VPC) is your own logically isolated section of the AWS cloud where you can launch AWS resources in a virtual network that you define. A strong grasp of VPC fundamentals is absolutely essential for the exam. This includes understanding how to structure your VPC using subnets. A subnet is a range of IP addresses in your VPC, and all resources must be launched into a subnet. Subnets are tied to a single Availability Zone, which means that to build a highly available application, you must place your resources in subnets across multiple AZs.

Subnets can be configured as either public or private. A public subnet has a route to an Internet Gateway (IGW), which allows resources within it to communicate directly with the internet. A private subnet does not have a direct route to the internet. To allow instances in a private subnet to reach the internet for tasks like software updates, you must use a Network Address Translation (NAT) Gateway or a NAT Instance. The NAT Gateway is a managed AWS service and is the preferred, more resilient option. It sits in a public subnet and provides outbound-only internet access for instances in private subnets. Because each NAT Gateway resides in a single Availability Zone, a highly available design places one NAT Gateway in each AZ.
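
A typical layout pairs one public and one private subnet in each AZ. This sketch carves /24 subnets out of a hypothetical 10.0.0.0/16 VPC using Python's `ipaddress` module. The AZ names are examples, route tables are not modeled (public subnets would route 0.0.0.0/0 to the internet gateway, private subnets to a NAT gateway), and AWS reserves five addresses in every subnet, which reduces the usable count.

```python
import ipaddress

VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")  # hypothetical VPC range
AZS = ["us-east-1a", "us-east-1b"]              # example AZ names
AWS_RESERVED_PER_SUBNET = 5                     # AWS reserves 5 IPs per subnet

def plan_subnets(vpc, azs, new_prefix=24):
    """Assign one public and one private subnet per AZ, in order."""
    blocks = vpc.subnets(new_prefix=new_prefix)
    plan = []
    for az in azs:
        for tier in ("public", "private"):
            plan.append((az, tier, next(blocks)))
    return plan

def usable_hosts(subnet):
    return subnet.num_addresses - AWS_RESERVED_PER_SUBNET

for az, tier, net in plan_subnets(VPC_CIDR, AZS):
    print(f"{az} {tier:7} {net} ({usable_hosts(net)} usable)")
```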

Communication between different VPCs can be achieved using VPC Peering. A VPC peering connection is a networking connection between two VPCs that enables you to route traffic between them using private IPv4 addresses or IPv6 addresses. It is not a transitive relationship; if VPC A is peered with VPC B, and VPC B is peered with VPC C, VPC A cannot communicate with VPC C unless a direct peering connection is established. For more complex network topologies involving many VPCs, an AWS Transit Gateway acts as a central hub, simplifying connectivity and management.
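
The non-transitive rule can be expressed as a simple graph check: with peering, two VPCs communicate only if a direct peering connection exists between them. The VPC names below are hypothetical, and the Transit Gateway check is deliberately simplified, assuming all attachments share a route table that propagates every attachment's routes.

```python
# Hypothetical peering connections: A <-> B and B <-> C.
PEERINGS = {frozenset({"A", "B"}), frozenset({"B", "C"})}
TGW_ATTACHMENTS = {"A", "B", "C"}  # VPCs attached to a Transit Gateway

def can_route_via_peering(src: str, dst: str) -> bool:
    """Peering is non-transitive: only a direct connection counts."""
    return frozenset({src, dst}) in PEERINGS

def can_route_via_tgw(src: str, dst: str) -> bool:
    """Simplified hub model: any two attached VPCs can reach each other."""
    return src in TGW_ATTACHMENTS and dst in TGW_ATTACHMENTS
```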

Accelerating Content Delivery with Amazon CloudFront

Amazon CloudFront is a fast content delivery network (CDN) service that securely delivers data, videos, applications, and APIs to customers globally with low latency and high transfer speeds. It works by caching copies of your content (like images, videos, or static web pages) in edge locations that are located close to your end-users. When a user requests your content, CloudFront directs the request to the edge location that can best serve the user, which results in faster delivery times. This is a key component for designing high-performing architectures.

CloudFront integrates seamlessly with other AWS services. A common architecture involves using an S3 bucket as the origin for your static content. You can configure CloudFront to cache the content from this bucket. For dynamic content, you can use an Application Load Balancer or an EC2 instance as the origin. CloudFront can also improve security. You can configure it to require that users access your content using HTTPS, and you can use AWS WAF (Web Application Firewall) with CloudFront to protect your application from common web exploits.

One important feature to know for the exam is the Origin Access Identity (OAI). If you are using an S3 bucket as an origin, you typically want to prevent users from bypassing CloudFront and accessing the files directly through the bucket's S3 URL. An OAI is a special CloudFront identity that you associate with your distribution. You then create an S3 bucket policy that allows access to the objects only from that specific OAI, effectively locking down your bucket so that content can be served only through CloudFront. AWS now recommends Origin Access Control (OAC), the successor to OAI, for new distributions, but the principle of granting bucket access only to CloudFront is the same.
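
A bucket policy for the OAI pattern grants `s3:GetObject` only to the OAI's principal. The OAI ID and bucket name here are hypothetical. For the lockdown to be effective, no other statement or ACL should grant public read access, which S3 Block Public Access helps enforce.

```python
import json

OAI_ID = "E2EXAMPLE1234"        # hypothetical Origin Access Identity ID
BUCKET = "example-static-site"  # hypothetical bucket name

oai_bucket_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "AllowCloudFrontOAIReadOnly",
            "Effect": "Allow",
            # Only this CloudFront identity may read objects.
            "Principal": {
                "AWS": f"arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity {OAI_ID}"
            },
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
        }
    ],
}

print(json.dumps(oai_bucket_policy, indent=2))
```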

Designing for Multi-AZ and Multi-Region Architectures

A core principle of resilience on AWS is designing for failure by distributing your architecture across multiple Availability Zones. An AZ is one or more discrete data centers with redundant power, networking, and connectivity within an AWS Region. They are physically separate, so a failure in one AZ is unlikely to impact others. By deploying your application across at least two AZs, using services like ELB and ASG, you can create a highly available system that can withstand the failure of an entire data center. This is a common pattern tested on the AWS Certified Solutions Architect - Associate exam.

For even greater resilience and disaster recovery, you can design a multi-region architecture. This involves replicating your infrastructure and data to a second AWS Region. In the event of a regional outage, you can fail over your traffic to the secondary region. This approach increases complexity and cost but provides the highest level of availability. You can use services like Route 53's failover routing to manage the traffic redirection. You might also use services with built-in cross-region replication capabilities, such as S3 Cross-Region Replication or DynamoDB Global Tables, to keep your data synchronized between regions.

The exam will present scenarios with specific Recovery Time Objective (RTO) and Recovery Point Objective (RPO) requirements. RTO is the maximum acceptable downtime for your application, while RPO is the maximum acceptable amount of data loss. Your choice of a single-AZ, multi-AZ, or multi-region architecture will depend heavily on these requirements. A multi-region active-active setup offers the lowest RTO and RPO but is also the most complex and expensive to implement. You must be able to weigh these trade-offs to select the most appropriate solution.
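
One way to practice this trade-off is to treat each design as a set of achievable RPO, RTO, cost, and failure-scope properties, then pick the cheapest one that satisfies the requirements. The figures below are hypothetical and chosen only for illustration; real values depend on the workload and services involved. Note that a multi-AZ design cannot satisfy a requirement to survive a full Regional outage, however good its RTO.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class DrOption:
    name: str
    rpo_minutes: float            # worst-case data loss the design allows
    rto_minutes: float            # worst-case time to restore service
    relative_cost: int            # 1 = cheapest; illustrative ranking only
    survives_region_outage: bool

# Hypothetical figures for illustration only.
OPTIONS = [
    DrOption("cross-Region backups, restore on demand", 24 * 60, 8 * 60, 1, True),
    DrOption("multi-AZ with synchronous standby", 0, 5, 2, False),
    DrOption("multi-Region active-active", 1, 1, 3, True),
]

def cheapest_meeting(rpo_minutes: float, rto_minutes: float,
                     need_region_resilience: bool = False,
                     options=OPTIONS) -> Optional[DrOption]:
    """Lowest-cost option whose RPO, RTO, and failure scope fit the requirements."""
    viable = [
        o for o in options
        if o.rpo_minutes <= rpo_minutes
        and o.rto_minutes <= rto_minutes
        and (o.survives_region_outage or not need_region_resilience)
    ]
    return min(viable, key=lambda o: o.relative_cost, default=None)
```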

Introduction to Data Persistence on AWS

Welcome to the third part of our comprehensive guide for the AWS Certified Solutions Architect - Associate examination. Having covered foundational security, compute, and the principles of resilient architecture, we now focus on another critical area: data storage and databases. How you store, manage, and access data is a defining characteristic of any well-architected system. AWS provides a vast and specialized portfolio of storage and database services, each designed to meet different technical and business requirements. A significant portion of the exam will test your ability to choose the right service for the right job.

This installment will provide a detailed exploration of AWS's primary storage solutions, including the versatile Amazon S3, block storage with Amazon EBS, and file storage with Amazon EFS. We will also delve into archival solutions like Amazon S3 Glacier. Following that, we will navigate the diverse landscape of AWS databases, comparing and contrasting relational databases like Amazon RDS and Amazon Aurora with NoSQL offerings like Amazon DynamoDB. By the end of this part, you will have a clear understanding of the features, use cases, and performance characteristics of these essential services.

Versatile Object Storage with Amazon S3

Amazon Simple Storage Service (S3) is a secure, durable, and highly scalable object storage service. You can use S3 to store and retrieve any amount of data, at any time, from anywhere on the web. It is one of the most fundamental AWS services, and you can expect numerous questions about it. S3 stores data as objects within containers called buckets. Each object consists of the data itself, a key (which is its name), and metadata. A key concept to master is that S3 provides a flat structure; there are no directories, although the use of prefixes in object keys can simulate a folder hierarchy.
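
The folder illusion comes from listing with a prefix and a delimiter. In the real API, `ListObjectsV2` accepts `Prefix` and `Delimiter` parameters and returns matching keys as `Contents` and rolled-up "folders" as `CommonPrefixes`. The function below is a toy local model of that grouping over a hypothetical key set, not the S3 implementation.

```python
def list_with_delimiter(keys, prefix="", delimiter="/"):
    """Toy model of S3 listing: keys under `prefix` that contain `delimiter`
    after it are rolled up into common prefixes; the rest are objects."""
    objects, common_prefixes = [], set()
    for key in sorted(keys):
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        if delimiter in rest:
            common_prefixes.add(prefix + rest.split(delimiter, 1)[0] + delimiter)
        else:
            objects.append(key)
    return objects, sorted(common_prefixes)

# Hypothetical flat key space; no directories actually exist.
KEYS = ["logs/2024/01/app.log", "logs/2024/02/app.log", "logs/readme.txt", "index.html"]
```

Listing `KEYS` with prefix `"logs/"` returns the object `logs/readme.txt` and the common prefix `logs/2024/`, which a console would display as a subfolder.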

S3 is designed for 99.999999999% (11 nines) of durability, which means your data is extremely safe. This is achieved by storing your objects redundantly across multiple devices in multiple facilities within an AWS Region. However, it's important to distinguish durability from availability: S3 Standard is designed for 99.99% availability over a given year, and its Service Level Agreement (SLA) commits to 99.9%. For the exam, you must be intimately familiar with the different S3 storage classes. Each class is designed for a specific access pattern and comes with a different price point and availability SLA.
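
To make the two figures concrete, treat the durability number as an annual probability that any given object is lost, which matches how AWS illustrates it. For a hypothetical bucket of $N = 10^{7}$ objects:

$$
\text{expected objects lost per year} = N \times (1 - 0.99999999999) = 10^{7} \times 10^{-11} = 10^{-4}
$$

That is roughly one object every 10,000 years. Availability measures something different: a 99.99% design target still permits up to

$$
(1 - 0.9999) \times 365 \times 24 \times 60 \approx 52.6 \text{ minutes}
$$

of unavailability per year, during which the data is intact but may not be reachable.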

The S3 Standard storage class is for frequently accessed data that requires low latency. S3 Intelligent-Tiering automatically moves data to the most cost-effective access tier based on usage patterns, without performance impact or operational overhead. S3 Standard-Infrequent Access (S3 Standard-IA) is for data that is accessed less frequently but requires rapid access when needed. S3 One Zone-IA is even lower cost but stores data in only a single Availability Zone, making it suitable for non-critical, reproducible data. Understanding these tiers is crucial for cost-optimization questions.

Archival Storage with Amazon S3 Glacier

For long-term data archival and digital preservation, AWS offers the Amazon S3 Glacier storage classes. These are extremely low-cost storage options designed for data that is accessed very rarely. Amazon S3 Glacier Instant Retrieval offers the lowest-cost storage for data that is archived but still needs millisecond retrieval, like medical images or news media assets. Amazon S3 Glacier Flexible Retrieval is a low-cost option for archives where retrieval times of minutes or hours are acceptable. This is a great fit for backup and disaster recovery use cases.

The deepest and lowest-cost storage class is Amazon S3 Glacier Deep Archive. It is designed for data that is accessed once or twice a year, with standard retrievals completing within 12 hours. This is an ideal solution for replacing on-premises magnetic tape libraries and meeting long-term regulatory compliance requirements. A key feature you must understand for the exam is S3 Lifecycle policies. These policies allow you to automate the transition of your objects between different storage classes over time. For example, you can create a rule to move objects from S3 Standard to S3 Standard-IA after 30 days, and then to S3 Glacier Deep Archive after 180 days.
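
The example rule can be written in the S3 lifecycle configuration format, which a client such as boto3's `put_bucket_lifecycle_configuration` accepts as its `LifecycleConfiguration` argument. The rule ID is hypothetical, and the helper `storage_class_for_age` is an illustrative model that ignores the fact that S3 applies transitions asynchronously, so real transitions may lag the stated day count.

```python
import json

# Lifecycle rule matching the example in the text; the rule ID is hypothetical.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "archive-after-180-days",
            "Status": "Enabled",
            "Filter": {"Prefix": ""},  # empty prefix applies the rule to all objects
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 180, "StorageClass": "DEEP_ARCHIVE"},
            ],
        }
    ]
}

def storage_class_for_age(age_days: int, rule: dict = None) -> str:
    """Storage class an object of a given age would have under the rule."""
    rule = rule or lifecycle_configuration["Rules"][0]
    current = "STANDARD"
    for t in sorted(rule["Transitions"], key=lambda t: t["Days"]):
        if age_days >= t["Days"]:
            current = t["StorageClass"]
    return current

print(json.dumps(lifecycle_configuration, indent=2))
```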

Block and File Storage: EBS and EFS

While S3 is for object storage, Amazon Elastic Block Store (EBS) provides persistent block-level storage volumes for use with EC2 instances. Think of an EBS volume as a virtual hard drive in the cloud. You attach it to an EC2 instance, and it appears as a local block device. Because it is block storage, you can format it with a file system and use it for anything you would use a hard drive for, such as installing an operating system, running a database, or storing application files. EBS volumes are tied to a specific Availability Zone and, with the exception of io1 and io2 volumes that have Multi-Attach enabled, can be attached to only one EC2 instance at a time.

EBS comes in several volume types optimized for different workloads, which you need to know for the exam. General Purpose SSD volumes (gp2 and gp3) provide a balance of price and performance and are suitable for a wide variety of transactional workloads, such as virtual desktops and system boot volumes. Provisioned IOPS SSD volumes (io1 and io2) are designed for I/O-intensive workloads, such as large relational databases, where you need consistent and high performance. Throughput Optimized HDD (st1) and Cold HDD (sc1) are lower-cost magnetic storage options for large, sequential workloads like big data processing or log streaming.
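
One concrete difference between the General Purpose types is how baseline performance is set. gp2 scales baseline IOPS with volume size (3 IOPS per GiB, with a floor of 100 and a ceiling of 16,000; volumes under 1,000 GiB can also burst to 3,000 IOPS), whereas gp3 includes a baseline of 3,000 IOPS and 125 MiB/s at any size. The sketch computes baselines only and does not model gp2 burst credits.

```python
def gp2_baseline_iops(size_gib: int) -> int:
    """gp2: 3 IOPS per GiB, with a floor of 100 and a ceiling of 16,000."""
    return max(100, min(3 * size_gib, 16_000))

GP3_BASELINE_IOPS = 3_000             # included at any gp3 volume size
GP3_BASELINE_THROUGHPUT_MIBS = 125    # included at any gp3 volume size

for size in (20, 500, 6000):
    print(f"{size} GiB: gp2 {gp2_baseline_iops(size)} IOPS vs gp3 {GP3_BASELINE_IOPS} IOPS")
```

This is why a small gp3 volume often outperforms a gp2 volume of the same size without extra provisioning.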

Amazon Elastic File System (EFS) provides a simple, scalable, fully managed elastic NFS file system. Unlike an EBS volume, which is normally attached to a single instance in a single Availability Zone, an EFS file system can be mounted by thousands of EC2 instances concurrently, even across different Availability Zones. This makes EFS an ideal solution for use cases where multiple servers need to access and modify the same set of files, such as content management systems, web serving, or shared code repositories. EFS is elastic and automatically grows and shrinks as you add and remove files, so you don't have to provision storage in advance.

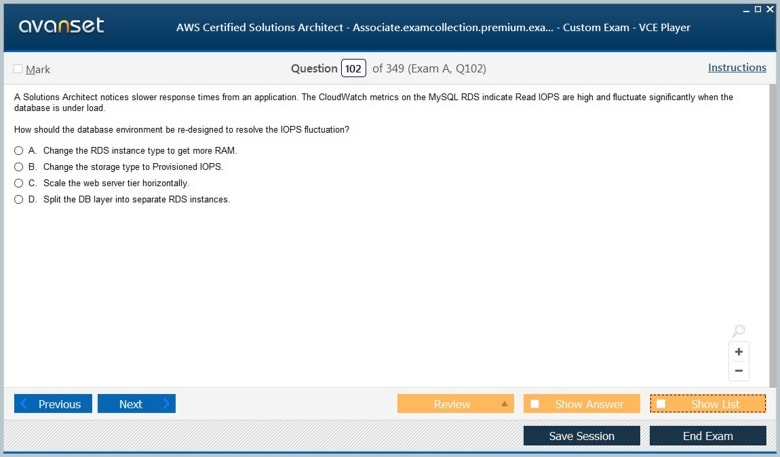

Relational Databases with Amazon RDS and Aurora

Amazon Relational Database Service (RDS) is a managed service that makes it easy to set up, operate, and scale a relational database in the cloud. It supports several popular database engines, including MySQL, PostgreSQL, MariaDB, Oracle, and Microsoft SQL Server. RDS handles time-consuming database administration tasks like patching, backups, and provisioning. This allows you to focus on your application rather than managing the database infrastructure. A key feature of RDS for resilience is its Multi-AZ deployment option.

When you provision an RDS instance with Multi-AZ, AWS automatically creates a synchronous standby replica of your database in a different Availability Zone. In the event of an infrastructure failure or during a database maintenance window, RDS automatically fails over to the standby replica, ensuring high availability for your database tier. For read-heavy applications, you can use RDS Read Replicas. These are asynchronous copies of your primary database that you can create to offload read traffic, thereby improving the performance of your main database.
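To make the Multi-AZ versus Read Replica distinction concrete, here is a minimal sketch of application-side routing. It assumes hypothetical endpoint names and a crude rule that statements starting with `SELECT` are reads. Writes always go to the primary endpoint, and reads rotate across the replica endpoints.

```python
import itertools


class RdsEndpointRouter:
    """Send writes to the primary endpoint and spread reads across
    read-replica endpoints in round-robin order. Endpoint names are
    hypothetical placeholders."""

    def __init__(self, primary: str, replicas: list[str]):
        self.primary = primary
        # Fall back to the primary when no replicas are configured.
        self._replica_cycle = itertools.cycle(replicas or [primary])

    def endpoint_for(self, sql: str) -> str:
        # Crude classification: statements starting with SELECT are reads.
        is_read = sql.lstrip().upper().startswith("SELECT")
        return next(self._replica_cycle) if is_read else self.primary
```

Two design points follow from the text. Because Read Replicas are asynchronous, a read sent to a replica right after a write may return stale data. The Multi-AZ standby never appears in this routing at all: on failover, RDS points the same primary DNS endpoint at the promoted standby, so the application keeps using `primary` unchanged.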

Amazon Aurora is a MySQL and PostgreSQL-compatible relational database built for the cloud. It offers the performance and availability of high-end commercial databases at a fraction of the cost. Aurora's architecture separates compute and storage, making it highly durable and fault-tolerant. Its storage volume is spread across three Availability Zones, and it can tolerate the loss of an entire AZ without any data loss. Aurora also offers features like Global Database for low-latency global reads and disaster recovery, and Aurora Serverless, which automatically starts up, shuts down, and scales capacity based on your application's needs.
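The claim that Aurora survives the loss of an entire AZ can be checked with simple quorum arithmetic. This sketch assumes the storage layout AWS describes for Aurora: six copies of the data, two in each of three AZs, with a write quorum of 4 copies and a read quorum of 3. Because 4 + 3 > 6, every read quorum overlaps every write quorum.

```python
COPIES_PER_AZ = 2
NUM_AZS = 3
WRITE_QUORUM = 4  # copies that must acknowledge a write
READ_QUORUM = 3   # copies that must respond to a read


def quorum_status(failed_azs: int, other_failed_copies: int = 0) -> dict:
    """Return whether writes and reads can still reach quorum after losing
    `failed_azs` whole AZs plus `other_failed_copies` individual copies."""
    alive = COPIES_PER_AZ * (NUM_AZS - failed_azs) - other_failed_copies
    return {
        "copies_alive": alive,
        "can_write": alive >= WRITE_QUORUM,
        "can_read": alive >= READ_QUORUM,
    }
```

Losing one AZ leaves four copies, so both reads and writes continue. Losing an AZ plus one more copy leaves three, which still satisfies the read quorum, so the data remains readable and can be repaired.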

NoSQL Databases with Amazon DynamoDB

Amazon DynamoDB is a fully managed NoSQL key-value and document database that delivers single-digit millisecond performance at any scale. It is a perfect fit for applications that need consistent, low-latency performance, such as mobile apps, gaming, and IoT. Unlike relational databases that require you to define a schema upfront, DynamoDB only requires you to define the primary key attributes; every other attribute can vary from item to item, which provides more flexibility. A core concept in DynamoDB is its use of primary keys to uniquely identify each item in a table. The primary key can be a simple partition key or a composite key made up of a partition key and a sort key.
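A composite primary key is easiest to picture as a two-level lookup: the partition key selects a group of items, and the sort key orders the items within it. The in-memory model below is a hypothetical illustration of that idea, not the DynamoDB API; attribute names such as `PlayerId` and `GameTs` are made up for the example.

```python
from collections import defaultdict


class ToyTable:
    """In-memory model of a table with a composite primary key
    (partition key + sort key). Not the DynamoDB API."""

    def __init__(self, partition_key: str, sort_key: str):
        self.pk = partition_key
        self.sk = sort_key
        # partition key value -> {sort key value: item}
        self._partitions = defaultdict(dict)

    def put_item(self, item: dict) -> None:
        # Only the key attributes are mandatory; other attributes may differ
        # per item. Writing the same key pair again replaces the old item.
        self._partitions[item[self.pk]][item[self.sk]] = dict(item)

    def query(self, pk_value) -> list[dict]:
        # Return every item in one partition, ordered by sort key.
        items = self._partitions.get(pk_value, {})
        return [items[k] for k in sorted(items)]
```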

For performance, you need to understand DynamoDB's capacity modes: provisioned and on-demand. In provisioned throughput mode, you specify the number of reads and writes per second that you require for your application. This is a good option for applications with predictable traffic. In on-demand mode, DynamoDB automatically scales the throughput capacity to meet the demands of your workload, and you pay for what you use. This is ideal for applications with unpredictable or spiky traffic patterns.
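In provisioned mode, reads and writes are specified as read capacity units (RCUs) and write capacity units (WCUs). One RCU covers one strongly consistent read per second (or two eventually consistent reads) of an item up to 4 KB, and one WCU covers one write per second of an item up to 1 KB, with item sizes rounded up. The sketch below applies those rules; transactional reads and writes, which cost more, are deliberately left out.

```python
import math


def read_capacity_units(reads_per_sec: int, item_size_kb: float,
                        strongly_consistent: bool = True) -> int:
    """RCUs needed: each read consumes one unit per 4 KB (rounded up)."""
    units_per_read = math.ceil(item_size_kb / 4)
    rcu = reads_per_sec * units_per_read
    if not strongly_consistent:
        # Eventually consistent reads cost half as much.
        rcu = math.ceil(rcu / 2)
    return rcu


def write_capacity_units(writes_per_sec: int, item_size_kb: float) -> int:
    """WCUs needed: each write consumes one unit per 1 KB (rounded up)."""
    return writes_per_sec * math.ceil(item_size_kb)
```

For example, 100 strongly consistent reads per second of 6 KB items need 200 RCUs (each read rounds up to two 4 KB units), or 100 RCUs if eventually consistent reads are acceptable.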

To further improve read performance, DynamoDB offers an in-memory caching service called DynamoDB Accelerator (DAX). DAX is a fully managed, highly available cache that can reduce DynamoDB response times from milliseconds to microseconds, even at millions of requests per second. For applications with a global user base, DynamoDB Global Tables provide a multi-region, multi-active database solution. Global Tables automatically replicate your data across multiple AWS Regions, allowing you to deliver low-latency data access to your users no matter where they are located.
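The idea behind DAX's item cache is read-through caching: serve a recent copy from memory when one exists, and fall back to the database only on a miss. The sketch below is a generic illustration of that pattern, not the DAX client API. The `loader` callable stands in for a DynamoDB read, and the 300-second TTL is an arbitrary choice for the example.

```python
import time


class ReadThroughCache:
    """Generic read-through cache with a per-item TTL. `loader` stands in
    for a database read; this is not the DAX client API."""

    def __init__(self, loader, ttl_seconds: float = 300.0, clock=time.monotonic):
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (value, expiry time)
        self.misses = 0

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]        # cache hit: no database round trip
        self.misses += 1
        value = self._loader(key)  # cache miss: read from the database
        self._entries[key] = (value, now + self._ttl)
        return value
```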

Choosing the Right Database

A common type of question on the AWS Certified Solutions Architect - Associate exam presents a scenario and asks you to select the most appropriate database service. To answer these correctly, you need a clear mental model of when to use each service. If the scenario involves structured data with complex queries, joins, and transactions (OLTP), a relational database like RDS or Aurora is usually the best choice. Look for keywords like "schema," "SQL," or "ACID compliance."

If the scenario describes a need for extreme scalability, low-latency key-value access, and a flexible schema (typical of web-scale, mobile, gaming, or IoT applications), then DynamoDB is likely the correct answer. Look for keywords like "millions of users," "single-digit millisecond latency," "key-value," or "semi-structured data." The decision between RDS and Aurora often comes down to performance, scalability, and availability requirements. Aurora is generally the better choice for new, demanding applications due to its superior performance and cloud-native architecture.

Introduction to Efficient Cloud Architectures

This is the fourth installment in our series designed to prepare you for the AWS Certified Solutions Architect - Associate exam. So far, we have built a solid understanding of security, resilience, storage, and databases. We will now explore two equally important aspects of modern cloud design: building cost-optimized and high-performing architectures using serverless and container technologies. A key responsibility of a solutions architect is not just to design functional systems, but to do so in the most economically efficient way possible. Furthermore, leveraging modern architectural patterns can lead to increased agility and scalability.

In this section, we will delve into the various AWS pricing models and the strategies you can employ to minimize your monthly bill. We will explore how to choose the right compute purchasing option for your workloads. Then, we will transition to the transformative world of serverless computing with AWS Lambda and Amazon API Gateway, understanding when and why to choose this approach over traditional server-based models. We will also cover AWS's container services, including Amazon ECS and AWS Fargate, which offer another powerful way to deploy and manage applications.

Designing Cost-Optimized Architectures

Cost optimization is one of the four main domains of the exam and a pillar of the AWS Well-Architected Framework. It is a continuous process of refining and improving a system to reduce costs over time. The exam will test your ability to select the most cost-effective services and configurations for a given scenario without compromising performance, security, or reliability. This often involves understanding the trade-offs between different pricing models and service tiers. The first step in cost optimization is choosing the right pricing model for your compute resources.

As discussed previously, for EC2, you have On-Demand, Reserved Instances, Savings Plans, and Spot Instances. On-Demand is the most expensive but most flexible. Reserved Instances and Savings Plans offer significant discounts in exchange for a one- or three-year commitment and are ideal for predictable, steady-state workloads. Spot Instances provide the deepest discounts but can be interrupted. A common strategy for cost optimization is to use a mix of these models: use Reserved Instances or Savings Plans for your baseline capacity and supplement with On-Demand or Spot Instances to handle spikes in demand.
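The "commit for the baseline, pay on demand for the spikes" strategy is easiest to evaluate with a quick calculation. All prices below are hypothetical placeholders, not real AWS rates, and 730 is the usual approximation of hours in a month. Committed capacity is charged whether or not it is used, which is why it suits only the steady baseline.

```python
def monthly_compute_cost(baseline_instances: int,
                         burst_instance_hours: float,
                         committed_hourly_rate: float,
                         on_demand_hourly_rate: float,
                         hours_in_month: int = 730) -> float:
    """Estimated monthly cost of a committed baseline plus On-Demand bursts.
    Rates are hypothetical inputs, not published AWS prices."""
    # Committed capacity (Reserved Instances or a Savings Plan) is billed
    # for every hour of the month, used or not.
    committed = baseline_instances * committed_hourly_rate * hours_in_month
    # Spikes are covered by On-Demand capacity, billed only when running.
    burst = burst_instance_hours * on_demand_hourly_rate
    return committed + burst
```

With 10 baseline instances, 500 extra instance-hours of spikes, a hypothetical committed rate of $0.06/hour and an On-Demand rate of $0.10/hour, the blended approach costs about $488 per month, compared with about $780 if everything ran On-Demand.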

Beyond compute, you should also apply cost-optimization principles to storage. Using S3 Lifecycle policies to automatically transition data to lower-cost storage classes like S3 Standard-IA or S3 Glacier is a prime example. For databases and other services, choosing the right size and type of resource is crucial. AWS provides tools to help you with this. AWS Trusted Advisor is an online tool that provides real-time guidance to help you provision your resources following AWS best practices. It has a cost optimization check that can identify underutilized resources like idle EC2 instances or unattached EBS volumes.

Another key service is AWS Budgets, which allows you to set custom budgets that alert you when your costs or usage exceed (or are forecasted to exceed) your budgeted amount. This helps you monitor your spending and take corrective action before costs spiral out of control. The exam may present a scenario where a company is concerned about its rising cloud costs and ask you to identify the best course of action. Your answer should involve using these tools to identify waste and implementing strategies like right-sizing instances and leveraging appropriate pricing models.
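The difference between an "actual" and a "forecasted" budget alert can be shown with a small function. This sketch assumes a naive linear forecast (month-to-date spend extrapolated to the full month) and an 80% alert threshold; AWS Budgets computes its own forecasts, so treat this only as an illustration of the alerting logic.

```python
def budget_alerts(month_to_date_cost: float, day_of_month: int,
                  days_in_month: int, budget: float,
                  threshold_pct: float = 80.0) -> list[str]:
    """Return which alert types would fire. Uses a naive linear forecast,
    which is a simplification of how AWS Budgets forecasts spend."""
    forecast = month_to_date_cost / day_of_month * days_in_month
    threshold = budget * threshold_pct / 100
    alerts = []
    if month_to_date_cost > threshold:
        alerts.append("ACTUAL")
    if forecast > threshold:
        alerts.append("FORECASTED")
    return alerts
```

A forecasted alert can fire early in the month, while spend is still well under budget, which is what gives you time to take corrective action.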

Serverless Architectures with AWS Lambda

Serverless computing lets you build and run applications and services without provisioning, scaling, or managing servers. AWS Lambda is AWS's serverless compute service: you write your code as a Lambda function in one of several supported languages and upload it to the service, and Lambda handles everything required to run and scale your code with high availability. You pay for the number of requests and the compute time you consume, billed in one-millisecond increments, and there is no charge when your code is not running.
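Lambda's duration charge is measured in GB-seconds: the configured memory (in GB, where 1,024 MB counts as 1 GB) multiplied by the billed execution time. The sketch below computes that quantity. The price per GB-second is left as a hypothetical input, and the per-request charge and free tier are omitted for simplicity.

```python
def billed_gb_seconds(invocations: int, avg_duration_ms: float,
                      memory_mb: int) -> float:
    """Total GB-seconds for a batch of invocations."""
    seconds = avg_duration_ms / 1000   # duration is billed per millisecond
    gigabytes = memory_mb / 1024       # configured memory in GB
    return invocations * seconds * gigabytes


def duration_cost(gb_seconds: float, price_per_gb_second: float) -> float:
    """Duration charge for a hypothetical price per GB-second."""
    return gb_seconds * price_per_gb_second
```

For example, one million invocations averaging 120 ms with 512 MB of memory consume 60,000 GB-seconds; if the function sits idle, it consumes none.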

Lambda is an event-driven service. This means your code is triggered in response to events from other AWS services or from your own applications. For example, you can have a Lambda function that automatically runs every time a new object is uploaded to an S3 bucket, a new message arrives in an SQS queue, or an HTTP request is made to an API Gateway endpoint. This event-driven model makes Lambda an excellent choice for a wide variety of use cases, including data processing, real-time file processing, building web application backends, and creating event-driven workflows.

For the AWS Certified Solutions Architect - Associate exam, you need to understand the key characteristics of Lambda: its concurrency limits (by default, 1,000 concurrent executions per Region, which can be raised), its maximum execution timeout of 15 minutes, and its memory configuration (from 128 MB to 10,240 MB, with CPU allocated in proportion to memory). You should also know how to grant a Lambda function the necessary permissions to access other AWS resources by using an IAM execution role. A common pattern you will see is the use of Lambda for "glue" logic, connecting different AWS services together to form a cohesive application workflow. For instance, a Lambda function could be triggered by an S3 upload, process the image (for example, by generating a thumbnail), and then store metadata about the image in a DynamoDB table.
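The S3-to-DynamoDB pattern above can be sketched as a Python handler. The sketch reads the bucket name, object key, and size from each record of an S3 event notification. The image processing is skipped, and the DynamoDB write appears only as a comment because it needs credentials and a real table; the table name `ImageMetadata` is hypothetical.

```python
import urllib.parse


def lambda_handler(event, context):
    """Sketch of an S3-triggered function that extracts object metadata."""
    results = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        bucket = s3["bucket"]["name"]
        # Object keys arrive URL-encoded in the event (spaces become '+').
        key = urllib.parse.unquote_plus(s3["object"]["key"])
        size = s3["object"].get("size", 0)
        item = {"bucket": bucket, "key": key, "size": size}
        # In a deployed function whose execution role allows dynamodb:PutItem:
        # boto3.resource("dynamodb").Table("ImageMetadata").put_item(Item=item)
        results.append(item)
    return {"processed": len(results), "items": results}
```

The permission to write to the table would come from the function's IAM execution role, not from credentials embedded in the code.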

Creating APIs with Amazon API Gateway

Amazon API Gateway is a fully managed service that makes it easy for developers to create, publish, maintain, monitor, and secure APIs at any scale. An API acts as the "front door" for applications to access data, business logic, or functionality from your backend services. API Gateway can be used to create REST APIs, HTTP APIs, and WebSocket APIs, the last of which enable real-time, two-way communication. A primary use case for API Gateway is to create an HTTP endpoint that can trigger a backend AWS Lambda function. This combination is the foundation of many serverless web applications.
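With a Lambda proxy integration, API Gateway passes the HTTP request to the function as an event and expects a response object containing `statusCode`, `headers`, and a string `body`. The handler below is a minimal sketch of that contract; the `name` query-string parameter is a hypothetical example.

```python
import json


def lambda_handler(event, context):
    """Minimal handler for an API Gateway Lambda proxy integration."""
    # queryStringParameters is null (None) when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        # The body must be a string, so the payload is JSON-encoded.
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```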

API Gateway handles all the tasks involved in accepting and processing up to hundreds of thousands of concurrent API calls, including traffic management, authorization and access control, monitoring, and API version management. It provides features like throttling to protect your backend from being overwhelmed by too many requests, and caching to improve the performance and reduce the latency of requests. You can also secure your APIs using various methods, including IAM permissions, Amazon Cognito user pools for user authentication, or Lambda authorizers for custom authorization logic.
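API Gateway describes its throttling in terms of a steady-state rate and a burst limit, which is the token-bucket model. The class below is a minimal, single-threaded sketch of a token bucket with illustrative numbers, not API Gateway's implementation. Tokens refill at `rate` per second up to `burst`, and each request spends one token.

```python
class TokenBucket:
    """Token-bucket throttle: `rate` tokens refill per second, capped at
    `burst`; each allowed request consumes one token."""

    def __init__(self, rate: float, burst: int, now: float = 0.0):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)  # start full, so an initial burst is allowed
        self.last = now

    def allow(self, now: float) -> bool:
        # Refill according to elapsed time, never exceeding the burst size.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a throttled API Gateway request gets 429 Too Many Requests
```

The burst size absorbs short spikes, while the rate caps sustained traffic, so a backend sized for the rate is protected from brief floods of requests.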

The exam will often present scenarios where you need to expose a backend service to external clients. Choosing API Gateway is often the correct answer when you need features like request transformation, throttling, or a managed security layer. The combination of API Gateway, Lambda, and DynamoDB forms a common and powerful serverless pattern for building scalable, cost-effective web backends that require minimal operational management. Understanding how these three services work together is critical for the exam.

Containerization with Amazon ECS and AWS Fargate

Containers have become a popular way to package and deploy applications because they provide a consistent and portable runtime environment. Amazon Elastic Container Service (ECS) is a highly scalable, high-performance container orchestration service that supports Docker containers. It allows you to easily run and scale containerized applications on AWS. ECS removes the need for you to install and operate your own container orchestration software or to schedule containers onto virtual machines yourself; when combined with AWS Fargate, it also removes the need to manage and scale a cluster of virtual machines.

With ECS, you have two launch types or modes to run your containers: EC2 and Fargate. With the EC2 launch type, you are responsible for provisioning and managing the underlying cluster of EC2 instances that your containers will run on. This gives you more control over the infrastructure, for example, if you need to use a specific EC2 instance type. With the AWS Fargate launch type, you do not have to manage the underlying servers at all. Fargate is a serverless compute engine for containers. You just package your application in containers, specify the CPU and memory requirements, and Fargate launches and scales the containers for you.
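With Fargate, "specify the CPU and memory requirements" happens in the task definition. The sketch below builds a dictionary in the shape boto3's `ecs.register_task_definition` accepts, so it could be passed as `register_task_definition(**task_def)`. It checks CPU and memory against only a subset of the combinations Fargate supports. The family and image names are hypothetical, and a real deployment usually also needs an execution role.

```python
# Subset of Fargate CPU/memory combinations (CPU units -> allowed MiB).
FARGATE_MEMORY_OPTIONS = {
    256: {512, 1024, 2048},
    512: {1024, 2048, 3072, 4096},
    1024: {2048, 3072, 4096, 5120, 6144, 7168, 8192},
}


def fargate_task_definition(family: str, image: str, cpu: int, memory: int,
                            container_port: int = 80) -> dict:
    """Build register_task_definition arguments for a single-container task."""
    allowed = FARGATE_MEMORY_OPTIONS.get(cpu)
    if allowed is None or memory not in allowed:
        raise ValueError(f"unsupported Fargate size: cpu={cpu}, memory={memory}")
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # Fargate tasks must use awsvpc networking
        "cpu": str(cpu),          # task-level sizes are passed as strings
        "memory": str(memory),
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                "portMappings": [{"containerPort": container_port, "protocol": "tcp"}],
            }
        ],
    }
```

Nothing in this definition names an instance type or a cluster of hosts, which is exactly the operational work Fargate takes off your hands.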

For the exam, you need to understand the difference between these two launch types. Fargate is simpler to use and removes the operational overhead of managing the EC2 cluster, making it a great choice for most applications. The EC2 launch type is suitable when you need more granular control over your infrastructure. A question might describe an application that needs to be containerized and ask you to choose the most appropriate compute option. If the scenario emphasizes simplicity and reduced operational burden, Fargate is likely the correct answer.

Comparing Compute Options

At this point, you have been introduced to several compute options on AWS: EC2 instances, Lambda functions, and containers running on ECS or Fargate. A key skill for a solutions architect is knowing which option to choose for a given workload. EC2 provides the most control and flexibility and is suitable for almost any workload, especially legacy applications that are not designed for the cloud. However, it also carries the most operational overhead in terms of patching, scaling, and managing the instances.

Lambda is best for short-running, event-driven tasks. If your application logic can be broken down into small, independent functions that respond to triggers, Lambda is an extremely cost-effective and scalable option. It is not suitable for long-running processes because a single invocation can run for at most 15 minutes. Containers with ECS and Fargate provide a middle ground. They are great for running microservices, batch jobs, and long-running applications. They offer more portability and consistency than EC2 while providing more flexibility for long-running tasks than Lambda. The exam will test your judgment in selecting the right tool for the job.
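The comparison above can be summarized as a rough decision function. This is a simplified study heuristic whose inputs (runtime, event-driven, containerized, needs host control) are our own framing of the text, not an official decision tree. The one hard number is Lambda's 15-minute maximum execution time.

```python
LAMBDA_MAX_TIMEOUT_SECONDS = 15 * 60  # Lambda's maximum execution time


def suggest_compute(max_runtime_seconds: float, event_driven: bool,
                    containerized: bool, needs_host_control: bool) -> str:
    """Rough exam-style heuristic for choosing a compute option."""
    if needs_host_control:
        # Control over instances: EC2 directly, or ECS on the EC2 launch type.
        return "ECS (EC2 launch type)" if containerized else "EC2"
    if event_driven and max_runtime_seconds <= LAMBDA_MAX_TIMEOUT_SECONDS:
        return "Lambda"
    if containerized:
        return "ECS on Fargate"
    return "EC2"
```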

Conclusion:

This series has provided a comprehensive overview of the key topics you need to master for the AWS Certified Solutions Architect - Associate exam. We have covered security, compute, resilience, storage, databases, cost optimization, modern architectures, operations, and recovery. Passing this exam is a significant achievement that will validate your skills and enhance your career. By combining diligent study of these concepts with hands-on practice, you will build the confidence and knowledge needed to succeed. Stay focused, trust in your preparation, and approach the exam with a clear plan. We wish you the very best on your certification journey.

