Pass Your Amazon AWS Certified Database - Specialty Exam Easily!

Amazon AWS Certified Database - Specialty Exam Questions & Answers, Accurate & Verified By IT Experts

Instant Download, Free Fast Updates, 99.6% Pass Rate

Amazon AWS Certified Database - Specialty Practice Test Questions in VCE Format

| File | Votes | Size | Date |
|---|---|---|---|
| Amazon.selftestengine.AWS Certified Database - Specialty.v2024-04-12.by.hannah.137q.vce | 1 | 5.25 MB | Apr 12, 2024 |
| Amazon.actualtests.AWS Certified Database - Specialty.v2022-02-10.by.dylan.128q.vce | 1 | 4.52 MB | Feb 10, 2022 |
| Amazon.selftestengine.AWS Certified Database - Specialty.v2022-01-18.by.zhangjie.119q.vce | 1 | 5.26 MB | Jan 18, 2022 |
| Amazon.test-king.AWS Certified Database - Specialty.v2021-12-14.by.wanggang.107q.vce | 1 | 3.14 MB | Dec 14, 2021 |
| Amazon.certkiller.AWS Certified Database - Specialty.v2021-10-18.by.harper.94q.vce | 1 | 3.53 MB | Oct 18, 2021 |
| Amazon.practiceexam.AWS Certified Database - Specialty.v2021-04-29.by.alex.80q.vce | 1 | 263.95 KB | Apr 28, 2021 |
| Amazon.examanswers.AWS Certified Database - Specialty.v2020-03-17.by.harley.45q.vce | 3 | 114.42 KB | Mar 17, 2020 |

Amazon AWS Certified Database - Specialty Practice Test Questions, Exam Dumps

Amazon AWS Certified Database - Specialty exam dumps in VCE format, practice test questions, a study guide, and a video training course to help you study and pass quickly and easily. You need the Avanset VCE Exam Simulator to study the Amazon AWS Certified Database - Specialty certification exam dumps and practice test questions in VCE format.

Your Foundational Guide to the Amazon AWS Certified Database - Specialty Certification

In the evolving landscape of cloud computing, specialization has become a key differentiator for technology professionals. The AWS Certified Database - Specialty certification stands as a testament to an individual's deep expertise in the broad portfolio of Amazon Web Services database solutions. This credential is not merely a badge; it is a validation of one's ability to design, recommend, maintain, and troubleshoot complex database architectures tailored to specific business requirements. Achieving this certification signals to employers and peers that you possess a comprehensive understanding of both the theoretical and practical aspects of cloud-based database management, positioning you as a valuable asset in any data-driven organization.

The demand for skilled database professionals who can navigate the complexities of the cloud is at an all-time high. Companies are migrating their critical data workloads to the cloud to leverage its scalability, flexibility, and cost-effectiveness. The AWS Certified Database - Specialty exam directly addresses this industry need by focusing on the skills required to manage this transition and optimize database performance in a cloud environment. It covers a wide array of topics, from selecting the right database for a specific workload to implementing robust security, disaster recovery, and monitoring strategies. This makes it a highly relevant and sought-after certification for today's IT professionals.

Passing this exam demonstrates more than just knowledge of individual AWS services. It proves you can think like a database architect, analyzing application needs, anticipating future growth, and designing solutions that are secure, resilient, performant, and cost-efficient. The preparation journey itself forces you to delve into the nuances of each database service, understanding its unique features, limitations, and ideal use cases. This deep-seated knowledge is what enables certified professionals to drive business transformation, helping organizations unlock the full potential of their data by using the right tools for the job.

The credential serves as a powerful career accelerator. For database administrators, it provides a clear path to modernizing their skills for the cloud era. For developers and solutions architects, it deepens their understanding of the data layer, enabling them to build more robust and scalable applications. Ultimately, the AWS Certified Database - Specialty certification is about demonstrating mastery over one of the most critical components of modern IT infrastructure. It validates your ability to handle data with the expertise and confidence required to support mission-critical systems and drive innovation within your organization.

Understanding the Value of Specialty Certifications in Cloud Computing

While foundational and associate-level AWS certifications provide a broad overview of the cloud platform, specialty certifications cater to a different purpose. They are designed for professionals who wish to validate their expertise in a specific technical domain. The AWS Certified Database - Specialty exam is a prime example, focusing exclusively on the intricacies of database technologies within the AWS ecosystem. This level of focus allows for a much deeper and more rigorous assessment of skills, setting a higher bar for qualification and making the credential more meaningful to employers seeking experts in this field.

The value of a specialty certification lies in its specificity. When an organization needs to migrate a large-scale Oracle database or design a globally distributed, low-latency application, they require a professional who has more than just a general understanding of cloud services. They need someone who understands the subtle differences between Amazon Aurora and Amazon RDS, the performance characteristics of DynamoDB, and the migration pathways using the Database Migration Service. The AWS Certified Database - Specialty certification acts as a reliable indicator that an individual possesses this specialized, in-demand knowledge.

Furthermore, pursuing a specialty certification encourages a more profound level of learning. The preparation process requires candidates to move beyond basic concepts and engage with advanced topics, best practices, and real-world architectural patterns. This journey enhances critical thinking and problem-solving skills, as candidates must learn to evaluate trade-offs between different database solutions based on factors like performance, cost, security, and operational overhead. This depth of knowledge is invaluable in professional roles where making the right architectural decision can have a significant impact on a project's success.

From a career perspective, holding a specialty certification like the AWS Certified Database - Specialty can unlock new opportunities. It differentiates you in a competitive job market and can lead to more senior roles with greater responsibility. It demonstrates a commitment to continuous learning and a passion for your technical domain. For consultants and professional services teams, it builds credibility and trust with clients, assuring them that their critical data workloads are in the hands of a verified expert. In essence, it is a strategic investment in your professional development that yields substantial returns.

Who is the Ideal Candidate for This Certification?

The AWS Certified Database - Specialty exam is not an entry-level certification. It is specifically tailored for individuals who have substantial hands-on experience working with database technologies. The ideal candidate typically has a background as a database administrator, database engineer, solutions architect, or a DevOps engineer with a strong focus on data management. This professional should be comfortable with both relational and non-relational database concepts and have practical experience in designing, implementing, and maintaining database systems. The exam is designed to challenge seasoned professionals and validate their advanced skill set.

AWS recommends a specific level of experience for those attempting this exam. It is suggested that candidates have at least five years of experience with common database technologies. This experience should encompass tasks such as performance tuning, optimization, setting up high-availability configurations, and implementing backup and recovery strategies. In addition to general database experience, AWS recommends a minimum of two years of hands-on experience working with AWS. This includes practical familiarity with deploying and managing both on-premises and AWS cloud-based relational and non-relational databases.

The target audience for the AWS Certified Database - Specialty certification also includes individuals who are responsible for making architectural decisions about data stores. This means they should be able to analyze application requirements and recommend the most appropriate AWS database service. For example, they should know when to choose Amazon Aurora for a high-throughput relational workload, Amazon DynamoDB for a key-value store requiring single-digit millisecond latency, or Amazon Redshift for a large-scale data warehousing solution. This ability to map business needs to technical solutions is a core competency tested in the exam.

Ultimately, the ideal candidate is someone who is passionate about data and understands its central role in modern applications. They are not just focused on a single database engine but have a broad understanding of the different types of databases and their specific use cases. They are proactive about security, obsessed with performance, and committed to building resilient and scalable systems. If you are a professional who lives and breathes databases and wants to prove your expertise on the world's leading cloud platform, then the AWS Certified Database - Specialty certification is the right goal for you.

Decoding the Exam's Core Objectives and Validated Skills

To effectively prepare for the AWS Certified Database - Specialty exam, it is crucial to understand what it aims to validate. The exam is not a simple test of factual recall about different services. Instead, it assesses your ability to apply your knowledge to solve complex, real-world problems. The core objective is to certify that you can understand the key features of various AWS database services and differentiate between them to select the right tool for a specific task. This involves a deep understanding of their architectural nuances, performance characteristics, and pricing models.

One of the primary skills validated is the ability to analyze needs and requirements to design and recommend appropriate database solutions. This goes beyond simply picking a service from a list. It means evaluating workload patterns, data access requirements, scalability needs, and disaster recovery objectives. A certified professional is expected to design a complete solution that includes considerations for high availability using features like Multi-AZ deployments, read replicas for scaling read traffic, and global databases for low-latency access across different regions. This design-oriented thinking is a significant focus of the exam.

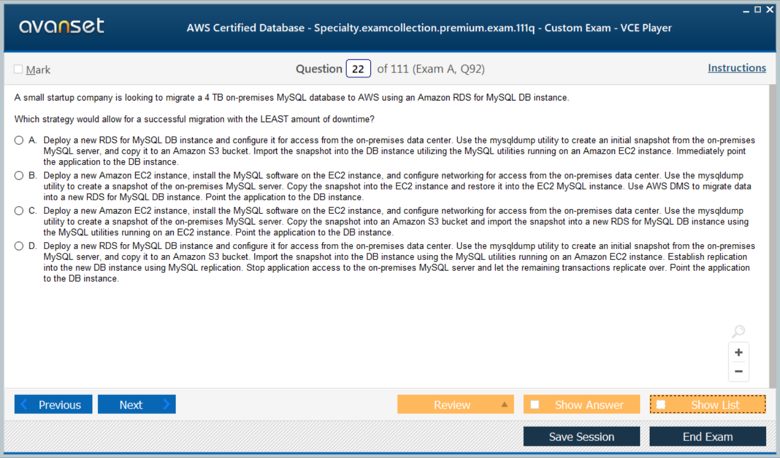

The certification also validates a candidate's proficiency in deploying, migrating, and managing database solutions on AWS. This includes knowing how to automate deployments using Infrastructure as Code, planning and executing complex data migrations from on-premises environments to the cloud, and performing ongoing operational tasks. Skills related to data migration are particularly important, covering the use of tools like the AWS Database Migration Service (DMS) and the Schema Conversion Tool (SCT) to handle both homogeneous and heterogeneous migrations with minimal downtime.
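As a rough illustration of automating a deployment with Infrastructure as Code, the sketch below builds a minimal AWS CloudFormation template for a single RDS instance as a Python dictionary and prints it as JSON. The logical ID `Database`, the `dbadmin` username, the instance class, and the storage size are placeholders chosen for this example, and a real template would also need networking and parameter settings that are omitted here.

```python
import json


def rds_template(engine: str = "postgres", instance_class: str = "db.t3.medium") -> dict:
    """Return a minimal CloudFormation template for one Multi-AZ RDS instance."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            "Database": {  # hypothetical logical ID
                "Type": "AWS::RDS::DBInstance",
                "DeletionPolicy": "Snapshot",  # take a final snapshot if the stack is deleted
                "Properties": {
                    "Engine": engine,
                    "DBInstanceClass": instance_class,  # placeholder size
                    "AllocatedStorage": "100",          # GiB, placeholder
                    "MultiAZ": True,                    # standby in a second Availability Zone
                    "StorageEncrypted": True,           # encryption at rest
                    "BackupRetentionPeriod": 7,         # days of automated backups
                    "MasterUsername": "dbadmin",        # placeholder
                    "ManageMasterUserPassword": True,   # password generated and stored in Secrets Manager
                },
            }
        },
    }


template = rds_template()
print(json.dumps(template, indent=2))
```

Keeping the definition in version control means the same settings (Multi-AZ, encryption, backup retention) are applied on every deployment instead of being clicked through by hand.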

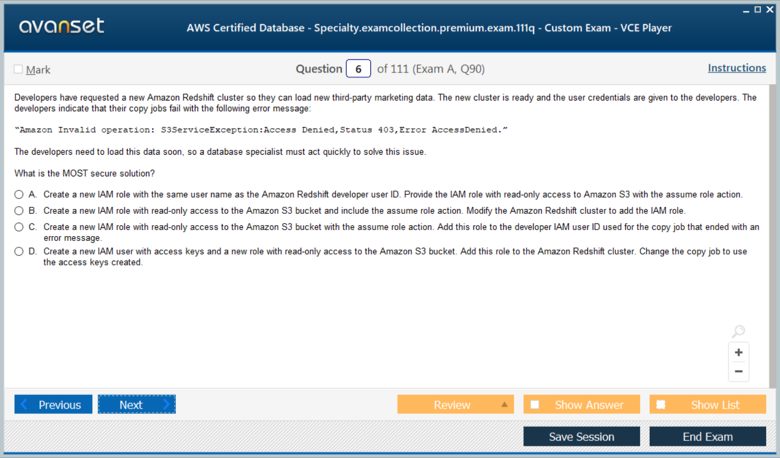

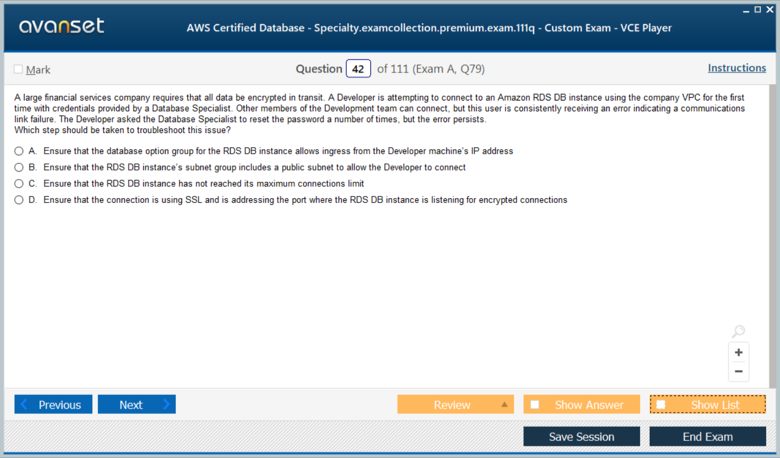

Finally, the exam places a strong emphasis on the operational aspects of database management, including monitoring, troubleshooting, and security. Candidates are expected to know how to use tools like Amazon CloudWatch and Performance Insights to monitor database health and diagnose performance bottlenecks. They must also demonstrate a thorough understanding of security best practices, such as implementing encryption for data at rest and in transit, managing access control using IAM policies and database authentication mechanisms, and setting up auditing to track database activity. These validated skills reflect the comprehensive responsibilities of a modern database specialist.

Navigating the Prerequisites: Recommended Experience and Knowledge

While there are no strict, mandatory prerequisites to sit for the AWS Certified Database - Specialty exam, AWS provides strong recommendations for a reason. Attempting the exam without the suggested background knowledge and experience can be an exceptionally challenging endeavor. The recommended five years of experience in common database technologies ensures that a candidate has a solid foundation in core database principles. This includes a deep understanding of SQL, data modeling, indexing strategies, query optimization, and transaction management, which are concepts that apply across different database systems.

The recommendation for at least two years of hands-on experience with AWS is equally critical. This practical experience is where you learn the nuances of how AWS services work in the real world. It involves more than just reading documentation; it means having actually provisioned an RDS instance, configured a DynamoDB table, troubleshot a slow query in Aurora, or restored a database from a snapshot. This hands-on familiarity is essential for answering the scenario-based questions that are prevalent in the exam, as they often require you to make judgments based on practical knowledge of service behaviors and limitations.

Experience with both relational and non-relational databases is another key prerequisite. The AWS Certified Database - Specialty exam covers a wide spectrum of database types, and you need to be proficient in both. This means understanding the structured nature of relational databases like PostgreSQL and MySQL, as well as the flexible schemas of NoSQL databases like DynamoDB (key-value and document) and Neptune (graph). A candidate should be able to articulate the trade-offs between these different models and know when one is a better fit for a given application than another.

The required experience also extends to understanding how databases integrate with the broader AWS ecosystem. This includes knowledge of networking concepts like VPCs, subnets, and security groups, as well as identity and access management with IAM. You should also be familiar with how applications running on services like EC2 or Lambda connect to your databases, and how to monitor everything using CloudWatch. The exam assumes a holistic understanding of how database solutions fit within a larger cloud architecture, making this broad, practical knowledge an indispensable part of your preparation.

A High-Level Overview of the Exam Domains

The structure of the AWS Certified Database - Specialty exam is defined by five distinct domains, each with a specific weighting that indicates its importance. Understanding these domains is the first step in creating an effective study plan. The first and most heavily weighted domain is "Workload-Specific Database Design," which constitutes 26% of the exam. This area tests your ability to select the optimal database service by analyzing workload requirements, as well as your skills in designing for high availability, scalability, and cost-effectiveness. It is the architectural heart of the certification.

The second domain, "Deployment and Migration," accounts for 20% of the exam content. This section focuses on the practical aspects of getting your databases running on AWS. It covers automating the deployment process, strategizing and executing data migrations from various sources, and validating the success of those migrations. A significant portion of this domain involves understanding the capabilities and limitations of tools like the AWS Database Migration Service. This is where your practical, hands-on skills are put to the test.

The remaining three domains each make up 18% of the exam, creating a balanced assessment of operational and security skills. The "Management and Operations" domain covers the day-to-day tasks of a database specialist, such as implementing backup and restore strategies, performing maintenance, and managing the overall operational health of the database environment. "Monitoring and Troubleshooting" focuses on your ability to proactively monitor for issues, set up effective alerting, diagnose performance problems using AWS tools, and optimize queries and configurations for better performance.

The final domain, "Database Security," is a critical component that also accounts for 18% of the exam. This domain assesses your knowledge of securing the data layer from all angles. It includes topics like encrypting data both at rest and in transit, implementing robust access control and authentication mechanisms, configuring auditing solutions to track activity, and identifying potential security vulnerabilities within a database architecture. A thorough understanding of security best practices is non-negotiable for anyone aspiring to earn the AWS Certified Database - Specialty certification.
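One simple way to turn the domain weightings above into a study plan is to split your available hours in proportion to each weight. The sketch below does exactly that; the 80-hour budget is an arbitrary example, and proportional allocation is only a starting point that you would adjust for your own weak areas.

```python
# Domain weightings as stated in the overview above (percent of the exam).
DOMAIN_WEIGHTS = {
    "Workload-Specific Database Design": 26,
    "Deployment and Migration": 20,
    "Management and Operations": 18,
    "Monitoring and Troubleshooting": 18,
    "Database Security": 18,
}


def allocate_hours(total_hours: float) -> dict:
    """Split a study budget across domains in proportion to their weight."""
    assert sum(DOMAIN_WEIGHTS.values()) == 100, "weights should cover the whole exam"
    return {
        domain: round(total_hours * weight / 100, 1)
        for domain, weight in DOMAIN_WEIGHTS.items()
    }


plan = allocate_hours(80)  # 80 hours is an example budget
for domain, hours in plan.items():
    print(f"{domain}: {hours} h")
```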

Key AWS Database Services to Know

Success in the AWS Certified Database - Specialty exam requires in-depth knowledge of a core set of AWS database services. At the forefront are the relational database offerings. Amazon RDS is a foundational service that provides managed relational databases with support for several popular engines like MySQL, PostgreSQL, MariaDB, Oracle, and SQL Server. You must understand its features for high availability (Multi-AZ), read scaling (Read Replicas), and automated backups. Amazon Aurora, a cloud-native relational database, is also critical. You need to know its unique architecture, performance advantages, and features like Global Database and Serverless v2.

The non-relational, or NoSQL, services are equally important. Amazon DynamoDB is a key-value and document database service that is central to many modern applications requiring high performance at scale. A deep understanding of its data modeling concepts, capacity modes (provisioned vs. on-demand), indexing with global and local secondary indexes, and features like DynamoDB Streams and transactional capabilities is essential. You should be prepared for questions that test your ability to choose the right partition key and design for efficient access patterns to avoid performance issues.

The exam also covers a range of specialized, purpose-built databases. Amazon ElastiCache, which offers managed Redis and Memcached, is the go-to service for in-memory caching and session storage. Amazon DocumentDB is a managed document database service with MongoDB compatibility. Amazon Neptune is a managed graph database service used for building applications that work with highly connected datasets. For data warehousing, you must be proficient with Amazon Redshift, understanding its columnar storage architecture, data distribution styles, and workload management features.

Finally, you need to be familiar with the services that support database operations. The AWS Database Migration Service (DMS) and the AWS Schema Conversion Tool (SCT) are fundamental for migration tasks. For monitoring and performance tuning, Amazon CloudWatch and RDS Performance Insights are indispensable. Security services like AWS Key Management Service (KMS) for managing encryption keys and AWS Identity and Access Management (IAM) for controlling access are also integral to the exam content. A comprehensive study plan must include dedicated time for each of these key services.

Mastering Workload-Specific Database Design

The first domain of the AWS Certified Database - Specialty exam, "Workload-Specific Database Design," is the most significant, accounting for 26% of the total score. Mastering this domain is not just about memorizing facts; it is about developing the architectural mindset required to build effective and efficient database solutions on AWS. This domain challenges you to act as a database solutions architect, analyzing the unique characteristics of an application's workload and selecting the most appropriate database service from the extensive AWS portfolio. This requires a profound understanding of the trade-offs between different database types, consistency models, and feature sets.

Success in this domain hinges on your ability to deconstruct a problem statement into a set of technical requirements. You will be presented with scenarios that describe an application's data structure, access patterns, latency requirements, and expected transaction volume. Your task is to translate these business needs into a concrete database design. This involves choosing between relational and non-relational models, deciding on a specific AWS service like Aurora, DynamoDB, or ElastiCache, and justifying your choice based on the workload's characteristics. A deep familiarity with the ideal use cases for each AWS database is therefore paramount.

This domain also extends beyond just selecting a service. It requires you to design a comprehensive solution that addresses key non-functional requirements. You must consider how to build a system that is highly available, resilient to failures, and capable of scaling to meet future demand. This involves architecting for fault tolerance, planning for disaster recovery, and ensuring performance does not degrade as the workload grows. Furthermore, you will be expected to make these design decisions while being mindful of cost, demonstrating that you can build solutions that are not only technically sound but also economically viable.

Ultimately, this domain tests your holistic understanding of database architecture in the cloud. It forces you to think critically about how different components of a solution fit together. You need to consider data consistency needs, compliance requirements, and the operational burden of your proposed design. Preparing for this section requires a combination of theoretical knowledge, hands-on experience, and a structured approach to problem-solving. By mastering workload-specific design, you are not just preparing for the exam; you are building the core competency of a true database specialist.

Selecting the Right Database Service for Your Workload

A central task within the design domain is selecting the most suitable database service for a given workload. The AWS Certified Database - Specialty exam will present you with various scenarios, and you must choose the optimal service. This requires a nuanced understanding of different data models and their corresponding AWS implementations. For traditional applications requiring complex transactions and strong consistency, such as e-commerce platforms or financial systems, relational databases are often the best fit. In AWS, this means choosing between Amazon RDS with a specific engine or the cloud-native Amazon Aurora, weighing factors like performance, cost, and management overhead.

For workloads that demand extreme scalability and flexible schemas, non-relational (NoSQL) databases are the preferred choice. The exam will test your ability to identify these use cases. For example, if a scenario describes a need for single-digit millisecond latency for a high-volume key-value lookup, such as a user session store or a real-time bidding platform, Amazon DynamoDB is the obvious answer. You must understand its partitioning scheme, the importance of a good key design, and its different consistency models to answer related questions correctly.

The exam also delves into more specialized, purpose-built databases. If a scenario involves building a social network, a fraud detection system, or a recommendation engine that relies on analyzing relationships between entities, Amazon Neptune, the managed graph database service, would be the appropriate choice. Similarly, for caching frequently accessed data to reduce latency and offload a primary database, Amazon ElastiCache (with Redis or Memcached) is the go-to solution. For analytical workloads that involve complex queries over large datasets, such as business intelligence reporting, the columnar storage and massively parallel processing capabilities of Amazon Redshift are ideal.

Making the right selection involves a careful analysis of the workload's characteristics. You need to consider the type of data (structured, semi-structured, or unstructured), the query patterns (simple lookups vs. complex joins), the required latency, the expected throughput, and the consistency requirements (strong vs. eventual). The exam will often present distractors, so your ability to critically evaluate the scenario and pinpoint the key requirements that drive the database choice is crucial. A systematic approach to dissecting the problem is key to consistently selecting the correct service.
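The selection logic described in this section can be sketched as a small rule table. This is a deliberate simplification for study purposes: the `Workload` fields and the rule order are assumptions made for this example, and real scenarios also weigh latency, throughput, consistency, and cost before settling on a service.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    data_model: str            # "relational", "key-value", "document", or "graph"
    analytical: bool = False   # large scans and aggregations, e.g. BI reporting
    cache_layer: bool = False  # sits in front of another database to cut latency


def suggest_service(w: Workload) -> str:
    """Map coarse workload traits to the AWS service the text associates with them."""
    if w.cache_layer:
        return "Amazon ElastiCache"
    if w.analytical:
        return "Amazon Redshift"
    by_model = {
        "relational": "Amazon Aurora or Amazon RDS",
        "key-value": "Amazon DynamoDB",
        "document": "Amazon DocumentDB or Amazon DynamoDB",
        "graph": "Amazon Neptune",
    }
    return by_model.get(w.data_model, "needs further analysis")


print(suggest_service(Workload("key-value")))                    # session store
print(suggest_service(Workload("graph")))                        # recommendation engine
print(suggest_service(Workload("relational", analytical=True)))  # BI reporting
```

Working through exam scenarios with a checklist like this helps you spot which requirement actually drives the choice and which details are distractors.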

Designing for High Availability and Disaster Recovery

A core responsibility of a database specialist is to ensure that data is durable and accessible, even in the face of failures. The AWS Certified Database - Specialty exam places significant emphasis on your ability to design for high availability (HA) and disaster recovery (DR). High availability refers to designing systems that can withstand component failures within a single region, such as the loss of a server or an entire availability zone, with minimal to no downtime. For services like Amazon RDS and Aurora, the primary mechanism for achieving HA is the Multi-AZ deployment option.

You must understand precisely how Multi-AZ works for different database engines. For RDS, it involves synchronous replication of data to a standby instance in a different Availability Zone. In the event of a primary instance failure, RDS automatically fails over to the standby. For Amazon Aurora, the architecture is different, with a shared storage volume that is replicated across three Availability Zones. This allows for even faster failover times, typically under a minute. The exam will test your knowledge of these mechanisms and of the recovery point objective (RPO) and recovery time objective (RTO) each one can deliver.

Disaster recovery, on the other hand, involves planning for larger-scale events that could impact an entire AWS region. Your DR strategy must address how you would recover your database operations in a different geographical region. This often involves cross-region replication. For Amazon RDS, you can configure cross-region read replicas and promote one to a standalone instance in a DR scenario. Amazon Aurora Global Database provides a more advanced solution with low-latency global reads and fast cross-region failover capabilities, typically under a minute. You need to know the trade-offs between these options in terms of cost, complexity, RPO, and RTO.

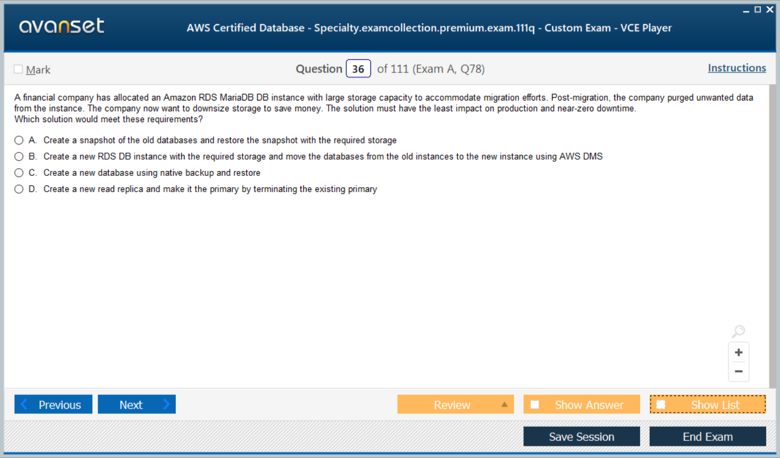

Beyond replication, backup and restore strategies are a fundamental part of both HA and DR. The exam expects you to be an expert on AWS backup mechanisms, including automated snapshots and the Point-in-Time Recovery (PITR) feature. You should understand how to perform a restore, the implications of restoring to a new instance, and how to copy snapshots to another region for DR purposes. Designing a robust HA and DR plan involves combining these different features into a cohesive strategy that meets the specific RPO and RTO requirements of the business.
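Matching a DR option to business requirements comes down to a filter: discard every option whose RPO or RTO exceeds the target, then take the cheapest of what remains. The sketch below shows that reasoning. The RPO, RTO, and cost figures are illustrative placeholders invented for this example, not AWS guarantees or measurements; substitute values from your own testing.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Strategy:
    name: str
    rpo_seconds: float   # worst-case data loss window
    rto_seconds: float   # worst-case time to restore service
    relative_cost: int   # ordinal rank only, 1 = cheapest


def pick_strategy(options: List[Strategy], target_rpo: float, target_rto: float) -> Optional[Strategy]:
    """Return the cheapest strategy that meets both objectives, or None."""
    viable = [
        s for s in options
        if s.rpo_seconds <= target_rpo and s.rto_seconds <= target_rto
    ]
    return min(viable, key=lambda s: s.relative_cost) if viable else None


# Placeholder numbers for illustration only.
OPTIONS = [
    Strategy("cross-region snapshot copy", rpo_seconds=24 * 3600, rto_seconds=4 * 3600, relative_cost=1),
    Strategy("cross-region read replica", rpo_seconds=300, rto_seconds=1800, relative_cost=2),
    Strategy("Aurora Global Database", rpo_seconds=5, rto_seconds=60, relative_cost=3),
]

choice = pick_strategy(OPTIONS, target_rpo=600, target_rto=3600)
print(choice.name if choice else "no option meets the objectives")
```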

Architecting for Scalability and Performance

As applications grow, their databases must be able to handle increased load without a degradation in performance. The AWS Certified Database - Specialty exam rigorously tests your ability to architect for scalability and optimize for performance. Scalability can be achieved in two primary ways: vertical scaling (scaling up) and horizontal scaling (scaling out). Vertical scaling involves increasing the resources of a single database instance, such as its CPU, RAM, or storage. On AWS, this is as simple as changing the instance type for an RDS or Aurora database. You should know when this is an appropriate strategy and also be aware of its limitations.

Horizontal scaling, or scaling out, involves adding more instances to share the load. For read-heavy workloads, this is commonly achieved using read replicas. You must understand how read replicas work for both RDS and Aurora, including the asynchronous replication mechanism and potential replication lag. For Amazon Aurora, you can have up to 15 read replicas, providing massive read scalability. The exam will test your ability to design architectures that effectively leverage read replicas to improve application performance.
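To make the read-scaling idea concrete, here is a minimal sketch of client-side read/write splitting: writes go to the primary, and reads rotate across replica endpoints. The endpoint names are hypothetical, and classifying a statement by its leading `SELECT` keyword is a simplification (it would misroute `SELECT ... FOR UPDATE`, for example).

```python
from itertools import cycle
from typing import List


class EndpointRouter:
    """Send writes to the primary and rotate reads across replicas."""

    def __init__(self, writer: str, readers: List[str]):
        self.writer = writer
        # Round-robin iterator over replicas; None means "no replicas configured".
        self._readers = cycle(readers) if readers else None

    def endpoint_for(self, sql: str) -> str:
        is_read = sql.lstrip().lower().startswith("select")
        if is_read and self._readers is not None:
            return next(self._readers)
        return self.writer


router = EndpointRouter(
    writer="primary.example.internal",  # hypothetical endpoints
    readers=["replica-1.example.internal", "replica-2.example.internal"],
)
print(router.endpoint_for("SELECT * FROM orders WHERE id = 42"))
print(router.endpoint_for("UPDATE orders SET status = 'shipped' WHERE id = 42"))
```

Because replication is asynchronous, a read that must see a just-committed write should still be sent to the primary. With Aurora, the cluster's reader endpoint already balances connections across replicas, so rotation like this matters mostly for RDS read replicas.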

For write scalability, the options are more nuanced. Traditional relational databases typically scale writes vertically. However, services like Amazon Aurora are designed for higher write throughput. For extreme write scalability, NoSQL databases like Amazon DynamoDB are often the answer. You must have a deep understanding of DynamoDB's scaling model, including provisioned throughput and on-demand capacity modes. Knowing how to design a partition key that distributes writes evenly across partitions is a critical skill for achieving massive scale with DynamoDB.
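The effect of partition key choice is easy to see in a small simulation. The sketch below hashes keys onto a fixed number of partitions and compares a low-cardinality key (a single calendar date) with a high-cardinality key (a user ID). SHA-256 and the count of eight partitions are stand-ins chosen for this example; DynamoDB's internal hash function and partition count are not exposed, so treat this as a model of the behavior rather than the service's implementation.

```python
import hashlib
from collections import Counter


def partition_for(key: str, partitions: int) -> int:
    """Map a partition key to a partition number with a stand-in hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partitions


def write_distribution(keys, partitions: int = 8) -> Counter:
    """Count how many writes land on each partition."""
    return Counter(partition_for(k, partitions) for k in keys)


WRITES = 10_000

# Every write uses the same date as its partition key: one hot partition.
by_date = write_distribution(["2024-04-12"] * WRITES)

# Each write uses a distinct (hypothetical) user ID: load spreads out.
by_user = write_distribution(f"user-{i}" for i in range(WRITES))

print("busiest partition share, date key:", max(by_date.values()) / WRITES)
print("busiest partition share, user key:", max(by_user.values()) / WRITES)
```

With the date key, all writes land on one partition no matter how much capacity the table has, which is the hot-partition problem that a high-cardinality key avoids.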

Performance optimization is the other side of the coin. This involves tuning the database to make the most efficient use of its resources. For the exam, you need to be familiar with concepts like proper indexing strategies to speed up queries, query optimization techniques, and the use of caching with services like Amazon ElastiCache to reduce the load on the primary database. You should also know how to use tools like RDS Performance Insights to identify performance bottlenecks, such as slow-running queries or resource contention, and take corrective action.
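The caching approach mentioned above is commonly implemented as the cache-aside pattern: check the cache first, fall back to the database on a miss, and store the result with an expiry. In this sketch a plain dictionary stands in for ElastiCache and `fake_db_lookup` stands in for a real query; both are assumptions made so the example runs without any external service.

```python
import time


class CacheAside:
    """Cache-aside reads with a per-entry time to live."""

    def __init__(self, load_from_db, ttl_seconds: float = 60.0, clock=time.monotonic):
        self._load = load_from_db
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}  # key -> (expires_at, value); stand-in for ElastiCache

    def get(self, key):
        entry = self._store.get(key)
        if entry is not None and entry[0] > self._clock():
            return entry[1]                      # cache hit
        value = self._load(key)                  # cache miss: query the database
        self._store[key] = (self._clock() + self._ttl, value)
        return value

    def invalidate(self, key):
        """Drop a cached entry, e.g. after the underlying row is updated."""
        self._store.pop(key, None)


db_calls = []


def fake_db_lookup(key):
    # Hypothetical stand-in for a real database query.
    db_calls.append(key)
    return {"id": key, "name": f"product-{key}"}


cache = CacheAside(fake_db_lookup, ttl_seconds=30)
cache.get(7)
cache.get(7)          # served from the cache, no second database call
print("database calls:", len(db_calls))
```

The trade-off is staleness: until an entry expires or is invalidated, readers may see an older value than the database holds.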

Ensuring Compliance in Your Database Design

In today's regulatory environment, ensuring that database solutions meet compliance requirements is a non-negotiable aspect of their design. The AWS Certified Database - Specialty exam includes questions that assess your ability to incorporate compliance and security controls into your database architecture from the very beginning. This starts with understanding the shared responsibility model, where AWS is responsible for the security of the cloud, while you are responsible for security in the cloud. For databases, this means you are responsible for configuring security features correctly.

A key aspect of compliance is data encryption. You must be an expert in implementing encryption for data at rest and data in transit. For data at rest, this involves enabling encryption on your RDS instances, Aurora clusters, or DynamoDB tables. You should understand the role of AWS Key Management Service (KMS) in managing the encryption keys and the difference between AWS managed keys and customer managed keys. For data in transit, you need to know how to enforce SSL/TLS connections to your database to protect data as it travels over the network.

Many compliance standards, such as PCI DSS, HIPAA, and GDPR, have specific requirements for auditing and logging. Your database design must include a strategy for capturing and retaining audit trails. This could involve enabling logging features within the database engine itself, such as the MariaDB Audit Plugin for MySQL or the pgAudit extension for PostgreSQL on RDS, and configuring them to send logs to Amazon CloudWatch Logs for centralized storage and analysis. You should also be familiar with AWS CloudTrail, which records API calls made to your AWS account, providing an audit trail of management actions taken on your database resources.

Access control is another critical component of compliance. Your design must implement the principle of least privilege, ensuring that users and applications only have the permissions they need to perform their tasks. This involves a combination of AWS Identity and Access Management (IAM) for controlling access to the AWS management APIs, and the native authentication and authorization mechanisms within the database engine itself. For some services, like RDS and Aurora, you can even use IAM database authentication for a more integrated and secure approach to managing credentials.
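To make the least-privilege idea concrete, here is a minimal sketch of an IAM policy that allows a single database user to connect with IAM database authentication. The `rds-db:connect` action and the `rds-db` ARN layout are the documented ones. The region, account ID, resource ID, and user name are made-up values for illustration.

```python
import json


def iam_db_auth_policy(region, account_id, dbi_resource_id, db_user):
    """Policy document allowing one database user to connect via IAM auth."""
    resource = f"arn:aws:rds-db:{region}:{account_id}:dbuser:{dbi_resource_id}/{db_user}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "rds-db:connect", "Resource": resource}
        ],
    }


# Hypothetical identifiers, used only to show the resulting document.
policy = iam_db_auth_policy("us-east-1", "123456789012", "db-ABCDEFGHIJKL01234", "app_readonly")
print(json.dumps(policy, indent=2))
```

Scoping the resource to one database user, rather than using a wildcard, is what keeps the grant narrow.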

Cost Optimization Strategies for AWS Database Solutions

While technical excellence is paramount, a successful database architect must also design solutions that are cost-effective. The AWS Certified Database - Specialty exam will test your ability to compare the costs of different database solutions and apply optimization strategies. This requires a solid understanding of the pricing models for various AWS database services. For example, RDS and Aurora are typically priced based on instance hours, storage consumed, and data transfer. DynamoDB, on the other hand, has pricing based on read and write capacity units and storage.

One of the first steps in cost optimization is right-sizing your database instances. This involves selecting an instance type that matches the performance requirements of your workload without being oversized and wasteful. You can use monitoring tools like Amazon CloudWatch to analyze CPU and memory utilization over time to make informed right-sizing decisions. For workloads with variable or unpredictable traffic patterns, services like Amazon Aurora Serverless v2 or DynamoDB on-demand capacity mode can be highly cost-effective, as they automatically scale capacity up and down and you only pay for what you use.

Storage costs can also be a significant part of your bill. You should be familiar with the different storage options available for services like RDS, such as General Purpose SSD (gp2 and gp3) and Provisioned IOPS SSD (io1 and io2), and know when to use each. For DynamoDB, enabling Time to Live (TTL) is a great way to automatically delete old, unneeded items from your tables, which can significantly reduce storage costs. Similarly, implementing proper lifecycle policies for your database backups and snapshots can prevent you from paying for long-term storage of data that is no longer needed.
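DynamoDB TTL works from a numeric attribute holding an expiry time in Unix epoch seconds. The sketch below shows how an application might stamp each item and what the TTL setting looks like. The attribute name `expires_at`, the table name, and the item shape are assumptions for the example. The `TimeToLiveSpecification` keys follow the boto3 `update_time_to_live` call.

```python
import time

SECONDS_PER_DAY = 86_400


def item_with_ttl(session_id, payload, ttl_days, now=None):
    """Return an item whose 'expires_at' attribute is an epoch-seconds timestamp."""
    now = int(time.time()) if now is None else int(now)
    return {
        "session_id": session_id,
        "payload": payload,
        "expires_at": now + ttl_days * SECONDS_PER_DAY,
    }


def ttl_specification(table_name, attribute="expires_at"):
    """Keyword arguments for dynamodb.update_time_to_live (not sent here)."""
    return {
        "TableName": table_name,
        "TimeToLiveSpecification": {"Enabled": True, "AttributeName": attribute},
    }


item = item_with_ttl("s-001", {"cart": ["sku-1"]}, ttl_days=30, now=1_700_000_000)
print(item["expires_at"])
```

Expired items are removed in the background rather than at the exact expiry second, so queries that must never return stale items still need a filter on the attribute.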

Finally, you should be aware of the different purchasing options that can lead to substantial savings. For workloads with a stable and predictable resource need, using Reserved Instances for RDS can offer significant discounts compared to on-demand pricing in exchange for a one- or three-year commitment. Understanding the various cost levers available and how to apply them to different scenarios is a key skill. The exam will expect you to be able to analyze a set of requirements and recommend a solution that balances performance, availability, and cost.

A Comprehensive Guide to Deployment and Migration

The second domain of the AWS Certified Database - Specialty exam, "Deployment and Migration," carries a substantial weight of 20%. This domain shifts the focus from theoretical design to the practical, hands-on tasks involved in bringing a database to life on the AWS platform. It covers the entire lifecycle, from the initial deployment of a new database solution to the complex process of migrating existing data from on-premises data centers or other cloud environments. Success in this domain requires a blend of technical knowledge about AWS services and a strategic understanding of project execution and validation.

This section of the exam evaluates your ability to implement the designs conceptualized in the first domain. It is not enough to know which database to use; you must also know how to provision it efficiently, securely, and, most importantly, in a repeatable manner. This involves moving beyond manual, console-based setups and embracing automation. Furthermore, the domain tests your expertise in one of the most common and critical tasks for any cloud adoption journey: data migration. You will need to demonstrate a deep understanding of the tools, techniques, and strategies required to move data with minimal risk and downtime.

The questions in this domain are often scenario-based, requiring you to choose the best approach for a given situation. For instance, you might be asked to select the most appropriate migration strategy for a large, mission-critical database where downtime must be kept to an absolute minimum. This requires knowledge of concepts like change data capture (CDC) and the services that support it. You will also be tested on your ability to plan for the entire migration process, from initial data assessment and schema conversion to the final cutover and validation.

Mastering this domain is crucial as it represents the bridge between planning and execution. It is where architectural blueprints become functioning systems. A database specialist must be proficient not only in designing resilient and performant databases but also in the practical mechanics of deploying and migrating them. This part of your preparation for the AWS Certified Database - Specialty exam will solidify your skills as a hands-on practitioner, capable of delivering end-to-end database solutions on AWS.

Automating Database Deployments with Infrastructure as Code

In modern cloud environments, manual deployments are prone to error, difficult to replicate, and slow to execute. The AWS Certified Database - Specialty exam expects candidates to be proficient in automating database deployments using Infrastructure as Code (IaC). IaC is the practice of managing and provisioning infrastructure through machine-readable definition files rather than physical hardware configuration or interactive configuration tools. The primary tool for this within the AWS ecosystem is AWS CloudFormation. You must have a solid understanding of how to use CloudFormation to define and deploy your database resources.

Using CloudFormation, you can create a template that describes all the AWS resources needed for your database solution, such as the RDS instance, the security groups, the database parameter groups, and the IAM roles. This template, written in YAML or JSON, serves as the single source of truth for your infrastructure. You should be familiar with the syntax for defining common database resources and their properties. For example, you should know how to specify the engine type, instance class, Multi-AZ configuration, and backup retention period for an RDS instance within a CloudFormation template.
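A minimal sketch of such a template, generated from Python so the properties named above are easy to see. The resource type and property names are those of `AWS::RDS::DBInstance`. The logical ID `AppDatabase`, the user name, and the default values are illustrative assumptions, not a recommended configuration.

```python
import json


def rds_template(engine="mysql", instance_class="db.t3.medium",
                 multi_az=True, retention_days=7):
    """Return a CloudFormation template (as a dict) for one RDS instance."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Example RDS instance (illustrative values only)",
        "Resources": {
            "AppDatabase": {                      # hypothetical logical ID
                "Type": "AWS::RDS::DBInstance",
                "DeletionPolicy": "Snapshot",     # keep a final snapshot on stack deletion
                "Properties": {
                    "Engine": engine,
                    "DBInstanceClass": instance_class,
                    "AllocatedStorage": "100",    # CloudFormation expects a string here
                    "MultiAZ": multi_az,
                    "BackupRetentionPeriod": retention_days,
                    "StorageEncrypted": True,
                    "MasterUsername": "dbadmin",  # hypothetical user name
                    "ManageMasterUserPassword": True,
                },
            }
        },
    }


template = rds_template(engine="postgres", retention_days=14)
print(json.dumps(template, indent=2))
```

Because the template is plain data, the same function can produce development and production variants that differ only in instance class, Multi-AZ, and retention.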

The benefits of using IaC are numerous and are frequently tested concepts. It enables you to create consistent and repeatable environments, which is critical for development, testing, and production. It also allows you to version-control your infrastructure, just as you would with application code, making it easy to track changes and roll back if necessary. The exam might present a scenario where a company needs to deploy identical database environments across multiple regions or accounts, and using CloudFormation would be the most efficient and reliable solution.

Beyond just the initial deployment, you should also understand how to manage updates to your infrastructure using CloudFormation change sets. Change sets allow you to preview the changes that CloudFormation will make to your stack before you execute them, giving you an opportunity to verify that the proposed changes are correct. This is a crucial safety mechanism that helps prevent accidental misconfigurations. Proficiency in IaC is a hallmark of a modern cloud professional, and it is an essential skill for anyone preparing for the AWS Certified Database - Specialty exam.

Choosing the Right Data Migration Strategy

Data migration is a complex undertaking, and choosing the right strategy is critical to its success. The AWS Certified Database - Specialty exam will test your ability to evaluate different migration scenarios and select the most appropriate approach. Broadly, migrations can be categorized as homogeneous or heterogeneous. A homogeneous migration is one where the source and target database engines are the same, for example, migrating from a self-managed MySQL database on-premises to Amazon RDS for MySQL. These migrations are generally more straightforward.

A heterogeneous migration, on the other hand, involves changing the database engine as part of the move, such as migrating from an on-premises Oracle database to Amazon Aurora with PostgreSQL compatibility. These migrations are significantly more complex because they require schema and code conversion in addition to the data migration itself. You must understand the challenges associated with heterogeneous migrations, such as differences in data types, stored procedures, and proprietary database features. This is where tools like the AWS Schema Conversion Tool (SCT) become indispensable.

Another key consideration is the acceptable amount of downtime. For non-critical systems, a simple offline migration using a backup and restore method might be sufficient. However, for mission-critical applications, downtime must be minimized. In these cases, a strategy that involves continuous data replication is required. This allows you to keep the source and target databases in sync while you perform testing and prepare for the final cutover. This approach, often facilitated by AWS Database Migration Service (DMS), enables a migration with near-zero downtime.

The exam will present you with scenarios that have different constraints related to downtime, data volume, network bandwidth, and database engine type. Your task is to weigh these factors and choose the optimal strategy. This could involve using services like AWS Snowball for large-scale offline data transfers, setting up a DMS replication instance, or using native database replication tools. A deep understanding of the different migration patterns and the AWS services that support them is essential for this part of the exam.
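The trade-off between network transfer and an offline device often comes down to simple arithmetic. The sketch below estimates how long a dataset takes to move over a link. The 80% usable-bandwidth figure and the 50 TB over 1 Gbps scenario are assumptions chosen for the example, not AWS guidance.

```python
def transfer_days(data_tb, link_mbps, utilization=0.8):
    """Estimate days needed to move data_tb (decimal terabytes) over a link.

    utilization is the assumed fraction of the link the migration can use.
    """
    bits = data_tb * 1e12 * 8
    usable_bits_per_second = link_mbps * 1e6 * utilization
    return bits / usable_bits_per_second / 86_400


# Hypothetical scenario: 50 TB over a 1 Gbps link at 80% utilization.
days = transfer_days(50, 1000)
print(f"{days:.1f} days")
```

If the estimate exceeds the migration window, an offline transfer for the bulk load followed by replication of ongoing changes becomes the more plausible answer.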

Leveraging AWS Database Migration Service (DMS) and Schema Conversion Tool (SCT)

For many migration scenarios on the AWS Certified Database - Specialty exam, the AWS Database Migration Service (DMS) and the AWS Schema Conversion Tool (SCT) will be the correct answer. It is therefore imperative that you have a deep understanding of what these services do, how they work together, and their limitations. AWS DMS is a managed service that helps you migrate databases to AWS easily and securely. Its key feature is its support for both homogeneous and heterogeneous migrations and its ability to perform continuous data replication using Change Data Capture (CDC).

You should understand the architecture of AWS DMS, which consists of a replication instance, a source endpoint, a target endpoint, and one or more replication tasks that move data between them. The replication instance is a managed Amazon EC2 instance that runs the replication software, and you need to choose an appropriate instance size based on the volume of data you are migrating. DMS can be used for a one-time migration or for ongoing replication. The CDC feature is particularly powerful as it captures changes from the source database's transaction logs and applies them to the target, allowing for minimal downtime migrations.
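The pieces described above come together in a replication task definition. The sketch below assembles the arguments for one: the keys follow the boto3 `create_replication_task` call, and the table-mapping rules use the DMS selection-rule JSON format. The task name, schema names, and the ARN placeholders are hypothetical, and no AWS call is made.

```python
import json


def selection_rule(rule_id, schema, table="%"):
    """One DMS selection rule that includes matching tables ('%' is a wildcard)."""
    return {
        "rule-type": "selection",
        "rule-id": str(rule_id),
        "rule-name": f"include-{schema}",
        "object-locator": {"schema-name": schema, "table-name": table},
        "rule-action": "include",
    }


def replication_task_request(task_id, source_arn, target_arn, instance_arn, schemas):
    """Keyword arguments for dms.create_replication_task (full load plus CDC)."""
    mappings = {"rules": [selection_rule(i, s) for i, s in enumerate(schemas, start=1)]}
    return {
        "ReplicationTaskIdentifier": task_id,
        "SourceEndpointArn": source_arn,
        "TargetEndpointArn": target_arn,
        "ReplicationInstanceArn": instance_arn,
        "MigrationType": "full-load-and-cdc",   # other values: "full-load", "cdc"
        "TableMappings": json.dumps(mappings),  # the API expects a JSON string
    }


task = replication_task_request(
    "orders-migration", "SOURCE_ENDPOINT_ARN", "TARGET_ENDPOINT_ARN",
    "REPLICATION_INSTANCE_ARN", ["sales", "inventory"],
)
print(task["MigrationType"], task["TableMappings"])
```

Choosing `full-load-and-cdc` is what enables the minimal-downtime pattern: the initial copy runs first, then changes captured from the source logs are applied until cutover.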

While DMS handles the movement of data, the AWS Schema Conversion Tool (SCT) handles the conversion of the database schema and application code. SCT is primarily used for heterogeneous migrations. It analyzes the source database schema, including objects like stored procedures, functions, and triggers, and automatically converts them to a format compatible with the target database engine. It also provides a detailed assessment report that highlights any objects that cannot be converted automatically and provides guidance on how to manually remediate them.

The synergy between SCT and DMS is a common topic in the exam. Typically, you would first use SCT to assess the migration complexity and convert the schema. Then, once the target schema is created, you would use DMS to perform the full data load and enable ongoing replication. Knowing when and how to use these two services together is a critical skill. You should also be aware of their supported sources and targets and any prerequisites for using them, such as required database permissions or specific engine versions.

Executing and Validating a Successful Data Migration

The final phase of any migration project is the execution of the cutover and the validation of the migrated data. The AWS Certified Database - Specialty exam will test your knowledge of the best practices for these critical steps. The execution of the cutover is the point at which you switch your application traffic from the old source database to the new target database on AWS. For minimal downtime migrations using DMS, this process needs to be carefully orchestrated.

The typical cutover process involves stopping application traffic to the source database, ensuring that all pending changes have been replicated by DMS to the target, and then re-pointing your application's connection strings to the new database. After a brief period of testing to ensure the application is working correctly with the new database, you can resume traffic. Understanding this sequence of events and the coordination required is important. You should also be prepared for questions about fallback strategies in case the cutover does not go as planned.

Data validation is an equally crucial step to ensure the integrity of the migration. You cannot simply assume that the data was moved correctly; you must verify it. AWS DMS provides built-in data validation capabilities. It can compare the data in the source and target tables row by row and report any mismatches. You should know how to enable and configure this feature and interpret its results. For large tables, DMS can perform validation in chunks to manage performance impact.

Beyond the built-in tools, you may also need to perform your own validation checks. This could involve running specific queries on both the source and target databases to compare row counts, check for data consistency, or test application-specific business logic. Having a comprehensive validation plan is a key part of a successful migration. The exam will expect you to understand the importance of validation and the various methods available to perform it thoroughly, ensuring a high level of confidence in the migrated data.
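A custom check of this kind can be as simple as comparing a row count and a checksum per table. The sketch below uses two in-memory SQLite databases as stand-ins for the source and target. The table, columns, and data are invented for the example, and a real migration would connect to the actual engines instead.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key_column):
    """Return (row_count, sha256 hex digest) of a table read in key order."""
    digest = hashlib.sha256()
    count = 0
    # Table and column names are interpolated, so they must come from trusted config.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key_column}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def make_db(rows):
    """Build a throwaway database with a hypothetical 'orders' table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    return conn


source = make_db([(1, 9.99), (2, 25.00), (3, 4.50)])
target_ok = make_db([(1, 9.99), (2, 25.00), (3, 4.50)])
target_bad = make_db([(1, 9.99), (2, 25.00), (3, 4.49)])

matches = table_fingerprint(source, "orders", "id") == table_fingerprint(target_ok, "orders", "id")
mismatch = table_fingerprint(source, "orders", "id") != table_fingerprint(target_bad, "orders", "id")
print(matches, mismatch)
```

For heterogeneous migrations, values may need normalizing before hashing (for example numeric precision or timestamp formats), since the two engines can represent the same data differently.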

Day-to-Day Management and Operational Tasks

The third domain of the AWS Certified Database - Specialty exam is "Management and Operations," which accounts for 18% of the questions. This domain focuses on the ongoing responsibilities of a database specialist after a database has been deployed. It covers the routine tasks required to keep the database environment healthy, secure, and performing optimally. A key aspect of this is managing database configurations. On AWS, this is primarily done through parameter groups and option groups for services like RDS.

You must have a detailed understanding of parameter groups. These are containers for engine configuration values that are applied to one or more database instances. There are default parameter groups, but the best practice is to create your own custom parameter groups so you have fine-grained control over your database settings. You should know how to modify parameters, the difference between static and dynamic parameters (and that static ones require an instance reboot to take effect), and how to associate a parameter group with a database instance.
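The static versus dynamic distinction maps directly onto how a change is submitted. The sketch below picks an apply method per parameter and reports whether a reboot is needed. The entry format follows the `Parameters` list of the boto3 `modify_db_parameter_group` call. The apply types shown are illustrative for MySQL and should in practice be read from the parameter group itself, since they vary by engine and version.

```python
def parameter_changes(desired, apply_types):
    """Build parameter entries, choosing ApplyMethod from each parameter's type."""
    changes = []
    for name, value in desired.items():
        # Dynamic parameters can take effect immediately; static ones wait for a reboot.
        method = "immediate" if apply_types[name] == "dynamic" else "pending-reboot"
        changes.append(
            {"ParameterName": name, "ParameterValue": str(value), "ApplyMethod": method}
        )
    return changes


def needs_reboot(changes):
    """True if any change only takes effect after the instance restarts."""
    return any(c["ApplyMethod"] == "pending-reboot" for c in changes)


# Illustrative apply types; verify against the real parameter group before relying on them.
apply_types = {"slow_query_log": "dynamic", "innodb_log_file_size": "static"}
changes = parameter_changes(
    {"slow_query_log": 1, "innodb_log_file_size": 268435456}, apply_types
)
print(changes, needs_reboot(changes))
```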

Option groups are used to enable and configure additional features for certain database engines, such as Oracle Statspack or Microsoft SQL Server Transparent Data Encryption (TDE). Similar to parameter groups, you need to know how to create custom option groups and attach them to your instances. The exam may ask you to identify the correct method for enabling a specific database feature, and the answer will likely involve an option group.

Another critical operational task is managing maintenance and patching. AWS performs regular maintenance on its managed database services to apply updates to the underlying operating system and database engine. You should understand how to configure the maintenance window for your database instances to control when these activities occur. You can also proactively apply minor version upgrades yourself. The exam will test your understanding of these processes and the potential impact they can have on database availability.

Implementing Robust Backup and Restore Procedures

A cornerstone of database operations is having a solid backup and restore strategy. The AWS Certified Database - Specialty exam dedicates significant attention to this topic. You are expected to be an expert on the backup capabilities of the various AWS database services. For Amazon RDS and Aurora, the primary backup mechanism is automated snapshots. You need to know how to enable this feature, configure the backup retention period (up to 35 days), and understand that these backups enable Point-in-Time Recovery (PITR).

Point-in-Time Recovery is a critical feature that allows you to restore your database to any specific second within your backup retention period, up to the latest restorable time, which is typically within the last five minutes. This is made possible because, in addition to the daily snapshots, RDS also continuously uploads transaction logs to Amazon S3. The exam will test your understanding of how to perform a PITR restore, and you should know that it always creates a new database instance. You cannot restore directly to an existing instance.
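The two rules in that paragraph, a restore time inside the recoverable window and a new target instance, can be checked before any request is sent. The sketch below does so and returns arguments in the shape of the boto3 `restore_db_instance_to_point_in_time` call. The instance names and timestamps are hypothetical, and the window bounds are assumed to be supplied by the caller from the instance's reported restore window.

```python
from datetime import datetime, timedelta, timezone


def pitr_request(source_id, target_id, restore_time, earliest, latest):
    """Validate a point-in-time restore and return the request arguments."""
    if source_id == target_id:
        raise ValueError("PITR always creates a new instance; choose a new identifier")
    if not (earliest <= restore_time <= latest):
        raise ValueError("restore time is outside the recoverable window")
    return {
        "SourceDBInstanceIdentifier": source_id,
        "TargetDBInstanceIdentifier": target_id,
        "RestoreTime": restore_time,
    }


# Hypothetical window: seven days of retention ending five minutes before 'now'.
now = datetime(2024, 4, 12, 12, 0, tzinfo=timezone.utc)
earliest = now - timedelta(days=7)
latest = now - timedelta(minutes=5)

request = pitr_request("orders-db", "orders-db-restored",
                       now - timedelta(hours=3), earliest, latest)
print(request["TargetDBInstanceIdentifier"], request["RestoreTime"].isoformat())
```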

In addition to automated backups, you can also take manual snapshots. Manual snapshots are not subject to the backup retention period and are kept until you explicitly delete them. This makes them ideal for long-term archival purposes or for creating a baseline before a major change. You should also know how to copy snapshots, both within the same region and to other regions. Copying snapshots to another region is a key component of many disaster recovery strategies.

The restore process itself is also a testable topic. You need to know the steps involved in restoring from a snapshot and the considerations that apply. For example, when you restore an RDS instance, it will come up with a new endpoint address, and you will need to attach the correct security groups and parameter groups. Testing your restore procedures regularly is a critical best practice to ensure that your backups are valid and that you can meet your recovery time objectives in a real disaster scenario.

Advanced Monitoring and Security for the AWS Certified Database - Specialty Exam

The final two domains of the AWS Certified Database - Specialty exam, "Monitoring and Troubleshooting" and "Database Security," each represent 18% of the total score. While distinct, these domains are deeply interconnected, as effective security often relies on robust monitoring, and troubleshooting can frequently uncover underlying security issues. This part of the guide focuses on the advanced skills required to master these crucial areas. Excelling here requires a proactive mindset, shifting from simply reacting to problems to anticipating them and building systems that are both resilient and secure by design.

The monitoring and troubleshooting domain tests your ability to maintain the health and performance of your database solutions. It is not enough to deploy a database; you must be able to observe its behavior, identify deviations from the norm, and diagnose the root cause of any issues that arise. This involves a deep familiarity with AWS-native monitoring tools and a systematic approach to problem-solving. It is about transforming raw data and metrics into actionable insights that drive performance optimization and improve reliability.

Simultaneously, the database security domain assesses your proficiency in protecting your organization's most valuable asset: its data. This goes far beyond basic access control. It encompasses a multi-layered security strategy that includes encryption, network isolation, auditing, and authentication. The exam will present you with complex scenarios that require you to apply security best practices to real-world database architectures. A successful candidate must demonstrate a thorough understanding of the threat landscape and the AWS services and features available to mitigate those threats.

Mastering these domains is what elevates a competent database administrator to an expert database specialist. It demonstrates that you can not only build and manage databases but also operate them at a high level of performance and protect them against a wide range of security risks. Your preparation for the AWS Certified Database - Specialty exam must include dedicated and in-depth study of these operational and security principles, as they are fundamental to running mission-critical workloads in the cloud.

Developing Effective Monitoring and Alerting Strategies

Proactive monitoring is the foundation of a well-managed database environment. The AWS Certified Database - Specialty exam expects you to know how to develop and implement a comprehensive monitoring and alerting strategy. The goal of such a strategy is to detect potential issues before they impact your application's users. This begins with identifying the key performance indicators (KPIs) for your specific database and workload. These metrics serve as the baseline for normal operation and are the basis for your alerting.

For a relational database like Amazon RDS or Aurora, critical metrics to monitor include CPU utilization, available memory, disk space, and I/O operations per second (IOPS). High CPU utilization could indicate inefficient queries, while a sudden drop in available memory might signal a memory leak. You should also monitor database-specific metrics, such as the number of active connections. For a NoSQL database like Amazon DynamoDB, key metrics include consumed read and write capacity units, throttled requests, and request latency. Monitoring these helps ensure you have provisioned adequate capacity and that your application is performing as expected.

Once you have identified the key metrics, the next step is to set up alerting. The primary service for this in AWS is Amazon CloudWatch Alarms. You must be proficient in creating alarms based on static thresholds or anomaly detection. For example, you could configure an alarm to trigger and send a notification via Amazon Simple Notification Service (SNS) whenever CPU utilization on your RDS instance exceeds 80% for a sustained period. This allows you to be notified of potential problems automatically, enabling a rapid response.
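The 80% CPU example can be written down as an alarm definition. The sketch below builds the arguments in the shape of the boto3 CloudWatch `put_metric_alarm` call, using the `AWS/RDS` namespace and the `CPUUtilization` metric. The alarm name, the 15-minute interpretation of "a sustained period", and the SNS topic ARN are assumptions for illustration.

```python
def cpu_alarm_request(db_instance_id, sns_topic_arn, threshold=80.0, minutes=15):
    """Keyword arguments for cloudwatch.put_metric_alarm on RDS CPU utilization."""
    period = 300  # seconds per datapoint
    return {
        "AlarmName": f"{db_instance_id}-high-cpu",
        "Namespace": "AWS/RDS",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "DBInstanceIdentifier", "Value": db_instance_id}],
        "Statistic": "Average",
        "Period": period,
        # Require every datapoint in the window to breach, which filters short spikes.
        "EvaluationPeriods": minutes * 60 // period,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],
    }


# Hypothetical instance name and topic ARN.
alarm = cpu_alarm_request("orders-db", "arn:aws:sns:us-east-1:123456789012:db-alerts")
print(alarm["AlarmName"], alarm["EvaluationPeriods"])
```

Requiring several consecutive breaching periods, rather than a single datapoint, is one simple way to cut down on false positives.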

A truly effective strategy goes beyond simple threshold-based alarms. It involves creating a holistic view of your system's health. This can be achieved by creating custom CloudWatch Dashboards that consolidate key metrics from your databases, application servers, and other related services in a single place. This provides a unified view that can help you quickly correlate events across different parts of your architecture during a troubleshooting session. The exam will test your ability to choose the right metrics and design an alerting strategy that is both effective and minimizes false positives.



@FABIOLA, IMPOV, the official course will equip you with all the information & knowledge required to achieve the objectives of the DBS-C01 exam. BUT! it’ll be wise for you to dl the practice Qs offered here to see the exam format & question types. when you combine them, it’ll be much easier for you to succeed in the final assessment…..HTH ….

hello folks….nei1!!!! can taking the official AWS Certified Database – Specialty course alone help me achieve the passing score in this amazon DBS-C01 exam??

@jennifer, IMO, free of charge amazon dbs-c01 questions are authentic & dependable as I met most of these items in the main exam...try this file out and i’m sure it’ll make a huge difference. GL!

u know, my best friend told me to try DBS-C01 practice test questions offered here for my exam. after training with them for a couple of weeks, i nailed my test. BTW most of the assessment questions came from this file. guys, you’ve done an excellent job! XOXO!!

hi friends… i want to retake my exam because i scored poorly in the first try. will this Amazon DBS-C01 examtest help me out?? is it authentic & dependable??? tia!!!

just aced my exam… can’t thank exam-collection enough for the free dbs-c01 practice test! this material complements really well with the official training course for amazon aws certified database - specialty assessment. TBH, it was a vital contributor to my success in the test. GJ guys!!!

well, it was a good experience practicing with all the DBS-C01 questions available here multiple times….. All of them are provided with detailed answers which helped me to understand the exam topics better... w/o them, i wouldn’t have been able to clear my test successfully. thx!