
Mastering the Foundations: A Comprehensive Guide to the Dell DEA-1TT4 Exam

The DEA-1TT4 Exam, formally known as the Associate - Information Storage and Management Version 4.0 exam, serves as a crucial benchmark for professionals entering the field of data storage. Passing this exam validates a candidate's foundational knowledge of storage-related technologies, concepts, and solutions. It demonstrates an understanding of how storage components work together within a modern data center to meet business requirements for data availability, security, and performance. As an associate-level credential, it is a common starting point for more advanced storage certifications. Preparing for the DEA-1TT4 Exam requires a structured approach, beginning with the very basics of what constitutes information and why its management is critical.

The curriculum for the DEA-1TT4 Exam is designed to be comprehensive, covering a wide array of topics that are essential for any aspiring storage administrator or engineer. It delves into the evolution of storage, from simple direct-attached disks to complex, virtualized, and cloud-integrated environments. Candidates are expected to understand not just the 'what' but also the 'why' behind different storage architectures and technologies. This includes grasping the trade-offs between performance, cost, and capacity, and how different solutions address specific business challenges. The exam is not merely a test of memorization but of conceptual understanding and the ability to apply knowledge to practical scenarios.

Success in the DEA-1TT4 Exam opens doors to various career opportunities in IT infrastructure, cloud computing, and data management. It provides a solid platform upon which to build a career, offering the necessary vocabulary and understanding to engage in meaningful discussions about storage strategy and implementation. This five-part guide is structured to walk you through the core domains tested in the examination. This first part will lay the groundwork, focusing on the fundamental principles of data, information, and the modern data center, which are the building blocks for all other topics covered by the DEA-1TT4 Exam.

The Digital Transformation and the Role of Data

In the contemporary digital economy, data is frequently described as the new oil—a valuable asset that can be refined to drive business intelligence, innovation, and competitive advantage. The journey to passing the DEA-1TT4 Exam begins with a deep appreciation for this concept. Data in its raw form consists of basic facts and figures, such as numbers, text, or images. When this data is processed, organized, and given context, it transforms into meaningful information. This information is the lifeblood of modern organizations, informing everything from operational decisions to long-term strategic planning. Understanding this distinction is fundamental.

The exponential growth of data, often referred to as the data explosion, is a key driver behind the need for skilled storage professionals. This growth is fueled by various sources, including social media, the Internet of Things (IoT), mobile devices, and traditional business applications. Organizations are faced with the challenge of not only storing this massive volume of data but also ensuring its accessibility, integrity, and security. The DEA-1TT4 Exam tests a candidate's awareness of these challenges and the technologies developed to address them. The value of data is directly linked to its availability; if it cannot be accessed when needed, its value diminishes significantly.

This transformation requires a shift from traditional data management approaches to more modern, agile, and scalable solutions. Businesses need infrastructure that can handle structured data, such as that found in relational databases, as well as unstructured data, like videos, emails, and sensor readings. A key theme throughout the DEA-1TT4 Exam preparation is how to build and manage a storage environment that is robust and flexible enough to support these diverse data types. This involves understanding the characteristics of data and how those characteristics influence the choice of storage platform, from high-performance flash arrays to cost-effective archival systems.

Understanding the Modern Data Center

The data center is the physical heart of the digital enterprise, housing the critical IT infrastructure that stores, processes, and disseminates data and applications. For the purpose of the DEA-1TT4 Exam, a candidate must understand the data center not just as a room full of servers, but as a complex ecosystem of interconnected components. These core components include compute, storage, and networking systems, all supported by robust physical infrastructure such as power, cooling, and security systems. The seamless integration and management of these elements are paramount to ensuring business continuity and operational efficiency.

A modern data center is defined by its ability to adapt to changing business needs, a concept often referred to as agility. Key characteristics include virtualization, automation, and a software-defined approach. Virtualization allows for the abstraction of physical resources, enabling multiple applications and operating systems to run on a single physical server, thereby improving resource utilization and reducing costs. Automation streamlines routine administrative tasks, freeing up IT staff to focus on more strategic initiatives. The DEA-1TT4 Exam curriculum emphasizes how these principles apply specifically to the storage domain, leading to more efficient and responsive data management.

Furthermore, the data center must provide high levels of availability and security. Availability is often measured by uptime percentages and is achieved through redundancy at every level, from power supplies to network links and storage arrays. Security involves protecting data from both internal and external threats through a layered defense-in-depth strategy. This includes physical security measures to control access to the facility, as well as logical security controls to protect the data itself. A thorough understanding of these data center pillars is essential for any question on the DEA-1TT4 Exam that involves infrastructure design or management.
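Uptime percentages are easier to reason about once they are converted into allowed downtime. The following Python sketch does that arithmetic, assuming a 365-day year (525,600 minutes); `allowed_downtime_minutes` is just an illustrative helper name.

```python
# Minutes in a non-leap year: 365 days x 24 hours x 60 minutes.
MINUTES_PER_YEAR = 365 * 24 * 60


def allowed_downtime_minutes(availability_pct: float) -> float:
    """Return the maximum yearly downtime implied by an uptime percentage."""
    if not 0.0 <= availability_pct <= 100.0:
        raise ValueError("availability must be between 0 and 100 percent")
    return MINUTES_PER_YEAR * (1 - availability_pct / 100.0)


if __name__ == "__main__":
    for pct in (99.0, 99.9, 99.99, 99.999):
        print(f"{pct}% uptime -> {allowed_downtime_minutes(pct):.1f} minutes of downtime per year")
```

By this arithmetic, 99.9% availability allows about 525.6 minutes (roughly 8.8 hours) of downtime per year, while 99.999% ("five nines") allows only about 5.3 minutes, which is why each additional nine demands more redundancy.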

Core Components of a Storage System

At the heart of any data management strategy is the storage system itself. To excel in the DEA-1TT4 Exam, one must possess a detailed knowledge of what constitutes a modern storage array. At the most fundamental level, a storage system is composed of physical disk drives, which are the media where data is permanently stored. These can be traditional hard disk drives (HDDs) with spinning platters or solid-state drives (SSDs) that use flash memory. The choice between HDD, SSD, or a hybrid approach depends on the specific requirements for performance, capacity, and cost for the workload being supported.

Beyond the physical drives, an intelligent storage system contains several other critical components. The controllers, or storage processors, are the brains of the system. They run the operating environment of the array, manage data placement, and handle all read and write requests from the connected hosts or servers. Cache is another vital component. It is a small amount of very high-speed, volatile memory (RAM) used to hold frequently accessed data. By servicing read requests from cache instead of the slower back-end disks, the storage system can dramatically improve application performance. Cache helps writes as well: with write-back caching, the array acknowledges a write as soon as the data is in cache and commits it to the disks afterward.
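To see why cache hits matter, here is a toy read cache in Python. It assumes a least-recently-used (LRU) eviction policy, which is one common choice but not necessarily what any given array uses, and a plain dictionary stands in for the slow back-end disks. The class and method names are hypothetical.

```python
from collections import OrderedDict


class LRUReadCache:
    """Toy read cache holding up to `capacity` blocks, evicting the least recently used."""

    def __init__(self, capacity: int, backend: dict):
        self.capacity = capacity
        self.backend = backend        # stands in for the slower back-end disks
        self.blocks = OrderedDict()   # block address -> data, oldest access first
        self.hits = 0
        self.misses = 0

    def read(self, lba: int) -> bytes:
        if lba in self.blocks:
            self.hits += 1
            self.blocks.move_to_end(lba)      # mark as most recently used
            return self.blocks[lba]
        self.misses += 1
        data = self.backend[lba]              # slow path: fetch from disk
        self.blocks[lba] = data
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)   # evict the least recently used block
        return data


if __name__ == "__main__":
    disks = {lba: f"block-{lba}".encode() for lba in range(100)}
    cache = LRUReadCache(capacity=3, backend=disks)
    for lba in [1, 2, 1, 3, 1, 4, 2]:
        cache.read(lba)
    print(f"hits={cache.hits} misses={cache.misses}")
```

With a capacity of three blocks, this access pattern produces two hits and five misses; each hit is a request the back-end disks never see. Real arrays add write caching, prefetching for sequential reads, and cache mirroring between controllers, none of which is modeled here.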

The connectivity of the storage system is facilitated through front-end and back-end ports. Front-end ports connect the storage system to the servers that consume the storage. These ports support various protocols, such as Fibre Channel or iSCSI. Back-end ports, on the other hand, connect the controllers to the disk drives within the array. Understanding the data path, from the host through the front-end ports, into the cache and controllers, and finally to the back-end disks, is a core concept that candidates for the DEA-1TT4 Exam must master to diagnose performance issues and design efficient storage solutions.

Intelligent Storage Systems Explained

Modern storage arrays are far more than simple collections of disks. They are sophisticated, intelligent systems designed to provide advanced features that enhance data management, performance, and protection. A key topic within the DEA-1TT4 Exam syllabus is the concept of an intelligent storage system. These systems integrate powerful hardware with advanced software to automate and optimize storage tasks that were once performed manually. This intelligence is what differentiates a modern enterprise storage array from a basic Just a Bunch of Disks (JBOD) enclosure.

One of the primary features of an intelligent storage system is virtualization. Storage virtualization abstracts the physical location and characteristics of the storage from the servers that use it. This allows administrators to pool storage resources from multiple physical arrays and present them as a single, unified resource. This simplifies management, improves utilization, and enables seamless data migration between different tiers of storage without disrupting applications. Understanding how virtualization works at the block level is critical for the DEA-1TT4 Exam.

Other intelligent features include automated storage tiering, thin provisioning, and data reduction technologies. Automated tiering dynamically moves data between different types of disks (e.g., from fast SSDs to slower, high-capacity HDDs) based on its access frequency. Thin provisioning allocates storage capacity to applications on an as-needed basis, improving overall capacity utilization. Data reduction techniques, such as deduplication and compression, reduce the physical amount of storage required to store a given data set. A firm grasp of these features and their benefits is essential for answering questions related to storage efficiency on the DEA-1TT4 Exam.
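Deduplication is the easiest of these data reduction techniques to show in code. The sketch below assumes fixed-size 4 KiB chunks and SHA-256 fingerprints; production systems may instead use variable-size chunking, different hash functions, or a byte-by-byte comparison when fingerprints match. The function names are illustrative only.

```python
import hashlib


def deduplicate(data: bytes, chunk_size: int = 4096):
    """Split data into fixed-size chunks and keep one copy of each unique chunk.

    Returns (chunk_store, recipe): chunk_store maps fingerprint -> chunk bytes,
    and recipe is the ordered list of fingerprints needed to rebuild the data.
    """
    chunk_store = {}
    recipe = []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        fingerprint = hashlib.sha256(chunk).hexdigest()
        chunk_store.setdefault(fingerprint, chunk)  # store only the first copy
        recipe.append(fingerprint)
    return chunk_store, recipe


def rehydrate(chunk_store: dict, recipe: list) -> bytes:
    """Rebuild the original data by following the recipe."""
    return b"".join(chunk_store[fp] for fp in recipe)


if __name__ == "__main__":
    # Ten logical 4 KiB chunks, but only two distinct patterns.
    data = (b"A" * 4096 + b"B" * 4096) * 5
    store, recipe = deduplicate(data)
    stored = sum(len(chunk) for chunk in store.values())
    print(f"logical={len(data)} bytes, stored={stored} bytes, ratio={len(data) / stored:.0f}:1")
```

The sample input is 40 KiB of logical data made of only two distinct 4 KiB chunks, so just 8 KiB is actually stored, a 5:1 reduction, and `rehydrate` returns the original bytes unchanged.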

Key Storage Performance Metrics

Performance is one of the most critical aspects of storage system design and management. The DEA-1TT4 Exam requires candidates to be familiar with the key metrics used to measure and evaluate storage performance. The three most important metrics are Input/Output Operations Per Second (IOPS), throughput (or bandwidth), and latency (or response time). Understanding the relationship between these three metrics and how they relate to different application workloads is fundamental to becoming a competent storage professional. IOPS measures the number of read and write operations a storage system can perform in one second and is particularly important for transactional workloads like databases.

Throughput, typically measured in megabytes or gigabytes per second (MB/s or GB/s), represents the amount of data that can be transferred between a host and the storage system in a given period. Throughput is a critical metric for workloads that involve large, sequential data transfers, such as video streaming, data warehousing, and backup operations. A system can have high IOPS but low throughput, or vice versa, depending on the size of the I/O operations. Small, random I/O requests will drive high IOPS, while large, sequential I/O requests will result in high throughput.
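The relationship between the two metrics is simply throughput = IOPS × I/O size. The Python sketch below applies it to two hypothetical workloads (the numbers are illustrative, not measurements), using binary units where 1 MiB = 1,024 KiB.

```python
def throughput_mib_per_s(iops: float, io_size_kib: float) -> float:
    """Throughput in MiB/s = IOPS x I/O size (KiB), with 1 MiB = 1,024 KiB."""
    return iops * io_size_kib / 1024


if __name__ == "__main__":
    # Hypothetical workloads chosen to contrast small and large I/O sizes.
    oltp = throughput_mib_per_s(iops=20_000, io_size_kib=8)      # small, random I/O
    backup = throughput_mib_per_s(iops=2_000, io_size_kib=1024)  # large, sequential I/O
    print(f"OLTP:   20,000 IOPS x 8 KiB = {oltp:.2f} MiB/s")
    print(f"Backup:  2,000 IOPS x 1 MiB = {backup:.2f} MiB/s")
```

The small-block workload performs ten times as many operations yet moves only about 156 MiB/s, while the large-block workload reaches 2,000 MiB/s. That is the "high IOPS but low throughput, or vice versa" effect in concrete numbers.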

Latency is arguably the most important metric from the perspective of the end-user or application. It measures the time it takes to complete a single I/O request, from the moment it is sent by the host to the moment a confirmation is received. It is usually measured in milliseconds (ms) or microseconds (µs). Low latency is crucial for applications where responsiveness is key, such as online transaction processing (OLTP) systems and virtual desktop infrastructure (VDI). The DEA-1TT4 Exam will test your ability to identify which performance metric is most relevant for a given application scenario and how different storage technologies impact these metrics.
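Latency, IOPS, and the number of outstanding I/Os are tied together by Little's Law, N = X × R, where N is the average number of I/Os in flight (the queue depth), X is the rate in IOPS, and R is the average response time. The law holds for a system in steady state. The helper names in this Python sketch are hypothetical.

```python
def avg_latency_ms(outstanding_ios: float, iops: float) -> float:
    """Little's Law solved for R: R = N / X, converted from seconds to milliseconds."""
    return outstanding_ios / iops * 1000


def max_iops(outstanding_ios: float, latency_ms: float) -> float:
    """Little's Law solved for X: X = N / R, with R converted to seconds."""
    return outstanding_ios / (latency_ms / 1000)


if __name__ == "__main__":
    print(f"{avg_latency_ms(outstanding_ios=32, iops=16_000):.2f} ms average latency")
    print(f"{max_iops(outstanding_ios=1, latency_ms=0.5):.0f} IOPS ceiling at queue depth 1")
```

For instance, 32 outstanding I/Os served at 16,000 IOPS imply an average response time of 2 ms, and an application that issues one I/O at a time against storage with 0.5 ms latency cannot exceed about 2,000 IOPS, however capable the array is otherwise.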

RAID Levels and Data Protection

Data protection is a paramount concern for any organization, and RAID (Redundant Array of Independent Disks) is a foundational technology for protecting data against disk drive failures. A significant portion of the DEA-1TT4 Exam is dedicated to understanding different RAID levels and their respective characteristics. RAID combines multiple physical disk drives into a single logical unit to provide data redundancy, improved performance, or both. It is not a backup solution but rather a form of fault tolerance that allows a storage system to continue operating even after one or more drives have failed.

Different RAID levels offer different balances of protection, performance, and usable capacity. For example, RAID 1 (mirroring) provides excellent read performance and high data protection by writing identical data to two separate disks, but it has a 50% capacity overhead. RAID 5 (striping with distributed parity) offers a good balance of performance and capacity efficiency by striping data across multiple disks and distributing parity information. It can withstand the failure of a single drive. RAID 6 is similar but uses double parity, allowing it to tolerate the failure of two drives simultaneously, offering even greater protection.
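RAID 5 parity is computed with the exclusive OR (XOR) operation, which is what makes rebuilding a failed drive possible: XORing the surviving strips of a stripe with its parity strip regenerates the missing one. This Python sketch shows a single stripe on a hypothetical four-drive set. It ignores the rotation of parity across drives, and RAID 6's second parity, which is usually computed with a different code such as Reed-Solomon, is not shown.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equal-length blocks (the RAID 5 parity operation)."""
    if len({len(block) for block in blocks}) != 1:
        raise ValueError("all blocks must be the same length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


if __name__ == "__main__":
    # One stripe on a hypothetical four-drive RAID 5 set: three data strips, one parity strip.
    d0, d1, d2 = b"\x0f\xa0", b"\x33\x11", b"\x5c\x07"
    parity = xor_blocks(d0, d1, d2)

    # Suppose the drive holding d1 fails: XOR the survivors with parity to rebuild it.
    rebuilt = xor_blocks(d0, d2, parity)
    print(rebuilt == d1)
```

The same property explains why RAID 5 survives exactly one failure: with two strips of a stripe missing, a single XOR equation cannot recover both.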

The DEA-1TT4 Exam will expect candidates to know the details of common RAID levels, including RAID 0, 1, 5, 6, and nested levels like RAID 1+0 (RAID 10). This includes understanding the write penalty, the number of back-end disk I/O operations generated by a single host write. It is highest for the parity-based levels because each small write must read the old data and parity, compute the new parity, and write both back: four back-end I/Os for RAID 5 and six for RAID 6, compared with two for a mirrored RAID 1 or RAID 10 set. Knowing which RAID level is appropriate for a specific application, for example RAID 10 for a high-performance database versus RAID 6 for a large-capacity archive, is a key skill tested in the examination.
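The write penalty translates directly into back-end load. A common sizing estimate is back-end IOPS = (host IOPS × read fraction) + (host IOPS × write fraction × write penalty), using the textbook penalties of 2 for mirrored levels, 4 for RAID 5, and 6 for RAID 6. The Python sketch below applies that estimate to a hypothetical 4,000 IOPS workload with 70% reads and also reports usable capacity for an eight-drive set. It ignores cache effects and full-stripe writes, which can lower the real penalty.

```python
# Back-end disk I/Os caused by one small random host write (textbook values).
WRITE_PENALTY = {"RAID 0": 1, "RAID 1": 2, "RAID 10": 2, "RAID 5": 4, "RAID 6": 6}


def backend_iops(host_iops: float, read_pct: float, raid_level: str) -> float:
    """Each read costs one disk I/O; each write costs the level's write penalty."""
    reads = host_iops * read_pct / 100
    writes = host_iops * (100 - read_pct) / 100
    return reads + writes * WRITE_PENALTY[raid_level]


def usable_drives(n_drives: int, raid_level: str) -> int:
    """Drives' worth of capacity left for data after mirroring or parity."""
    if raid_level == "RAID 0":
        return n_drives          # striping only, no redundancy
    if raid_level in ("RAID 1", "RAID 10"):
        return n_drives // 2     # every block is stored twice
    if raid_level == "RAID 5":
        return n_drives - 1      # one drive's worth of parity
    if raid_level == "RAID 6":
        return n_drives - 2      # two drives' worth of parity
    raise ValueError(f"unknown RAID level: {raid_level}")


if __name__ == "__main__":
    # Hypothetical workload: 4,000 host IOPS, 70% reads, on eight drives.
    for level in ("RAID 10", "RAID 5", "RAID 6"):
        print(f"{level}: {backend_iops(4000, 70, level):,.0f} back-end IOPS, "
              f"{usable_drives(8, level)} of 8 drives usable")
```

For that workload, RAID 10 needs 5,200 back-end IOPS, RAID 5 needs 7,600, and RAID 6 needs 10,000, while usable capacity from eight drives is 4, 7, and 6 drives respectively. This trade-off between write performance and capacity efficiency is why RAID 10 suits write-heavy databases and RAID 6 suits large archives.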

Preparing Your Mindset for the DEA-1TT4 Exam

Beyond technical knowledge, success on the DEA-1TT4 Exam requires the right preparation strategy and mindset. This is an associate-level exam, which means it is designed to test a broad range of foundational concepts rather than deep expertise in a single niche area. Therefore, your study should focus on breadth of knowledge across all the exam domains. Avoid getting bogged down in the minute details of a specific vendor's implementation and instead focus on the underlying principles and industry-standard terminology that are universally applicable.

A structured study plan is essential. Begin by reviewing the official exam objectives, which provide a detailed outline of all the topics that may be covered. Allocate your study time based on your existing familiarity with each topic. Use a combination of study materials, including official courseware, textbooks, and online resources, to gain different perspectives on the concepts. Hands-on experience, even in a lab environment, can be invaluable for solidifying your understanding of how different components interact in a real-world scenario. Don't underestimate the value of practice exams to familiarize yourself with the question format and identify areas where you need further review.

Finally, approach the DEA-1TT4 Exam with confidence. The goal of the certification is to validate that you have the necessary foundational knowledge to be effective in a storage-related role. As you progress through your studies, focus on understanding the "why" behind each technology, not just memorizing facts. Why is block-level access different from file-level access? Why would you choose iSCSI over Fibre Channel in a certain environment? This deeper level of comprehension will not only help you pass the DEA-1TT4 Exam but will also serve as a solid foundation for your entire career in information storage and management.

Navigating Storage Architectures for the DEA-1TT4 Exam

A central theme of the DEA-1TT4 Exam is the understanding of different storage architectures. The way storage is connected to and accessed by compute systems has a profound impact on performance, scalability, cost, and manageability. The three primary architectures that every candidate must master are Direct-Attached Storage (DAS), Network-Attached Storage (NAS), and Storage Area Networks (SAN). Each of these architectures was developed to solve specific challenges and has its own unique set of characteristics, benefits, and drawbacks. This part of the guide will delve into each of these architectures in detail.

Your preparation for the DEA-1TT4 Exam must include the ability to differentiate between these models clearly. It is not enough to simply know the definitions; you must understand the underlying access methods—block-level versus file-level—and the protocols used in each. For instance, knowing that DAS provides block-level access directly to a single host is the first step. The next is understanding the implications of this, such as its simplicity and high performance for a single server, but also its limitations in terms of sharing and scalability. This deeper, contextual knowledge is what the exam aims to test.

As we explore each architecture, we will consistently relate the concepts back to practical use cases and the types of questions you might encounter on the DEA-1TT4 Exam. The goal is to build a mental framework that allows you to analyze a given business or application requirement and correctly identify the most appropriate storage architecture. This analytical skill is highly valued in the industry and is a key indicator of a candidate's readiness to take on real-world storage administration challenges. Let's begin by examining the oldest and simplest of the three: Direct-Attached Storage.

Direct-Attached Storage (DAS) in Detail

Direct-Attached Storage, or DAS, refers to a storage system that is directly connected to a single computer or server and is not accessible via a network. This is the most straightforward storage architecture. The connection is typically made using protocols like Serial Attached SCSI (SAS), SATA, or even older parallel SCSI. In its simplest form, the internal hard drive of your laptop or desktop computer is a form of DAS. In a server environment, DAS can be internal drives within the server chassis or an external disk enclosure connected directly to the server's Host Bus Adapter (HBA).

The primary advantage of DAS is its simplicity and low cost. There are no complex networks to configure or manage, making it easy to deploy. Because the storage is dedicated to a single server, it can offer very high performance with low latency, as there is no network overhead to contend with. This makes DAS an excellent choice for localized, high-performance needs, such as a database running on a single server or a boot drive for an operating system. The DEA-1TT4 Exam will expect you to recognize these benefits and identify scenarios where DAS is a suitable solution.

However, the limitations of DAS are significant and are a key reason for the development of networked storage. The most prominent drawback is that storage in a DAS configuration cannot be easily shared with other servers. This leads to the creation of "islands of storage," where one server may have excess capacity while another is running out, resulting in poor resource utilization. Furthermore, managing multiple DAS systems can be complex and inefficient, as each must be managed individually. Centralized management, backup, and data protection are difficult to achieve in a DAS-only environment, a critical point to remember for the DEA-1TT4 Exam.

Understanding Network-Attached Storage (NAS)

Network-Attached Storage, or NAS, was developed to address the sharing and utilization challenges of DAS. A NAS system is a dedicated, file-serving storage device that connects to a standard Ethernet network. Unlike DAS, which is managed by the server it is attached to, a NAS device has its own lightweight operating system and is managed as an independent entity on the network. It allows multiple clients and servers to access and share the same data concurrently over the network, making it ideal for collaborative environments and centralized file storage. The DEA-1TT4 Exam requires a clear understanding of this file-sharing capability.

The key characteristic of NAS is that it provides file-level access to data. This means that the NAS system itself manages the file system and presents data to clients as complete files and folders. Clients access the data using file-based protocols such as Network File System (NFS), which is common in Linux and UNIX environments, or Common Internet File System/Server Message Block (CIFS/SMB), which is prevalent in Windows environments. This abstraction of the file system simplifies access for clients, as they do not need to manage the underlying block-level storage layout.

NAS solutions range from small, single-drive devices for home use to large, highly scalable enterprise systems capable of storing petabytes of data. They are relatively easy to install and configure, as they leverage existing Ethernet infrastructure. Common use cases include user home directories, departmental file shares, and web content repositories. For the DEA-1TT4 Exam, it is important to associate NAS with unstructured data, file-level access, and protocols like NFS and CIFS/SMB. You should also understand its architecture, which typically consists of a NAS head (the controller) and storage shelves.

The Architecture of a Storage Area Network (SAN)

A Storage Area Network, or SAN, represents a more advanced and high-performance approach to networked storage. A SAN is a dedicated, high-speed network that connects servers to a shared pool of block-level storage devices. The primary purpose of a SAN is to make storage devices appear to servers as if they were locally attached disks. This provides the performance and features of DAS with the sharing, scalability, and centralized management benefits of networked storage. This concept of providing block-level access over a network is a fundamental topic for the DEA-1TT4 Exam.

Unlike NAS, which uses a standard Ethernet network, traditional SANs utilize a specialized networking technology called Fibre Channel (FC). Fibre Channel is designed specifically for storage traffic and provides high throughput and very low latency. The SAN consists of three main components: servers with Fibre Channel HBAs, a network of Fibre Channel switches that form the "fabric," and the storage systems themselves. This dedicated network isolates storage traffic from regular LAN traffic, ensuring predictable performance for mission-critical applications. More recently, SANs can also be built using Ethernet with a protocol called iSCSI, which we will cover later.

The block-level access provided by a SAN is its defining feature. The SAN presents logical units, identified by logical unit numbers (LUNs), to the servers; each one is a chunk of raw storage capacity. The server's operating system then formats these LUNs with its own file system (e.g., NTFS for Windows, ext4 for Linux), just as it would with a local disk. This makes SAN the ideal platform for structured data and performance-intensive applications like large databases, transaction processing systems, and server virtualization environments. For the DEA-1TT4 Exam, you must clearly distinguish this block-level access model from the file-level model of NAS.
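The split between block-level and file-level access is easier to see in a toy model. In the Python sketch below, `BlockDevice` plays the role of a LUN: it only understands numbered blocks. `TinyFileSystem` is the layer that turns those blocks into named files. With a SAN, that layer runs on the server; with NAS, it runs on the NAS device itself, and clients see only file names. Both classes are hypothetical simplifications; real file systems also manage free-space maps, metadata, and journaling.

```python
BLOCK_SIZE = 512  # bytes per block; an illustrative assumption


class BlockDevice:
    """A LUN as the host sees it: numbered blocks, no notion of files."""

    def __init__(self, num_blocks: int):
        self.blocks = [bytes(BLOCK_SIZE)] * num_blocks  # zero-filled blocks

    def write_block(self, lba: int, data: bytes) -> None:
        if len(data) > BLOCK_SIZE:
            raise ValueError("data larger than one block")
        self.blocks[lba] = data.ljust(BLOCK_SIZE, b"\x00")  # pad a partial block

    def read_block(self, lba: int) -> bytes:
        return self.blocks[lba]


class TinyFileSystem:
    """Maps file names to block addresses on a BlockDevice (no free-space reuse)."""

    def __init__(self, device: BlockDevice):
        self.device = device
        self.directory = {}  # file name -> (list of block addresses, file length)
        self.next_free = 0   # naive allocator: hand out blocks in order

    def write_file(self, name: str, data: bytes) -> None:
        lbas = []
        for offset in range(0, len(data), BLOCK_SIZE):
            self.device.write_block(self.next_free, data[offset:offset + BLOCK_SIZE])
            lbas.append(self.next_free)
            self.next_free += 1
        self.directory[name] = (lbas, len(data))

    def read_file(self, name: str) -> bytes:
        lbas, length = self.directory[name]
        raw = b"".join(self.device.read_block(lba) for lba in lbas)
        return raw[:length]  # drop the padding in the last block


if __name__ == "__main__":
    lun = BlockDevice(num_blocks=16)
    fs = TinyFileSystem(lun)
    report = b"quarterly numbers" * 50  # 850 bytes, so it spans two blocks
    fs.write_file("report.txt", report)
    print(fs.read_file("report.txt") == report)  # file-level view: ask by name
    print(lun.read_block(0)[:17])                 # block-level view: raw bytes at LBA 0
```

A NAS client asks for `report.txt` by name, while a server using a SAN LUN reads block 0 and block 1 and relies on its own file system to know what they mean.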

Comparing DAS, NAS, and SAN

The ability to compare and contrast DAS, NAS, and SAN is a critical skill for the DEA-1TT4 Exam. The comparison can be broken down into several key criteria: access method, protocol, performance, scalability, and manageability. DAS provides block-level access to a single host using protocols like SAS or SATA. NAS provides file-level access to multiple clients over a standard Ethernet network using NFS or CIFS/SMB. SAN provides block-level access to multiple servers over a dedicated network, traditionally using Fibre Channel, or sometimes Ethernet with iSCSI.

In terms of performance, DAS typically offers the lowest latency for a single host. SAN is designed for high performance and low latency across multiple hosts, making it suitable for enterprise applications. NAS performance can be affected by the overhead of the file system and the contention on the shared Ethernet network, making it generally better suited for file sharing than for high-performance transactional workloads. However, modern high-end NAS systems can deliver excellent performance.

Scalability and manageability are where networked storage shines. DAS is difficult to scale and manage centrally. NAS offers good scalability and simplified, centralized management of file data. SAN provides the highest level of scalability and centralized management for block storage, allowing administrators to provision, monitor, and protect storage for hundreds of servers from a single interface. The DEA-1TT4 Exam will often present scenarios and ask you to choose the most appropriate architecture, so understanding these trade-offs is essential. A table comparing these attributes is a useful study aid.

Use Cases and Scenarios for Each Architecture

Applying theoretical knowledge to practical scenarios is a key component of the DEA-1TT4 Exam. Let's consider some common use cases for each storage architecture. First, suppose a small business needs a simple, low-cost solution to hold the boot volume and application data for a single, non-critical application server. In this case, DAS is an ideal fit due to its simplicity, low cost, and dedicated performance. There is no need for the complexity and expense of networked storage when data sharing is not a requirement.

Now, imagine a marketing department with 50 employees who need to collaborate on large graphic design files, presentations, and videos. They need a central repository that is easily accessible from both their Windows and Mac workstations. This is a classic use case for NAS. The file-level access, support for both CIFS/SMB and NFS protocols, and ease of deployment on the existing office Ethernet network make NAS the perfect solution for this collaborative, unstructured data environment.

Finally, consider a large enterprise that is deploying a virtual server farm with 200 virtual machines running a mix of business-critical applications, including a large customer relationship management (CRM) database. This environment demands high performance, low latency, high availability, and the ability to seamlessly migrate virtual machines between physical servers. This scenario is the primary domain of a SAN. The shared block-level storage provided by the SAN allows all physical hosts to access the same datastores, which is a prerequisite for advanced virtualization features like live migration and high-availability clustering. The DEA-1TT4 Exam will test your ability to perform this kind of analysis.
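The three scenarios follow a pattern that can be written down as a rule of thumb. The Python sketch below encodes it with two questions: does more than one host need the data, and does the application expect files or raw block volumes? This is a deliberately simplified study aid, not a sizing tool; real decisions also weigh cost, performance targets, existing infrastructure, and availability requirements. `Requirements` and `suggest_architecture` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    shared: bool  # must more than one host access the same data?
    access: str   # "file" for named files and folders, "block" for raw volumes


def suggest_architecture(req: Requirements) -> str:
    """Rule of thumb from the DAS/NAS/SAN comparison (a study aid, not a design tool)."""
    if req.access not in ("file", "block"):
        raise ValueError("access must be 'file' or 'block'")
    if not req.shared:
        return "DAS"  # one server, no sharing: simplest and cheapest
    if req.access == "file":
        return "NAS"  # shared files over Ethernet via NFS or CIFS/SMB
    return "SAN"      # shared block storage, e.g. clustered or virtualized hosts


if __name__ == "__main__":
    scenarios = {
        "single application server": Requirements(shared=False, access="block"),
        "marketing department file shares": Requirements(shared=True, access="file"),
        "virtual server farm": Requirements(shared=True, access="block"),
    }
    for name, req in scenarios.items():
        print(f"{name}: {suggest_architecture(req)}")
```

Running it maps the small business, the marketing department, and the virtual server farm to DAS, NAS, and SAN respectively, matching the analysis of each scenario.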

Fibre Channel (FC) and iSCSI SANs

While we have established that SANs provide block-level access over a network, the DEA-1TT4 Exam requires a more detailed understanding of the protocols used to achieve this. The two dominant SAN protocols are Fibre Channel (FC) and Internet Small Computer System Interface (iSCSI). Fibre Channel is a purpose-built, high-speed networking protocol designed specifically for storage. It is known for its high performance, reliability, and lossless nature: credit-based flow control lets a port transmit a frame only when the receiving port has buffer space for it, so frames are not dropped because of congestion. An FC SAN requires specialized hardware, including Fibre Channel HBAs in the servers and Fibre Channel switches for the network fabric.

iSCSI, on the other hand, is a protocol that allows SCSI commands to be encapsulated within standard TCP/IP packets and transported over an Ethernet network. This allows organizations to build a SAN using their existing Ethernet infrastructure, including standard network interface cards (NICs) and Ethernet switches. This can significantly reduce the cost and complexity of deploying a SAN, making it an attractive option for small and medium-sized businesses. While early iSCSI deployments, typically on 1 Gigabit Ethernet, could not match the performance of Fibre Channel, modern 10, 25, and even 100 Gigabit Ethernet networks have narrowed the performance gap considerably.

The DEA-1TT4 Exam will expect you to know the key characteristics of both protocols. You should associate Fibre Channel with high performance, reliability, and specialized hardware. You should associate iSCSI with cost-effectiveness, ease of use, and leveraging existing Ethernet infrastructure. It is also important to understand the concept of a converged network, where a single Ethernet infrastructure can be used to carry both traditional LAN traffic and iSCSI storage traffic, often using technologies like VLANs to keep the traffic logically separated.

How the DEA-1TT4 Exam Tests Architectural Knowledge

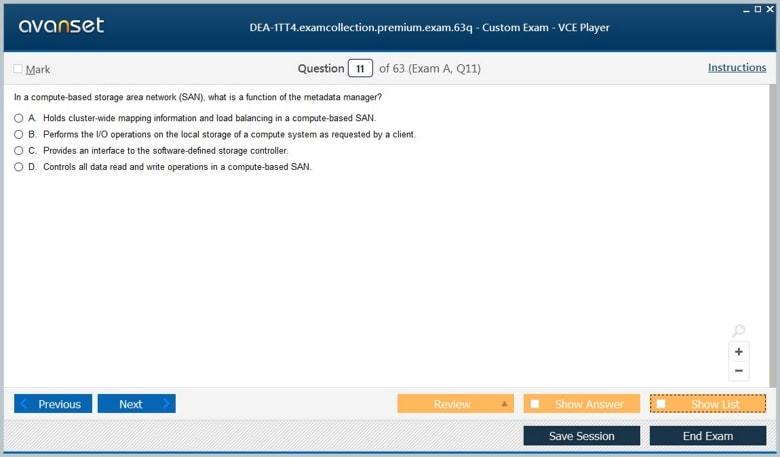

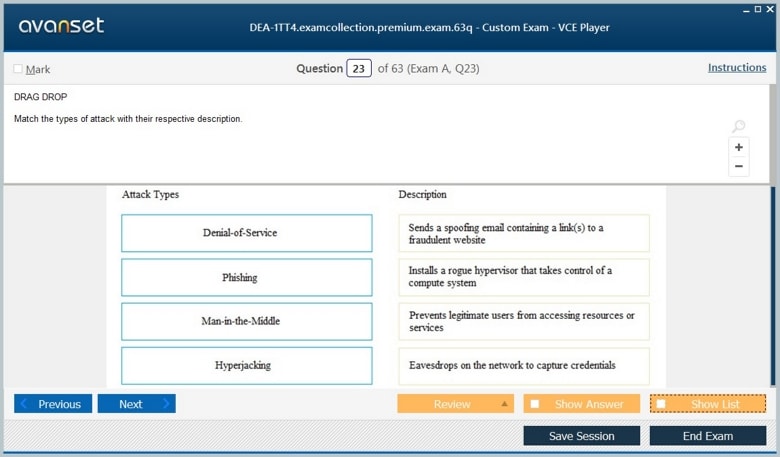

The DEA-1TT4 Exam employs various question formats to assess your understanding of storage architectures. You may encounter multiple-choice questions that ask you to identify the defining characteristic of a specific architecture, such as "Which of the following provides file-level access to data over an Ethernet network?" You might also see scenario-based questions that describe a business problem and ask you to select the most appropriate storage solution from a list of options. These questions test your ability to apply your knowledge.

Another common type of question involves comparing and contrasting the architectures. For example, a question might ask you to identify a key advantage of SAN over NAS for a database application, with the correct answer likely revolving around the performance benefits of block-level access. You may also be asked to identify the correct protocols associated with each architecture (e.g., SAS for DAS, NFS/CIFS for NAS, FC/iSCSI for SAN).

To prepare effectively, you should not just memorize the facts but create a mental model of how each system works. Visualize the data path from the application to the storage for each architecture. Draw diagrams that show the components and how they are interconnected. This deeper level of understanding will enable you to answer not only direct definitional questions but also the more complex analytical questions that are a hallmark of a well-designed certification exam like the DEA-1TT4 Exam. This solid architectural foundation is essential as we move into more advanced topics in the subsequent parts of this guide.

The Role of Networking in the DEA-1TT4 Exam

Having established the foundational storage architectures in the previous part, we now turn our attention to the critical element that enables networked storage: the connectivity. For the DEA-1TT4 Exam, a detailed understanding of the networking technologies and protocols that underpin Storage Area Networks (SANs) is not just beneficial, it is essential. This domain goes beyond simply knowing the names of the protocols; it requires a grasp of how they function, the components they use, and the infrastructure they require. This part will focus on the three key SAN connectivity options: Fibre Channel (FC), iSCSI, and Fibre Channel over Ethernet (FCoE).

The way data is transported between servers and storage has a direct impact on the performance, reliability, and scalability of the entire IT infrastructure. The DEA-1TT4 Exam will test your knowledge of the layers of the protocol stacks, the specialized hardware involved, and the logical constructs used to manage and secure the storage network. For example, understanding the difference between a Fibre Channel Host Bus Adapter (HBA) and a standard Ethernet Network Interface Card (NIC) is fundamental. Similarly, knowing what zoning is and why it is used in an FC SAN is a key piece of knowledge.

As we dissect each protocol, we will highlight the specific concepts that are most likely to appear on the DEA-1TT4 Exam. We will explore the physical and logical components, the addressing schemes, and the key services that make these networks function. This in-depth look at connectivity will provide you with the confidence to tackle some of the more technical questions on the exam and will build upon the architectural knowledge we have already established, preparing you for a comprehensive understanding of modern storage systems.

Fibre Channel (FC) Protocol Stack

Fibre Channel is a high-speed serial transport protocol. Through its SCSI mapping, the Fibre Channel Protocol (FCP), it became the de facto standard for carrying block I/O in high-performance enterprise SANs for many years. To properly prepare for the DEA-1TT4 Exam, you must be familiar with the Fibre Channel protocol stack. The stack is organized into five layers, designated FC-0 through FC-4. This layered model is analogous to the OSI model for data networking, with each layer performing a specific function.

The FC-0 layer is the physical layer. It defines the physical interface, including the cables (copper or fiber optic), connectors, and signaling standards, and it is responsible for transmitting the raw bits across the physical medium. The FC-1 layer is the transmission protocol layer, which encodes and decodes data for transmission. In Fibre Channel generations up to 8GFC, it converts each 8-bit byte from the upper layers into a 10-bit transmission character. This process is known as 8b/10b encoding. It keeps the signal DC-balanced, supports clock recovery, and helps detect transmission errors. Starting with 16GFC, Fibre Channel moved to more efficient encodings such as 64b/66b.
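The price of 8b/10b encoding is easy to quantify: two of every ten transmitted bits carry no user data. The short Python sketch below computes that overhead, compares it with 64b/66b, and derives the usable data rate of a link. It assumes 8GFC's nominal line rate of 8.5 GBd. The function names are illustrative only.

```python
def payload_rate_gbps(line_rate_gbaud: float, data_bits: int, code_bits: int) -> float:
    """Data rate left after line encoding.

    Each group of `data_bits` payload bits is sent as `code_bits` line bits,
    so only data_bits / code_bits of the signalling rate carries data.
    """
    return line_rate_gbaud * data_bits / code_bits


def encoding_overhead(data_bits: int, code_bits: int) -> float:
    """Fraction of transmitted bits that are encoding overhead."""
    return (code_bits - data_bits) / code_bits


# 8b/10b: every 8-bit byte becomes a 10-bit transmission character.
print(f"8b/10b overhead:  {encoding_overhead(8, 10):.1%}")   # 20.0%
# 64b/66b: every 64-bit block gets a 2-bit sync header.
print(f"64b/66b overhead: {encoding_overhead(64, 66):.1%}")  # 3.0%

# Assumed 8GFC line rate of 8.5 GBd with 8b/10b encoding.
print(f"8GFC payload: {payload_rate_gbps(8.5, 8, 10):.2f} Gb/s")  # 6.80 Gb/s
```

This arithmetic explains why the newer encodings mattered. With 8b/10b, a fifth of the raw signalling rate is spent on encoding.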

The FC-2 layer is the framing and flow control layer, and it is one of the most important layers for the DEA-1TT4 Exam. It defines how data is packaged into frames, which are grouped into sequences and exchanges. It regulates the flow of frames between ports with a credit-based scheme, which prevents buffer overruns. It also tracks the order in which frames are delivered. The FC-3 layer is intended for common services, but it is not widely implemented and is less critical for the exam. Finally, the FC-4 layer, the protocol mapping layer, is where upper-level protocols like SCSI are mapped into Fibre Channel frames for transport across the SAN fabric.
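To make FC-2 framing concrete, the following Python sketch splits one block of upper-layer data into an ordered sequence of frames. Each frame is numbered, and the sketch adds up the bytes each frame occupies on the wire. It assumes the standard maximum frame layout: a 4-byte start-of-frame delimiter, a 24-byte header, up to 2112 data bytes, a 4-byte CRC and a 4-byte end-of-frame delimiter. In a real fabric, the usable data field size is negotiated at login. The `Frame` type and `build_sequence` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Field sizes in bytes for a Fibre Channel frame.
SOF_LEN = 4          # start-of-frame delimiter
HEADER_LEN = 24      # frame header (addresses, type, sequence information)
MAX_DATA_LEN = 2112  # maximum data field
CRC_LEN = 4
EOF_LEN = 4          # end-of-frame delimiter
FRAME_OVERHEAD = SOF_LEN + HEADER_LEN + CRC_LEN + EOF_LEN  # 36 bytes


@dataclass
class Frame:
    seq_cnt: int    # position of the frame within its sequence
    payload: bytes

    @property
    def wire_bytes(self) -> int:
        return FRAME_OVERHEAD + len(self.payload)


def build_sequence(data: bytes, max_data: int = MAX_DATA_LEN) -> List[Frame]:
    """Split one block of upper-layer data into an ordered sequence of frames."""
    return [
        Frame(seq_cnt=i, payload=data[off:off + max_data])
        for i, off in enumerate(range(0, len(data), max_data))
    ]


frames = build_sequence(bytes(5000))
print([len(f.payload) for f in frames])   # [2112, 2112, 776]
print(sum(f.wire_bytes for f in frames))  # 5108
```

The sequence count lets the receiver verify that frames arrived in order. It also shows that a 5000-byte transfer costs 108 bytes of framing overhead.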

Components of a Fibre Channel SAN

A functioning Fibre Channel SAN is composed of several specialized hardware components that a candidate for the DEA-1TT4 Exam must be able to identify and describe. The first component is the Host Bus Adapter (HBA), a card installed in the server that provides the physical connection to the FC network. Each HBA port has a unique 64-bit identifier called a World Wide Port Name (WWPN). The adapter as a whole has a World Wide Node Name (WWNN). These World Wide Names (WWNs) are similar in concept to MAC addresses in an Ethernet network. The WWPN identifies the server's port on the SAN.

The second key component is the Fibre Channel switch. Similar to an Ethernet switch, an FC switch is a device with multiple ports that connects the servers and the storage arrays. Switches are the building blocks of the SAN fabric. When a device logs in to the fabric, it is assigned a 24-bit Fibre Channel address (FCID), and switches forward frames based on these FCIDs. In other words, WWNs identify devices, while FCIDs are used to deliver frames. Multiple switches can be interconnected to create a larger, more resilient fabric. The software running on these switches provides critical fabric services, such as name services that allow devices to discover each other.
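In a Fibre Channel fabric, WWNs identify ports. The fabric also assigns each logged-in port a 24-bit address (FCID), which is what frames are actually delivered to. The Python sketch below shows both formats side by side. It formats a 64-bit WWN in the usual colon-separated hex form and splits an FCID into its Domain, Area and Port bytes, where the Domain byte identifies the switch. The address values are hypothetical.

```python
from typing import Tuple


def format_wwn(wwn: int) -> str:
    """Render a 64-bit World Wide Name as colon-separated hex bytes."""
    if not 0 <= wwn < 1 << 64:
        raise ValueError("a WWN is a 64-bit value")
    return ":".join(f"{b:02x}" for b in wwn.to_bytes(8, "big"))


def split_fcid(fcid: int) -> Tuple[int, int, int]:
    """Split a 24-bit FCID into its Domain, Area and Port bytes."""
    if not 0 <= fcid < 1 << 24:
        raise ValueError("an FCID is a 24-bit value")
    return (fcid >> 16) & 0xFF, (fcid >> 8) & 0xFF, fcid & 0xFF


print(format_wwn(0x10000000C9ABCDEF))  # 10:00:00:00:c9:ab:cd:ef
print(split_fcid(0x010200))            # (1, 2, 0)
```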

The third component is the storage array itself, equipped with front-end ports that are Fibre Channel enabled. These ports connect the storage controllers to the SAN fabric, allowing them to receive I/O requests from the servers. Finally, the physical infrastructure, including fiber optic cabling, connects all these components. The DEA-1TT4 Exam will test your ability to recognize these components and understand their roles within the overall architecture of a Fibre Channel SAN. Understanding how they interact is key to grasping SAN concepts.

Understanding iSCSI and IP SAN

While Fibre Channel has long been the leader in high-performance SANs, iSCSI has emerged as a powerful and cost-effective alternative. The DEA-1TT4 Exam requires a thorough understanding of how iSCSI works and where it fits in the storage landscape. The iSCSI protocol, which stands for Internet Small Computer System Interface, enables the transport of SCSI block-level I/O over standard TCP/IP networks. This means that organizations can build a SAN using the same Ethernet infrastructure they use for their local area network (LAN).

In an iSCSI environment, the terminology is different from Fibre Channel. The server, which sends the I/O requests, is called the iSCSI initiator. The storage system, which responds to these requests, is called the iSCSI target. The initiator can be a software initiator, which is a driver that uses a standard Ethernet NIC to handle the iSCSI traffic, or a hardware initiator, which is a specialized iSCSI HBA that offloads the TCP/IP and iSCSI processing from the server's CPU, improving performance.

The iSCSI target presents one or more LUNs to the initiators. Communication between the initiator and target is established through a login process, and data is transferred in the form of iSCSI protocol data units (PDUs). Because iSCSI runs on top of TCP/IP, it can be routed across different networks, though it is most commonly used within a single data center LAN. For the DEA-1TT4 Exam, you must understand the initiator-target model, the difference between software and hardware initiators, and the fundamental advantage of iSCSI: leveraging ubiquitous and cost-effective Ethernet technology.
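Initiators and targets are identified by iSCSI names. The most common form is the iSCSI Qualified Name (IQN), for example `iqn.2001-04.com.example:storage.disk2.sys1.xyz`. The Python sketch below is a rough syntax check for the `iqn.` and `eui.` name formats described in RFC 3720. It is a simplification: it does not apply the full normalization rules of RFC 3722, and the function name is illustrative.

```python
import re

# iqn.<yyyy-mm>.<reversed domain name>[:<string chosen by the naming authority>]
IQN_PATTERN = re.compile(
    r"iqn\.\d{4}-(0[1-9]|1[0-2])\.[a-z0-9]+(?:[.-][a-z0-9]+)*(?::.+)?"
)
# eui.<16 hex digits>, an IEEE EUI-64 identifier
EUI_PATTERN = re.compile(r"eui\.[0-9a-f]{16}")


def looks_like_iscsi_name(name: str) -> bool:
    """Rough syntax check for iqn./eui. names (not a full RFC 3722 check)."""
    name = name.lower()  # iSCSI names are normalized to lowercase
    return bool(IQN_PATTERN.fullmatch(name) or EUI_PATTERN.fullmatch(name))


print(looks_like_iscsi_name("iqn.2001-04.com.example:storage.disk2.sys1.xyz"))  # True
print(looks_like_iscsi_name("server01"))                                       # False
```

A check like this catches a misspelled or malformed initiator or target name before a login attempt fails.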

Fibre Channel over Ethernet (FCoE) Explained

Fibre Channel over Ethernet (FCoE) is a converged network protocol that was developed to combine the benefits of Fibre Channel with the ubiquity of Ethernet. It encapsulates Fibre Channel frames directly into Ethernet frames, allowing both LAN traffic and SAN traffic to run over a single, unified 10 Gigabit Ethernet (or faster) network infrastructure. This convergence promises to reduce the number of adapters, cables, and switches required in a data center, thereby lowering costs and simplifying management. The DEA-1TT4 Exam will expect you to understand the purpose and basic mechanics of FCoE.

FCoE is not the same as iSCSI. Both use Ethernet as the transport, but iSCSI encapsulates SCSI commands in TCP/IP, so its traffic can be routed across IP networks. FCoE, on the other hand, encapsulates the entire Fibre Channel frame directly in an Ethernet frame at Layer 2, with no IP header, so it cannot be routed across IP networks. It requires a special type of Ethernet network known as a Data Center Bridging (DCB) or Converged Enhanced Ethernet (CEE) network. DCB adds several enhancements to standard Ethernet, notably Priority-based Flow Control (PFC), to make it lossless. Lossless transport is a requirement for Fibre Channel traffic.
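The Layer 2 nature of FCoE shows up in the Ethernet header itself. The Python sketch below packs a basic Ethernet II header whose EtherType is 0x8906, the value registered for FCoE. The FCoE Initialization Protocol (FIP) uses 0x8914. No IP header follows, which is why IP routers cannot forward this traffic. The MAC addresses are hypothetical, and the 1500-byte figure is the standard Ethernet MTU. The final line illustrates why a full-size FC frame also requires the larger frames that DCB networks are configured to carry.

```python
import struct

ETHERTYPE_FCOE = 0x8906  # Ethernet frames carrying encapsulated FC frames
ETHERTYPE_FIP = 0x8914   # FCoE Initialization Protocol (discovery and login)

FC_MAX_FRAME = 2148      # largest FC frame: 36 bytes of framing + 2112 data bytes
STANDARD_MTU = 1500      # default Ethernet payload size


def ethernet_header(dst_mac: bytes, src_mac: bytes, ethertype: int) -> bytes:
    """Pack a 14-byte Ethernet II header (destination, source, EtherType)."""
    if len(dst_mac) != 6 or len(src_mac) != 6:
        raise ValueError("MAC addresses must be 6 bytes")
    return struct.pack("!6s6sH", dst_mac, src_mac, ethertype)


# Hypothetical locally administered MAC addresses.
hdr = ethernet_header(bytes.fromhex("020000000001"),
                      bytes.fromhex("020000000002"),
                      ETHERTYPE_FCOE)
print(hdr.hex())                    # 0200000000010200000000028906
print(FC_MAX_FRAME > STANDARD_MTU)  # True: a full FC frame does not fit in 1500 bytes
```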

The key components in an FCoE environment are Converged Network Adapters (CNAs) in the servers and FCoE-capable switches. A CNA is a single adapter that can function as both a standard Ethernet NIC and a Fibre Channel HBA, presenting two different interfaces to the operating system. FCoE switches are capable of handling both standard Ethernet traffic and FCoE traffic. While FCoE has not seen the widespread adoption that was once predicted, it is still an important technology to understand for the DEA-1TT4 Exam, particularly its role in data center convergence.

Comparing FC, iSCSI, and FCoE

A crucial skill for the DEA-1TT4 Exam is the ability to compare and contrast the three major SAN protocols: Fibre Channel, iSCSI, and FCoE. The comparison can be framed around cost, performance, complexity, and required infrastructure. Fibre Channel is the highest-performing and most reliable option, but it is also the most expensive and complex, requiring a completely separate, dedicated network with specialized hardware and skill sets. It is the traditional choice for mission-critical, Tier 1 applications where performance and reliability are non-negotiable.

iSCSI is the most cost-effective and easiest to deploy, as it leverages existing Ethernet infrastructure and knowledge. Its performance has become highly competitive with the advent of faster Ethernet standards, making it an excellent choice for small-to-medium businesses and for Tier 2 or Tier 3 applications in larger enterprises. The trade-off is that it relies on the TCP/IP stack, which can introduce slightly more latency and CPU overhead on the hosts compared to a dedicated FC HBA.

FCoE sits in the middle. It aims to provide the performance and reliability characteristics of Fibre Channel while leveraging the convergence benefits of Ethernet. However, it requires a more advanced and expensive type of Ethernet network (DCB/CEE), and its adoption has been limited. For the DEA-1TT4 Exam, you should be able to articulate these trade-offs clearly. A question might ask you to choose the best protocol for a company with a limited budget and existing Ethernet expertise (iSCSI) or for a large financial institution's core trading platform (Fibre Channel).
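One way to memorize these trade-offs is to record them as structured data. The Python sketch below encodes only the characteristics described in this section: transport, IP routability, whether ordinary Ethernet suffices, and the host adapter. It then answers the "existing Ethernet only" style of question with a simple filter. The class and field names are illustrative.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SanProtocol:
    name: str
    encapsulation: str
    ip_routable: bool                # can traffic cross IP routers natively?
    runs_on_standard_ethernet: bool  # usable without a dedicated FC or DCB network?
    host_adapter: str


PROTOCOLS = [
    SanProtocol("Fibre Channel", "native FC frames on a dedicated fabric",
                False, False, "FC HBA"),
    SanProtocol("iSCSI", "SCSI commands in TCP/IP",
                True, True, "NIC with software initiator, or iSCSI HBA"),
    SanProtocol("FCoE", "FC frames in Layer 2 Ethernet frames (DCB/CEE)",
                False, False, "Converged Network Adapter (CNA)"),
]


def fits_existing_ethernet(protocols: List[SanProtocol]) -> List[str]:
    """Names of protocols that can run on an ordinary, non-DCB Ethernet network."""
    return [p.name for p in protocols if p.runs_on_standard_ethernet]


print(fits_existing_ethernet(PROTOCOLS))  # ['iSCSI']
```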

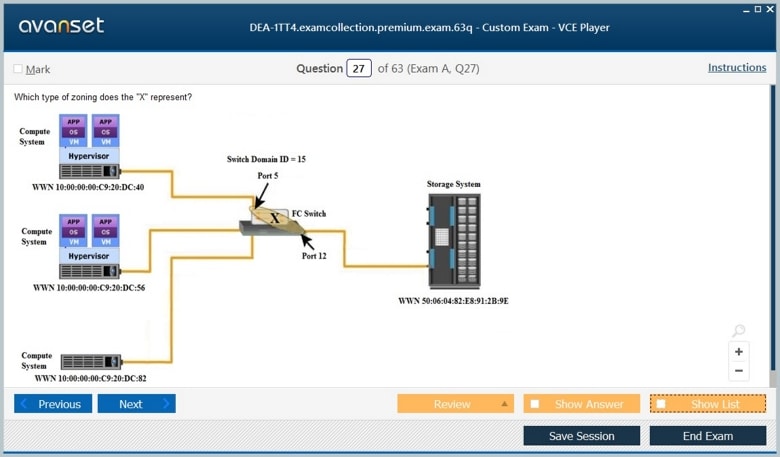

SAN Topologies and Fabric Services

The way in which SAN components are connected is referred to as the topology. The most common topology for modern FC and FCoE SANs is the switched fabric topology. In this model, all devices (servers and storage) connect to switches, which handle the routing of traffic. This provides high scalability, performance, and redundancy. A key concept for the DEA-1TT4 Exam is the idea of the SAN fabric. The fabric is the entire collection of interconnected switches that form the intelligent network. The fabric provides several essential services.

One of the most important fabric services is the Name Server. When a device connects to the fabric, it registers its WWN and other attributes with the Name Server. Other devices can then query the Name Server to discover the addresses of the devices they need to communicate with, which enables dynamic discovery within the SAN. Another critical mechanism is flow control. With buffer-to-buffer credits, a port sends only as many frames as the receiving port has buffers available. As a result, a sender cannot overwhelm a receiver, and frames are not lost.
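The register-then-query pattern of the Name Server can be sketched as a small lookup service. In the toy Python model below, ports register their WWPN, FCID, FC-4 type and role. Other ports can then ask for, say, all SCSI-capable targets, loosely mirroring the kind of query the real Name Server answers. The class, the `role` attribute and all addresses are hypothetical. A real fabric also filters Name Server responses by zoning, which this sketch omits.

```python
from typing import Dict, List, Optional, Tuple


class NameServer:
    """Toy fabric Name Server: ports register at login, other ports query it."""

    def __init__(self) -> None:
        self._entries: Dict[str, dict] = {}

    def register(self, wwpn: str, fcid: int, fc4_type: str, role: str) -> None:
        # Record how to reach the port (FCID) and which upper-level protocol it speaks.
        self._entries[wwpn] = {"fcid": fcid, "fc4_type": fc4_type, "role": role}

    def query(self, fc4_type: str, role: Optional[str] = None) -> List[Tuple[str, int]]:
        """Return (WWPN, FCID) pairs for registered ports matching the criteria."""
        return sorted(
            (wwpn, entry["fcid"])
            for wwpn, entry in self._entries.items()
            if entry["fc4_type"] == fc4_type and (role is None or entry["role"] == role)
        )


ns = NameServer()
ns.register("10:00:00:00:c9:00:00:01", 0x010100, "SCSI-FCP", "initiator")
ns.register("50:06:01:60:00:00:00:01", 0x020100, "SCSI-FCP", "target")
print(ns.query("SCSI-FCP", role="target"))
```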

To manage and secure the SAN, logical constructs are used. Zoning is a fabric service that controls which devices can see and communicate with each other. It is a security mechanism that partitions the fabric into logical groups of devices. For example, a zone might contain only a specific application server's HBA port and the storage array port that server is allowed to reach. This prevents unauthorized servers from accessing that storage port. LUN masking, implemented on the storage array itself, adds a finer-grained layer of security by controlling which LUNs are presented to each host WWPN. Understanding zoning and LUN masking is absolutely critical for the DEA-1TT4 Exam.
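Zoning and LUN masking act as two independent gates, and a host reaches a LUN only if both allow it. The Python sketch below models that logic. Zones are sets of WWPNs enforced by the fabric. The masking table, enforced by the array, maps an (array port, host) pair to the LUNs presented to that host. All WWPNs and names are hypothetical.

```python
from typing import Dict, Set, Tuple

HOST_DB01 = "10:00:00:00:c9:00:00:01"   # hypothetical database server HBA port
HOST_WEB01 = "10:00:00:00:c9:00:00:02"  # hypothetical server, not zoned to the array
ARRAY_SPA0 = "50:06:01:60:00:00:00:01"  # hypothetical array front-end port

# Fabric side: each zone is a set of WWPNs that may communicate.
ZONES: Dict[str, Set[str]] = {
    "z_db01_spa0": {HOST_DB01, ARRAY_SPA0},
}

# Array side: LUN masking, keyed by (array port, host WWPN).
LUN_MASKS: Dict[Tuple[str, str], Set[int]] = {
    (ARRAY_SPA0, HOST_DB01): {0, 1},
}


def zoned_together(a: str, b: str) -> bool:
    """True if at least one zone contains both ports."""
    return any(a in members and b in members for members in ZONES.values())


def can_access(host: str, array_port: str, lun: int) -> bool:
    # Zoning (fabric) decides whether the two ports can talk at all.
    if not zoned_together(host, array_port):
        return False
    # LUN masking (array) decides which LUNs behind the port are presented.
    return lun in LUN_MASKS.get((array_port, host), set())


print(can_access(HOST_DB01, ARRAY_SPA0, 0))   # True
print(can_access(HOST_DB01, ARRAY_SPA0, 5))   # False: LUN is masked
print(can_access(HOST_WEB01, ARRAY_SPA0, 0))  # False: host is not zoned
```

The same two checks are also a useful mental checklist when a server cannot see its LUNs.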

Troubleshooting Common Connectivity Issues

While the DEA-1TT4 Exam is not a deep-dive troubleshooting exam, it does expect a foundational understanding of what can go wrong in a SAN environment. Connectivity problems are a common source of issues. These can be physical layer problems, such as a faulty cable, a bad Small Form-factor Pluggable (SFP) transceiver, or a port failure on a switch or HBA. Symptoms might include a complete loss of connectivity to storage or intermittent performance problems. Basic troubleshooting would involve checking physical connections, link lights, and error counters on switch ports.

Configuration errors are another frequent cause of problems. For example, in an FC SAN, incorrect zoning is a very common issue. If a server is not placed in the correct zone with its intended storage target, it will be unable to see its LUNs. Similarly, if the LUN masking on the storage array is not configured correctly to present the LUNs to the server's WWN, the server will not be able to access its storage. On the iSCSI side, common issues include incorrect IP addresses, firewall rules blocking the iSCSI port (TCP 3260), or misconfigured initiator or target names.
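A first step when an iSCSI initiator cannot reach its target is to confirm that TCP port 3260 is reachable at all. The Python sketch below uses the standard `socket.create_connection` call for that check. The helper name is hypothetical. A successful connection only shows that the port is open; it says nothing about whether the iSCSI login, names or authentication are correct.

```python
import socket

ISCSI_PORT = 3260  # default iSCSI target port (TCP)


def tcp_port_reachable(host: str, port: int = ISCSI_PORT, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened within `timeout`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, or name resolution failed
        return False
```

For example, `tcp_port_reachable("192.0.2.10")` (a documentation-range address standing in for a target portal) returns `False` if a firewall rule drops or rejects port 3260.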

Performance issues can also be considered a form of connectivity problem. A slow or congested network link can severely impact application performance. This could be caused by an oversubscribed inter-switch link (ISL) in an FC SAN or by contention with other traffic on a shared Ethernet network in an iSCSI environment. Having a basic understanding of these common problem areas will help you reason through scenario-based questions on the DEA-1TT4 Exam that may touch upon operational aspects of SAN management.
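Oversubscription of an ISL is a simple ratio. It compares the total bandwidth of the host ports that could send traffic across the link with the bandwidth of the ISLs themselves. The Python sketch below computes that ratio for a hypothetical edge switch. It deliberately does not say which ratio is acceptable, because that depends on how busy the hosts actually are.

```python
def oversubscription_ratio(host_ports: int, host_gbps: float,
                           isl_count: int, isl_gbps: float) -> float:
    """Potential host demand divided by ISL capacity (3.0 means 3:1)."""
    isl_capacity = isl_count * isl_gbps
    if isl_capacity <= 0:
        raise ValueError("ISL capacity must be positive")
    return (host_ports * host_gbps) / isl_capacity


# Hypothetical edge switch: 24 hosts at 16 Gb/s share 4 ISLs at 32 Gb/s.
print(f"{oversubscription_ratio(24, 16, 4, 32):.1f}:1")  # 3.0:1
```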

The Shift to Modern Storage: A DEA-1TT4 Exam Perspective

The world of information storage is in a constant state of evolution. While the foundational architectures and protocols we have discussed are still critically important, the DEA-1TT4 Exam also reflects the industry's shift towards more agile, flexible, and software-driven solutions. This part of our guide focuses on these modern storage concepts, including virtualization, software-defined storage, cloud computing, and new storage media like flash. A strong grasp of these topics is essential for demonstrating up-to-date knowledge and for success on the exam.

The driving forces behind this modernization are the demands of modern applications and the need for greater operational efficiency. Businesses require IT infrastructure that can be provisioned rapidly, scaled on demand, and managed with a high degree of automation. Traditional storage silos are being replaced by pooled resources that are managed through software. The DEA-1TT4 Exam will test your understanding of how these new paradigms address the limitations of older models and the business benefits they provide.

As we explore each of these modern concepts, we will focus on the core principles and terminology that you are likely to encounter. We will explain how these technologies abstract complexity, improve resource utilization, and enable new IT service delivery models. This knowledge not only prepares you for the DEA-1TT4 Exam but also equips you with the vocabulary and understanding needed to navigate the contemporary IT landscape, where lines between on-premises and cloud, and between hardware and software, are increasingly blurred.

Storage Virtualization Explained

Storage virtualization is a key enabling technology for the modern data center and a significant topic on the DEA-1TT4 Exam. At its core, virtualization is the process of abstracting logical resources from the underlying physical resources. In the context of storage, it means creating a pool of storage from one or more physical storage arrays and presenting it to servers as a unified, centrally managed resource. This breaks the tight coupling between a server and a specific physical disk or array, providing immense flexibility and simplifying management.

Virtualization can occur at different places in the infrastructure. It can be host-based, where software on the server aggregates and manages storage. It can be network-based, where a specialized appliance or switch in the SAN performs the virtualization. Most commonly today, it is array-based, where the intelligence within the storage controller itself abstracts the back-end physical disks and presents virtual volumes, or LUNs, to the hosts. The DEA-1TT4 Exam will expect you to understand these different forms of virtualization.

The benefits of storage virtualization are numerous. It allows for non-disruptive data migration, meaning data can be moved from an old array to a new one without taking applications offline. It enables advanced features like thin provisioning, where storage capacity is allocated from the pool only as it is actually written by the application, improving capacity efficiency. It also provides a single point of management for diverse storage assets. Understanding these benefits and the problems they solve is crucial for answering scenario-based questions on the DEA-1TT4 Exam.
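Thin provisioning is easiest to grasp by watching the numbers diverge. The toy Python model below advertises the full size of each volume to hosts but consumes physical blocks from the shared pool only on the first write to a block. It also shows the operational risk: if the promised capacity exceeds the physical pool, writes can eventually fail. The class and block counts are hypothetical.

```python
from typing import Dict, Set


class ThinPool:
    """Toy thin-provisioned pool: physical blocks are consumed only on first write."""

    def __init__(self, physical_blocks: int) -> None:
        self.physical_blocks = physical_blocks
        self.advertised: Dict[str, int] = {}   # volume -> size presented to the host
        self.written: Dict[str, Set[int]] = {}  # volume -> blocks actually allocated

    def create_volume(self, name: str, size_blocks: int) -> None:
        # Creating a thin volume reserves no physical capacity.
        self.advertised[name] = size_blocks
        self.written[name] = set()

    def write(self, name: str, block: int) -> None:
        if not 0 <= block < self.advertised[name]:
            raise IndexError("block is outside the volume")
        if block in self.written[name]:
            return  # overwrite: already allocated, no new capacity needed
        if self.used_blocks() >= self.physical_blocks:
            raise RuntimeError("thin pool exhausted")
        self.written[name].add(block)

    def used_blocks(self) -> int:
        return sum(len(blocks) for blocks in self.written.values())

    def subscribed_blocks(self) -> int:
        return sum(self.advertised.values())


pool = ThinPool(physical_blocks=100)
pool.create_volume("vol_a", 80)
pool.create_volume("vol_b", 80)  # 160 blocks promised from 100 physical
for blk in range(10):
    pool.write("vol_a", blk)
pool.write("vol_a", 0)           # rewriting a block does not consume more space
print(pool.subscribed_blocks(), pool.used_blocks())  # 160 10
```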

Exploring Software-Defined Storage (SDS)

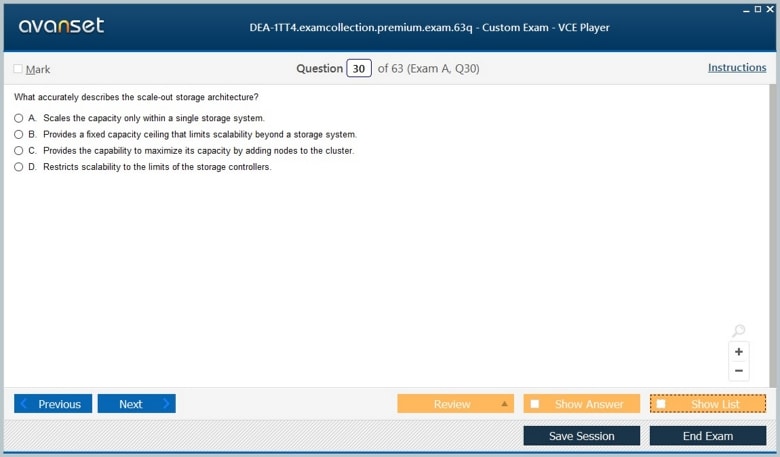

Software-Defined Storage (SDS) is an evolution of storage virtualization and a central pillar of the software-defined data center (SDDC). The core principle of SDS is the separation of the storage control plane from the data plane. The data plane is responsible for the actual storing and retrieving of data and typically runs on commodity, off-the-shelf server hardware. The control plane is the software intelligence that manages and provisions the storage, providing services like data protection, replication, and deduplication. This software-based control is the defining characteristic of SDS.

This separation provides tremendous flexibility and helps to avoid vendor lock-in. With SDS, an organization can build a scalable storage system using hardware from multiple different vendors, all managed under a single software control plane. The storage services are delivered by the software, not by the proprietary hardware of a traditional storage array. This approach allows for rapid innovation, as new features can be added by simply updating the software. The DEA-1TT4 Exam requires you to grasp this fundamental architectural shift away from monolithic, hardware-centric systems.

Key attributes of an SDS solution include automation through policy-based management, a standardized management interface (often via APIs), and the ability to scale out by simply adding more commodity servers (nodes) to the cluster. SDS can provide block, file, and object storage services from the same platform. Understanding that SDS is an architectural approach, not a single product, and being able to identify its key characteristics are essential for tackling related questions on the DEA-1TT4 Exam.
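The control-plane/data-plane split and policy-based management can be illustrated with a deliberately small model. In the hypothetical Python sketch below, the "control plane" only decides where each replica of a new volume should go. It picks nodes whose media type matches the policy and that have enough free space. The nodes' data planes, which would actually store the data, are not modeled. Adding a node is the scale-out step. Every class, field and policy here is an assumption made for illustration, not any product's API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    name: str
    media: str     # e.g. "flash" or "hdd"
    free_gb: int


@dataclass
class Policy:
    replicas: int
    media: str


@dataclass
class ControlPlane:
    """Hypothetical SDS control plane: decides placement; nodes store the data."""
    nodes: List[Node] = field(default_factory=list)

    def add_node(self, node: Node) -> None:
        # Scale-out: adding a commodity node adds capacity to the cluster.
        self.nodes.append(node)

    def provision(self, size_gb: int, policy: Policy) -> List[str]:
        """Choose one node per replica, preferring nodes with the most free space."""
        candidates = sorted(
            (n for n in self.nodes if n.media == policy.media and n.free_gb >= size_gb),
            key=lambda n: n.free_gb,
            reverse=True,
        )
        if len(candidates) < policy.replicas:
            raise RuntimeError("not enough nodes satisfy the policy")
        chosen = candidates[:policy.replicas]
        for n in chosen:
            n.free_gb -= size_gb
        return [n.name for n in chosen]


cp = ControlPlane()
cp.add_node(Node("n1", "flash", 500))
cp.add_node(Node("n2", "flash", 300))
cp.add_node(Node("n3", "hdd", 2000))
print(cp.provision(100, Policy(replicas=2, media="flash")))  # ['n1', 'n2']
```

When a policy cannot be satisfied, the sketch raises an error instead of placing data. In this model, adding another matching node is what makes the request succeed.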

The Intersection of Cloud Computing and Storage

Cloud computing has fundamentally changed how IT services are consumed and delivered, and storage is at the heart of this transformation. The DEA-1TT4 Exam will test your understanding of basic cloud concepts and how they relate to storage. Cloud computing provides on-demand access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications) that can be rapidly provisioned with minimal management effort. The main service models are Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS).

From a storage perspective, cloud providers offer several types of services. Block storage services provide virtual disks (volumes) that can be attached to virtual machine instances, similar to a LUN in a SAN. This is used for operating systems and applications that require block-level access. File storage services offer a managed network file system, analogous to on-premises NAS, for sharing files among multiple virtual machines. Object storage is a highly scalable and cost-effective service designed for storing massive amounts of unstructured data, which we will discuss next.

Understanding the deployment models—public, private, and hybrid cloud—is also important. A public cloud is owned and operated by a third-party provider. A private cloud is infrastructure operated solely for a single organization. A hybrid cloud combines public and private clouds, allowing data and applications to be shared between them. The DEA-1TT4 Exam may present scenarios where you need to identify the appropriate cloud storage service or deployment model for a given business requirement, such as using the public cloud for disaster recovery or archival.
